Green light puts the stop on migraine

, according to results of a small study from the University of Arizona, Tucson.

“This is the first clinical study to evaluate green light exposure as a potential preventive therapy for patients with migraine,” senior author Mohab M. Ibrahim, MD, PhD, said in a press release. “Now I have another tool in my toolbox to treat one of the most difficult neurologic conditions – migraine.”

“Given the safety, affordability, and efficacy of green light exposure, there is merit to conduct a larger study,” he and coauthors from the university wrote in their paper.

The study included 29 adult patients (average age 52.2 years), 22 with chronic migraine and the rest with episodic migraine who were recruited from the University of Arizona/Banner Medical Center chronic pain clinic. To be included, patients had to meet the International Headache Society diagnostic criteria for chronic or episodic migraine, have an average headache pain intensity of 5 out of 10 or greater on the numeric pain scale (NPS) over the 10 weeks prior to enrolling in the study, and be dissatisfied with their current migraine therapy.

The patients were free to start, continue, or discontinue any other migraine treatments as recommended by their physicians as long as this was reported to the study team.

White versus green

The one-way crossover design involved exposure to 10 weeks of white light emitting diodes, for 1-2 hours per day, followed by a 2-week washout period and then 10 weeks’ exposure to green light emitting diodes (GLED) for the same daily duration. The protocol involved use of a light strip emitting an intensity of between 4 and 100 lux measured at approximately 2 m and 1 m from a lux meter.

Patients were instructed to use the light in a dark room, without falling asleep, and to participate in activities that did not require external light sources, such as listening to music, reading books, doing exercises, or engaging in similar activities. The daily minimum exposure of 1 hour, up to a maximum of 2 hours, was to be completed in one sitting.

The primary outcome measure was the number of headache days per month, defined as days with moderate to severe headache pain for at least 4 hours. Secondary outcomes included perceived reduction in duration and intensity of the headache phase of the migraine episodes assessed every 2 weeks with the NPS, improved ability to fall and stay asleep, improved ability to perform work and daily activity, improved quality of life, and reduction of pain medications.

The researchers found that when the patients with chronic migraine and episodic migraine were examined as separate groups, white light exposure did not significantly reduce the number of headache days per month, but when the chronic migraine and episodic migraine groups were combined there was a significant reduction from 18.2 to 16.5 headache days per month.

On the other hand, green light did result in significantly reduced headache days both in the separate (from 7.9 to 2.4 days in the episodic migraine group and 22.3 to 9.4 days in the chronic migraine group) and combined groups (from 18.4 to 7.4 days).
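To put those figures on a common scale, the short sketch below converts each reported before-and-after pair into a relative reduction. It is only an illustration based on the group means quoted above; the helper name `percent_reduction` and the dictionary labels are assumptions made for this example and do not come from the study.

```python
# Relative reduction in mean headache days per month, computed from the
# group means quoted in the article. Illustrative arithmetic only; the
# function and label names are hypothetical, not taken from the paper.

def percent_reduction(before: float, after: float) -> float:
    """Return the drop from `before` to `after` as a percentage of `before`."""
    return round((before - after) / before * 100, 1)

# (baseline mean, post-exposure mean) in headache days per month
reported_means = {
    "white light, combined groups": (18.2, 16.5),
    "green light, episodic migraine": (7.9, 2.4),
    "green light, chronic migraine": (22.3, 9.4),
    "green light, combined groups": (18.4, 7.4),
}

reductions = {
    label: percent_reduction(before, after)
    for label, (before, after) in reported_means.items()
}

for label, value in reductions.items():
    print(f"{label}: {value}% fewer headache days")
```

On these means, white light corresponds to a drop of roughly 9% in the combined group, while green light corresponds to drops of roughly 58% to 70%, depending on the group.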

“While some improvement in secondary outcomes was observed with white light emitting diodes, more secondary outcomes with significantly greater magnitude including assessments of quality of life, Short-Form McGill Pain Questionnaire, Headache Impact Test-6, and Five-level version of the EuroQol five-dimensional survey without reported side effects were observed with green light emitting diodes,” the authors reported.

“The use of a nonpharmacological therapy such as green light can be of tremendous help to a variety of patients that either do not want to be on medications or do not respond to them,” coauthor Amol M. Patwardhan, MD, PhD, said in the press release. “The beauty of this approach is the lack of associated side effects. If at all, it appears to improve sleep and other quality of life measures,” said Dr. Patwardhan, associate professor and vice chair of research in the University of Arizona’s department of anesthesiology.

Better than white light

Asked to comment on the findings, Alan M. Rapoport, MD, clinical professor of neurology at the University of California, Los Angeles, said research has shown for some time that exposure to green light has beneficial effects in migraine patients. This study, although small, does indicate that green light is more beneficial than is white light and reduces headache days and intensity. “I believe patients would be willing to spend 1-2 hours a day in green light to reduce and improve their migraine with few side effects. A larger randomized trial should be done,” he said.

The study was funded by support from the National Center for Complementary and Integrative Health (to Dr. Ibrahim), the Comprehensive Chronic Pain and Addiction Center–University of Arizona, and the University of Arizona CHiLLI initiative. Dr. Ibrahim and one coauthor have a patent pending through the University of Arizona for use of green light therapy for the management of chronic pain. Dr. Rapoport is a former president of the International Headache Society. He is an editor of Headache and CNS Drugs, and Editor-in-Chief of Neurology Reviews. He reviews for many peer-reviewed journals such as Cephalalgia, Neurology, New England Journal of Medicine, and Headache.

FROM CEPHALALGIA

Simple blood test plus AI may flag early-stage Alzheimer’s disease

, raising the prospect of early intervention when effective treatments become available.

In a study, investigators used six AI methodologies, including Deep Learning, to assess blood leukocyte epigenomic biomarkers. They found more than 150 genetic differences among study participants with Alzheimer’s disease in comparison with participants who did not have Alzheimer’s disease.

All of the AI platforms were effective in predicting Alzheimer’s disease. Deep Learning’s assessment of intragenic cytosine-phosphate-guanines (CpGs) had sensitivity and specificity rates of 97%.

“It’s almost as if the leukocytes have become a newspaper to tell us, ‘This is what is going on in the brain,’ ” lead author Ray Bahado-Singh, MD, chair of the department of obstetrics and gynecology, Oakland University, Auburn Hills, Mich., said in a news release.

The researchers noted that the findings, if replicated in future studies, may help in providing Alzheimer’s disease diagnoses “much earlier” in the disease process. “The holy grail is to identify patients in the preclinical stage so effective early interventions, including new medications, can be studied and ultimately used,” Dr. Bahado-Singh said.

“This certainly isn’t the final step in Alzheimer’s research, but I think this represents a significant change in direction,” he told attendees at a press briefing.

The findings were published online March 31 in PLOS ONE.

Silver tsunami

The investigators noted that Alzheimer’s disease is often diagnosed when the disease is in its later stages, after irreversible brain damage has occurred. “There is currently no cure for the disease, and the treatment is limited to drugs that attempt to treat symptoms and have little effect on the disease’s progression,” they noted.

Coinvestigator Khaled Imam, MD, director of geriatric medicine for Beaumont Health in Michigan, pointed out that although MRI and lumbar puncture can identify Alzheimer’s disease early on, the processes are expensive and/or invasive.

“Having biomarkers in the blood ... and being able to identify [Alzheimer’s disease] years before symptoms start, hopefully we’d be able to intervene early on in the process of the disease,” Dr. Imam said.

It is estimated that the number of Americans aged 85 and older will triple by 2050. This impending “silver tsunami,” which will come with a commensurate increase in Alzheimer’s disease cases, makes it even more important to be able to diagnose the disease early on, he noted.

The study included 24 individuals with late-onset Alzheimer’s disease (70.8% women; mean age, 83 years) and 24 individuals who were deemed to be “cognitively healthy” (66.7% women; mean age, 80 years). About 500 ng of genomic DNA was extracted from whole-blood samples from each participant.

The researchers used the Infinium MethylationEPIC BeadChip array, and the samples were then examined for markers of methylation that would “indicate the disease process has started,” they noted.

In addition to Deep Learning, the five other AI platforms were the Support Vector Machine, Generalized Linear Model, Prediction Analysis for Microarrays, Random Forest, and Linear Discriminant Analysis.

These platforms were used to assess leukocyte genome changes. To predict Alzheimer’s disease, the researchers also used Ingenuity Pathway Analysis.

Significant “chemical changes”

Results showed that the Alzheimer’s disease group had 152 significantly differentially methylated CpGs in 171 genes in comparison with the non-Alzheimer’s disease group (false discovery rate P value < .05).

As a whole, using intragenic and intergenic/extragenic CpGs, the AI platforms were effective in predicting who had Alzheimer’s disease (area under the curve [AUC], ≥ 0.93). Using intragenic markers, the AUC for Deep Learning was 0.99.

“We looked at close to a million different sites, and we saw some chemical changes that we know are associated with alteration or change in gene function,” Dr. Bahado-Singh said.

Altered genes that were found in the Alzheimer’s disease group included CR1L, CTSV, S1PR1, and LTB4R – all of which “have been previously linked with Alzheimer’s disease and dementia,” the researchers noted. They also found the methylated genes CTSV and PRMT5, both of which have been previously associated with cardiovascular disease.

“A significant strength of our study is the novelty, i.e. the use of blood leukocytes to accurately detect Alzheimer’s disease and also for interrogating the pathogenesis of Alzheimer’s disease,” the investigators wrote.

Dr. Bahado-Singh said that the test let them identify changes in cells in the blood, “giving us a comprehensive account not only of the fact that the brain is being affected by Alzheimer’s disease but it’s telling us what kinds of processes are going on in the brain.

“Normally you don’t have access to the brain. This gives us a simple blood test to get an ongoing reading of the course of events in the brain – and potentially tell us very early on before the onset of symptoms,” he added.

Cautiously optimistic

During the question-and-answer session following his presentation at the briefing, Dr. Bahado-Singh reiterated that the team is at a very early stage in the research and is not able to make clinical recommendations at this point. However, he added, “There was evidence that DNA methylation change could likely precede the onset of abnormalities in the cells that give rise to the disease.”

Coinvestigator Stewart Graham, PhD, director of Alzheimer’s research at Beaumont Health, added that although the initial study findings led to some excitement for the team, “we have to be very conservative with what we say.”

He noted that the findings need to be replicated in a more diverse population. Still, “we’re excited at the moment and looking forward to seeing what the future results hold,” Dr. Graham said.

Dr. Bahado-Singh said that if larger studies confirm the findings and the test is viable, it would make sense to use it as a screen for individuals older than 65. He noted that because of the aging of the population, “this subset of individuals will constitute a larger and larger fraction of the population globally.”

Still early days

Commenting on the findings, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, noted that the investigators used an “interesting” diagnostic process.

“It was a unique approach to looking at and trying to understand what might be some of the biological underpinnings and using these tools and technologies to determine if they’re able to differentiate individuals with Alzheimer’s disease” from those without Alzheimer’s disease, said Dr. Snyder, who was not involved with the research.

“Ultimately, we want to know who is at greater risk, who may have some of the changing biology at the earliest time point so that we can intervene to stop the progression of the disease,” she said.

She pointed out that a number of types of biomarker tests are currently under investigation, many of which are measuring different outcomes. “And that’s what we want to see going forward. We want to have as many tools in our toolbox that allow us to accurately diagnose at that earliest time point,” Dr. Snyder said.

“At this point, [the current study] is still pretty early, so it needs to be replicated and then expanded to larger groups to really understand what they may be seeing,” she added.

Dr. Bahado-Singh, Dr. Imam, Dr. Graham, and Dr. Snyder have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

, raising the prospect of early intervention when effective treatments become available.

In a study, investigators used six AI methodologies, including Deep Learning, to assess blood leukocyte epigenomic biomarkers. They found more than 150 genetic differences among study participants with Alzheimer’s disease in comparison with participants who did not have Alzheimer’s disease.

All of the AI platforms were effective in predicting Alzheimer’s disease. Deep Learning’s assessment of intragenic cytosine-phosphate-guanines (CpGs) had sensitivity and specificity rates of 97%.

“It’s almost as if the leukocytes have become a newspaper to tell us, ‘This is what is going on in the brain,’ “ lead author Ray Bahado-Singh, MD, chair of the department of obstetrics and gynecology, Oakland University, Auburn Hills, Mich., said in a news release.

The researchers noted that the findings, if replicated in future studies, may help in providing Alzheimer’s disease diagnoses “much earlier” in the disease process. “The holy grail is to identify patients in the preclinical stage so effective early interventions, including new medications, can be studied and ultimately used,” Dr. Bahado-Singh said.

“This certainly isn’t the final step in Alzheimer’s research, but I think this represents a significant change in direction,” he told attendees at a press briefing.

The findings were published online March 31 in PLOS ONE.

Silver tsunami

The investigators noted that Alzheimer’s disease is often diagnosed when the disease is in its later stages, after irreversible brain damage has occurred. “There is currently no cure for the disease, and the treatment is limited to drugs that attempt to treat symptoms and have little effect on the disease’s progression,” they noted.

Coinvestigator Khaled Imam, MD, director of geriatric medicine for Beaumont Health in Michigan, pointed out that although MRI and lumbar puncture can identify Alzheimer’s disease early on, the processes are expensive and/or invasive.

“Having biomarkers in the blood ... and being able to identify [Alzheimer’s disease] years before symptoms start, hopefully we’d be able to intervene early on in the process of the disease,” Dr. Imam said.

It is estimated that the number of Americans aged 85 and older will triple by 2050. This impending “silver tsunami,” which will come with a commensurate increase in Alzheimer’s disease cases, makes it even more important to be able to diagnose the disease early on, he noted.

The study included 24 individuals with late-onset Alzheimer’s disease (70.8% women; mean age, 83 years); 24 were deemed to be “cognitively healthy” (66.7% women; mean age, 80 years). About 500 ng of genomic DNA was extracted from whole-blood samples from each participant.

The researchers used the Infinium MethylationEPIC BeadChip array, and the samples were then examined for markers of methylation that would “indicate the disease process has started,” they noted.

In addition to Deep Learning, the five other AI platforms were the Support Vector Machine, Generalized Linear Model, Prediction Analysis for Microarrays, Random Forest, and Linear Discriminant Analysis.

These platforms were used to assess leukocyte genome changes. To predict Alzheimer’s disease, the researchers also used Ingenuity Pathway Analysis.

Significant “chemical changes”

Results showed that the Alzheimer’s disease group had 152 significantly differentially methylated CpGs in 171 genes in comparison with the non-Alzheimer’s disease group (false discovery rate P value < .05).

As a whole, using intragenic and intergenic/extragenic CpGs, the AI platforms were effective in predicting who had Alzheimer’s disease (area under the curve [AUC], ≥ 0.93). Using intragenic markers, the AUC for Deep Learning was 0.99.

“We looked at close to a million different sites, and we saw some chemical changes that we know are associated with alteration or change in gene function,” Dr. Bahado-Singh said.

Altered genes that were found in the Alzheimer’s disease group included CR1L, CTSV, S1PR1, and LTB4R – all of which “have been previously linked with Alzheimer’s disease and dementia,” the researchers noted. They also found the methylated genes CTSV and PRMT5, both of which have been previously associated with cardiovascular disease.

“A significant strength of our study is the novelty, i.e. the use of blood leukocytes to accurately detect Alzheimer’s disease and also for interrogating the pathogenesis of Alzheimer’s disease,” the investigators wrote.

Dr. Bahado-Singh said that the test let them identify changes in cells in the blood, “giving us a comprehensive account not only of the fact that the brain is being affected by Alzheimer’s disease but it’s telling us what kinds of processes are going on in the brain.

“Normally you don’t have access to the brain. This gives us a simple blood test to get an ongoing reading of the course of events in the brain – and potentially tell us very early on before the onset of symptoms,” he added.

Cautiously optimistic

During the question-and-answer session following his presentation at the briefing, Dr. Bahado-Singh reiterated that they are at a very early stage in the research and were not able to make clinical recommendations at this point. However, he added, “There was evidence that DNA methylation change could likely precede the onset of abnormalities in the cells that give rise to the disease.”

Coinvestigator Stewart Graham, PhD, director of Alzheimer’s research at Beaumont Health, added that although the initial study findings led to some excitement for the team, “we have to be very conservative with what we say.”

He noted that the findings need to be replicated in a more diverse population. Still, “we’re excited at the moment and looking forward to seeing what the future results hold,” Dr. Graham said.

Dr. Bahado-Singh said that if larger studies confirm the findings and the test is viable, it would make sense to use it as a screen for individuals older than 65. He noted that because of the aging of the population, “this subset of individuals will constitute a larger and larger fraction of the population globally.”

Still early days

Commenting on the findings, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, noted that the investigators used an “interesting” diagnostic process.

“It was a unique approach to looking at and trying to understand what might be some of the biological underpinnings and using these tools and technologies to determine if they’re able to differentiate individuals with Alzheimer’s disease” from those without Alzheimer’s disease, said Dr. Snyder, who was not involved with the research.

“Ultimately, we want to know who is at greater risk, who may have some of the changing biology at the earliest time point so that we can intervene to stop the progression of the disease,” she said.

She pointed out that a number of types of biomarker tests are currently under investigation, many of which are measuring different outcomes. “And that’s what we want to see going forward. We want to have as many tools in our toolbox that allow us to accurately diagnose at that earliest time point,” Dr. Snyder said.

“At this point, [the current study] is still pretty early, so it needs to be replicated and then expanded to larger groups to really understand what they may be seeing,” she added.

Dr. Bahado-Singh, Dr. Imam, Dr. Graham, and Dr. Snyder have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

, raising the prospect of early intervention when effective treatments become available.

In a study, investigators used six AI methodologies, including Deep Learning, to assess blood leukocyte epigenomic biomarkers. They found more than 150 genetic differences among study participants with Alzheimer’s disease in comparison with participants who did not have Alzheimer’s disease.

All of the AI platforms were effective in predicting Alzheimer’s disease. Deep Learning’s assessment of intragenic cytosine-phosphate-guanines (CpGs) had sensitivity and specificity rates of 97%.

“It’s almost as if the leukocytes have become a newspaper to tell us, ‘This is what is going on in the brain,’ “ lead author Ray Bahado-Singh, MD, chair of the department of obstetrics and gynecology, Oakland University, Auburn Hills, Mich., said in a news release.

The researchers noted that the findings, if replicated in future studies, may help in providing Alzheimer’s disease diagnoses “much earlier” in the disease process. “The holy grail is to identify patients in the preclinical stage so effective early interventions, including new medications, can be studied and ultimately used,” Dr. Bahado-Singh said.

“This certainly isn’t the final step in Alzheimer’s research, but I think this represents a significant change in direction,” he told attendees at a press briefing.

The findings were published online March 31 in PLOS ONE.

Silver tsunami

The investigators noted that Alzheimer’s disease is often diagnosed when the disease is in its later stages, after irreversible brain damage has occurred. “There is currently no cure for the disease, and the treatment is limited to drugs that attempt to treat symptoms and have little effect on the disease’s progression,” they noted.

Coinvestigator Khaled Imam, MD, director of geriatric medicine for Beaumont Health in Michigan, pointed out that although MRI and lumbar puncture can identify Alzheimer’s disease early on, the processes are expensive and/or invasive.

“Having biomarkers in the blood ... and being able to identify [Alzheimer’s disease] years before symptoms start, hopefully we’d be able to intervene early on in the process of the disease,” Dr. Imam said.

It is estimated that the number of Americans aged 85 and older will triple by 2050. This impending “silver tsunami,” which will come with a commensurate increase in Alzheimer’s disease cases, makes it even more important to be able to diagnose the disease early on, he noted.

The study included 24 individuals with late-onset Alzheimer’s disease (70.8% women; mean age, 83 years) and 24 individuals deemed to be “cognitively healthy” (66.7% women; mean age, 80 years). About 500 ng of genomic DNA was extracted from whole-blood samples from each participant.

The researchers used the Infinium MethylationEPIC BeadChip array, and the samples were then examined for markers of methylation that would “indicate the disease process has started,” they noted.

In addition to Deep Learning, the five other AI platforms were the Support Vector Machine, Generalized Linear Model, Prediction Analysis for Microarrays, Random Forest, and Linear Discriminant Analysis.

These platforms were used to assess leukocyte genome changes. To predict Alzheimer’s disease, the researchers also used Ingenuity Pathway Analysis.

Significant “chemical changes”

Results showed that the Alzheimer’s disease group had 152 significantly differentially methylated CpGs in 171 genes in comparison with the non-Alzheimer’s disease group (false discovery rate P value < .05).

As a whole, using intragenic and intergenic/extragenic CpGs, the AI platforms were effective in predicting who had Alzheimer’s disease (area under the curve [AUC], ≥ 0.93). Using intragenic markers, the AUC for Deep Learning was 0.99.
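For readers less familiar with the metrics reported for these classifiers, sensitivity is the share of people with the disease whom a test correctly flags, and specificity is the share of people without it whom the test correctly clears. The Python sketch below illustrates both definitions. The counts are hypothetical, chosen only to match the study's group sizes of 24 and 24; they are not the study's data and do not reproduce its reported figures.

```python
def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # share of true cases correctly flagged
    specificity = tn / (tn + fp)  # share of non-cases correctly cleared
    return sensitivity, specificity


# Hypothetical counts for a 24-case, 24-control sample; NOT the study's data.
sens, spec = sensitivity_specificity(tp=23, fn=1, tn=23, fp=1)
print(f"sensitivity={sens:.3f}, specificity={spec:.3f}")
```

With groups this small, a single misclassified participant shifts either metric by about 4 percentage points, which is one reason replication in larger groups matters.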

“We looked at close to a million different sites, and we saw some chemical changes that we know are associated with alteration or change in gene function,” Dr. Bahado-Singh said.

Altered genes that were found in the Alzheimer’s disease group included CR1L, CTSV, S1PR1, and LTB4R – all of which “have been previously linked with Alzheimer’s disease and dementia,” the researchers noted. They also found the methylated genes CTSV and PRMT5, both of which have been previously associated with cardiovascular disease.

“A significant strength of our study is the novelty, i.e. the use of blood leukocytes to accurately detect Alzheimer’s disease and also for interrogating the pathogenesis of Alzheimer’s disease,” the investigators wrote.

Dr. Bahado-Singh said that the test let them identify changes in cells in the blood, “giving us a comprehensive account not only of the fact that the brain is being affected by Alzheimer’s disease but it’s telling us what kinds of processes are going on in the brain.

“Normally you don’t have access to the brain. This gives us a simple blood test to get an ongoing reading of the course of events in the brain – and potentially tell us very early on before the onset of symptoms,” he added.

Cautiously optimistic

During the question-and-answer session following his presentation at the briefing, Dr. Bahado-Singh reiterated that they are at a very early stage in the research and were not able to make clinical recommendations at this point. However, he added, “There was evidence that DNA methylation change could likely precede the onset of abnormalities in the cells that give rise to the disease.”

Coinvestigator Stewart Graham, PhD, director of Alzheimer’s research at Beaumont Health, added that although the initial study findings led to some excitement for the team, “we have to be very conservative with what we say.”

He noted that the findings need to be replicated in a more diverse population. Still, “we’re excited at the moment and looking forward to seeing what the future results hold,” Dr. Graham said.

Dr. Bahado-Singh said that if larger studies confirm the findings and the test is viable, it would make sense to use it as a screen for individuals older than 65. He noted that because of the aging of the population, “this subset of individuals will constitute a larger and larger fraction of the population globally.”

Still early days

Commenting on the findings, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, noted that the investigators used an “interesting” diagnostic process.

FROM PLOS ONE

Encephalopathy common, often lethal in hospitalized patients with COVID-19

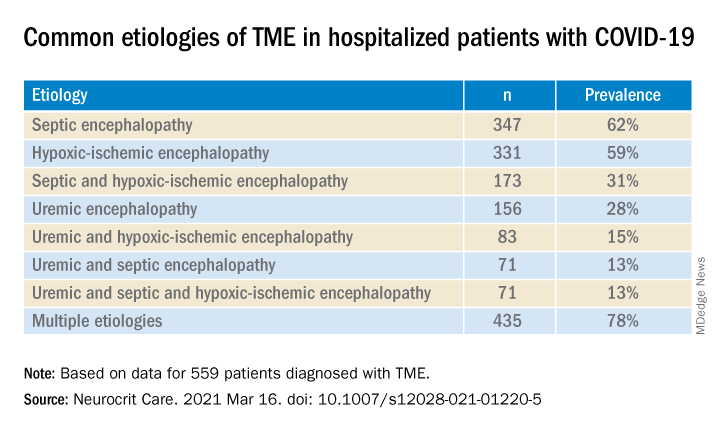

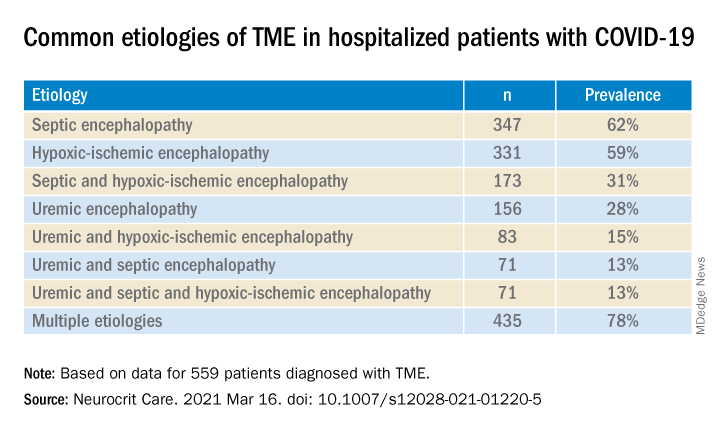

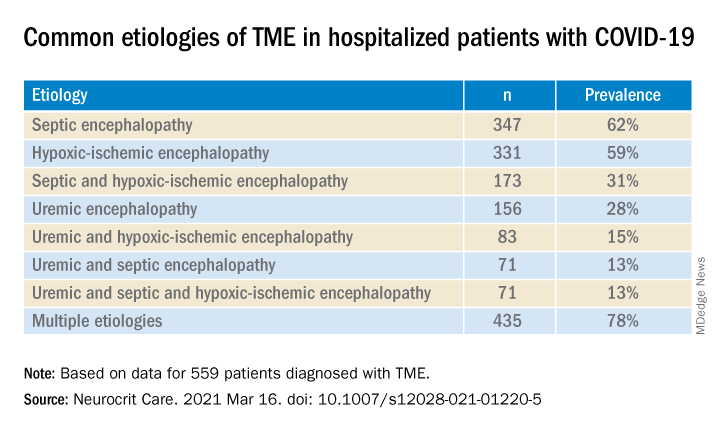

Toxic metabolic encephalopathy (TME) is common and often lethal in hospitalized patients with COVID-19, new research shows. Results of a retrospective study show that of almost 4,500 patients with COVID-19, 12% were diagnosed with TME. Of these, 78% developed encephalopathy immediately prior to hospital admission. Septic encephalopathy, hypoxic-ischemic encephalopathy (HIE), and uremia were the most common causes, although multiple causes were present in close to 80% of patients. TME was also associated with a 24% higher risk of in-hospital death.

“We found that close to one in eight patients who were hospitalized with COVID-19 had TME that was not attributed to the effects of sedatives, and that this is incredibly common among these patients who are critically ill,” said lead author Jennifer A. Frontera, MD, New York University.

“The general principle of our findings is to be more aggressive in TME; and from a neurologist perspective, the way to do this is to eliminate the effects of sedation, which is a confounder,” she said.

The study was published online March 16 in Neurocritical Care.

Drilling down

“Many neurological complications of COVID-19 are sequelae of severe illness or secondary effects of multisystem organ failure, but our previous work identified TME as the most common neurological complication,” Dr. Frontera said.

Previous research investigating encephalopathy among patients with COVID-19 included patients who may have been sedated or have had a positive Confusion Assessment Method (CAM) result.

“A lot of the delirium literature is effectively heterogeneous because there are a number of patients who are on sedative medication that, if you could turn it off, these patients would return to normal. Some may have underlying neurological issues that can be addressed, but you can’t get to the bottom of this unless you turn off the sedation,” Dr. Frontera noted.

“We wanted to be specific and try to drill down to see what the underlying cause of the encephalopathy was,” she said.

The researchers retrospectively analyzed data on 4,491 patients (≥ 18 years old) with COVID-19 who were admitted to four New York City hospitals between March 1, 2020, and May 20, 2020. Of these, 559 (12%) with TME were compared with 3,932 patients without TME.
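The cohort figures above can be checked with a few lines of arithmetic. The Python sketch below uses only the counts reported in this article (the variable names are illustrative) and compares the TME share with the "close to one in eight" description.

```python
# Cohort counts as reported in the article.
total_patients = 4491
with_tme = 559
without_tme = 3932

# The two groups should partition the full cohort.
assert with_tme + without_tme == total_patients

# Share of hospitalized COVID-19 patients diagnosed with TME.
tme_share = with_tme / total_patients
one_in_eight = 1 / 8

print(f"TME share: {tme_share:.1%}")        # 12.4%
print(f"One in eight: {one_in_eight:.1%}")  # 12.5%
```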

The researchers looked at index admissions and included patients who had:

- New changes in mental status or significant worsening of mental status (in patients with baseline abnormal mental status).

- Hyperglycemia or hypoglycemia with transient focal neurologic deficits that resolved with glucose correction.

- An adequate washout of sedating medications (when relevant) prior to mental status assessment.

Potential etiologies included electrolyte abnormalities, organ failure, hypertensive encephalopathy, sepsis or active infection, fever, nutritional deficiency, and environmental injury.

Foreign environment

Most (78%) of the 559 patients diagnosed with TME had already developed encephalopathy immediately prior to hospital admission, the authors report. The most common etiologies of TME among hospitalized patients with COVID-19 were septic encephalopathy, HIE, and uremia.

Compared with patients without TME, those with TME (P < .001 for all comparisons):

- Were older (76 vs. 62 years).

- Had higher rates of dementia (27% vs. 3%).

- Had higher rates of psychiatric history (20% vs. 10%).

- Were more often intubated (37% vs. 20%).

- Had a longer length of hospital stay (7.9 vs. 6.0 days).

- Were less often discharged home (25% vs. 66%).

“It’s no surprise that older patients and people with dementia or psychiatric illness are predisposed to becoming encephalopathic,” said Dr. Frontera. “Being in a foreign environment, such as a hospital, or being sleep-deprived in the ICU is likely to make them more confused during their hospital stay.”

Delirium as a symptom

In-hospital mortality or discharge to hospice was considerably higher in the TME versus non-TME patients (44% vs. 18%, respectively).

When the researchers adjusted for confounders (age, sex, race, worst Sequential Organ Failure Assessment score during hospitalization, ventilator status, study week, hospital location, and ICU care level) and excluded patients receiving only comfort care, they found that TME was associated with a 24% increased risk of in-hospital death (30% in patients with TME vs. 16% in those without TME).
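The gap between the crude death rates and the adjusted estimate is easier to see side by side. The Python sketch below computes the unadjusted risk ratio and risk difference from the two percentages reported above. Treating the "24% increased risk" as an adjusted ratio of about 1.24 is an assumption made here for illustration; the article does not state the estimate in that form.

```python
# Crude in-hospital death rates reported after excluding comfort-care-only patients.
death_rate_tme = 0.30
death_rate_no_tme = 0.16

# Unadjusted comparisons between the two groups.
crude_risk_ratio = death_rate_tme / death_rate_no_tme
crude_risk_difference = death_rate_tme - death_rate_no_tme

# Assumption: the "24% increased risk" corresponds to an adjusted ratio of about 1.24.
assumed_adjusted_ratio = 1.24

print(f"crude risk ratio: {crude_risk_ratio:.3f}")
print(f"crude risk difference: {crude_risk_difference:.0%}")
print(f"assumed adjusted ratio: {assumed_adjusted_ratio:.2f}")
```

The crude ratio is well above the adjusted figure, which is what one would expect if part of the raw difference reflects the TME group being older and sicker rather than TME itself.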

The highest mortality risk was associated with hypoxemia, with 42% of patients with HIE dying during hospitalization, compared with 16% of patients without HIE (adjusted hazard ratio 1.56; 95% confidence interval, 1.21-2.00; P = .001).

“Not all patients who are intubated require sedation, but there’s generally a lot of hesitation in reducing or stopping sedation in some patients,” Dr. Frontera observed.

She acknowledged there are “many extremely sick patients whom you can’t ventilate without sedation.”

Nevertheless, “delirium in and of itself does not cause death. It’s a symptom, not a disease, and we have to figure out what causes it. Delirium might not need to be sedated, and it’s more important to see what the causal problem is.”

Independent predictor of death

Commenting on the study, Panayiotis N. Varelas, MD, PhD, vice president of the Neurocritical Care Society, said the study “approached the TME issue better than previously, namely allowing time for sedatives to wear off to have a better sample of patients with this syndrome.”

Dr. Varelas, who is chairman of the department of neurology and professor of neurology at Albany (N.Y.) Medical College, emphasized that TME “is not benign and, in patients with COVID-19, it is an independent predictor of in-hospital mortality.”

“One should take all possible measures … to avoid desaturation and hypotensive episodes and also aggressively treat SAE [sepsis-associated encephalopathy] and uremic encephalopathy in hopes of improving the outcomes,” added Dr. Varelas, who was not involved with the study.

Also commenting on the study, Mitchell Elkind, MD, professor of neurology and epidemiology at Columbia University in New York, who was not associated with the research, said it “nicely distinguishes among the different causes of encephalopathy, including sepsis, hypoxia, and kidney failure … emphasizing just how sick these patients are.”

The study received no direct funding. Individual investigators were supported by grants from the National Institute on Aging and the National Institute of Neurological Disorders and Stroke. The investigators, Dr. Varelas, and Dr. Elkind have disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM NEUROCRITICAL CARE

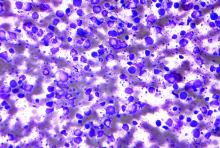

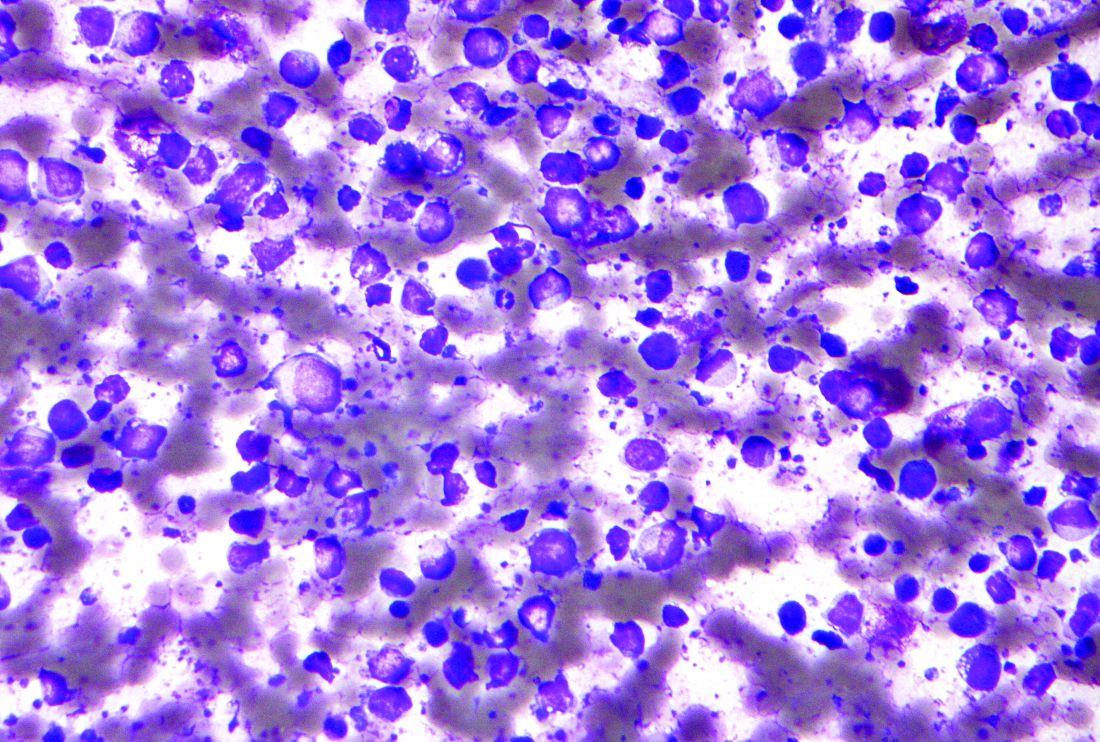

Poor survival with COVID in patients who have had HSCT

Among individuals who have received a hematopoietic stem cell transplant (HSCT), often used in the treatment of blood cancers, rates of survival are poor for those who develop COVID-19.

The probability of survival 30 days after being diagnosed with COVID-19 is only 68% for persons who have received an allogeneic HSCT and 67% for autologous HSCT recipients, according to new data from the Center for International Blood and Marrow Transplant Research.

These findings underscore the need for “stringent surveillance and aggressive treatment measures” in this population, Akshay Sharma, MBBS, of St. Jude Children’s Research Hospital, Memphis, and colleagues wrote.

The findings were published online March 1, 2021, in The Lancet Haematology.

The study is “of importance for physicians caring for HSCT recipients worldwide,” Mathieu Leclerc, MD, and Sébastien Maury, MD, Hôpital Henri Mondor, Créteil, France, commented in an accompanying editorial.

Study details

For their study, Dr. Sharma and colleagues analyzed outcomes for all HSCT recipients who developed COVID-19 and whose cases were reported to the CIBMTR. Of 318 such patients, 184 had undergone allogeneic HSCT, and 134 had undergone autologous HSCT.

Overall, about half of these patients (49%) had mild COVID-19.

Severe COVID-19 that required mechanical ventilation developed in 15% and 13% of the allogeneic and autologous HSCT recipients, respectively.

About one-fifth of patients died: 22% and 19% of allogeneic and autologous HSCT recipients, respectively.
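As a rough consistency check on the "about one-fifth" figure, the two group-level percentages can be pooled by group size. The Python sketch below uses only the numbers reported in this article. Because the percentages are rounded, the pooled value is approximate, and the variable names are illustrative.

```python
# Group sizes and rounded death percentages as reported in the article.
allogeneic_n, autologous_n = 184, 134
allogeneic_death_rate, autologous_death_rate = 0.22, 0.19

total_n = allogeneic_n + autologous_n  # 318 patients overall

# Weight each group's rate by its size to approximate the pooled rate.
pooled_death_rate = (
    allogeneic_n * allogeneic_death_rate + autologous_n * autologous_death_rate
) / total_n

print(f"pooled death rate: {pooled_death_rate:.1%}")
```

The pooled rate comes out at roughly 21%, in line with the article's description.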

Factors associated with greater mortality risk included age of 50 years or older (hazard ratio, 2.53), male sex (HR, 3.53), and development of COVID-19 within 12 months of undergoing HSCT (HR, 2.67).

Among autologous HSCT recipients, lymphoma was associated with higher mortality risk in comparison with a plasma cell disorder or myeloma (HR, 2.41), the authors noted.

“Two important messages can be drawn from the results reported by Sharma and colleagues,” Dr. Leclerc and Dr. Maury wrote in their editorial. “The first is the confirmation that the prognosis of COVID-19 is particularly poor in HSCT recipients, and that its prevention, in the absence of any specific curative treatment with sufficient efficacy, should be at the forefront of concerns.”

The second relates to the risk factors for death among HSCT recipients who develop COVID-19. In addition to previously known risk factors, such as age and gender, the investigators identified transplant-specific factors potentially associated with prognosis – namely, the nearly threefold increase in death among allogeneic HSCT recipients who develop COVID-19 within 12 months of transplant, they explained.

However, the findings are limited by a substantial amount of missing data, short follow-up, and the possibility of selection bias, they noted.

“Further large and well-designed studies with longer follow-up are needed to confirm and refine the results,” the editorialists wrote.

“[A] better understanding of the distinctive features of COVID-19 infection in HSCT recipients will be a necessary and essential step toward improvement of the remarkably poor prognosis observed in this setting,” they added.

The study was funded by the American Society of Hematology; the Leukemia and Lymphoma Society; the National Cancer Institute; the National Heart, Lung and Blood Institute; the National Institute of Allergy and Infectious Diseases; the National Institutes of Health; the Health Resources and Services Administration; and the Office of Naval Research. Dr. Sharma receives support for the conduct of industry-sponsored trials from Vertex Pharmaceuticals, CRISPR Therapeutics, and Novartis and consulting fees from Spotlight Therapeutics. Dr. Leclerc and Dr. Maury disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.


A version of this article first appeared on Medscape.com.

Omidubicel improves on umbilical cord blood transplants

Omidubicel, an investigational enriched umbilical cord blood product being developed by Gamida Cell for transplantation in patients with blood cancers, appears to have some advantages over standard umbilical cord blood.

The results come from a global phase 3 trial (NCT02730299) presented at the annual meeting of the European Society for Blood and Marrow Transplantation.

“Transplantation with omidubicel, compared to standard cord blood transplantation, results in faster hematopoietic recovery, fewer infections, and fewer days in hospital,” said coinvestigator Guillermo F. Sanz, MD, PhD, from the Hospital Universitari i Politècnic la Fe in Valencia, Spain.

“Omidubicel should be considered as the new standard of care for patients eligible for umbilical cord blood transplantation,” Dr. Sanz concluded.

Zachariah DeFilipp, MD, from Mass General Cancer Center in Boston, a hematopoietic stem cell transplantation specialist who was not involved in the study, said in an interview that “omidubicel significantly improves the engraftment after transplant, as compared to standard cord blood transplant. For patients that lack an HLA-matched donor, this approach can help overcome the prolonged cytopenias that occur with standard cord blood transplants in adults.”

Gamida Cell plans to submit these data to the Food and Drug Administration in the fourth quarter of 2021 in support of approval of omidubicel.

Omidubicel is also being evaluated in a phase 1/2 clinical study in patients with severe aplastic anemia (NCT03173937).

Expanding possibilities

Although umbilical cord blood stem cell grafts come from a readily available source and show greater tolerance across HLA barriers than other sources (such as bone marrow), the relatively low dose of stem cells in each unit results in delayed hematopoietic recovery, increased transplant-related morbidity and mortality, and longer hospitalizations, Dr. Sanz said.

Omidubicel consists of two cryopreserved fractions from a single cord blood unit. The product contains both noncultured CD133-negative cells, including T cells, and CD133-positive cells that are then expanded ex vivo for 21 days in the presence of nicotinamide.

“Nicotinamide increases stem and progenitor cells, inhibits differentiation and increases migration, bone marrow homing, and engraftment efficiency while preserving cellular functionality and phenotype,” Dr. Sanz explained during his presentation.

In an earlier phase 1/2 trial in 36 patients with high-risk hematologic malignancies, omidubicel was associated with hematopoietic engraftment lasting at least 10 years.

Details of phase 3 trial results

The global phase 3 trial was conducted in 125 patients (aged 13-65 years) with high-risk malignancies, including acute myeloid and lymphoblastic leukemias, myelodysplastic syndrome, chronic myeloid leukemia, lymphomas, and rare leukemias. These patients were all eligible for allogeneic stem cell transplantation but did not have matched donors.

Patients were randomly assigned to receive hematopoietic reconstitution with either omidubicel (n = 52) or standard cord blood (n = 58).

At 42 days of follow-up, the median time to neutrophil engraftment in the intention-to-treat (ITT) population, the primary endpoint, was 12 days with omidubicel versus 22 days with standard cord blood (P < .001).

In the as-treated population – the 108 patients who actually received omidubicel or standard cord blood – median time to engraftment was 10.0 versus 20.5 days, respectively (P < .001).

Rates of neutrophil engraftment at 42 days were 96% with omidubicel versus 89% with standard cord blood.

The secondary endpoint of time-to-platelet engraftment in the ITT population also favored omidubicel, with a cumulative day 42 incidence rate of 55%, compared with 35% with standard cord blood (P = .028).

In the as-treated population, median times to platelet engraftment were 37 days and 50 days, respectively (P = .023). The cumulative rates of platelet engraftment at 100 days of follow-up were 83% and 73%, respectively.

The incidence of grade 2 or 3 bacterial or invasive fungal infections by day 100 in the ITT population was 37% among patients who received omidubicel, compared with 57% for patients who received standard cord blood (P = .027). Viral infections occurred in 10% versus 26% of patients, respectively.

The incidence of acute graft versus host disease at day 100 was similar between treatment groups, and there was no significant difference at 1 year.

Relapse and nonrelapse mortality rates, as well as disease-free and overall survival rates, also did not differ between groups.

In the first 100 days post transplant, patients who received omidubicel were alive and out of the hospital for a median of 60.5 days, compared with 48 days for patients who received standard cord blood (P = .005).

The study was funded by Gamida Cell. Dr. Sanz reported receiving research funding from the company and several others, and consulting fees, honoraria, speakers bureau activity, and travel expenses from other companies. Dr. DeFilipp reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.
