Structured Peer Observation of Teaching

Hospitalists are increasingly responsible for educating students and housestaff in internal medicine.[1] Because the quality of teaching is an important factor in learning,[2, 3, 4] leaders in medical education have expressed concern over the rapid shift of teaching responsibilities to this new group of educators.[5, 6, 7, 8] Moreover, recent changes in duty hour restrictions have strained both student and resident education,[9, 10] necessitating the optimization of inpatient teaching.[11, 12] Many hospitalists have recently finished residency and have not had formal training in clinical teaching. Collectively, most hospital medicine groups are early in their careers, have significant clinical obligations,[13] and may not have the bandwidth or expertise to provide faculty development for improving clinical teaching.

Rationally designed and theoretically sound faculty development to improve inpatient clinical teaching is required to meet this challenge. Few reports describe faculty development focused on strengthening the teaching of hospitalists; only 3 used direct observation and feedback, and only 1 of those involved peer observation in the clinical setting.[14, 15, 16] That 2011 report described a narrative method of peer observation and feedback but did not assess the efficacy of the program.[16] To our knowledge, no study of structured peer observation and feedback for hospitalist attendings' teaching has evaluated the efficacy of the intervention.

We developed a faculty development program built on peer observation of actual teaching practices, with structured feedback anchored in validated and observable measures of effective teaching. We hypothesized that participation in the program would increase confidence in key teaching skills, increase confidence in the ability to give and receive peer feedback, and strengthen attitudes toward peer observation and feedback.

METHODS

Subjects and Setting

The study was conducted at a 570‐bed academic, tertiary care medical center affiliated with an internal medicine residency program of 180 housestaff. Internal medicine ward attendings rotate during 2‐week blocks, and are asked to give formal teaching rounds 3 or 4 times a week (these sessions are distinct from teaching which may happen while rounding on patients). Ward teams are composed of 1 senior resident, 2 interns, and 1 to 2 medical students. The majority of internal medicine ward attendings are hospitalist faculty, hospital medicine fellows, or medicine chief residents. Because outpatient general internists and subspecialists only occasionally attend on the wards, we refer to ward attendings as attending hospitalists in this article. All attending hospitalists were eligible to participate if they attended on the wards at least twice during the academic year. The institutional review board at the University of California, San Francisco approved this study.

Theoretical Framework

We reviewed the literature to optimize our program in 3 conceptual domains: (1) overall structure of the program, (2) definition of effective teaching, and (3) effective delivery of feedback.

Over‐reliance on didactics that are disconnected from the work environment is a weakness of traditional faculty development. Individuals may attempt to apply what they have learned, but receiving feedback on their actual workplace practices may be difficult. A recent perspective responds to this fragmentation by conceptualizing faculty development as embedded in both a faculty development community and a workplace community. This model emphasizes translating what faculty have learned in the classroom into practice, and highlights the importance of coaching in the workplace.[17] In accordance with this framework, we designed our program to reach beyond isolated workshops to effectively penetrate the workplace community.

We selected the Stanford Faculty Development Program (SFDP) framework for optimal clinical teaching as our model for recognizing and improving teaching skills. The SFDP was developed as a theory‐based intensive feedback method to improve teaching skills,[18, 19] and has been shown to improve teaching in the ambulatory[20] and inpatient settings.[21, 22] In this widely disseminated framework,[23, 24] excellent clinical teaching is grounded in optimizing observable behaviors organized around 7 domains.[18] A 26‐item instrument to evaluate clinical teaching (SFDP‐26) has been developed based on this framework[25] and has been validated in multiple settings.[26, 27] High‐quality teaching, as defined by the SFDP framework, has been correlated with improved educational outcomes in internal medicine clerkship students.[4]

Feedback is crucial to optimizing teaching,[28, 29, 30] particularly when it incorporates consultation[31] and narrative comments.[32] Peer feedback has several advantages over feedback from learners or from other non‐peer observers (such as supervisors or other evaluators). First, the observers benefit by gaining insight into their own weaknesses and potential areas for growth as teachers.[33, 34] Additionally, collegial observation and feedback may promote supportive teaching relationships between faculty.[35] Furthermore, peer review overcomes the biases that may be present in learner evaluations.[36] We established a 3‐stage feedback technique based on a previously described method.[37] In the first step, the observer elicits self‐appraisal from the speaker. Next, the observer provides specific, behaviorally anchored feedback in the form of 3 reinforcing comments and 2 constructive comments. Finally, the observer elicits a reflection on the feedback and helps develop a plan to improve teaching in future opportunities. We used a dyad model (paired participants repeatedly observe and give feedback to each other) to support mutual benefit and reciprocity between attendings.

Intervention

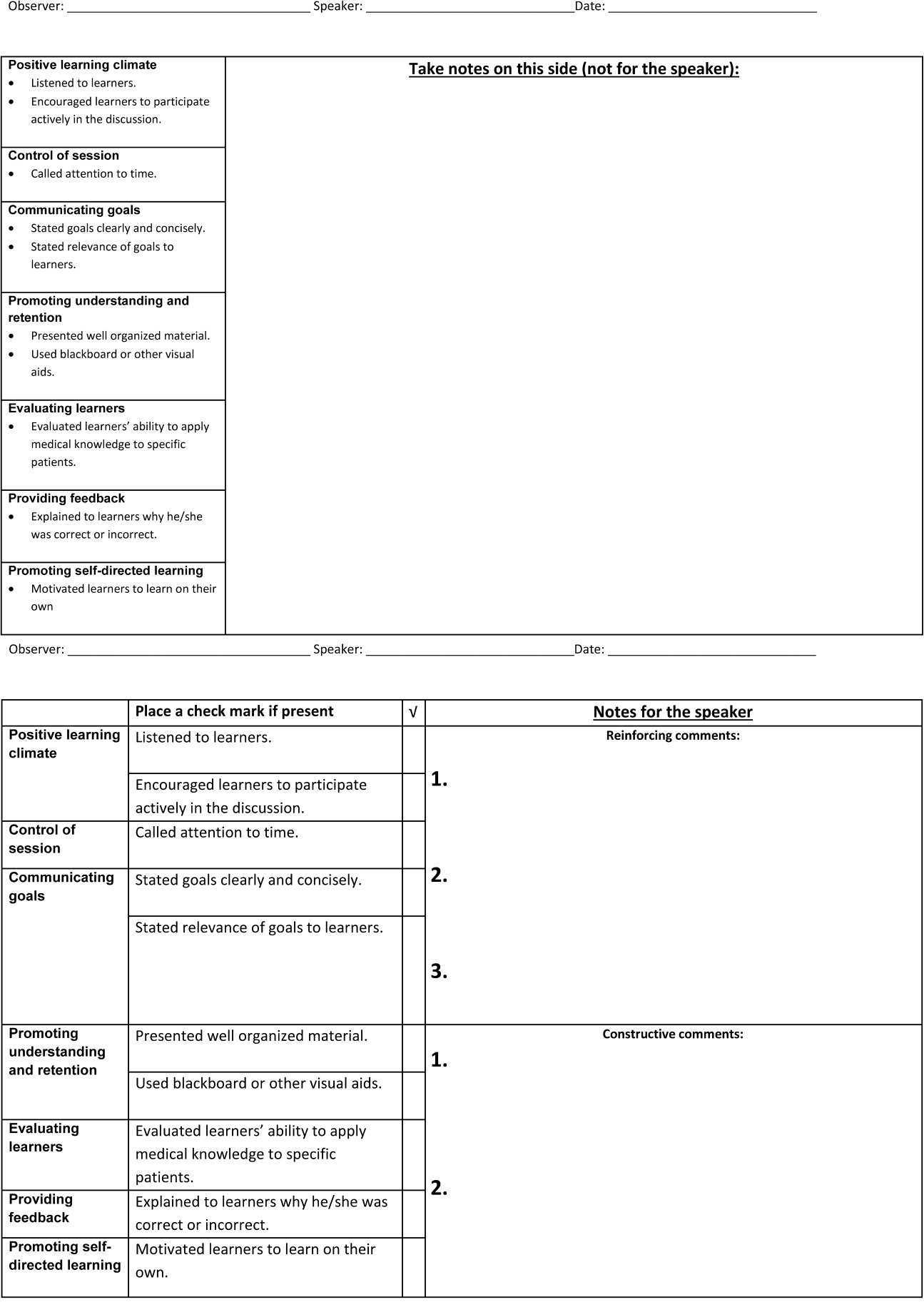

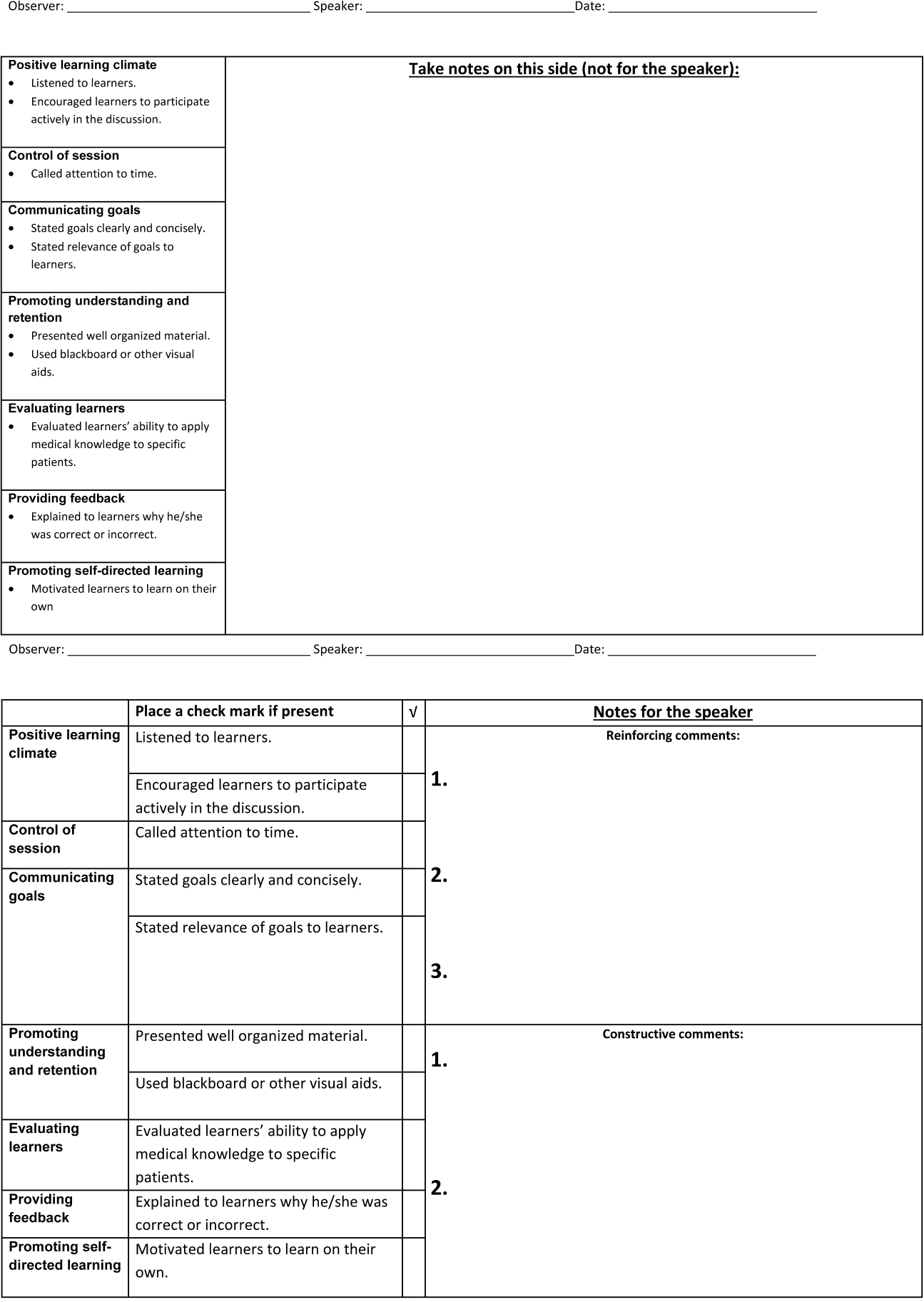

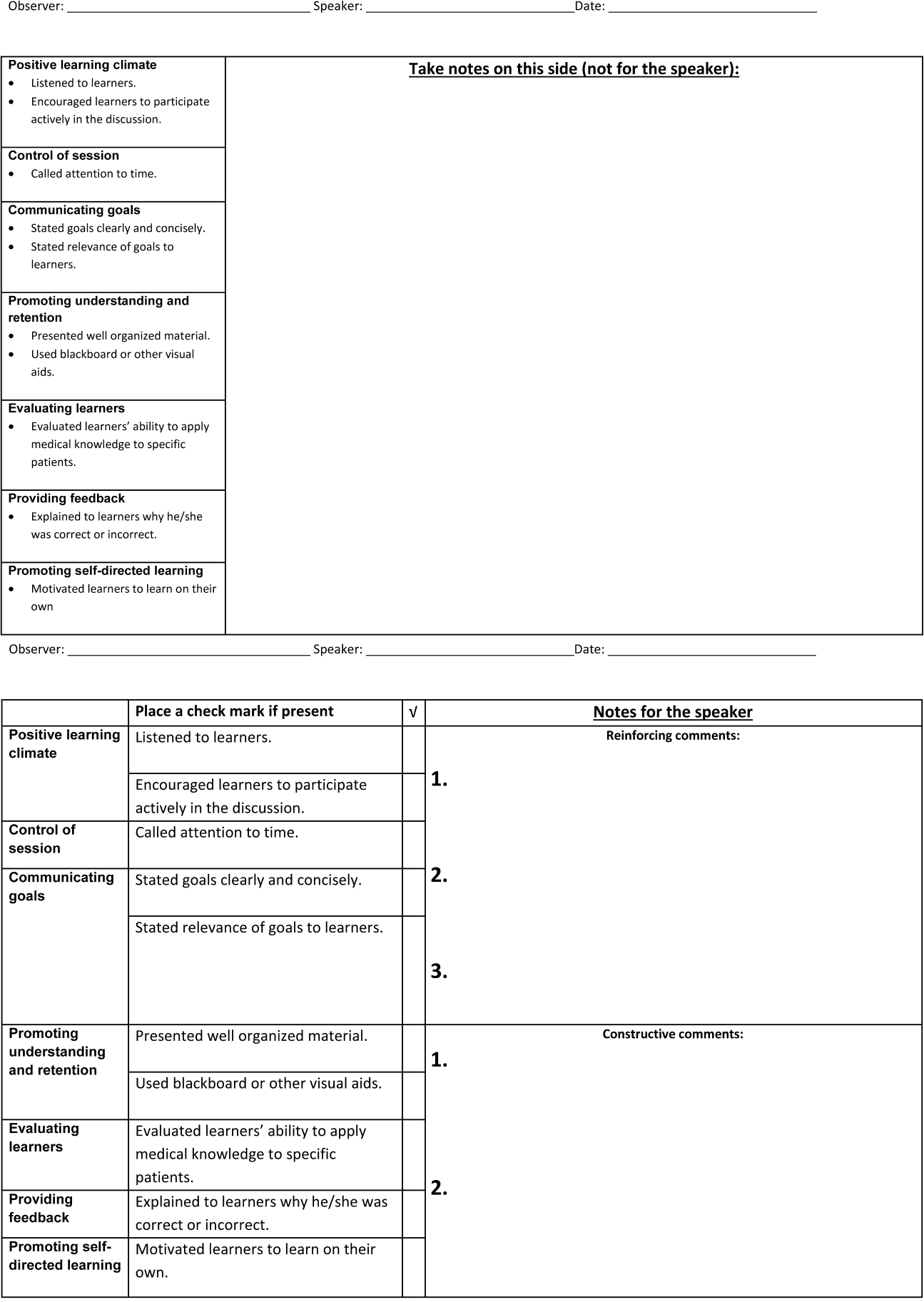

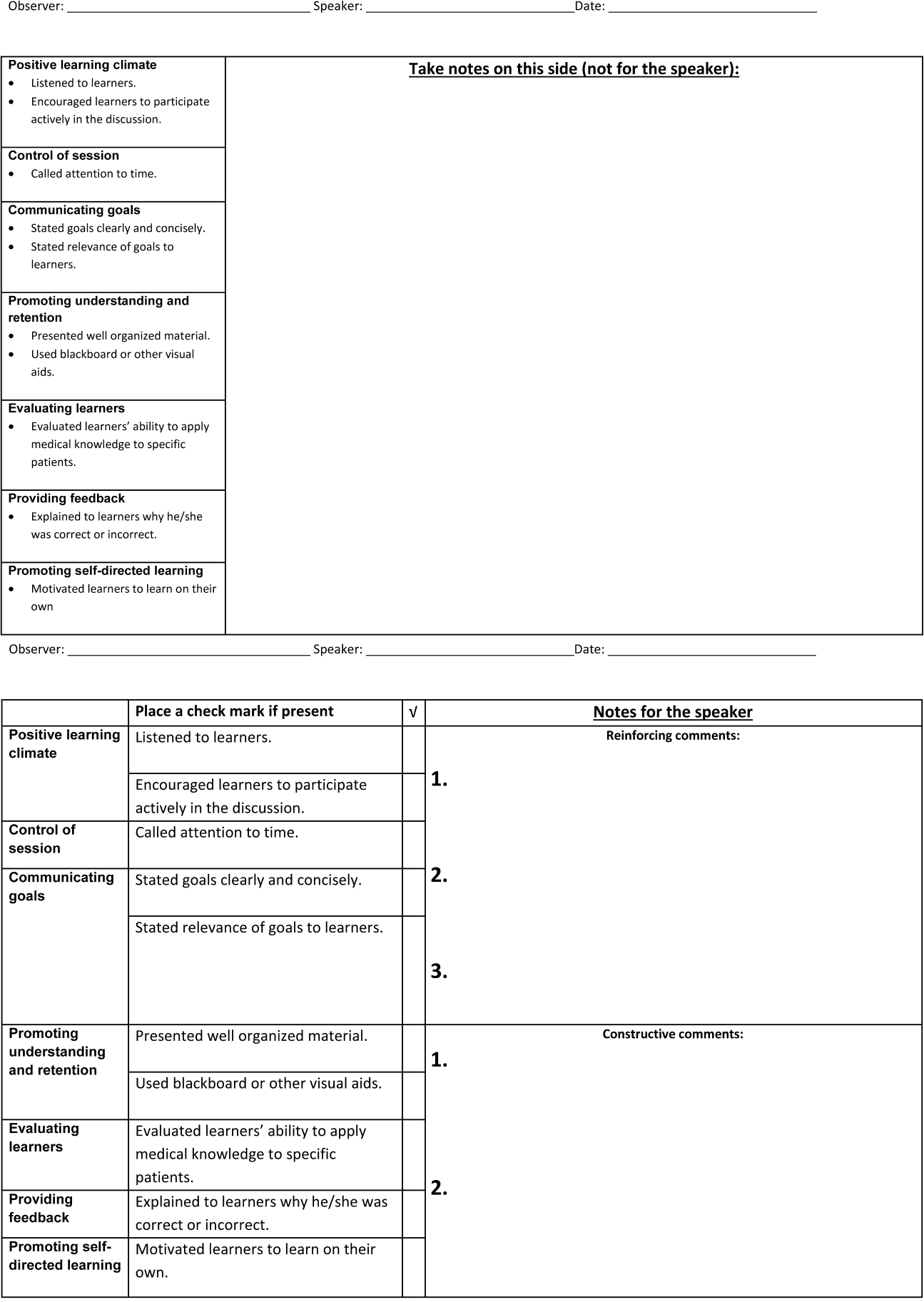

Using a modified Delphi approach, 5 medical education experts selected the 10 items that are most easily observable and salient to formal attending teaching rounds from the SFDP‐26 teaching assessment tool. A structured observation form was created, which included a checklist of the 10 selected items, space for note taking, and a template for narrative feedback (Figure 1).
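As an illustration only, the structured observation form could be represented in software roughly as follows. This Python sketch is not part of the study materials: the class name `ObservationForm`, its fields, and its methods are hypothetical. The 10 behavior labels are taken from Table 2, and the requirement of 3 reinforcing and 2 constructive comments follows the feedback technique described under Theoretical Framework.

```python
from dataclasses import dataclass, field

# The 10 selected behaviors as listed in Table 2, paired with their SFDP category.
SELECTED_BEHAVIORS = [
    ("Establishing a positive learning climate", "Listen to learners"),
    ("Establishing a positive learning climate",
     "Encourage learners to participate actively in the discussion"),
    ("Controlling the teaching session", "Call attention to time"),
    ("Communicating goals", "State goals clearly and concisely"),
    ("Communicating goals", "State relevance of goals to learners"),
    ("Promoting understanding and retention", "Present well-organized material"),
    ("Promoting understanding and retention", "Use blackboard or other visual aids"),
    ("Evaluating the learners",
     "Evaluate learners' ability to apply medical knowledge to specific patients"),
    ("Providing feedback to the learners",
     "Explain to learners why he/she was correct or incorrect"),
    ("Promoting self-directed learning", "Motivate learners to learn on their own"),
]


@dataclass
class ObservationForm:
    """Hypothetical in-memory stand-in for the paper observation form."""

    # Checklist: one flag per selected behavior, in SELECTED_BEHAVIORS order.
    observed: list = field(
        default_factory=lambda: [False] * len(SELECTED_BEHAVIORS)
    )
    # Free-text space for the observer's notes.
    notes: str = ""
    # Narrative feedback template.
    reinforcing: list = field(default_factory=list)
    constructive: list = field(default_factory=list)

    def mark(self, index: int) -> None:
        """Record that the behavior at this checklist position was seen."""
        self.observed[index] = True

    def unobserved_behaviors(self) -> list:
        """Return the labels of behaviors not yet checked off."""
        return [
            label
            for (_, label), seen in zip(SELECTED_BEHAVIORS, self.observed)
            if not seen
        ]

    def feedback_is_complete(self) -> bool:
        """True once 3 reinforcing and 2 constructive comments are recorded."""
        return len(self.reinforcing) == 3 and len(self.constructive) == 2
```

Keeping the checklist separate from the narrative comments mirrors the form's layout, so the copy handed to the speaker could omit `notes` while retaining the feedback.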

We introduced the SFDP framework during a 2‐hour initial training session. Participants watched videos of teaching, learned to identify the 10 selected teaching behaviors, developed appropriate constructive and reinforcing comments, and practiced giving and receiving peer feedback.

Dyads were created on the basis of predetermined attending schedules. Participants were asked to observe and be observed twice during attending teaching rounds over the course of the academic year. Attending teaching rounds were defined as any preplanned didactic activity for ward teams. The structured observation forms were returned to the study coordinators after the observer had given feedback to the presenter. A copy of the feedback without the observer's notes was also given to each speaker. At the midpoint of the academic year, a refresher session was offered to reinforce those teaching behaviors that were the least frequently performed to date. All participants received a $50.00

Measurements and Data Collection

Participants completed pre‐ and post‐program surveys. The surveys included questions assessing confidence in ability to give feedback, receive feedback without feeling defensive, and teach effectively, as well as attitudes toward peer observation. The post‐program survey was administered at the end of the year and additionally assessed the self‐rated performance of the 10 selected teaching behaviors. A retrospective pre‐ and post‐program assessment was used for this outcome, because this method can be more reliable when participants initially may not have sufficient insight to accurately assess their own competence in specific measures.[21] The post‐program survey also included 4 questions assessing satisfaction with aspects of the program. All questions were structured as statements to which the respondent indicated degree of agreement using a 5‐point Likert scale, where 1=strongly disagree and 5=strongly agree. Structured observation forms used by participants were collected throughout the year to assess frequency of performance of the 10 selected teaching behaviors.

Statistical Analysis

We only analyzed the pre‐ and post‐program surveys that could be matched using anonymous identifiers provided by participants. For both prospective and retrospective measures, mean values and standard deviations were calculated. Wilcoxon signed rank tests for nonparametric data were performed to obtain P values. For all comparisons, a P value of <0.05 was considered significant. All comparisons were performed using Stata version 10 (StataCorp, College Station, TX).
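The analysis steps above (matching surveys by identifier, summarizing with means and SDs, and comparing paired responses with a Wilcoxon signed rank test) can be sketched in plain Python. This is an illustrative sketch, not the Stata code used in the study. The function names and the sample responses are hypothetical, the SD is assumed to be the sample SD, zero differences are dropped, and the P value is computed exactly by enumerating sign assignments. Stata may handle zeros and ties differently, so this sketch is not expected to reproduce the reported P values.

```python
from itertools import product
from statistics import mean, stdev


def match_surveys(pre_by_id, post_by_id):
    """Keep only respondents whose identifier appears in both surveys."""
    shared_ids = sorted(set(pre_by_id) & set(post_by_id))
    pre = [pre_by_id[i] for i in shared_ids]
    post = [post_by_id[i] for i in shared_ids]
    return pre, post


def summarize(scores):
    """Return (mean, sample SD) for a list of Likert scores."""
    return mean(scores), stdev(scores)


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre, post):
    """Return (W_plus, exact two-sided P) for paired samples.

    Zero differences are dropped. Every assignment of signs to the ranks is
    enumerated, so this is practical only for small samples.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    if not diffs:
        return 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = sum(ranks) / 2
    observed_dev = abs(w_plus - expected)
    extreme = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - expected) >= observed_dev - 1e-9:
            extreme += 1
    return w_plus, extreme / 2 ** len(ranks)


# Hypothetical Likert responses (1-5) keyed by anonymous identifier.
pre_survey = {"id01": 3, "id02": 4, "id03": 2, "id04": 3, "id05": 5}
post_survey = {"id01": 4, "id02": 4, "id03": 4, "id04": 5, "id06": 4}

pre, post = match_surveys(pre_survey, post_survey)
print("pre  mean, SD:", summarize(pre))
print("post mean, SD:", summarize(post))
print("W+, P:", wilcoxon_signed_rank(pre, post))
```

In this made-up example, respondents `id05` and `id06` are excluded because each completed only one survey, which is the matching rule the paragraph describes.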

RESULTS

Participant Characteristics and Participation in Program

Of the 37 eligible attending hospitalists, 22 (59%) enrolled. Fourteen were hospital medicine faculty, 6 were hospital medicine fellows, and 2 were internal medicine chief residents. The average ± standard deviation (SD) number of years as a ward attending was 2.2 ± 2.1 years. Seventeen (77%) reported previously having been observed and given feedback by a colleague, and 9 (41%) reported previously observing a colleague for the purpose of giving feedback.

All 22 participants attended 1 of the two 2‐hour training sessions. Ten participants attended an hour‐long midyear refresher session. A total of 19 observation and feedback sessions took place; 15 of them occurred in the first half of the academic year. Fifteen attending hospitalists participated in at least 1 observed teaching session. Of the 11 dyads, 6 completed at least 1 observation of each other. Two dyads performed 2 observations of each other.

Fifteen participants (68% of those enrolled) completed both the pre‐ and post‐program surveys. Among these respondents, the average number of years attending was 2.9 ± 2.2 years. Eight (53%) reported previously having been observed and given feedback by a colleague, and 7 (47%) reported previously observing a colleague for the purpose of giving feedback. For this subset of participants, the average ± SD frequency of being observed during the program was 1.3 ± 0.7, and of observing was 1.1 ± 0.8.

Confidence in Ability to Give Feedback, Receive Feedback, and Teach Effectively

In comparison of pre‐ and post‐intervention measures, participants indicated increased confidence in their ability to evaluate their colleagues and provide feedback in all domains queried. Participants also indicated increased confidence in the efficacy of their feedback to improve their colleagues' teaching skills. Participating in the program did not significantly change pre‐intervention levels of confidence in ability to receive feedback without being defensive or confidence in ability to use feedback to improve teaching skills (Table 1).

| Statement | Mean Pre | SD | Mean Post | SD | P |
|---|---|---|---|---|---|
| I can accurately assess my colleagues' teaching skills. | 3.20 | 0.86 | 4.07 | 0.59 | 0.004 |
| I can give accurate feedback to my colleagues regarding their teaching skills. | 3.40 | 0.63 | 4.20 | 0.56 | 0.002 |
| I can give feedback in a way that my colleague will not feel defensive about their teaching skills. | 3.60 | 0.63 | 4.20 | 0.56 | 0.046 |
| My feedback will improve my colleagues' teaching skills. | 3.40 | 0.51 | 3.93 | 0.59 | 0.011 |
| I can receive feedback from a colleague without being defensive about my teaching skills. | 3.87 | 0.92 | 4.27 | 0.59 | 0.156 |
| I can use feedback from a colleague to improve my teaching skills. | 4.33 | 0.82 | 4.47 | 0.64 | 0.607 |
| I am confident in my ability to teach students and residents during attending rounds.a | 3.21 | 0.89 | 3.71 | 0.83 | 0.026 |
| I am confident in my knowledge of components of effective teaching.a | 3.21 | 0.89 | 3.71 | 0.99 | 0.035 |
| Learners regard me as an effective teacher.a | 3.14 | 0.66 | 3.64 | 0.74 | 0.033 |

Self‐Rated Performance of 10 Selected Teaching Behaviors

In retrospective assessment, participants felt that their performance had improved in all 10 teaching behaviors after the intervention. This perceived improvement reached statistical significance in 8 of the 10 selected behaviors (Table 2).

| SFDP Framework Category From Skeff et al.[18] | When I Give Attending Rounds, I Generally... | Mean Pre | SD | Mean Post | SD | P |
|---|---|---|---|---|---|---|
| 1. Establishing a positive learning climate | Listen to learners | 4.27 | 0.59 | 4.53 | 0.52 | 0.046 |
| | Encourage learners to participate actively in the discussion | 4.07 | 0.70 | 4.60 | 0.51 | 0.009 |
| 2. Controlling the teaching session | Call attention to time | 3.33 | 0.98 | 4.27 | 0.59 | 0.004 |
| 3. Communicating goals | State goals clearly and concisely | 3.40 | 0.63 | 4.27 | 0.59 | 0.001 |
| | State relevance of goals to learners | 3.40 | 0.74 | 4.20 | 0.68 | 0.002 |
| 4. Promoting understanding and retention | Present well‐organized material | 3.87 | 0.64 | 4.07 | 0.70 | 0.083 |
| | Use blackboard or other visual aids | 4.27 | 0.88 | 4.47 | 0.74 | 0.158 |
| 5. Evaluating the learners | Evaluate learners' ability to apply medical knowledge to specific patients | 3.33 | 0.98 | 4.00 | 0.76 | 0.005 |
| 6. Providing feedback to the learners | Explain to learners why he/she was correct or incorrect | 3.47 | 1.13 | 4.13 | 0.64 | 0.009 |
| 7. Promoting self‐directed learning | Motivate learners to learn on their own | 3.20 | 0.86 | 3.73 | 0.70 | 0.005 |
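The count of 8 significant behaviors can be checked directly against the table. The short Python sketch below copies the mean ratings and P values from Table 2 and applies the 0.05 threshold stated under Statistical Analysis. The variable names are illustrative, and the change in means is a simple difference of the published values, not a reanalysis of the underlying data.

```python
ALPHA = 0.05  # significance threshold stated under Statistical Analysis

# (behavior, mean pre, mean post, P) copied from Table 2, in row order.
TABLE_2 = [
    ("Listen to learners", 4.27, 4.53, 0.046),
    ("Encourage learners to participate actively in the discussion", 4.07, 4.60, 0.009),
    ("Call attention to time", 3.33, 4.27, 0.004),
    ("State goals clearly and concisely", 3.40, 4.27, 0.001),
    ("State relevance of goals to learners", 3.40, 4.20, 0.002),
    ("Present well-organized material", 3.87, 4.07, 0.083),
    ("Use blackboard or other visual aids", 4.27, 4.47, 0.158),
    ("Evaluate learners' ability to apply medical knowledge to specific patients",
     3.33, 4.00, 0.005),
    ("Explain to learners why he/she was correct or incorrect", 3.47, 4.13, 0.009),
    ("Motivate learners to learn on their own", 3.20, 3.73, 0.005),
]

significant = [name for name, _, _, p in TABLE_2 if p < ALPHA]
not_significant = [name for name, _, _, p in TABLE_2 if p >= ALPHA]

# Change in mean self-rating, rounded to the table's 2 decimal places.
mean_change = {name: round(post - pre, 2) for name, pre, post, _ in TABLE_2}
largest = max(mean_change, key=mean_change.get)

print(f"{len(significant)} of {len(TABLE_2)} behaviors reached P < {ALPHA}")
print("Not significant:", not_significant)
print("Largest change in mean rating:", largest, mean_change[largest])
```

The two behaviors that fall short of significance both belong to the "Promoting understanding and retention" category.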

Attitudes Toward Peer Observation and Feedback

There were no significant changes in attitudes toward observation and feedback on teaching. A strong preprogram belief that observation and feedback can improve teaching skills increased slightly, but not significantly, after the program. Participants remained largely neutral in expectation of discomfort with giving or receiving peer feedback. Prior to the program, there was a slight tendency to believe that observation and feedback is more effective when done by more skilled and experienced colleagues; this belief diminished, but not significantly (Table 3).

| Statement | Mean Pre | SD | Mean Post | SD | P |
|---|---|---|---|---|---|
| Being observed and receiving feedback can improve my teaching skills. | 4.47 | 1.06 | 4.60 | 0.51 | 0.941 |
| My teaching skills cannot improve without observation with feedback. | 2.93 | 1.39 | 3.47 | 1.30 | 0.188 |
| Observation with feedback is most effective when done by colleagues who are expert educators. | 3.53 | 0.83 | 3.33 | 0.98 | 0.180 |
| Observation with feedback is most effective when done by colleagues who have been teaching many years. | 3.40 | 0.91 | 3.07 | 1.03 | 0.143 |
| The thought of observing and giving feedback to my colleagues makes me uncomfortable. | 3.13 | 0.92 | 3.00 | 1.13 | 0.565 |
| The thought of being observed by a colleague and receiving feedback makes me uncomfortable. | 3.20 | 0.94 | 3.27 | 1.22 | 0.747 |

Program Evaluation

The number of responses to the program evaluation questions varied. Results are reported as n, mean ± SD on a scale where 1=strongly disagree and 5=strongly agree. The majority of participants found the program to be very beneficial: "My teaching has improved as a result of this program" (n=14, 4.9 ± 0.3). Both giving (n=11, 4.2 ± 1.6) and receiving (n=13, 4.6 ± 1.1) feedback were felt to have improved teaching skills. There was strong agreement from respondents that they would participate in the program in the future: "I am likely to participate in this program in the future" (n=12, 4.6 ± 0.9).

DISCUSSION

Previous studies have shown that teaching skills are unlikely to improve without feedback,[28, 29, 30] yet feedback for hospitalists is usually limited to summative, end‐rotation evaluations from learners, disconnected from the teaching encounter. Our theory‐based, rationally designed peer observation and feedback program resulted in increased confidence in the ability to give feedback and to teach effectively; confidence in the ability to receive feedback started relatively high and did not change significantly. Participation did not result in negative attitudes toward giving and receiving feedback from colleagues. Participants self‐reported increased performance of important teaching behaviors. Most participants rated the program very highly, and endorsed improved teaching skills as a result of the program.

Our experience provides several lessons for other groups considering the implementation of peer feedback to strengthen teaching. First, we suggest that hospitalist groups may expect variable degrees of participation in a voluntary peer feedback program. In our program, 41% of eligible attendings did not participate. We did not specifically investigate why; we speculate that they may not have had the time, believed that their teaching skills were already strong, or they may have been daunted at the idea of peer review. It is also possible that participants were a self‐selected group who were the most motivated to strengthen their teaching. Second, we note the steep decline in the number of observations in the second half of the year. Informal assessment for reasons for the drop‐off suggested that after initial enthusiasm for the program, navigating the logistics of observing the same peer in the second half of the year proved to be prohibitive to many participants. Therefore, future versions of peer feedback programs may benefit from removing the dyad requirement and encouraging all participants to observe one another whenever possible.

With these lessons in mind, we believe that a peer observation program could be implemented by other hospital medicine groups. The program does not require extensive content expertise or senior faculty but does require engaged leadership and interested and motivated faculty. Groups could identify an individual in their group with an interest in clinical teaching who could then be responsible for creating the training session (materials available upon request). We believe that with only a small upfront investment, most hospital medicine groups could use this as a model to build a peer observation program aimed at improving clinical teaching.

Our study has several limitations. As noted above, our participation rate was 59%, and the number of participating attendings declined through the year. We did not examine whether our program resulted in advances in the knowledge, skills, or attitudes of the learners; because each attending teaching session was unique, it was not possible to measure changes in learner knowledge. Our primary outcome measures relied on self‐assessment rather than higher order and more objective measures of teaching efficacy. Furthermore, our results may not be generalizable to other programs, given the heterogeneity in service structures and teaching practices across the country. This was an uncontrolled study; some of the outcomes may have naturally occurred independent of the intervention due to the natural evolution of clinical teaching. As with any educational intervention that integrates multiple strategies, we are not able to discern if the improved outcomes were the result of the initial didactic sessions, the refresher sessions, or the peer feedback itself. Serial assessments of frequency of teaching behaviors were not done due to the low number of observations in the second half of the program. Finally, our 10‐item tool derived from the validated SFDP‐26 tool is not itself a validated assessment of teaching.

We acknowledge that the increased confidence seen in our participants does not necessarily predict improved performance. Although increased confidence in core skills is a necessary step that can lead to changes in behavior, further studies are needed to determine whether the increase in faculty confidence that results from peer observation and feedback translates into improved educational outcomes.

The pressure on hospitalists to be excellent teachers is here to stay. Resources to train these faculty are scarce, yet we must prioritize faculty development in teaching to optimize the training of future physicians. Our data illustrate the benefits of peer observation and feedback. Hospitalist programs should consider this option in addressing the professional development needs of their faculty.

Acknowledgements

The authors thank Zachary Martin for administrative support for the program; Gurpreet Dhaliwal, MD, and Patricia O'Sullivan, PhD, for aid in program development; and John Amory, MD, MPH, for critical review of the manuscript. The authors thank the University of California, San Francisco Office of Medical Education for funding this work with an Educational Research Grant.

Disclosures: Funding: UCSF Office of Medical Education Educational Research Grant. Ethics approval: approved by UCSF Committee on Human Research. Previous presentations: Previous versions of this work were presented as an oral presentation at the University of California at San Francisco Medical Education Day, San Francisco, California, April 27, 2012, and as a poster presentation at the Society for General Internal Medicine 35th Annual Meeting, Orlando, Florida, May 9–12, 2012. The authors report no conflicts of interest.

References

1. Hospitalist involvement in internal medicine residencies. J Hosp Med. 2009;4(8):471–475.
2. Is there a relationship between attending physicians' and residents' teaching skills and students' examination scores? Acad Med. 2000;75(11):1144–1146.
3. Six‐year documentation of the association between excellent clinical teaching and improved students' examination performances. Acad Med. 2000;75(10 suppl):S62–S64.
4. Effect of clinical teaching on student performance during a medicine clerkship. Am J Med. 2001;110(3):205–209.
5. Implications of the hospitalist model for medical students' education. Acad Med. 2001;76(4):324–330.
6. On educating and being a physician in the hospitalist era. Am J Med. 2001;111(9B):45S–47S.
7. The role of hospitalists in medical education. Am J Med. 1999;107(4):305–309.
8. Challenges and opportunities in academic hospital medicine: report from the academic hospital medicine summit. J Gen Intern Med. 2009;24(5):636–641.
9. Impact of duty hour regulations on medical students' education: views of key clinical faculty. J Gen Intern Med. 2008;23(7):1084–1089.
10. The impact of resident duty hours reform on the internal medicine core clerkship: results from the clerkship directors in internal medicine survey. Acad Med. 2006;81(12):1038–1044.
11. Effects of resident work hour limitations on faculty professional lives. J Gen Intern Med. 2008;23(7):1077–1083.
12. Teaching internal medicine residents in the new era. Inpatient attending with duty‐hour regulations. J Gen Intern Med. 2006;21(5):447–452.
13. Survey of US academic hospitalist leaders about mentorship and academic activities in hospitalist groups. J Hosp Med. 2011;6(1):5–9.
14. Using observed structured teaching exercises (OSTE) to enhance hospitalist teaching during family centered rounds. J Hosp Med. 2011;6(7):423–427.
15. Investing in the future: building an academic hospitalist faculty development program. J Hosp Med. 2011;6(3):161–166.
16. How to become a better clinical teacher: a collaborative peer observation process. Med Teach. 2011;33(2):151–155.
17. Reframing research on faculty development. Acad Med. 2011;86(4):421–428.
18. The Stanford faculty development program: a dissemination approach to faculty development for medical teachers. Teach Learn Med. 1992;4(3):180–187.
19. Evaluation of a method for improving the teaching performance of attending physicians. Am J Med. 1983;75(3):465–470.
20. The impact of the Stanford Faculty Development Program on ambulatory teaching behavior. J Gen Intern Med. 2006;21(5):430–434.
21. Evaluation of a medical faculty development program: a comparison of traditional pre/post and retrospective pre/post self‐assessment ratings. Eval Health Prof. 1992;15(3):350–366.
22. Evaluation of the seminar method to improve clinical teaching. J Gen Intern Med. 1986;1(5):315–322.
23. Regional teaching improvement programs for community‐based teachers. Am J Med. 1999;106(1):76–80.
24. Improving clinical teaching. Evaluation of a national dissemination program. Arch Intern Med. 1992;152(6):1156–1161.
25. Factorial validation of a widely disseminated educational framework for evaluating clinical teachers. Acad Med. 1998;73(6):688–695.
26. Student and resident evaluations of faculty--how reliable are they? Factorial validation of an educational framework using residents' evaluations of clinician‐educators. Acad Med. 1999;74(10):S25–S27.
27. Students' global assessments of clinical teachers: a reliable and valid measure of teaching effectiveness. Acad Med. 1998;73(10 suppl):S72–S74.
28. The practice of giving feedback to improve teaching: what is effective? J Higher Educ. 1993;64(5):574–593.
29. Faculty development. A resource for clinical teachers. J Gen Intern Med. 1997;12(suppl 2):S56–S63.
30. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME guide no. 8. Med Teach. 2006;28(6):497–526.
31. Strategies for improving teaching practices: a comprehensive approach to faculty development. Acad Med. 1998;73(4):387–396.
32. Relationship between systematic feedback to faculty and ratings of clinical teaching. Acad Med. 1996;71(10):1100–1102.
33. Lessons learned from a peer review of bedside teaching. Acad Med. 2004;79(4):343–346.
34. Evaluating an instrument for the peer review of inpatient teaching. Med Teach. 2003;25(2):131–135.
35. Twelve tips for peer observation of teaching. Med Teach. 2007;29(4):297–300.
36. Assessing the quality of teaching. Am J Med. 1999;106(4):381–384.
37. To the point: medical education reviews--providing feedback. Am J Obstet Gynecol. 2007;196(6):508–513.

Hospitalists are increasingly responsible for educating students and housestaff in internal medicine.[1] Because the quality of teaching is an important factor in learning,[2, 3, 4] leaders in medical education have expressed concern over the rapid shift of teaching responsibilities to this new group of educators.[5, 6, 7, 8] Moreover, recent changes in duty hour restrictions have strained both student and resident education,[9, 10] necessitating the optimization of inpatient teaching.[11, 12] Many hospitalists have recently finished residency and have not had formal training in clinical teaching. Collectively, most hospital medicine groups are early in their careers, have significant clinical obligations,[13] and may not have the bandwidth or expertise to provide faculty development for improving clinical teaching.

Rationally designed and theoretically sound faculty development to improve inpatient clinical teaching is required to meet this challenge. There are a limited number of reports describing faculty development focused on strengthening the teaching of hospitalists, and only 3 utilized direct observation and feedback, 1 of which involved peer observation in the clinical setting.[14, 15, 16] This 2011 report described a narrative method of peer observation and feedback but did not assess for efficacy of the program.[16] To our knowledge, there have been no studies of structured peer observation and feedback to optimize hospitalist attendings' teaching which have evaluated the efficacy of the intervention.

We developed a faculty development program based on peer observation and feedback based on actual teaching practices, using structured feedback anchored in validated and observable measures of effective teaching. We hypothesized that participation in the program would increase confidence in key teaching skills, increase confidence in the ability to give and receive peer feedback, and strengthen attitudes toward peer observation and feedback.

METHODS

Subjects and Setting

The study was conducted at a 570‐bed academic, tertiary care medical center affiliated with an internal medicine residency program of 180 housestaff. Internal medicine ward attendings rotate during 2‐week blocks, and are asked to give formal teaching rounds 3 or 4 times a week (these sessions are distinct from teaching which may happen while rounding on patients). Ward teams are composed of 1 senior resident, 2 interns, and 1 to 2 medical students. The majority of internal medicine ward attendings are hospitalist faculty, hospital medicine fellows, or medicine chief residents. Because outpatient general internists and subspecialists only occasionally attend on the wards, we refer to ward attendings as attending hospitalists in this article. All attending hospitalists were eligible to participate if they attended on the wards at least twice during the academic year. The institutional review board at the University of California, San Francisco approved this study.

Theoretical Framework

We reviewed the literature to optimize our program in 3 conceptual domains: (1) overall structure of the program, (2) definition of effective teaching and (3) effective delivery of feedback.

Over‐reliance on didactics that are disconnected from the work environment is a weakness of traditional faculty development. Individuals may attempt to apply what they have learned, but receiving feedback on their actual workplace practices may be difficult. A recent perspective responds to this fragmentation by conceptualizing faculty development as embedded in both a faculty development community and a workplace community. This model emphasizes translating what faculty have learned in the classroom into practice, and highlights the importance of coaching in the workplace.[17] In accordance with this framework, we designed our program to reach beyond isolated workshops to effectively penetrate the workplace community.

We selected the Stanford Faculty Development Program (SFDP) framework for optimal clinical teaching as our model for recognizing and improving teaching skills. The SFDP was developed as a theory‐based intensive feedback method to improve teaching skills,[18, 19] and has been shown to improve teaching in the ambulatory[20] and inpatient settings.[21, 22] In this widely disseminated framework,[23, 24] excellent clinical teaching is grounded in optimizing observable behaviors organized around 7 domains.[18] A 26‐item instrument to evaluate clinical teaching (SFDP‐26) has been developed based on this framework[25] and has been validated in multiple settings.[26, 27] High‐quality teaching, as defined by the SFDP framework, has been correlated with improved educational outcomes in internal medicine clerkship students.[4]

Feedback is crucial to optimizing teaching,[28, 29, 30] particularly when it incorporates consultation[31] and narrative comments.[32] Peer feedback has several advantages over feedback from learners or from other non‐peer observers (such as supervisors or other evaluators). First, the observers benefit by gaining insight into their own weaknesses and potential areas for growth as teachers.[33, 34] Additionally, collegial observation and feedback may promote supportive teaching relationships between faculty.[35] Furthermore, peer review overcomes the biases that may be present in learner evaluations.[36] We established a 3‐stage feedback technique based on a previously described method.[37] In the first step, the observer elicits self‐appraisal from the speaker. Next, the observer provides specific, behaviorally anchored feedback in the form of 3 reinforcing comments and 2 constructive comments. Finally, the observer elicits a reflection on the feedback and helps develop a plan to improve teaching in future opportunities. We used a dyad model (paired participants repeatedly observe and give feedback to each other) to support mutual benefit and reciprocity between attendings.

Intervention

Using a modified Delphi approach, 5 medical education experts selected the 10 items that are most easily observable and salient to formal attending teaching rounds from the SFDP‐26 teaching assessment tool. A structured observation form was created, which included a checklist of the 10 selected items, space for note taking, and a template for narrative feedback (Figure 1).

We introduced the SFDP framework during a 2‐hour initial training session. Participants watched videos of teaching, learned to identify the 10 selected teaching behaviors, developed appropriate constructive and reinforcing comments, and practiced giving and receiving peer feedback.

Dyads were created on the basis of predetermined attending schedules. Participants were asked to observe and be observed twice during attending teaching rounds over the course of the academic year. Attending teaching rounds were defined as any preplanned didactic activity for ward teams. The structured observation forms were returned to the study coordinators after the observer had given feedback to the presenter. A copy of the feedback without the observer's notes was also given to each speaker. At the midpoint of the academic year, a refresher session was offered to reinforce those teaching behaviors that were the least frequently performed to date. All participants received a $50.00

Measurements and Data Collection

Participants were given pre‐ and post‐program surveys. The surveys included questions assessing confidence in ability to give feedback, receive feedback without feeling defensive, and teach effectively, as well as attitudes toward peer observation. The post‐program survey was administered at the end of the year and additionally assessed the self‐rated performance of the 10 selected teaching behaviors. A retrospective pre‐ and post‐program assessment was used for this outcome, because this method can be more reliable when participants initially may not have sufficient insight to accurately assess their own competence in specific measures.[21] The post‐program survey also included 4 questions assessing satisfaction with aspects of the program. All questions were structured as statements to which the respondent indicated degree of agreement using a 5‐point Likert scale, where 1=strongly disagree and 5=strongly agree. Structured observation forms used by participants were collected throughout the year to assess frequency of performance of the 10 selected teaching behaviors.

Statistical Analysis

We only analyzed the pre‐ and post‐program surveys that could be matched using anonymous identifiers provided by participants. For both prospective and retrospective measures, mean values and standard deviations were calculated. Wilcoxon signed rank tests for nonparametric data were performed to obtain P values. For all comparisons, a P value of <0.05 was considered significant. All comparisons were performed using Stata version 10 (StataCorp, College Station, TX).
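As an illustration only (this is not the authors' Stata code), the matching and testing steps described above can be sketched in Python. The respondent identifiers and scores below are hypothetical, as are the function names. The test shown uses a normal approximation, drops zero differences, and assigns average ranks to ties; statistical packages differ in how they handle zeros and ties, so P values from this sketch may not match those from other software exactly.

```python
import math
from statistics import mean, stdev


def match_surveys(pre, post):
    """Return (pre, post) score pairs for anonymous IDs present in both surveys."""
    shared_ids = sorted(set(pre) & set(post))
    return [(pre[i], post[i]) for i in shared_ids]


def wilcoxon_signed_rank(pairs):
    """Two-sided Wilcoxon signed-rank test using a normal approximation.

    Zero differences are dropped, tied absolute differences share their
    average rank, and the variance is corrected for ties.
    Returns (W_plus, p_value).
    """
    diffs = [after - before for before, after in pairs if after != before]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = n * (n + 1) / 4
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - expected) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, p_value


# Hypothetical 5-point Likert responses keyed by anonymous identifier.
pre_survey = {"a": 3, "b": 3, "c": 4, "d": 2, "e": 3}
post_survey = {"a": 4, "b": 4, "c": 4, "d": 4, "e": 5, "f": 5}

matched = match_surveys(pre_survey, post_survey)  # "f" has no pre-program survey
pre_scores = [before for before, _ in matched]
post_scores = [after for _, after in matched]
mean_pre, sd_pre = mean(pre_scores), stdev(pre_scores)
mean_post, sd_post = mean(post_scores), stdev(post_scores)
w_plus, p_value = wilcoxon_signed_rank(matched)
```

Unmatched respondents (such as the hypothetical "f") are excluded before any statistic is computed, which mirrors the restriction to matched surveys described in the text.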

RESULTS

Participant Characteristics and Participation in Program

Of the 37 eligible attending hospitalists, 22 (59%) enrolled. Fourteen were hospital medicine faculty, 6 were hospital medicine fellows, and 2 were internal medicine chief residents. The average ± standard deviation (SD) number of years as a ward attending was 2.2 ± 2.1 years. Seventeen (77%) reported previously having been observed and given feedback by a colleague, and 9 (41%) reported previously observing a colleague for the purpose of giving feedback.

All 22 participants attended 1 of two 2‐hour training sessions. Ten participants attended an hour‐long midyear refresher session. A total of 19 observation and feedback sessions took place; 15 of them occurred in the first half of the academic year. Fifteen attending hospitalists participated in at least 1 observed teaching session. Of the 11 dyads, 6 completed at least 1 observation of each other. Two dyads performed 2 observations of each other.

Fifteen participants (68% of those enrolled) completed both the pre‐ and post‐program surveys. Among these respondents, the average number of years attending was 2.9 ± 2.2 years. Eight (53%) reported previously having been observed and given feedback by a colleague, and 7 (47%) reported previously observing a colleague for the purpose of giving feedback. For this subset of participants, the average ± SD frequency of being observed during the program was 1.3 ± 0.7, and observing was 1.1 ± 0.8.
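The participation figures reported above follow directly from the counts in the text. A minimal sketch of that arithmetic is shown below; the helper name `pct` is an assumption introduced for illustration, and percentages are rounded to the nearest whole number as in the article.

```python
def pct(part, whole):
    """Percentage of `whole` represented by `part`, rounded to a whole number."""
    return round(100 * part / whole)


# Counts taken from the text.
eligible, enrolled, completed_both_surveys = 37, 22, 15

enrollment_rate = pct(enrolled, eligible)                   # share of eligible attendings who enrolled
nonparticipation_rate = pct(eligible - enrolled, eligible)  # share who did not enroll
completion_rate = pct(completed_both_surveys, enrolled)     # share of enrollees with matched surveys
```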

Confidence in Ability to Give Feedback, Receive Feedback, and Teach Effectively

In comparison of pre‐ and post‐intervention measures, participants indicated increased confidence in their ability to evaluate their colleagues and provide feedback in all domains queried. Participants also indicated increased confidence in the efficacy of their feedback to improve their colleagues' teaching skills. Participating in the program did not significantly change pre‐intervention levels of confidence in ability to receive feedback without being defensive or confidence in ability to use feedback to improve teaching skills (Table 1).

| Statement | Mean Pre | SD | Mean Post | SD | P |
|---|---|---|---|---|---|
| I can accurately assess my colleagues' teaching skills. | 3.20 | 0.86 | 4.07 | 0.59 | 0.004 |
| I can give accurate feedback to my colleagues regarding their teaching skills. | 3.40 | 0.63 | 4.20 | 0.56 | 0.002 |
| I can give feedback in a way that my colleague will not feel defensive about their teaching skills. | 3.60 | 0.63 | 4.20 | 0.56 | 0.046 |
| My feedback will improve my colleagues' teaching skills. | 3.40 | 0.51 | 3.93 | 0.59 | 0.011 |
| I can receive feedback from a colleague without being defensive about my teaching skills. | 3.87 | 0.92 | 4.27 | 0.59 | 0.156 |
| I can use feedback from a colleague to improve my teaching skills. | 4.33 | 0.82 | 4.47 | 0.64 | 0.607 |
| I am confident in my ability to teach students and residents during attending rounds.a | 3.21 | 0.89 | 3.71 | 0.83 | 0.026 |
| I am confident in my knowledge of components of effective teaching.a | 3.21 | 0.89 | 3.71 | 0.99 | 0.035 |
| Learners regard me as an effective teacher.a | 3.14 | 0.66 | 3.64 | 0.74 | 0.033 |

Self‐Rated Performance of 10 Selected Teaching Behaviors

In retrospective assessment, participants felt that their performance had improved in all 10 teaching behaviors after the intervention. This perceived improvement reached statistical significance in 8 of the 10 selected behaviors (Table 2).

| SFDP Framework Category From Skeff et al.[18] | When I Give Attending Rounds, I Generally... | Mean Pre | SD | Mean Post | SD | P |
|---|---|---|---|---|---|---|
| 1. Establishing a positive learning climate | Listen to learners | 4.27 | 0.59 | 4.53 | 0.52 | 0.046 |
| | Encourage learners to participate actively in the discussion | 4.07 | 0.70 | 4.60 | 0.51 | 0.009 |
| 2. Controlling the teaching session | Call attention to time | 3.33 | 0.98 | 4.27 | 0.59 | 0.004 |
| 3. Communicating goals | State goals clearly and concisely | 3.40 | 0.63 | 4.27 | 0.59 | 0.001 |
| | State relevance of goals to learners | 3.40 | 0.74 | 4.20 | 0.68 | 0.002 |
| 4. Promoting understanding and retention | Present well‐organized material | 3.87 | 0.64 | 4.07 | 0.70 | 0.083 |
| | Use blackboard or other visual aids | 4.27 | 0.88 | 4.47 | 0.74 | 0.158 |
| 5. Evaluating the learners | Evaluate learners' ability to apply medical knowledge to specific patients | 3.33 | 0.98 | 4.00 | 0.76 | 0.005 |
| 6. Providing feedback to the learners | Explain to learners why he/she was correct or incorrect | 3.47 | 1.13 | 4.13 | 0.64 | 0.009 |
| 7. Promoting self‐directed learning | Motivate learners to learn on their own | 3.20 | 0.86 | 3.73 | 0.70 | 0.005 |

Attitudes Toward Peer Observation and Feedback

There were no significant changes in attitudes toward observation and feedback on teaching. A strong preprogram belief that observation and feedback can improve teaching skills increased slightly, but not significantly, after the program. Participants remained largely neutral in expectation of discomfort with giving or receiving peer feedback. Prior to the program, there was a slight tendency to believe that observation and feedback is more effective when done by more skilled and experienced colleagues; this belief diminished, but not significantly (Table 3).

| Statement | Mean Pre | SD | Mean Post | SD | P |
|---|---|---|---|---|---|
| Being observed and receiving feedback can improve my teaching skills. | 4.47 | 1.06 | 4.60 | 0.51 | 0.941 |
| My teaching skills cannot improve without observation with feedback. | 2.93 | 1.39 | 3.47 | 1.30 | 0.188 |
| Observation with feedback is most effective when done by colleagues who are expert educators. | 3.53 | 0.83 | 3.33 | 0.98 | 0.180 |
| Observation with feedback is most effective when done by colleagues who have been teaching many years. | 3.40 | 0.91 | 3.07 | 1.03 | 0.143 |
| The thought of observing and giving feedback to my colleagues makes me uncomfortable. | 3.13 | 0.92 | 3.00 | 1.13 | 0.565 |
| The thought of being observed by a colleague and receiving feedback makes me uncomfortable. | 3.20 | 0.94 | 3.27 | 1.22 | 0.747 |

Program Evaluation

There were a variable number of responses to the program evaluation questions. The majority of participants found the program to be very beneficial (1=strongly disagree, 5=strongly agree [n, mean ± SD]): "My teaching has improved as a result of this program" (n=14, 4.9 ± 0.3). Both giving (n=11, 4.2 ± 1.6) and receiving (n=13, 4.6 ± 1.1) feedback were felt to have improved teaching skills. There was strong agreement from respondents that they would participate in the program in the future: "I am likely to participate in this program in the future" (n=12, 4.6 ± 0.9).

DISCUSSION

Previous studies have shown that teaching skills are unlikely to improve without feedback,[28, 29, 30] yet feedback for hospitalists is usually limited to summative, end‐of‐rotation evaluations from learners, disconnected from the teaching encounter. Our theory‐based, rationally designed peer observation and feedback program resulted in increased confidence in the ability to give feedback and to teach effectively; confidence in the ability to receive and use feedback did not change significantly. Participation did not result in negative attitudes toward giving and receiving feedback from colleagues. Participants self‐reported increased performance of important teaching behaviors. Most participants rated the program very highly and endorsed improved teaching skills as a result of the program.

Our experience provides several lessons for other groups considering the implementation of peer feedback to strengthen teaching. First, we suggest that hospitalist groups may expect variable degrees of participation in a voluntary peer feedback program. In our program, 41% of eligible attendings did not participate. We did not specifically investigate why; we speculate that they may not have had the time, may have believed that their teaching skills were already strong, or may have been daunted by the idea of peer review. It is also possible that participants were a self‐selected group who were the most motivated to strengthen their teaching. Second, we note the steep decline in the number of observations in the second half of the year. Informal assessment of the reasons for the drop‐off suggested that, after initial enthusiasm for the program, the logistics of observing the same peer in the second half of the year proved prohibitive for many participants. Therefore, future versions of peer feedback programs may benefit from removing the dyad requirement and encouraging all participants to observe one another whenever possible.

With these lessons in mind, we believe that a peer observation program could be implemented by other hospital medicine groups. The program does not require extensive content expertise or senior faculty but does require engaged leadership and interested and motivated faculty. Groups could identify an individual in their group with an interest in clinical teaching who could then be responsible for creating the training session (materials available upon request). We believe that with only a small upfront investment, most hospital medicine groups could use this as a model to build a peer observation program aimed at improving clinical teaching.

Our study has several limitations. As noted above, our participation rate was 59%, and the number of participating attendings declined through the year. We did not examine whether our program resulted in advances in the knowledge, skills, or attitudes of the learners; because each attending teaching session was unique, it was not possible to measure changes in learner knowledge. Our primary outcome measures relied on self‐assessment rather than higher order and more objective measures of teaching efficacy. Furthermore, our results may not be generalizable to other programs, given the heterogeneity in service structures and teaching practices across the country. This was an uncontrolled study; some of the outcomes may have naturally occurred independent of the intervention due to the natural evolution of clinical teaching. As with any educational intervention that integrates multiple strategies, we are not able to discern if the improved outcomes were the result of the initial didactic sessions, the refresher sessions, or the peer feedback itself. Serial assessments of frequency of teaching behaviors were not done due to the low number of observations in the second half of the program. Finally, our 10‐item tool derived from the validated SFDP‐26 tool is not itself a validated assessment of teaching.

We acknowledge that the increased confidence seen in our participants does not necessarily predict improved performance. Although increased confidence in core skills is a necessary step that can lead to changes in behavior, further studies are needed to determine whether the increase in faculty confidence that results from peer observation and feedback translates into improved educational outcomes.

The pressure on hospitalists to be excellent teachers is here to stay. Resources to train these faculty are scarce, yet we must prioritize faculty development in teaching to optimize the training of future physicians. Our data illustrate the benefits of peer observation and feedback. Hospitalist programs should consider this option in addressing the professional development needs of their faculty.

Acknowledgements

The authors thank Zachary Martin for administrative support for the program; Gurpreet Dhaliwal, MD, and Patricia O'Sullivan, PhD, for aid in program development; and John Amory, MD, MPH, for critical review of the manuscript. The authors thank the University of California, San Francisco Office of Medical Education for funding this work with an Educational Research Grant.

Disclosures: Funding: UCSF Office of Medical Education Educational Research Grant. Ethics approval: approved by UCSF Committee on Human Research. Previous presentations: Previous versions of this work were presented as an oral presentation at the University of California at San Francisco Medical Education Day, San Francisco, California, April 27, 2012, and as a poster presentation at the Society of General Internal Medicine 35th Annual Meeting, Orlando, Florida, May 9–12, 2012. The authors report no conflicts of interest.


The pressure on hospitalists to be excellent teachers is here to stay. Resources to train these faculty are scarce, yet we must prioritize faculty development in teaching to optimize the training of future physicians. Our data illustrate the benefits of peer observation and feedback. Hospitalist programs should consider this option in addressing the professional development needs of their faculty.

Acknowledgements

The authors thank Zachary Martin for administrative support for the program; Gurpreet Dhaliwal, MD, and Patricia O'Sullivan, PhD, for aid in program development; and John Amory, MD, MPH, for critical review of the manuscript. The authors thank the University of California, San Francisco Office of Medical Education for funding this work with an Educational Research Grant.

Disclosures: Funding: UCSF Office of Medical Education Educational Research Grant. Ethics approval: approved by UCSF Committee on Human Research. Previous presentations: Previous versions of this work were presented as an oral presentation at the University of California at San Francisco Medical Education Day, San Francisco, California, April 27, 2012, and as a poster presentation at the Society for General Internal Medicine 35th Annual Meeting, Orlando, Florida, May 9–12, 2012. The authors report no conflicts of interest.

- , , . Hospitalist involvement in internal medicine residencies. J Hosp Med. 2009;4(8):471–475.

- , , , , , . Is there a relationship between attending physicians' and residents' teaching skills and students' examination scores? Acad Med. 2000;75(11):1144–1146.

- , , . Six‐year documentation of the association between excellent clinical teaching and improved students' examination performances. Acad Med. 2000;75(10 suppl):S62–S64.

- , . Effect of clinical teaching on student performance during a medicine clerkship. Am J Med. 2001;110(3):205–209.

- , . Implications of the hospitalist model for medical students' education. Acad Med. 2001;76(4):324–330.

- . On educating and being a physician in the hospitalist era. Am J Med. 2001;111(9B):45S–47S.

- , . The role of hospitalists in medical education. Am J Med. 1999;107(4):305–309.

- , , , , , . Challenges and opportunities in academic hospital medicine: report from the academic hospital medicine summit. J Gen Intern Med. 2009;24(5):636–641.

- , , , et al. Impact of duty hour regulations on medical students' education: views of key clinical faculty. J Gen Intern Med. 2008;23(7):1084–1089.

- , , , , , . The impact of resident duty hours reform on the internal medicine core clerkship: results from the clerkship directors in internal medicine survey. Acad Med. 2006;81(12):1038–1044.

- , , , . Effects of resident work hour limitations on faculty professional lives. J Gen Intern Med. 2008;23(7):1077–1083.

- , . Teaching internal medicine residents in the new era. Inpatient attending with duty‐hour regulations. J Gen Intern Med. 2006;21(5):447–452.

- , , , . Survey of US academic hospitalist leaders about mentorship and academic activities in hospitalist groups. J Hosp Med. 2011;6(1):5–9.

- , , , . Using observed structured teaching exercises (OSTE) to enhance hospitalist teaching during family centered rounds. J Hosp Med. 2011;6(7):423–427.

- , , , . Investing in the future: building an academic hospitalist faculty development program. J Hosp Med. 2011;6(3):161–166.

- , , , . How to become a better clinical teacher: a collaborative peer observation process. Med Teach. 2011;33(2):151–155.

- , . Reframing research on faculty development. Acad Med. 2011;86(4):421–428.

- , , , et al. The Stanford faculty development program: a dissemination approach to faculty development for medical teachers. Teach Learn Med. 1992;4(3):180–187.

- . Evaluation of a method for improving the teaching performance of attending physicians. Am J Med. 1983;75(3):465–470.

- , , , . The impact of the Stanford Faculty Development Program on ambulatory teaching behavior. J Gen Intern Med. 2006;21(5):430–434.

- , , . Evaluation of a medical faculty development program: a comparison of traditional pre/post and retrospective pre/post self‐assessment ratings. Eval Health Prof. 1992;15(3):350–366.

- , , , , . Evaluation of the seminar method to improve clinical teaching. J Gen Intern Med. 1986;1(5):315–322.

- , , , , . Regional teaching improvement programs for community‐based teachers. Am J Med. 1999;106(1):76–80.

- , , , . Improving clinical teaching. Evaluation of a national dissemination program. Arch Intern Med. 1992;152(6):1156–1161.

- , , , . Factorial validation of a widely disseminated educational framework for evaluating clinical teachers. Acad Med. 1998;73(6):688–695.

- , , , . Student and resident evaluations of faculty—how reliable are they? Factorial validation of an educational framework using residents' evaluations of clinician‐educators. Acad Med. 1999;74(10):S25–S27.

- , . Students' global assessments of clinical teachers: a reliable and valid measure of teaching effectiveness. Acad Med. 1998;73(10 suppl):S72–S74.

- . The practice of giving feedback to improve teaching: what is effective? J Higher Educ. 1993;64(5):574–593.

- , , , et al. Faculty development. A resource for clinical teachers. J Gen Intern Med. 1997;12(suppl 2):S56–S63.

- , , , et al. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME guide no. 8. Med Teach. 2006;28(6):497–526.

- , . Strategies for improving teaching practices: a comprehensive approach to faculty development. Acad Med. 1998;73(4):387–396.

- , . Relationship between systematic feedback to faculty and ratings of clinical teaching. Acad Med. 1996;71(10):1100–1102.

- . Lessons learned from a peer review of bedside teaching. Acad Med. 2004;79(4):343–346.

- , , , . Evaluating an instrument for the peer review of inpatient teaching. Med Teach. 2003;25(2):131–135.

- , , . Twelve tips for peer observation of teaching. Med Teach. 2007;29(4):297–300.

- , . Assessing the quality of teaching. Am J Med. 1999;106(4):381–384.

- , , , , , . To the point: medical education reviews—providing feedback. Am J Obstet Gynecol. 2007;196(6):508–513.
