For chronic abdominal pain, THC resembled placebo

Seven weeks of treatment with delta-9-tetrahydrocannabinol (THC) did not improve chronic abdominal pain in a placebo-controlled trial of 65 adults.

Treatment “was safe and well tolerated,” but did not significantly reduce pain scores or improve secondary efficacy outcomes, Marjan de Vries, MSc, and her associates wrote in the July issue of Clinical Gastroenterology and Hepatology (doi: 10.1016/j.cgh.2016.09.147). Studies have not clearly shown that THC improves central pain sensitization, a key mechanism in chronic abdominal pain, they noted. Future studies of THC and central sensitization should include quantitative sensory testing or electroencephalography, they added.

Source: American Gastroenterological Association

Treatment-refractory chronic abdominal pain is common after abdominal surgery or in chronic pancreatitis, wrote Ms. de Vries of Radboud University Medical Center, Nijmegen, the Netherlands. Affected patients tend to develop central sensitization, or hyper-responsive nociceptive pathways in the central nervous system. When this happens, pain no longer couples reliably with peripheral stimuli, and therapy targeting central nociceptive pathways is indicated. THC, the main psychoactive compound of Cannabis sativa, interacts with CB1 receptors in the central nervous system, including in brain areas that help regulate emotions, such as the amygdala. Emotion-processing circuits are often overactive in chronic pain, and disrupting them might help modify pain perception, the investigators hypothesized.

To test this, they randomly assigned 65 adults with at least 3 months of abdominal pain related to chronic pancreatitis or abdominal surgery to receive oral placebo or THC tablets three times daily for 50-52 days. The 31 patients in the THC group received step-up dosing (3 mg per dose for 5 days, then 5 mg per dose for 5 days), followed by stable dosing at 8 mg per dose. Both groups continued their other prescribed analgesics as usual, including OxyContin, fentanyl, morphine, codeine, tramadol, paracetamol, anti-epileptics, and nonsteroidal anti-inflammatory drugs. All but two study participants were white; 25 were male and 24 were female.
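
As a rough illustration of the THC arm's titration, the sketch below tallies the per-dose and cumulative amounts implied by the schedule described above. It assumes that day 1 is the first treatment day, that each step lasts exactly 5 days, and that no doses were missed or reduced; the function names are hypothetical, and the totals are arithmetic on the stated schedule, not figures reported by the trial.

```python
# Hypothetical helpers: THC dose per administration on a given study day,
# following the step-up schedule described in the article.
DOSES_PER_DAY = 3  # tablets were taken three times daily


def dose_per_administration(day: int) -> int:
    """Return the THC dose in mg for one administration on study day `day` (1-based)."""
    if day < 1:
        raise ValueError("study days start at 1")
    if day <= 5:
        return 3  # days 1-5: 3 mg per dose
    if day <= 10:
        return 5  # days 6-10: 5 mg per dose
    return 8      # day 11 onward: stable 8 mg per dose


def cumulative_dose_mg(last_day: int) -> int:
    """Total THC (mg) through `last_day`, assuming no missed or reduced doses."""
    return sum(DOSES_PER_DAY * dose_per_administration(d) for d in range(1, last_day + 1))


for last_day in (50, 52):
    print(last_day, cumulative_dose_mg(last_day))  # prints "50 1080", then "52 1128"
```

Once the 8-mg step is reached, each additional day adds 24 mg, so the spread between day 50 and day 52 is small relative to the total.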

At baseline, all patients reported pain of at least 3 on an 11-point visual analogue scale (VAS). By days 50-52, average VAS scores had decreased by 1.6 points (40%) in the THC group and by 1.9 points (37%) in the placebo group (P = .9). A strong placebo effect is common in studies of visceral pain, but it did not prevent pregabalin from significantly outperforming placebo in another similarly designed randomized clinical trial by this research group in patients with chronic pancreatitis, the investigators noted.

The THC and placebo groups also resembled each other on various secondary outcome measures, including patient global impression of change, pain catastrophizing, pain-related anxiety, measures of depression and generalized anxiety, and subjective impressions of alertness, mood, feeling “high,” drowsiness, and difficulties in controlling thoughts. The only exception was that the THC group showed a trend toward improvement on the Short Form 36, compared with the placebo group (P = .051).

Pharmacokinetic analysis showed good oral absorption of THC. Dizziness, somnolence, and headache were common in both groups but were more frequent with THC than with placebo, as were nausea, dry mouth, and visual impairment. There were no serious treatment-related adverse events, although seven patients stopped THC because they could not tolerate the maximum dose.

Some evidence suggests that the shift from acute to chronic pain entails a transition from nociceptive to cognitive, affective, and autonomic sensitization, the researchers noted. “Therefore, an agent targeting particular brain areas related to the cognitive emotional feature of chronic pain, such as THC, might be efficacious in our chronic pain population, but might be better measured by using affective outcomes of pain,” they concluded.

The trial was supported by a grant from the European Union, the European Fund for Regional Development, and the Province of Gelderland. The THC was provided by Echo Pharmaceuticals, Nijmegen, the Netherlands. The investigators reported having no conflicts of interest.

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Key clinical point: Tetrahydrocannabinol did not improve chronic abdominal pain more than did placebo.

Major finding: By days 50-52, average VAS scores decreased by 1.6 points (40%) in the THC group and by 1.9 points (37%) in the placebo group (P = .9).

Data source: A phase II, placebo-controlled study of 65 patients with chronic abdominal pain for at least 3 months who received either placebo or delta-9-tetrahydrocannabinol (THC), titrated to 8 mg three times daily.

Disclosures: The trial was supported by a grant from the European Union, the European Fund for Regional Development, and the Province of Gelderland. The THC was provided by Echo Pharmaceuticals, Nijmegen, the Netherlands. The investigators reported having no conflicts of interest.

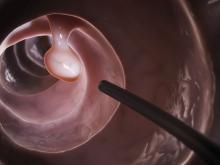

Study supports expanded definition of serrated polyposis syndrome

Patients with more than 10 colonic polyps, of which at least half were serrated, and their first-degree relatives had a risk of colorectal cancer similar to that of patients who met formal diagnostic criteria for serrated polyposis syndrome (SPS), according to a retrospective multicenter study published in the July issue of Gastroenterology (doi: 10.1053/j.gastro.2017.04.003).

Such patients “should be treated with the same follow-up procedures as those proposed for patients with SPS, and possibly the definition of SPS should be broadened to include this phenotype,” wrote Cecilia M. Egoavil, MD, Miriam Juárez, and their associates.

SPS increases the risk of colorectal cancer (CRC) and is considered a heritable disease, which mandates “strict surveillance” of first-degree relatives, the researchers noted. The World Health Organization defines SPS as at least five histologically diagnosed serrated lesions proximal to the sigmoid colon, at least two of which are at least 10 mm in diameter; any number of serrated polyps proximal to the sigmoid colon in a person with a first-degree relative with SPS; or more than 20 serrated polyps throughout the colon. This “arbitrary” definition is “somewhat restrictive, and possibly leads to underdiagnosis of this disease,” the researchers wrote. Patients with multiple serrated polyps who do not meet WHO SPS criteria might have a “phenotypically attenuated form of serrated polyposis,” they added.

For the study, the researchers compared 53 patients who met WHO SPS criteria with 145 patients who did not meet these criteria but had more than 10 polyps throughout the colon, of which at least 50% were serrated. In both groups, polyp counts were cumulative across successive colonoscopies. The data came from EPIPOLIP, a multicenter study of patients recruited from 24 hospitals in Spain in 2008 and 2009. At baseline, all patients had more than 10 adenomatous or serrated colonic polyps; patients with familial adenomatous polyposis, Lynch syndrome, hamartomatous polyposis, or inflammatory bowel disease, and those with only hyperplastic rectosigmoid polyps, were excluded.
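
The WHO criteria and the study's comparison-group definition can be read as a small decision rule. The sketch below is an illustration under stated assumptions, not the authors' method: the data structure and function names are hypothetical, polyp counts are assumed to be already cumulative across colonoscopies (as in the study), and the thresholds are taken from the criteria as summarized in this article.

```python
from dataclasses import dataclass


@dataclass
class PolypFindings:
    """Cumulative findings for one patient across colonoscopies (hypothetical structure)."""
    proximal_serrated: int       # serrated lesions proximal to the sigmoid colon
    proximal_serrated_10mm: int  # of those, lesions at least 10 mm in diameter
    total_serrated: int          # serrated polyps anywhere in the colon
    total_polyps: int            # all adenomatous or serrated polyps
    relative_with_sps: bool      # a first-degree relative has SPS


def meets_who_sps(f: PolypFindings) -> bool:
    """True if any one of the three WHO criteria, as summarized in the article, is met."""
    many_proximal = f.proximal_serrated >= 5 and f.proximal_serrated_10mm >= 2
    familial = f.relative_with_sps and f.proximal_serrated >= 1
    diffuse = f.total_serrated > 20
    return many_proximal or familial or diffuse


def study_group(f: PolypFindings) -> str:
    """Assign a patient to one of the study's two comparison groups, if either applies."""
    if meets_who_sps(f):
        return "SPS"
    # More than 10 polyps in total, at least half of them serrated.
    if f.total_polyps > 10 and 2 * f.total_serrated >= f.total_polyps:
        return "multiple serrated polyps"
    return "neither group"


print(study_group(PolypFindings(6, 2, 8, 12, False)))  # SPS
print(study_group(PolypFindings(3, 0, 7, 12, False)))  # multiple serrated polyps
```

Checking WHO criteria first mirrors the study design, in which the multiple-serrated-polyp group consisted only of patients who did not meet SPS criteria. Comparing `2 * total_serrated` with `total_polyps` expresses "at least half" without floating-point division.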

The prevalence of CRC was statistically similar between groups (P = .4). There were 12 (22.6%) cases among SPS patients (mean age at diagnosis, 50 years) and 41 (28.3%) cases (mean age, 59 years) among patients with multiple serrated polyps who did not meet SPS criteria. During a mean follow-up of 4.2 years, one (1.9%) SPS patient developed incident CRC, as did four (2.8%) patients with multiple serrated polyps without SPS. The corresponding standardized incidence ratios were 0.51 (95% confidence interval, 0.01-2.82) and 0.74 (95% CI, 0.20-1.90), respectively (P = .7). Standardized incidence ratios for CRC also did not significantly differ between first-degree relatives of patients with SPS (3.28; 95% CI, 2.16-4.77) and first-degree relatives of patients with multiple serrated polyps (2.79; 95% CI, 2.10-3.63; P = .5).
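
A standardized incidence ratio is the observed number of cases divided by the number expected from reference population rates. The article does not report expected counts or the interval method, so the sketch below makes two assumptions: expected counts are back-calculated as observed cases divided by the reported SIR, and the interval is the exact (Garwood) Poisson interval, which may not be what the authors used. The function names are hypothetical; only the Python standard library is used.

```python
import math


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu), summed term by term."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def _solve_decreasing(g, target: float, hi: float, iters: int = 200) -> float:
    """Bisection for mu in [0, hi] with g(mu) == target, where g decreases in mu."""
    lo = 0.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) > target:
            lo = mid  # g still above target, so the root lies at a larger mu
        else:
            hi = mid
    return (lo + hi) / 2


def sir_exact_ci(observed: int, expected: float, alpha: float = 0.05):
    """SIR = observed / expected, with an exact (Garwood) Poisson confidence interval."""
    bracket = 100.0 + 10.0 * observed  # generous upper bound for the Poisson mean
    if observed == 0:
        mu_lower = 0.0
    else:
        # Lower limit: P(X >= observed) = alpha/2, i.e. P(X <= observed - 1) = 1 - alpha/2
        mu_lower = _solve_decreasing(
            lambda mu: poisson_cdf(observed - 1, mu), 1 - alpha / 2, bracket)
    # Upper limit: P(X <= observed) = alpha/2
    mu_upper = _solve_decreasing(lambda mu: poisson_cdf(observed, mu), alpha / 2, bracket)
    return observed / expected, mu_lower / expected, mu_upper / expected


# Expected counts back-calculated as observed / reported SIR (an assumption).
for label, obs, sir in [("SPS", 1, 0.51), ("multiple serrated polyps", 4, 0.74)]:
    est, ci_low, ci_high = sir_exact_ci(obs, obs / sir)
    print(f"{label}: SIR {est:.2f} (95% CI {ci_low:.2f}-{ci_high:.2f})")
```

Run this way, the sketch gives intervals of roughly 0.01-2.84 and 0.20-1.89, close to the published 0.01-2.82 and 0.20-1.90. The small gaps are consistent with the reported SIRs being rounded to two decimals. With only one and four incident cases, the intervals are wide, which is why a nonsignificant P value here says little about true equivalence.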

A Kaplan-Meier analysis showed no differences in the incidence of CRC between groups during follow-up. The findings “confirm that a special surveillance strategy is needed for patients with multiple serrated polyps and their relatives, probably similar to the strategy currently recommended for SPS patients,” the researchers concluded. Because they defined the multiple-serrated-polyp group arbitrarily, they could not link CRC risk to a specific cutoff number or percentage of serrated polyps, they noted.

Funders included Instituto de Salud Carlos III, Fundación de Investigación Biomédica de la Comunidad Valenciana-Instituto de Investigación Sanitaria y Biomédica de Alicante, Asociación Española Contra el Cáncer, and Conselleria d’Educació de la Generalitat Valenciana. The investigators had no conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: Patients with more than 10 colonic polyps, at least 50% of them serrated, who did not meet formal diagnostic criteria for serrated polyposis syndrome, and their first-degree relatives, had a risk of colorectal cancer similar to that of patients with the syndrome and their relatives.

Major finding: Standardized incidence ratios were 0.51 (95% confidence interval, 0.01-2.82) in patients who met criteria for serrated polyposis syndrome and 0.74 (95% CI, 0.20-1.90) in patients with multiple serrated polyps who did not meet the criteria (P = .7).

Data source: A multicenter retrospective study of 53 patients who met criteria for serrated polyposis syndrome and 145 patients who did not meet these criteria but had more than 10 polyps throughout the colon, of which at least 50% were serrated.

Disclosures: Funders included Instituto de Salud Carlos III, Fundación de Investigación Biomédica de la Comunidad Valenciana–Instituto de Investigación Sanitaria y Biomédica de Alicante, Asociación Española Contra el Cáncer, and Conselleria d’Educació de la Generalitat Valenciana. The investigators had no conflicts of interest.

Steatosis linked to persistent ALT increase in hepatitis B

About one in five patients with chronic hepatitis B virus (HBV) infection had persistently elevated alanine aminotransferase (ALT) levels despite long-term treatment with tenofovir disoproxil fumarate, according to data from two phase III trials reported in the July issue of Clinical Gastroenterology and Hepatology (2017. doi: 10.1016/j.cgh.2017.01.032).

“Both host and viral factors, particularly hepatic steatosis and hepatitis B e antigen [HBeAg] seropositivity, are important contributors to this phenomenon,” Ira M. Jacobson, MD, of Mount Sinai Beth Israel Medical Center, New York, wrote with his associates. “Although serum ALT may indicate significant liver injury, this association is inconsistent, suggesting that relying on serum ALT alone is not sufficient to gauge either the extent of liver injury or the impact of antiviral therapy.”

Long-term treatment with newer antivirals such as tenofovir disoproxil fumarate (TDF) achieves complete viral suppression and improves liver histology in most cases of HBV infection. Transaminase levels are used to track long-term clinical response but sometimes remain elevated in the face of complete virologic response and regression of fibrosis. To explore predictors of this outcome, the researchers analyzed data from 471 chronic HBV patients receiving TDF 300 mg once daily for 5 years as part of two ongoing phase III trials (NCT00117676 and NCT00116805). At baseline, about 25% of patients were cirrhotic (Ishak fibrosis score greater than or equal to 5) and none had decompensated cirrhosis. A central laboratory analyzed ALT levels, which were up to 10 times the upper limit of normal in both HBeAg-positive and -negative patients and were at least twice the upper limit of normal in all HBeAg-positive patients.

After 5 years of TDF, ALT levels remained elevated in 87 (18%) patients. Patients with at least 5% (grade 1) steatosis at baseline were significantly more likely to have persistent ALT elevation than were those with less or no steatosis (odds ratio, 2.2; 95% confidence interval, 1.03-4.9; P = .04). Grade 1 or higher steatosis at year 5 also was associated with persistent ALT elevation (OR, 3.4; 95% CI, 1.6-7.4; P = .002). Other significant correlates included HBeAg seropositivity (OR, 3.3; 95% CI, 1.7-6.6; P less than .001) and age 40 years or younger (OR, 2.1; 95% CI, 1.01-4.3; P = .046). Strikingly, half of HBeAg-positive patients with steatosis at baseline had elevated ALT at year 5, the investigators said.

Because many patients whose ALT values fall within commercial laboratory reference ranges have chronic active necroinflammation or fibrogenesis, the researchers also performed a sensitivity analysis using a stricter, previously recommended definition of ALT normalization: no more than 30 U/L for men and 19 U/L for women (Ann Intern Med. 2002;137:1-10). By this definition, 47% of patients had persistently elevated ALT despite effective virologic suppression, and the only significant predictor of persistent ALT elevation was grade 1 or higher steatosis at year 5 (OR, 6.2; 95% CI, 2.3-16.4; P less than .001). Younger age and HBeAg positivity plus age were no longer significant.
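
The stricter definition amounts to a sex-specific threshold rule. A minimal sketch, assuming ALT is measured in U/L and sex is coded simply as "male" or "female"; the function names and the example values are hypothetical and are not study data.

```python
# Upper limits of ALT (U/L) for "normalization" under the stricter definition
# cited in the article (Ann Intern Med. 2002;137:1-10).
PRATI_ULN_U_PER_L = {"male": 30, "female": 19}


def alt_normalized(alt_u_per_l: float, sex: str) -> bool:
    """True if ALT is no more than the sex-specific threshold."""
    return alt_u_per_l <= PRATI_ULN_U_PER_L[sex]


def persistent_elevation_fraction(patients: list[tuple[float, str]]) -> float:
    """Fraction of (alt_u_per_l, sex) records whose ALT exceeds the threshold."""
    if not patients:
        raise ValueError("no patients supplied")
    elevated = sum(not alt_normalized(alt, sex) for alt, sex in patients)
    return elevated / len(patients)


# Hypothetical year-5 values, for illustration only.
example = [(25, "male"), (25, "female"), (18, "female"), (42, "male")]
print(persistent_elevation_fraction(example))  # 0.5
```

The example shows why the stricter cutoffs reclassify patients: an ALT of 25 U/L counts as normalized for a man but as persistently elevated for a woman, which helps explain how the share with persistent elevation rose from 18% to 47%.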

Hepatic steatosis is common both in the general population and in chronic HBV infection, and it often raises serum transaminase levels, the researchers noted. Although past work has linked a PNPLA3 single nucleotide polymorphism to obesity, metabolic syndrome, and hepatic steatosis, this polymorphism was not a significant factor in their study, possibly because many patients lacked genotype data, they added. “Larger longitudinal studies are warranted to further explore this factor and its potential effect on the biochemical response to antiviral treatment in [chronic HBV] patients,” they concluded.

Gilead Sciences sponsored the study. Dr. Jacobson disclosed consultancy, honoraria, and research ties to Gilead and several other pharmaceutical companies.

In most treated patients, antiviral therapy for chronic hepatitis B virus infection suppresses rather than eradicates the infection. Even so, long-term treatment produces substantial histologic improvement, including regression of fibrosis, and reduces complications.

However, as Jacobson et al. report in a histologic follow-up of 471 HBV patients treated long term, aminotransferase elevation persisted in 18%. On multivariate analysis, factors implicated in unresolved biochemical dysfunction included HBeAg seropositivity, age 40 years or younger, and steatosis both at entry and at 5-year follow-up. When the stricter normal ranges for aminotransferases proposed by Prati were applied, namely 30 U/L for men and 19 U/L for women, steatosis was the only association with hepatic dysfunction that persisted. This suggests that metabolic rather than viral factors underlie persistent biochemical dysfunction in patients with chronic HBV infection. Steatosis is also a frequent finding on liver biopsy in patients with chronic HCV infection.

Importantly, HCV-specific mechanisms have been implicated in the accumulation of steatosis in infected patients, as the virus may interfere with host lipid metabolism. HCV genotype 3 has a marked propensity to cause fat accumulation in hepatocytes, which appears to regress with successful antiviral therapy. In the interferon era, hepatic steatosis had been identified as a predictor of nonresponse to therapy for HCV. In patients with chronic viral hepatitis, attention needs to be paid to cofactors in liver disease – notably the metabolic syndrome – particularly because successfully treated patients are now discharged from the care of specialists.

Paul S. Martin, MD, is chief, division of hepatology, professor of medicine, University of Miami Health System, Fla. He has been a consultant and investigator for Gilead, BMS, and Merck.

About one in five patients with chronic hepatitis B virus (HBV) infection had persistently elevated alanine aminotransferase (ALT) levels despite long-term treatment with tenofovir disoproxil fumarate, according to data from two phase III trials reported in the July issue of Clinical Gastroenterology and Hepatology (2017. doi: 10.1016/j.cgh.2017.01.032).

“Both host and viral factors, particularly hepatic steatosis and hepatitis B e antigen [HBeAg] seropositivity, are important contributors to this phenomenon,” Ira M. Jacobson, MD, of Mount Sinai Beth Israel Medical Center, New York, wrote with his associates. “Although serum ALT may indicate significant liver injury, this association is inconsistent, suggesting that relying on serum ALT alone is not sufficient to gauge either the extent of liver injury or the impact of antiviral therapy.”

Long-term treatment with newer antivirals such as tenofovir disoproxil fumarate (TDF) achieves complete viral suppression and improves liver histology in most patients with HBV infection. Transaminase levels are used to track long-term clinical response but sometimes remain elevated despite complete virologic response and regression of fibrosis. To explore predictors of this outcome, the researchers analyzed data from 471 patients with chronic HBV infection who received TDF 300 mg once daily for 5 years as part of two ongoing phase III trials (NCT00117676 and NCT00116805). At baseline, about 25% of patients were cirrhotic (Ishak fibrosis score of 5 or higher), and none had decompensated cirrhosis. ALT levels, measured by a central laboratory, were up to 10 times the upper limit of normal in both HBeAg-positive and HBeAg-negative patients and at least twice the upper limit of normal in all HBeAg-positive patients.

After 5 years of TDF, ALT levels remained elevated in 87 patients (18%). Patients with at least 5% (grade 1) steatosis at baseline were significantly more likely to have persistent ALT elevation than were those with less or no steatosis (odds ratio, 2.2; 95% confidence interval, 1.03-4.9; P = .04). Steatosis of grade 1 or higher at year 5 also was associated with persistent ALT elevation (OR, 3.4; 95% CI, 1.6-7.4; P = .002). Other significant correlates included HBeAg seropositivity (OR, 3.3; 95% CI, 1.7-6.6; P less than .001) and age 40 years or younger (OR, 2.1; 95% CI, 1.01-4.3; P = .046). Strikingly, half of HBeAg-positive patients with steatosis at baseline had elevated ALT at year 5, the investigators said.

Because many patients whose ALT values fall within commercial laboratory reference ranges have chronic active necroinflammation or fibrogenesis, the researchers performed a sensitivity analysis using a stricter, previously recommended definition of ALT normalization: no more than 30 U/L for men and 19 U/L for women (Ann Intern Med. 2002;137:1-10). In this analysis, 47% of patients had persistently elevated ALT despite effective virologic suppression, and the only significant predictor of persistent ALT elevation was steatosis of grade 1 or higher at year 5 (OR, 6.2; 95% CI, 2.3-16.4; P less than .001). Younger age and HBeAg positivity were no longer significant.
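To illustrate how the stricter cutoffs reclassify patients, the minimal sketch below flags an ALT value as elevated under the sex-specific limits cited in the sensitivity analysis (30 U/L for men, 19 U/L for women). The function and constant names are hypothetical, and passing sex as a string is an illustrative assumption; this is not the investigators' analysis code.

```python
# Hypothetical constant: stricter sex-specific upper limits of normal for ALT (U/L),
# as cited in the sensitivity analysis (30 U/L for men, 19 U/L for women).
STRICT_ALT_ULN = {"male": 30, "female": 19}


def alt_elevated_strict(alt_u_per_l: float, sex: str) -> bool:
    """Return True if ALT exceeds the stricter sex-specific limit.

    Normalization was defined as ALT of no more than the limit,
    so only values strictly above it count as elevated.
    """
    try:
        limit = STRICT_ALT_ULN[sex.lower()]
    except KeyError:
        raise ValueError(f"sex must be 'male' or 'female', got {sex!r}") from None
    return alt_u_per_l > limit


# The same ALT value of 25 U/L is normal for a man but elevated for a woman.
print(alt_elevated_strict(25, "male"))    # False
print(alt_elevated_strict(25, "female"))  # True
```

Because the same value can be normal for one sex and elevated for the other, applying these limits moves many patients from "normalized" to "persistently elevated," which is consistent with the jump to 47% reported above.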

Hepatic steatosis is common both in the general population and in chronic HBV infection, and it often raises serum transaminase levels, the researchers noted. Although past work has linked a PNPLA3 single nucleotide polymorphism to obesity, metabolic syndrome, and hepatic steatosis, this polymorphism was not a significant predictor in their study, possibly because many patients lacked genotype data, they added. “Larger longitudinal studies are warranted to further explore this factor and its potential effect on the biochemical response to antiviral treatment in [chronic HBV] patients,” they concluded.

Gilead Sciences sponsored the study. Dr. Jacobson disclosed consultancy, honoraria, and research ties to Gilead and several other pharmaceutical companies.

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Key clinical point: In patients with chronic hepatitis B virus infection, steatosis was significantly associated with persistently elevated alanine aminotransferase (ALT) levels despite successful treatment with tenofovir disoproxil fumarate.

Major finding: At baseline and after 5 years of treatment, steatosis of grade 1 (5%) or more predicted persistent ALT elevation with odds ratios of 2.2 (P = .04) and 3.4 (P = .002), respectively.

Data source: Two phase III trials of tenofovir disoproxil fumarate in 471 patients with chronic hepatitis B virus infection.

Disclosures: Gilead Sciences sponsored the study. Dr. Jacobson disclosed consultancy, honoraria, and research ties to Gilead and several other pharmaceutical companies.

Improved adenoma detection rate found protective against interval cancers, death

An improved annual adenoma detection rate was associated with a significantly decreased risk of interval colorectal cancer (ICRC) and subsequent death in a national prospective cohort study published in the July issue of Gastroenterology (doi: 10.1053/j.gastro.2017.04.006).

Source: American Gastroenterological Association

This is the first study to show a significant inverse relationship between an improved annual adenoma detection rate (ADR) and ICRC or subsequent death, Michal F. Kaminski, MD, PhD, of the Institute of Oncology, Warsaw, wrote with his associates.

The rates of these outcomes were lowest when endoscopists achieved and maintained ADRs above 24.6%, which supports the currently recommended performance target of 25% for a mixed male-female population, they reported (Am J Gastroenterol. 2015;110:72-90).

This study included 294 endoscopists and 146,860 individuals who underwent screening colonoscopy as part of a national cancer prevention program in Poland between 2004 and 2008. Endoscopists received annual feedback based on quality benchmarks to spur improvements in colonoscopy performance, and all participated for at least 2 years. For each endoscopist, investigators categorized annual ADRs based on quintiles for the entire data set. “Improved ADR” was defined as keeping annual ADR within the highest quintile (above 24.6%) or as increasing annual ADR by at least one quintile, compared with baseline.
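The “improved ADR” definition is essentially a classification rule applied to each endoscopist's yearly quintile. The minimal sketch below rests on several assumptions the article does not spell out: quintiles are coded 1 (lowest) to 5 (highest), the first year is the baseline, “keeping” ADR in the top quintile means staying there every year, and a rise of at least one quintile in any later year counts as an increase. The function name is hypothetical.

```python
from typing import Sequence

# Quintiles coded 1 (lowest) to 5 (highest); only the top-quintile cutoff
# (ADR above 24.6%) is reported, so raw ADRs are not converted here.
HIGHEST_QUINTILE = 5


def improved_adr(annual_quintiles: Sequence[int]) -> bool:
    """Classify an endoscopist's yearly ADR quintiles as "improved".

    Assumptions: the first entry is the baseline year, and a rise of at
    least one quintile in any later year counts as an increase.
    """
    if len(annual_quintiles) < 2:
        raise ValueError("need a baseline year plus at least one follow-up year")
    if any(q not in range(1, 6) for q in annual_quintiles):
        raise ValueError("quintiles must be integers from 1 to 5")
    baseline, *follow_up = annual_quintiles
    # Rule 1: stayed within the highest quintile in every year.
    kept_top = all(q == HIGHEST_QUINTILE for q in annual_quintiles)
    # Rule 2: rose by at least one quintile relative to baseline.
    rose = any(q >= baseline + 1 for q in follow_up)
    return kept_top or rose


print(improved_adr([5, 5, 5]))  # True: kept in the top quintile
print(improved_adr([2, 3, 2]))  # True: rose one quintile in year 2
print(improved_adr([3, 3, 2]))  # False: never rose above baseline
print(improved_adr([5, 4, 5]))  # False: left the top quintile, cannot rise above it
```

Note that under the first rule, an endoscopist who starts in the top quintile can only qualify by staying there, since no higher quintile exists.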

Based on this definition, 219 endoscopists (75%) improved their ADR during a median of 5.8 years of follow-up (interquartile range, 5-7.2 years). In all, 168 interval CRCs were diagnosed, 44 of which led to death. After controlling for age, sex, and family history of colorectal cancer, patients whose endoscopists improved their ADRs were significantly less likely to develop interval CRC (adjusted hazard ratio, 0.6; 95% confidence interval, 0.5-0.9; P = .006) or to die of it (aHR, 0.50; 95% CI, 0.3-0.95; P = .04) than were patients whose endoscopists did not improve their ADRs.

Maintaining ADR in the highest quintile (above 24.6%) throughout follow-up was associated with an even lower risk of interval CRC (HR, 0.3; 95% CI, 0.1-0.6; P = .003) and death (HR, 0.2; 95% CI, 0.1-0.6; P = .003), the researchers reported. In absolute terms, the rate of interval CRC fell from 25.3 per 100,000 person-years of follow-up to 7.1 when endoscopists eventually reached the highest ADR quintile, and to 4.5 when they remained in the highest quintile throughout follow-up. Rates of colonic perforation remained stable even though most endoscopists raised their ADRs.
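The absolute rates reported for interval CRC can be restated as crude rate differences and relative reductions. This is simple arithmetic on the published figures, assuming 25.3 per 100,000 person-years is the reference rate for both comparisons; these unadjusted numbers are not the investigators' hazard ratios and should not be read as such.

```latex
\[
\begin{aligned}
\text{Rate difference (top quintile eventually)} &= 25.3 - 7.1 = 18.2 \text{ per } 100{,}000 \text{ person-years}\\
\text{Relative reduction} &= 1 - \tfrac{7.1}{25.3} \approx 0.72\\
\text{Rate difference (top quintile throughout)} &= 25.3 - 4.5 = 20.8 \text{ per } 100{,}000 \text{ person-years}\\
\text{Relative reduction} &= 1 - \tfrac{4.5}{25.3} \approx 0.82
\end{aligned}
\]
```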

Together, these findings “prove the causal relationship between endoscopists’ ADRs and the likelihood of being diagnosed with, or dying from, interval CRC,” the investigators concluded. They noted that the national cancer registry in Poland is thought to miss about 10% of cases, but that this proportion was not thought to change over time. They also lacked data on colonoscope withdrawal times and had no control group to definitively show that benchmark-based feedback was responsible for the improved ADRs.

Funders included the Foundation of Polish Science, the Innovative Economy Operational Programme, the Polish Foundation of Gastroenterology, the Polish Ministry of Health, and the Polish Ministry of Science and Higher Education. The investigators reported having no relevant conflicts of interest.

The U.S. Multi-Society Task Force on Colorectal Cancer proposed the adenoma detection rate (ADR) as a colonoscopy quality measure in 2002. The rationale for a new measure was emerging evidence of highly variable adenoma detection and cancer prevention among colonoscopists. This variability, consistently verified in subsequent studies, casts a pall of severe operator dependence over colonoscopy. Landmark studies by Kaminski et al. in 2010 and Corley et al. in 2014 showed that doctors with higher ADRs provide their patients with much greater protection against interval colorectal cancer (CRC).

Now Kaminski and colleagues from Poland have delivered a second landmark study, demonstrating for the first time that improving ADR prevents CRCs. We now have strong evidence that ADR predicts the level of cancer prevention, that ADR improvement is achievable, and that improving ADR further prevents CRCs and CRC deaths. Thanks to this study, ADR has come full circle. Measuring and improving detection is now a fully validated concept that is essential to modern colonoscopy. In 2017, ADR measurement is mandatory for all practicing colonoscopists who are serious about CRC prevention. Widely accepted tools to improve ADR include ADR measurement and reporting, split or same-day preparations, lesion recognition and optimal technique, high-definition imaging, double examination (particularly of the right colon), patient rotation during withdrawal, chromoendoscopy, mucosal exposure devices (caps, cuffs, balloons, etc.), and water exchange. Tools that are emerging or under study include brighter forms of electronic chromoendoscopy and videorecording.

Douglas K. Rex, MD, is professor of medicine, division of gastroenterology/hepatology, at Indiana University, Indianapolis.* He has no relevant conflicts of interest.

Correction, 6/20/17: An earlier version of this article misstated Dr. Rex's affiliation.

FROM GASTROENTEROLOGY

Key clinical point: An improved adenoma detection rate was associated with a significantly reduced risk of interval colorectal cancer and subsequent death.

Major finding: Adjusted hazard ratios were 0.6 for developing ICRC (95% CI, 0.5-0.9; P = .006) and 0.50 for dying of ICRC (95% CI, 0.3-0.95; P = .04).

Data source: A prospective registry study of 294 endoscopists and 146,860 individuals who underwent screening colonoscopy as part of a national screening program between 2004 and 2008.

Disclosures: Funders included the Foundation of Polish Science, the Innovative Economy Operational Programme, the Polish Foundation of Gastroenterology, the Polish Ministry of Health, and the Polish Ministry of Science and Higher Education. The investigators reported having no relevant conflicts of interest.

Liraglutide produced cardiometabolic benefits in patients with schizophrenia

SAN DIEGO – The glucagon-like peptide-1 (GLP-1) receptor agonist liraglutide significantly improved glucose tolerance, glycemic control, and other cardiometabolic risk factors in overweight or obese prediabetic patients receiving clozapine or olanzapine for schizophrenia, according to the findings of a randomized, double-blind, placebo-controlled trial.

Liraglutide was generally well tolerated and conferred cardiometabolic benefits similar to those in past studies of patients who were not on antipsychotic therapy, said Louise Vedtofte, PhD, of the University of Copenhagen. After 16 weeks of treatment, a 75-gram oral glucose tolerance test showed that 2-hour plasma glucose levels were 23% lower with liraglutide compared with placebo (P less than .001), she said at the annual scientific sessions of the American Diabetes Association.

Liraglutide patients also lost an average of 5.2 kg more body weight, cut 4.1 cm more from their waist circumference, and were significantly more likely to normalize their fasting plasma glucose and hemoglobin A1c levels compared with the placebo group (P less than .001 for each comparison), she said. The report by Dr. Vedtofte and her associates was published in JAMA Psychiatry simultaneously with the presentation at the meeting (2017 June 10. doi: 10.1001/jamapsychiatry.2017.1220).

Antipsychotics are core therapies in schizophrenia spectrum disorders but also cause increased appetite, weight gain, and cardiometabolic disturbances, noted Dr. Vedtofte. About a third of patients with schizophrenia who receive antipsychotics develop metabolic syndrome, and some 15% go on to develop diabetes. Clozapine and olanzapine cause more weight gain than do other antipsychotics, but swapping either medication for a more weight-neutral alternative can threaten the well-being of patients with schizophrenia, she emphasized. “Olanzapine and clozapine are good for the patients’ mental health, but are detrimental for their somatic health.”

As a GLP-1 receptor agonist, liraglutide inhibits appetite and food intake, and the Food and Drug Administration has approved treatment at doses of 1.8 mg for type 2 diabetes and 3 mg once daily for obesity. To explore how liraglutide affects patients on antipsychotics, Dr. Vedtofte and her associates randomly assigned 103 adults with schizophrenia spectrum disorders from two clinical sites in Denmark to receive placebo or 0.6 mg liraglutide subcutaneously once daily, up-titrated by 0.6 mg weekly to a maximum dose of 1.8 mg. At baseline, all patients were receiving stable treatment with clozapine, olanzapine, or both; had a body mass index of at least 27 kg/m2; and were prediabetic, with fasting plasma glucose levels of 110 to 125 mg/dL, HbA1c levels of 6.1%-6.4%, or 2-hour plasma glucose levels of 140 mg/dL or higher during the 75-gram oral glucose tolerance test.
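The metabolic entry criteria combine a required BMI threshold with any one of three prediabetes markers. The minimal sketch below encodes only that logic as described here; it does not check the antipsychotic requirement or any other trial criteria not reported in this article, and the record type, field names, and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Screening:
    """Hypothetical screening record; missing measurements are None."""
    bmi: float                                # kg/m2
    fasting_glucose: Optional[float] = None   # mg/dL
    hba1c_percent: Optional[float] = None     # %
    ogtt_2h_glucose: Optional[float] = None   # mg/dL, 75-gram OGTT


def meets_metabolic_criteria(s: Screening) -> bool:
    """BMI of at least 27 kg/m2 AND any one prediabetes marker."""
    if s.bmi < 27:
        return False
    fpg_ok = s.fasting_glucose is not None and 110 <= s.fasting_glucose <= 125
    a1c_ok = s.hba1c_percent is not None and 6.1 <= s.hba1c_percent <= 6.4
    ogtt_ok = s.ogtt_2h_glucose is not None and s.ogtt_2h_glucose >= 140
    return fpg_ok or a1c_ok or ogtt_ok


print(meets_metabolic_criteria(Screening(bmi=30.0, fasting_glucose=115)))  # True
print(meets_metabolic_criteria(Screening(bmi=26.5, fasting_glucose=115)))  # False
print(meets_metabolic_criteria(
    Screening(bmi=28.0, hba1c_percent=6.0, ogtt_2h_glucose=150)))          # True
```

The "or" between markers matters: in the third example, HbA1c alone would not qualify, but the 2-hour glucose value does.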

Fully 93% of patients completed the study. At week 16, after the researchers controlled for age, sex, illness duration, BMI, Clinical Global Impressions Scale severity score, and antipsychotic treatment, glucose tolerance had improved significantly in the liraglutide group (P less than .001) but not in the placebo group (P less than .001 for the difference between groups). Glucose tolerance normalized in 30 patients who received liraglutide (64%), compared with 8 who received placebo (16%; P less than .001), Dr. Vedtofte said.
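The normalization rates above can be restated as an absolute between-group difference and a rough number needed to treat (NNT). This sketch works only from the rounded percentages quoted here (64% and 16%), so its outputs are approximate, and the NNT framing is an illustration rather than a figure the investigators reported.

```python
import math


def absolute_difference(p_treated: float, p_control: float) -> float:
    """Absolute difference in proportions: treated minus control."""
    return p_treated - p_control


def number_needed_to_treat(p_treated: float, p_control: float) -> int:
    """Patients treated per one additional responder, rounded up by convention."""
    diff = absolute_difference(p_treated, p_control)
    if diff <= 0:
        raise ValueError("NNT is only finite when treatment outperforms control")
    return math.ceil(1 / diff)


# Rounded normalization rates quoted in the article.
liraglutide_rate, placebo_rate = 0.64, 0.16

print(f"Absolute difference: {absolute_difference(liraglutide_rate, placebo_rate):.0%}")  # 48%
print(f"Rate ratio: {liraglutide_rate / placebo_rate:.1f}")  # 4.0
print(f"Approximate NNT: {number_needed_to_treat(liraglutide_rate, placebo_rate)}")  # 3
```

With the exact counts and group sizes from the published report, the same functions would give unrounded figures.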

Besides reducing weight and waist circumference, liraglutide was associated with reductions in systolic blood pressure (average, 5 mm Hg), visceral fat (0.25 kg), and low-density lipoprotein levels (15 mg/dL). These changes occurred even though liraglutide did not significantly alter C-peptide or glucagon secretion in the multivariate analysis of glucose tolerance test results, Dr. Vedtofte said. “The rate of nausea was 62% in liraglutide patients and 32% with placebo, but nausea was transient and did not explain the weight loss in subgroup analyses,” she added.

Liraglutide did not alter clinical liver function, but treated patients experienced a small (1.3 U/L), statistically significant rise in amylase levels. Rates of other adverse events were similar between groups except that patients were more likely to experience orthostatic hypotension on liraglutide (8.2%) than on placebo (0%; P = .04). Treatment with liraglutide did not significantly increase the likelihood of serious somatic or psychiatric adverse events, but one patient died while on therapy. “This patient was a man in his 60s with longstanding schizophrenia who was admitted to the hospital with vomiting and diarrhea, and died 3 days later,” Dr. Vedtofte said. Autopsy did not reveal the cause of death, but the patient had shown no change in mental status or other signs of adverse effects of treatment, she added.

Novo Nordisk funded the study and provided liraglutide and placebo injections. Additional support came from Capital Region Psychiatry Research Group, the foundation of King Christian X of Denmark, and the Lundbeck Foundation. Dr. Vedtofte had no disclosures.

SAN DIEGO – The glucagon-like peptide-1 (GLP-1) receptor agonist liraglutide significantly improved glucose tolerance, glycemic control, and other cardiometabolic risk factors in overweight or obese prediabetic patients receiving clozapine or olanzapine for schizophrenia, according to the findings of a randomized, double-blind, placebo-controlled trial.

Liraglutide was generally well tolerated and conferred cardiometabolic benefits similar to those in past studies of patients who were not on antipsychotic therapy, said Louise Vedtofte, PhD, of the University of Copenhagen. After 16 weeks of treatment, a 75-gram oral glucose tolerance test showed that 2-hour plasma glucose levels were 23% lower with liraglutide compared with placebo (P less than .001), she said at the annual scientific sessions of the American Diabetes Association.

Liraglutide patients also lost an average of 5.2 kg more body weight, cut 4.1 cm more from their waist circumference, and were significantly more likely to normalize their fasting plasma glucose and hemoglobin A1c levels compared with the placebo group (P less than .001 for each comparison), she said. The report by Dr. Vedtofte and her associates was published in JAMA Psychiatry simultaneously with the presentation at the meeting (2017 June 10. doi: 10.1001/jamapsychiatry.2017.1220).

AT THE ADA ANNUAL SCIENTIFIC SESSIONS

Key clinical point: