VIDEO: Study supports close follow-up of patients with high-risk adenomas plus serrated polyps

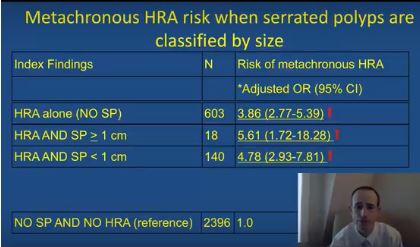

The simultaneous colonoscopic presence of serrated polyps and high-risk adenomas was associated with a fivefold increase in the odds of metachronous high-risk adenomas in a large population-based registry study reported in Gastroenterology (2017. doi: 10.1053/j.gastro.2017.09.011).

The data “support the recommendation that individuals with large and high-risk serrated lesions require closer surveillance,” said Joseph C. Anderson, MD, of White River Junction Department of Veterans Affairs Medical Center, Vt., with his associates. When discounting size and histology, the presence of serrated polyps alone was not associated with an increased risk for metachronous high-risk adenoma, they also reported. Although serrated polyps are important precursors of colorectal cancer, relevant longitudinal surveillance data are sparse. Therefore, the investigators studied 5,433 adults who underwent index and follow-up colonoscopies a median of 4.9 years apart and were tracked in the population-based New Hampshire Colonoscopy Registry. The cohort had a median age of 61 years and half of individuals were male.

SOURCE: AMERICAN GASTROENTEROLOGICAL ASSOCIATION

After adjusting for age, sex, smoking status, body mass index, and median interval between colonoscopies, individuals were at significantly increased risk of metachronous high-risk adenoma if their baseline colonoscopy showed high-risk adenoma and synchronous serrated polyps (odds ratio, 5.6; 95% confidence interval, 1.7-18.3), high-risk adenoma with synchronous sessile serrated adenomas (or polyps) or traditional serrated adenomas (OR, 16.0; 95% CI, 7.0-37.0), or high-risk adenoma alone (OR, 3.9; 95% CI, 2.8-5.4), vs. participants with no findings.

The researchers also found that the index presence of large (at least 1-cm) serrated polyps greatly increased the likelihood of finding large metachronous serrated polyps on subsequent colonoscopy (OR, 14.0; 95% CI, 5.0-40.9). “This has clinical relevance, since previous studies have demonstrated an increased risk for colorectal cancer in individuals with large serrated polyps,” the researchers wrote. “However, this increased risk may occur over a protracted time period of 10 years or more, and addressing variation in serrated polyp detection rates and completeness of resection may be more effective than a shorter surveillance interval at reducing risk in these individuals.”

The index presence of sessile serrated adenomas or polyps, or traditional serrated adenomas, also predicted the subsequent development of large serrated polyps (OR, 9.7; 95% CI, 3.6-25.9). The study did not examine polyp location or morphology (flat versus polypoid), but the association might be related to right-sided or flat lesions, which colonoscopists are more likely to miss or to incompletely excise than more defined polypoid lesions, the researchers commented. “Additional research is needed to further clarify the associations between index patient characteristics, polyp location, size, endoscopic appearance and histology, and the metachronous risk of advanced lesions and colorectal cancer in order to refine current surveillance recommendations for individuals undergoing colonoscopy,” they commented.

The study spanned January 2004 to June 2015, and awareness about the importance of serrated polyps rose during this period, they also noted.

The National Cancer Institute and the Norris Cotton Cancer Center provided funding. The researchers reported having no conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: High-risk adenomas and the synchronous presence of serrated polyps significantly increased the risk of metachronous high-risk adenomas.

Major finding: Compared with individuals with unremarkable colonoscopies, the odds ratio was 5.6 after adjusting for age, sex, smoking status, body mass index, and median interval between colonoscopies.

Data source: Analyses of index and follow-up colonoscopies of 5,433 individuals from a population-based surveillance registry.

Disclosures: The National Cancer Institute and the Norris Cotton Cancer Center provided funding. The researchers reported having no conflicts of interest.

Adjusting fecal immunochemical test thresholds improved their performance

Physicians can minimize the heterogeneity of fecal immunochemical colorectal cancer screening tests by adjusting thresholds for positivity, according to researchers. The report is in the January issue of Gastroenterology (doi: 10.1053/j.gastro.2017.09.018).

“Rather than simply using thresholds recommended by the manufacturer, screening programs should choose thresholds based on intended levels of specificity and manageable positivity rates,” wrote PhD student Anton Gies of the German Cancer Research Center and the National Center for Tumor Diseases in Heidelberg, Germany, with his associates.

The investigators directly compared nine different fecal immunochemical assays using stool samples from 516 individuals, of whom 216 had advanced adenoma or colorectal cancer. Using thresholds recommended by manufacturers (2-17 mcg Hb/g feces) produced widely ranging sensitivities (22%-46%) and specificities (86%-98%). Using a uniform threshold of 15 mcg Hb/g feces narrowed the range of specificity (94%-98%), but sensitivities remained quite variable (16%-34%). Adjusting detection thresholds to obtain preset specificities (99%, 97%, or 93%) greatly narrowed both sensitivity (14%-18%, 21%-24%, and 30%-35%, respectively) and rates of positivity (2.8%-3.4%, 5.8%-6.1%, and 10%-11%, respectively), the researchers reported.
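The idea of adjusting a quantitative test's threshold to reach a preset specificity can be illustrated with a minimal sketch. This is not the investigators' analysis code: the function names and the hemoglobin values below are hypothetical, and the sketch assumes a test counts as positive when the measured concentration is at or above the threshold.

```python
import math


def specificity(control_values, threshold):
    """Fraction of participants without advanced neoplasia who test negative."""
    negatives = sum(1 for v in control_values if v < threshold)
    return negatives / len(control_values)


def sensitivity(case_values, threshold):
    """Fraction of participants with advanced neoplasia who test positive."""
    positives = sum(1 for v in case_values if v >= threshold)
    return positives / len(case_values)


def positivity_rate(all_values, threshold):
    """Fraction of all participants who test positive."""
    return sum(1 for v in all_values if v >= threshold) / len(all_values)


def threshold_for_specificity(control_values, target):
    """Lowest threshold whose specificity reaches the target.

    Candidates are the observed control values plus infinity, so a
    threshold is always found (infinity gives a specificity of 1.0).
    """
    candidates = sorted(set(control_values)) + [math.inf]
    for t in candidates:
        if specificity(control_values, t) >= target:
            return t


# Synthetic hemoglobin concentrations (mcg Hb/g feces), for illustration only.
controls = [0, 0, 1, 2, 3, 4, 5, 8, 12, 40]
cases = [3, 10, 45, 60, 100]

t = threshold_for_specificity(controls, 0.9)
print("threshold:", t)
print("sensitivity:", sensitivity(cases, t))
print("positivity rate:", positivity_rate(controls + cases, t))
```

Running the same routine separately for each assay, with the same target specificity, would give each assay its own threshold; the sensitivities and positivity rates at those thresholds can then be compared on a like-for-like basis.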

Increasingly, physicians are using fecal immunochemical testing to screen for colorectal neoplasia. In a prior study (Ann Intern Med. 2009 Feb 3;150[3]:162-90) investigators evaluated the diagnostic performance of six qualitative point-of-care fecal immunochemical tests among screening colonoscopy patients in Germany, and found that the tests had highly variable sensitivities and specificities for the detection of colorectal neoplasia. Not surprisingly, the most sensitive tests were the least specific, and vice versa, which is the problem with using fixed thresholds in qualitative fecal immunochemical tests, the researchers asserted.

Quantitative fecal immunochemical tests are more flexible than qualitative assays because users can adjust thresholds based on fecal hemoglobin concentrations. However, very few studies had directly compared sensitivities and specificities among quantitative fecal immunochemical tests, and “it is unclear to what extent differences ... reflect true heterogeneity in test performance or differences in study populations or varying pre-analytical conditions,” the investigators wrote. Patients in their study underwent colonoscopies in Germany between 2005 and 2010, and fecal samples were stored at –80 °C until analysis. The researchers calculated test sensitivities and specificities by using colonoscopy and histology reports evaluated by blinded, trained research assistants.

“Apparent heterogeneity in diagnostic performance of quantitative fecal immunochemical tests can be overcome to a large extent by adjusting thresholds to yield defined levels of specificity or positivity rates,” the investigators concluded. Only 16 patients in this study had colorectal cancer, which made it difficult to pinpoint test sensitivity for this finding, they noted. However, they found similar sensitivity estimates for colorectal cancer in an ancillary clinical study.

Manufacturers provided test kits free of charge. There were no external funding sources, and the researchers reported having no conflicts of interest.

The fecal immunochemical test (FIT) is an important option for colorectal cancer screening, endorsed by guidelines and effective for mass screening using mailed outreach. Patients offered FIT or a choice between FIT and colonoscopy are more likely to be screened.

In the United States, FIT is a qualitative test (reported as positive or negative), based on Food and Drug Administration regulations, in an attempt to simplify clinical decision making. In Europe, FIT has been used quantitatively, with adjustable positivity rate and sensitivity pegged to available colonoscopy resources. Adding complexity, there are multiple FIT brands, each with varying performance, even at similar hemoglobin concentrations. Each brand has a different sensitivity, specificity, and positivity rate, because reagents, buffers, and collection devices vary. Ambient temperature during mailing and transport time to processing labs can also affect test performance.

Theodore R. Levin, MD, is chief of gastroenterology, Kaiser Permanente Medical Center, Walnut Creek, Calif. He has no conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: To minimize the heterogeneity of fecal immunochemical screening tests, adjust thresholds to produce a predetermined specificity or a manageable rate of positivity.

Major finding: Adjusting detection thresholds to obtain preset specificities (99%, 97%, or 93%) greatly narrowed both sensitivity (14%-18%, 21%-24%, and 30%-35%, respectively) and rates of positivity (2.8%-3.4%, 5.8%-6.1%, and 10%-11%, respectively).

Data source: A comparison of nine different fecal immunochemical assays in 516 patients, of whom 216 had colorectal neoplasias.

Disclosures: Manufacturers provided test kits free of charge. There were no other external sources of support, and the researchers reported having no conflicts of interest.

Frailty, not age, predicted complications after ambulatory surgery

Frailty was associated with a significant increase in 30-day complications after ambulatory hernia repair or ambulatory surgery of the breast, thyroid, or parathyroid, according to the results of a large retrospective cohort study.

The findings reinforce prior work indicating that frailty, not chronologic age, should be a primary factor when deciding about and preparing for surgery, Carolyn D. Seib, MD, and her associates at the University of California, San Francisco, wrote in JAMA Surgery. “Informed consent should be adjusted based on frailty to ensure that patients have an accurate assessment of their risk when making decisions about whether to undergo surgery,” the researchers said.

To test the hypothesis that frailty predicts morbidity and mortality after ambulatory general surgery, the researchers studied 140,828 patients older than 40 years from the 2007-2010 American College of Surgeons National Surgical Quality Improvement Program (JAMA Surg. 2017 Oct 11. doi: 10.1001/jamasurg.2017.4007). Nearly 2,500 (1.7%) patients experienced perioperative complications, and 0.7% had serious complications, the researchers said. After controlling for age, sex, race or ethnicity, smoking, type of anesthesia, and corticosteroid use, patients with an intermediate (0.18-0.35) frailty score had 70% higher odds of any complication (odds ratio, 1.7; 95% confidence interval, 1.5-1.9) and 100% higher odds of serious complications (OR, 2.0; 95% CI, 1.7-2.3), compared with patients with a low frailty score.
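The step from an odds ratio to a "percent higher odds" figure, and the difference between odds and risk, can be shown with a short sketch. The helper names are hypothetical, and using the overall 1.7% complication rate as the baseline risk is an assumption made purely for illustration; the study's actual complication rate in the low-frailty group is not given here.

```python
def percent_higher_odds(odds_ratio):
    """Express an odds ratio as a percent increase in odds."""
    return (odds_ratio - 1.0) * 100.0


def risk_from_odds_ratio(baseline_risk, odds_ratio):
    """Apply an odds ratio to a baseline risk and return the resulting risk."""
    baseline_odds = baseline_risk / (1.0 - baseline_risk)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)


# Odds ratios reported for intermediate vs. low frailty scores.
print(round(percent_higher_odds(1.7)))  # any complication
print(round(percent_higher_odds(2.0)))  # serious complications

# Assumed baseline risk of 1.7% (illustrative only).
print(risk_from_odds_ratio(0.017, 1.7))
```

With a baseline risk this low, the odds ratio and the risk ratio are close, so under the assumed baseline an odds ratio of 1.7 corresponds to an absolute risk a little under 3%.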

An intermediate score reflected the presence of two to three frailty traits, such as impaired functional status, history of diabetes, pneumonia, chronic cardiovascular or lung disease, or impaired sensorium, the investigators noted. Notably, having a high modified frailty index (four or more frailty traits) was associated with 3.3-fold higher odds of any complication and with nearly 4-fold higher odds of serious complications, even after controlling for potential confounders.

Among modifiable risk factors, only the use of local and monitored anesthesia was associated with a significant decrease in the likelihood of serious 30-day complications (adjusted OR, 0.66; 95% CI, 0.53-0.81). Single-center studies of elderly patients undergoing inguinal hernia repair have reported similar findings, the researchers said. “For frail patients who choose to undergo hernia repair, local and monitored anesthesia care should be used whenever possible,” they wrote. “The use of local with monitored anesthesia care may be challenging in complex surgical procedures for breast cancer, such as modified radical mastectomy or axillary dissection, but it should be considered for patients with increased anesthesia risk who are undergoing ambulatory breast surgery.”

The National Institute on Aging provided partial funding. The investigators reported having no conflicts of interest.

Frailty was associated with a significant increase in 30-day complications after ambulatory hernia repair or ambulatory surgery of the breast, thyroid, or parathyroid, according to the results of a large retrospective cohort study.

The findings reinforce prior work indicating that frailty, not chronologic age, should be a primary factor when deciding about and preparing for surgery, Carolyn D. Seib, MD, and her associates at the University of California, San Francisco, wrote in JAMA Surgery. “Informed consent should be adjusted based on frailty to ensure that patients have an accurate assessment of their risk when making decisions about whether to undergo surgery,” the researchers said.

To test the hypothesis that frailty predicts morbidity and mortality after ambulatory general surgery, the researchers studied 140,828 patients older than 40 years from the 2007-2010 American College of Surgeons National Surgical Quality Improvement Program (JAMA Surg. 2017 Oct 11. doi: 10.1001/jamasurg.2017.4007). Nearly 2,500 (1.7%) patients experienced perioperative complications, and 0.7% had serious complications, the researchers said. After controlling for age sex, race or ethnicity, smoking, type of anesthesia, and corticosteroid use, patients with an intermediate (0.18-0.35) frailty score had a 70% higher odds of any complication (odds ratio, 1.7; 95% confidence interval, 1.5-1.9) and a 100% higher odds of serious complications (OR, 2.0; 95% CI, 1.7-2.3), compared with patients with a low frailty score.

An intermediate score reflected the presence of two to three frailty traits, such as impaired functional status, history of diabetes, pneumonia, chronic cardiovascular or lung disease, or impaired sensorium, the investigators noted. Notably, having a high modified frailty index (four or more frailty traits) was associated with 3.3-fold higher odds of any complication and with nearly 4-fold higher odds of serious complications, even after controlling for potential confounders.

Among modifiable risk factors, only the use of local and monitored anesthesia was associated with a significant decrease in the likelihood of serious 30-day complications (adjusted OR, 0.66; 95% CI, 0.53-0.81). Single-center studies of elderly patients undergoing inguinal hernia repair have reported similar findings, the researchers said. “For frail patients who choose to undergo hernia repair, local and monitored anesthesia care should be used whenever possible,” they wrote. “The use of local with monitored anesthesia care may be challenging in complex surgical procedures for breast cancer, such as modified radical mastectomy or axillary dissection, but it should be considered for patients with increased anesthesia risk who are undergoing ambulatory breast surgery.”

The National Institute on Aging provided partial funding. The investigators reported having no conflicts of interest.

Frailty was associated with a significant increase in 30-day complications after ambulatory hernia repair or ambulatory surgery of the breast, thyroid, or parathyroid, according to the results of a large retrospective cohort study.

The findings reinforce prior work indicating that frailty, not chronologic age, should be a primary factor when deciding about and preparing for surgery, Carolyn D. Seib, MD, and her associates at the University of California, San Francisco, wrote in JAMA Surgery. “Informed consent should be adjusted based on frailty to ensure that patients have an accurate assessment of their risk when making decisions about whether to undergo surgery,” the researchers said.

To test the hypothesis that frailty predicts morbidity and mortality after ambulatory general surgery, the researchers studied 140,828 patients older than 40 years from the 2007-2010 American College of Surgeons National Surgical Quality Improvement Program (JAMA Surg. 2017 Oct 11. doi: 10.1001/jamasurg.2017.4007). Nearly 2,500 (1.7%) patients experienced perioperative complications, and 0.7% had serious complications, the researchers said. After controlling for age, sex, race or ethnicity, smoking, type of anesthesia, and corticosteroid use, patients with an intermediate (0.18-0.35) frailty score had 70% higher odds of any complication (odds ratio, 1.7; 95% confidence interval, 1.5-1.9) and 100% higher odds of serious complications (OR, 2.0; 95% CI, 1.7-2.3), compared with patients with a low frailty score.

An intermediate score reflected the presence of two to three frailty traits, such as impaired functional status, history of diabetes, pneumonia, chronic cardiovascular or lung disease, or impaired sensorium, the investigators noted. Notably, having a high modified frailty index (four or more frailty traits) was associated with 3.3-fold higher odds of any complication and with nearly 4-fold higher odds of serious complications, even after controlling for potential confounders.

Among modifiable risk factors, only the use of local and monitored anesthesia was associated with a significant decrease in the likelihood of serious 30-day complications (adjusted OR, 0.66; 95% CI, 0.53-0.81). Single-center studies of elderly patients undergoing inguinal hernia repair have reported similar findings, the researchers said. “For frail patients who choose to undergo hernia repair, local and monitored anesthesia care should be used whenever possible,” they wrote. “The use of local with monitored anesthesia care may be challenging in complex surgical procedures for breast cancer, such as modified radical mastectomy or axillary dissection, but it should be considered for patients with increased anesthesia risk who are undergoing ambulatory breast surgery.”

The National Institute on Aging provided partial funding. The investigators reported having no conflicts of interest.

FROM JAMA SURGERY

Key clinical point: Frailty was a risk factor for 30-day complications of ambulatory surgery, independent of age and other correlates.

Major finding: Having an intermediate (0.18-0.35) frailty score increased the odds of any complication by 70% (OR, 1.7).

Data source: A retrospective cohort study of 140,828 patients older than 40 years from the 2007-2010 American College of Surgeons National Surgical Quality Improvement Program.

Disclosures: The National Institute on Aging provided partial funding. The investigators reported having no conflicts of interest.

ACOG updates guidance on pelvic organ prolapse

Using polypropylene mesh to augment surgical repair of anterior vaginal wall prolapse improves anatomic and some subjective outcomes, compared with native tissue repair, but it also comes with increased morbidity, according to new guidance from the American College of Obstetricians and Gynecologists.

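ACOG and the American Urogynecologic Society (AUGS) updated their practice bulletin on pelvic organ prolapse (POP) to incorporate recent systematic review evidence.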

When using polypropylene mesh for anterior POP repair, 11% of patients develop mesh erosion, of which 7% require surgical correction, according to the updated practice bulletin (Obstet Gynecol. 2017;130:e234-50).

“Referral to an obstetrician-gynecologist with appropriate training and experience, such as a female pelvic medicine and reconstructive surgery specialist, is recommended for surgical treatment of prolapse mesh complications,” ACOG and AUGS wrote.

The practice bulletin updates the recommendations on mesh based on a recent systematic review and meta-analysis that concluded that biological graft repair and absorbable mesh offered minimal benefits compared with native tissue repair, and did not significantly reduce rates of prolapse awareness or repeat surgery (Cochrane Database Syst Rev. 2016 Nov 30;11:CD004014).

Porcine dermis graft, which was used in most of the studies, did not significantly reduce rates of anterior prolapse recurrence compared with native tissue repair. Use of polypropylene mesh also tends to prolong operating times and causes more blood loss than native tissue anterior repair, and is associated with an elevated combined risk of stress urinary incontinence, mesh erosion, and repeat surgery for prolapse, the review concluded.

“Uterosacral and sacrospinous ligament suspension for apical POP with native tissue are equally effective surgical treatments of POP, with comparable anatomic, functional, and adverse outcomes,” the authors wrote in the practice bulletin.

Neither synthetic mesh nor biologic grafts improve outcomes of transvaginal repair of posterior vaginal wall prolapse, they added. As an alternative to surgery, most women can be successfully fitted with a pessary and clinicians should offer them this option, the practice bulletin stated. In up to 9% of cases, pessaries cause local devascularization or erosion, in which case they should be removed for 2-4 weeks while the patient undergoes local estrogen therapy.

Although POP is common and benign, symptomatic cases undermine quality of life by causing vaginal bulge and pressure and problems with voiding, defecation, and sexual activity. Consequently, about 300,000 women in the United States undergo surgery for POP every year. By 2050, population aging in the United States will lead to about a 50% rise in the number of women with POP, according to the practice bulletin.

FROM OBSTETRICS & GYNECOLOGY

ACOG: VBAC is safe for many women

Vaginal birth after cesarean delivery (VBAC) is safe for many women, according to an updated practice bulletin from the American College of Obstetricians and Gynecologists.

Trial of labor after cesarean delivery (TOLAC) results in a successful birth in 60%-80% of cases, sparing mothers from major abdominal surgery and reducing the risk of hemorrhage, thromboses, and infection, the authors of the practice bulletin wrote. “The preponderance of evidence suggests that most women with one previous cesarean delivery with a low-transverse incision are candidates for and should be counseled about and offered TOLAC,” they said (Obstet Gynecol. 2017 Nov;130[5]:e217-33. doi: 10.1097/AOG.0000000000002398).

Rates of cesarean delivery in the United States jumped from 5% to nearly 32% between 1970 and 2016. Although rates of VBAC rose between the mid-1980s and the mid-1990s, cases of uterine rupture and other complications spurred fears of malpractice litigation and reversed this trend. VBAC rates were more than 28% in 1996 but fell to 8.5% by 2006, according to the practice bulletin.

To reduce the risk of uterine rupture, avoid misoprostol for cervical ripening and labor induction in women with a prior cesarean delivery, ACOG recommended.

“No evidence suggests that epidural analgesia is a causal risk factor for unsuccessful TOLAC,” the authors added. “Therefore, epidural analgesia for labor may be used as part of TOLAC, and adequate pain relief may encourage more women to choose TOLAC.”

Women with two prior low-transverse cesareans also are potential candidates for TOLAC, depending on other predictors of successful VBAC. Factors that reduce the chances of a successful TOLAC include advanced maternal age, high body mass index, high birth weight, gestational age of more than 40 weeks at delivery, and preeclampsia at the time of delivery, according to the practice bulletin.

To reduce the risk of adverse outcomes or complications, TOLAC should not occur at home and should only occur at level I facilities (or higher) that can perform an emergency cesarean delivery if the mother or fetus is in jeopardy.

The practice bulletin recommends continuous fetal heart rate monitoring during TOLAC and notes several additional categories of TOLAC candidates. Obstetricians and patients should discuss the potential risks and benefits of both TOLAC and elective repeat cesarean delivery, and that discussion should be documented in the medical record, ACOG recommended.

FROM OBSTETRICS & GYNECOLOGY

Low tryptophan levels linked to IBD

Patients with inflammatory bowel disease (IBD) had significantly lower serum levels of the essential amino acid tryptophan than healthy controls in a large study reported in the December issue of Gastroenterology (doi: 10.1053/j.gastro.2017.08.028).

Serum tryptophan levels also correlated inversely with both disease activity and C-reactive protein levels in patients with IBD, reported Susanna Nikolaus, MD, of University Hospital Schleswig-Holstein, Kiel, Germany, with her associates. “Tryptophan deficiency could contribute to development of IBD. Studies are needed to determine whether modification of intestinal tryptophan pathways affects [its] severity,” they wrote.

Several small case series have reported low levels of tryptophan in IBD and other autoimmune disorders, the investigators noted. Removing tryptophan from the diet has been found to increase susceptibility to colitis in mice, and supplementing with tryptophan or some of its metabolites has the opposite effect. For this study, the researchers used high-performance liquid chromatography to quantify tryptophan levels in serum samples from 535 consecutive patients with IBD and 100 matched controls. They used mass spectrometry to measure metabolites of tryptophan, enzyme-linked immunosorbent assay to measure interleukin-22 (IL-22) levels, and 16S rDNA amplicon sequencing to correlate tryptophan levels with fecal microbiota species. Finally, they used real-time polymerase chain reaction to measure levels of mRNA encoding tryptophan metabolites in colonic biopsy specimens.

Serum tryptophan levels were significantly lower in patients with IBD than controls (P = 5.3 x 10^-6). The difference was starker in patients with Crohn’s disease (P = 1.1 x 10^-10 vs. controls) compared with those with ulcerative colitis (P = 2.8 x 10^-3 vs. controls), the investigators noted. Serum tryptophan levels also correlated inversely with disease activity in patients with Crohn’s disease (P = .01), while patients with ulcerative colitis showed a similar but nonsignificant trend (P = .07). Low tryptophan levels were associated with marked, statistically significant increases in C-reactive protein levels in both Crohn’s disease and ulcerative colitis. Tryptophan level also correlated inversely with leukocyte count, although the trend was less pronounced (P = .04).

IBD was associated with several aberrations in the tryptophan kynurenine pathway, which is the primary means of catabolizing the amino acid. For example, compared with controls, patients with active IBD had significantly lower levels of mRNA encoding tryptophan 2,3-dioxygenase-2 (TDO2, a key enzyme in the kynurenine pathway) and solute carrier family 6 member 19 (SLC6A19, also called B0AT1, a neutral amino acid transporter). Patients with IBD also had significantly higher levels of indoleamine 2,3-dioxygenase 1 (IDO1), which catalyzes the initial, rate-limiting oxidation of tryptophan to kynurenine. Accordingly, patients with IBD had a significantly higher ratio of kynurenine to tryptophan than did controls, and this abnormality was associated with disease activity, especially in Crohn’s disease (P = .03).

Patients with IBD who had relatively higher tryptophan levels also tended to have more diverse gut microbiota than did patients with lower serum tryptophan levels, although differences among groups were not statistically significant, the investigators said. Serum concentration of IL-22 also correlated with disease activity in patients with IBD, and infliximab responders had a “significant and sustained increase” of tryptophan levels over time, compared with nonresponders.

Potsdam dietary questionnaires found no link between disease activity and dietary consumption of tryptophan, the researchers said. Additionally, they found no links between serum tryptophan levels and age, smoking status, or disease complications, such as fistulae or abscess formation.

The investigators acknowledged grant support from the DFG Excellence Cluster “Inflammation at Interfaces” and BMBF e-med SYSINFLAME and H2020 SysCID. One coinvestigator reported employment by CONARIS Research Institute AG, which helps develop drugs with inflammatory indications. The other investigators had no conflicts of interest.

In this interesting study, Nikolaus et al. found that serum tryptophan levels were lower in patients with inflammatory bowel disease (IBD) than in control subjects. The authors also found that serum tryptophan levels correlated inversely with C-reactive protein in both ulcerative colitis and Crohn's disease and with active disease as defined by clinical disease activity scores in Crohn's disease. A validated food-frequency questionnaire found no difference in tryptophan consumption based on disease activity in a subset of patients, which makes it less likely that this association is secondary only to altered dietary intake and suggests that other mechanisms may be involved.

Sara Horst, MD, MPH, is an assistant professor, division of gastroenterology, hepatology & nutrition, Inflammatory Bowel Disease Center, Vanderbilt University Medical Center, Nashville, Tenn. She had no relevant conflicts of interest.

In this interesting study, Nikolaus et al. found an association of decreased serum tryptophan in patients with inflammatory bowel disease (IBD), compared with control subjects. The authors also found an inverse correlation of serum tryptophan levels in patients with C-reactive protein in both ulcerative colitis and Crohn's disease and with active disease as defined by clinical disease activity scores in Crohn's disease. A validated food-frequency questionnaire found no difference in tryptophan consumption based on disease activity in a subset of patients, decreasing the likelihood that this association is secondary to altered dietary intake only and may be related to other mechanisms.

Sara Horst, MD, MPH, is an assistant professor, division of gastroenterology, hepatology & nutrition, Inflammatory Bowel Disease Center, Vanderbilt University Medical Center, Nashville, Tenn. She had no relevant conflicts of interest.

In this interesting study, Nikolaus et al. found an association of decreased serum tryptophan in patients with inflammatory bowel disease (IBD), compared with control subjects. The authors also found an inverse correlation of serum tryptophan levels in patients with C-reactive protein in both ulcerative colitis and Crohn's disease and with active disease as defined by clinical disease activity scores in Crohn's disease. A validated food-frequency questionnaire found no difference in tryptophan consumption based on disease activity in a subset of patients, decreasing the likelihood that this association is secondary to altered dietary intake only and may be related to other mechanisms.

Sara Horst, MD, MPH, is an assistant professor, division of gastroenterology, hepatology & nutrition, Inflammatory Bowel Disease Center, Vanderbilt University Medical Center, Nashville, Tenn. She had no relevant conflicts of interest.

Patients with inflammatory bowel disease (IBD) had significantly lower serum levels of the essential amino acid tryptophan than healthy controls in a large study reported in the December issue of Gastroenterology (doi: 10.1053/j.gastro.2017.08.028).

Serum tryptophan levels also correlated inversely with both disease activity and C-reactive protein levels in patients with IBD, reported Susanna Nikolaus, MD, of University Hospital Schleswig-Holstein, Kiel, Germany, with her associates. “Tryptophan deficiency could contribute to development of IBD. Studies are needed to determine whether modification of intestinal tryptophan pathways affects [its] severity,” they wrote.

Several small case series have reported low levels of tryptophan in IBD and other autoimmune disorders, the investigators noted. Removing tryptophan from the diet has been found to increase susceptibility to colitis in mice, and supplementing with tryptophan or some of its metabolites has the opposite effect. For this study, the researchers used high-performance liquid chromatography to quantify tryptophan levels in serum samples from 535 consecutive patients with IBD and 100 matched controls. They used mass spectrometry to measure metabolites of tryptophan, enzyme-linked immunosorbent assay to measure interleukin-22 (IL-22) levels, and 16S rDNA amplicon sequencing to correlate tryptophan levels with fecal microbiota species. Finally, they used real-time polymerase chain reaction to measure levels of mRNA encoding tryptophan metabolites in colonic biopsy specimens.

Serum tryptophan levels were significantly lower in patients with IBD than controls (P = 5.3 x 10–6). The difference was starker in patients with Crohn’s disease (P = 1.1 x 10–10 vs. controls) compared with those with ulcerative colitis (P = 2.8 x 10–3 vs. controls), the investigators noted. Serum tryptophan levels also correlated inversely with disease activity in patients with Crohn’s disease (P = .01), while patients with ulcerative colitis showed a similar but nonsignificant trend (P = .07). Low tryptophan levels were associated with marked, statistically significant increases in C-reactive protein levels in both Crohn’s disease and ulcerative colitis. Tryptophan level also correlated inversely with leukocyte count, although the trend was less pronounced (P = .04).IBD was associated with several aberrations in the tryptophan kynurenine pathway, which is the primary means of catabolizing the amino acid. For example, compared with controls, patients with active IBD had significantly lower levels of mRNA encoding tryptophan 2,3-dioxygenase-2 (TDO2, a key enzyme in the kynurenine pathway) and solute carrier family 6 member 19 (SLC6A19, also called B0AT1, a neutral amino acid transporter). Patients with IBD also had significantly higher levels of indoleamine 2,3-dioxygenase 1 (IDO1), which catalyzes the initial, rate-limiting oxidation of tryptophan to kynurenine. Accordingly, patients with IBD had a significantly higher ratio of kynurenine to tryptophan than did controls, and this abnormality was associated with disease activity, especially in Crohn’s disease (P = .03).

Patients with IBD who had relatively higher tryptophan levels also tended to have more diverse gut microbiota than did patients with lower serum tryptophan levels, although differences among groups were not statistically significant, the investigators said. Serum concentration of IL-22 also correlated with disease activity in patients with IBD, and infliximab responders had a “significant and sustained increase” of tryptophan levels over time, compared with nonresponders.

Potsdam dietary questionnaires found no link between disease activity and dietary consumption of tryptophan, the researchers said. Additionally, they found no links between serum tryptophan levels and age, smoking status, or disease complications, such as fistulae or abscess formation.The investigators acknowledged grant support from the DFG Excellence Cluster “Inflammation at Interfaces” and BMBF e-med SYSINFLAME and H2020 SysCID. One coinvestigator reported employment by CONARIS Research Institute AG, which helps develop drugs with inflammatory indications. The other investigators had no conflicts of interest.

Patients with inflammatory bowel disease (IBD) had significantly lower serum levels of the essential amino acid tryptophan than healthy controls in a large study reported in the December issue of Gastroenterology (doi: 10.1053/j.gastro.2017.08.028).

Serum tryptophan levels also correlated inversely with both disease activity and C-reactive protein levels in patients with IBD, reported Susanna Nikolaus, MD, of University Hospital Schleswig-Holstein, Kiel, Germany, with her associates. “Tryptophan deficiency could contribute to development of IBD. Studies are needed to determine whether modification of intestinal tryptophan pathways affects [its] severity,” they wrote.

Several small case series have reported low levels of tryptophan in IBD and other autoimmune disorders, the investigators noted. Removing tryptophan from the diet has been found to increase susceptibility to colitis in mice, and supplementing with tryptophan or some of its metabolites has the opposite effect. For this study, the researchers used high-performance liquid chromatography to quantify tryptophan levels in serum samples from 535 consecutive patients with IBD and 100 matched controls. They used mass spectrometry to measure metabolites of tryptophan, enzyme-linked immunosorbent assay to measure interleukin-22 (IL-22) levels, and 16S rDNA amplicon sequencing to correlate tryptophan levels with fecal microbiota species. Finally, they used real-time polymerase chain reaction to measure levels of mRNA encoding tryptophan metabolites in colonic biopsy specimens.

Serum tryptophan levels were significantly lower in patients with IBD than controls (P = 5.3 x 10–6). The difference was starker in patients with Crohn’s disease (P = 1.1 x 10–10 vs. controls) compared with those with ulcerative colitis (P = 2.8 x 10–3 vs. controls), the investigators noted. Serum tryptophan levels also correlated inversely with disease activity in patients with Crohn’s disease (P = .01), while patients with ulcerative colitis showed a similar but nonsignificant trend (P = .07). Low tryptophan levels were associated with marked, statistically significant increases in C-reactive protein levels in both Crohn’s disease and ulcerative colitis. Tryptophan level also correlated inversely with leukocyte count, although the trend was less pronounced (P = .04).IBD was associated with several aberrations in the tryptophan kynurenine pathway, which is the primary means of catabolizing the amino acid. For example, compared with controls, patients with active IBD had significantly lower levels of mRNA encoding tryptophan 2,3-dioxygenase-2 (TDO2, a key enzyme in the kynurenine pathway) and solute carrier family 6 member 19 (SLC6A19, also called B0AT1, a neutral amino acid transporter). Patients with IBD also had significantly higher levels of indoleamine 2,3-dioxygenase 1 (IDO1), which catalyzes the initial, rate-limiting oxidation of tryptophan to kynurenine. Accordingly, patients with IBD had a significantly higher ratio of kynurenine to tryptophan than did controls, and this abnormality was associated with disease activity, especially in Crohn’s disease (P = .03).

Patients with IBD who had relatively higher tryptophan levels also tended to have more diverse gut microbiota than did patients with lower serum tryptophan levels, although differences among groups were not statistically significant, the investigators said. Serum concentration of IL-22 also correlated with disease activity in patients with IBD, and infliximab responders had a “significant and sustained increase” of tryptophan levels over time, compared with nonresponders.

Potsdam dietary questionnaires found no link between disease activity and dietary consumption of tryptophan, the researchers said. Additionally, they found no links between serum tryptophan levels and age, smoking status, or disease complications, such as fistulae or abscess formation.

The investigators acknowledged grant support from the DFG Excellence Cluster “Inflammation at Interfaces” and BMBF e-med SYSINFLAME and H2020 SysCID. One coinvestigator reported employment by CONARIS Research Institute AG, which helps develop drugs with inflammatory indications. The other investigators had no conflicts of interest.

FROM GASTROENTEROLOGY

Key clinical point: Patients with inflammatory bowel disease had significantly lower serum tryptophan levels than healthy controls.

Major finding: Serum tryptophan levels also correlated inversely with disease activity and C-reactive protein levels in patients with IBD.

Data source: An analysis of serum samples from 535 consecutive patients with IBD and 100 matched controls.

Disclosures: The investigators acknowledged grant support from the DFG Excellence Cluster “Inflammation at Interfaces” and BMBF e-med SYSINFLAME and H2020 SysCID. One coinvestigator reported employment by CONARIS Research Institute AG, which helps develop therapies with inflammatory indications. The other investigators had no conflicts of interest.

Study highlights disparities in U.S. lupus mortality

Mortality from systemic lupus erythematosus has declined since 1968 in the United States, but not as markedly as rates of death from other causes, according to a study in Annals of Internal Medicine.

Furthermore, systemic lupus erythematosus (SLE) mortality varied significantly by sex, race, and geographic region, noted Eric Y. Yen, MD, and his associates at the University of California, Los Angeles. Mortality from SLE was highest among females, black individuals, and those who lived in the South. Multivariable analyses confirmed that sex, race, and geographic region were independent risk factors for SLE mortality and that race modified relationships among SLE mortality, sex, and geographic region.

Between 1968 and 2013, there were 50,249 deaths from SLE and more than 100.8 million deaths from other causes in the United States, the researchers said. Mortality from other causes continuously dropped over the study period, but SLE mortality dropped only between 1968 and 1975 before rising continuously for 24 years. Only in 1999 did SLE mortality begin to fall again. Consequently, the ratio of SLE mortality to mortality from other causes rose by 34.6% overall between 1968 and 2013, and rose by 62.5% among blacks and by 58.6% among southerners.

After the researchers accounted for age, sex, race or ethnicity, and geographic region, the risk of death from SLE dropped significantly during 2004 through 2008, compared with 1999 through 2003, and declined even more between 2009 and 2013. Female sex, racial or ethnic minority status, residing in the South or West, and being older than 65 years all independently increased the risk of dying from SLE.

Although the South had the highest SLE mortality among whites, the West had the highest SLE mortality among all other races and ethnicities, the investigators determined. Previous research has identified pockets of increased SLE mortality in Alabama, Arkansas, Louisiana, and New Mexico, and has shown that poverty is a stronger predictor of SLE mortality than race, they noted. “Geographic differences in the quality of care of patients with lupus nephritis have also been reported, with more patients in the Northeast receiving standard-of-care medications,” they wrote. “Interactions between genetic and non-genetic factors associated with race/ethnicity and geographic differences in environment, such as increased sunlight exposure, socioeconomic factors, and access to medical care, might also influence SLE mortality.”

The National Institutes of Health, the Lupus Foundation of America, and the Rheumatology Research Foundation funded the study. The investigators reported having no conflicts of interest.

FROM ANNALS OF INTERNAL MEDICINE

Key clinical point: Mortality from systemic lupus erythematosus has declined since 1968, but not as markedly as rates of death from other causes.

Major finding: The ratio of SLE mortality to mortality from other causes rose by nearly 35% between 1968 and 2013.

Data source: Analyses of the Centers for Disease Control and Prevention’s National Vital Statistics System and CDC WONDER.

Disclosures: The National Institutes of Health, the Lupus Foundation of America, and the Rheumatology Research Foundation funded the study. The investigators reported having no conflicts of interest.

VIDEO: High-volume endoscopists, centers produced better ERCP outcomes

Endoscopists who performed a high volume of endoscopic retrograde cholangiopancreatography (ERCP) procedures had 60% greater odds of procedure success than low-volume endoscopists, according to the results of a systematic review and meta-analysis.

High-volume endoscopists also had 30% lower odds of performing ERCP that led to adverse events such as pancreatitis, perforation, and bleeding, reported Rajesh N. Keswani, MD, MS, of Northwestern University, Chicago, and his associates. High-volume centers themselves also were associated with significantly higher odds of successful ERCP (odds ratio, 2.0; 95% CI, 1.6 to 2.5), although they were not associated with a significantly lower risk of adverse events, the reviewers wrote. The study was published in the December issue of Clinical Gastroenterology and Hepatology (doi: 10.1016/j.cgh.2017.06.002).

Diagnostic ERCP has fallen sevenfold in the past 30 years while therapeutic use has increased 30-fold, the researchers noted. Therapeutic use spans several complex pancreaticobiliary conditions, including chronic pancreatitis, malignant jaundice, and complications of liver transplantation. This shift from diagnostic to therapeutic has naturally increased the complexity of ERCP, the need for expert endoscopy, and the potential risk of adverse events. “As health care continues to shift toward rewarding value rather than volume, it will be increasingly important to deliver care that is effective and efficient,” the reviewers wrote. “Thus, understanding factors associated with unsuccessful interventions, such as a failed ERCP, will be of critical importance to payers and patients” (Clin Gastroenterol Hepatol. 2017 Jun 7;218:237-45).

Therefore, they searched MEDLINE, EMBASE, and the Cochrane register of controlled trials for prospective and retrospective studies published through January 2017. In all, the researchers identified 13 studies that stratified outcomes by volume per endoscopist or center. These studies comprised 59,437 procedures and patients. Definitions of low volume varied by study, ranging from less than 25 to less than 156 annual ERCPs per endoscopist and from less than 87 to less than 200 annual ERCPs per center. High-volume endoscopists, meaning those above the threshold used in a given study, were significantly more likely to perform successful ERCPs than were low-volume endoscopists (OR, 1.6; 95% CI, 1.2 to 2.1), and were significantly less likely to have patients develop pancreatitis, perforation, or bleeding after ERCP (OR, 0.7; 95% CI, 0.5 to 0.8).
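For readers who want to see how the reported odds ratios map onto the "60% greater" and "30% lower" odds quoted in this article, the arithmetic can be sketched as follows. This is only an illustrative calculation, not part of the reviewers' analysis; the function name is hypothetical, and the inputs are the point estimates reported above.

```python
def odds_ratio_to_percent_change(odds_ratio: float) -> float:
    """Return the percent change in odds implied by an odds ratio.

    An odds ratio of 1.0 means no difference between groups, so the
    percent change is the distance from 1.0, expressed as a percentage.
    """
    if odds_ratio <= 0:
        raise ValueError("odds ratio must be positive")
    return (odds_ratio - 1.0) * 100.0


# Point estimates reported in the article (high- vs. low-volume endoscopists).
success_change = odds_ratio_to_percent_change(1.6)  # procedure success
adverse_change = odds_ratio_to_percent_change(0.7)  # adverse events

print(f"Procedure success: {success_change:+.0f}% odds")
print(f"Adverse events: {adverse_change:+.0f}% odds")
```

Note that these figures describe changes in odds, not in probability; the two diverge when the outcome is common, as procedure success typically is.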

SOURCE: AMERICAN GASTROENTEROLOGICAL ASSOCIATION

“Given these compelling findings, we propose that providers and payers consider consolidating ERCP to high-volume endoscopists and centers to improve ERCP outcomes and value,” the reviewers wrote. Minimum thresholds for endoscopists and centers to maintain ERCP skills and optimize outcomes have not been defined, they noted. Intuitively, there is no “critical volume threshold” at which “outcomes suddenly improve,” but the studies in this analysis used widely varying definitions of low volume, they added. It also remains unclear whether a low-volume endoscopist can achieve optimal outcomes at a high-volume center, or vice versa, they said. They recommended studies to better define procedure success and the appropriate use of ERCP in therapeutic settings.