More evidence that modified Atkins diet lowers seizures in adults

ORLANDO —

The results of a small new review and meta-analysis of the modified Atkins diet (MAD) in adults with epilepsy suggest that “the MAD may be an effective adjuvant therapy for older patients who have failed anti-seizure medications,” study investigator Aiswarya Raj, MBBS, Aster Malabar Institute of Medical Sciences, Kerala, India, said in an interview.

The findings were presented at the annual meeting of the American Epilepsy Society.

Paucity of Adult Data

The MAD is a less restrictive hybrid of the ketogenic diet that limits carbohydrate intake and encourages fat consumption. It does not restrict fluids, calories, or proteins and does not require fats to be weighed or measured.

The diet includes fewer carbohydrates than the traditional Atkins diet and places more emphasis on fat intake. Dr. Raj said that the research suggests that the MAD “is a promising therapy in pediatric populations, but there’s not a lot of data in adults.”

Dr. Raj noted that the diet has not been widely used in patients who clinicians believe might be better treated with drug therapy, possibly because of concern about the cardiac effects of consuming high-fat foods.

After conducting a systematic literature review of the efficacy of the MAD in adults, the researchers included in their analysis three randomized controlled trials and four observational studies published between January 2000 and May 2023.

The randomized controlled trials in the review assessed the primary outcome, a greater than 50% seizure reduction, at the end of 2 months, 3 months, and 6 months. In the MAD group, 32.5% of participants had more than a 50% seizure reduction vs 3% in the control group (odds ratio [OR], 12.62; 95% CI, 4.05-39.29; P < .0001).

Four participants who followed the diet achieved complete seizure freedom compared with no participants in the control group, a difference that did not reach statistical significance (OR, 16.20; 95% CI, 0.82-318.82; P = .07).

The prospective studies examined this outcome at the end of 1 month or 3 months. In these studies, 41.9% of individuals experienced more than a 50% seizure reduction after 1 month of following the MAD, and 34.2% experienced this reduction after 3 months (OR, 1.41; 95% CI, 0.79-2.52; P = .24), with zero heterogeneity across studies.

It’s difficult to interpret the difference in seizure reduction between 1 and 3 months of therapy, Dr. Raj noted, because “there’s always the issue of compliance when you put a patient on a long-term diet.”

Positive results for MAD in adults were shown in another recent systematic review and meta-analysis published in Seizure: European Journal of Epilepsy.

That analysis included six studies with 575 patients who were randomly assigned to MAD or usual diet (UD) plus standard drug therapy. After an average follow-up of 12 weeks, MAD was associated with a higher rate of 50% or greater reduction in seizure frequency (relative risk [RR], 6.28; 95% CI, 3.52-10.50; P < .001) in both adults with drug-resistant epilepsy (RR, 6.14; 95% CI, 1.15-32.66; P = .033) and children (RR, 6.28; 95% CI, 3.43-11.49; P < .001).

MAD was also associated with a higher seizure freedom rate compared with UD (RR, 5.94; 95% CI, 1.93-18.31; P = .002).

Cholesterol Concern

In Dr. Raj’s analysis, blood total cholesterol increased after 3 months on the MAD (standardized mean difference, -0.82; 95% CI, -1.23 to -0.40; P = .0001).

Concern about elevated blood cholesterol affecting coronary artery disease risk may explain why doctors sometimes shy away from recommending the MAD to their adult patients. “Some may not want to take that risk; you don’t want patients to succumb to coronary artery disease,” said Dr. Raj.

She noted that 3 months “is a very short time period,” and studies assessing cholesterol levels after at least 1 year are needed to determine whether levels return to normal.

“We’re seeing a lot of literature now that suggests dietary intake does not really have a link with cholesterol levels,” she said. If this can be proven, “then this is definitely a great therapy.”

Evidence for the cardiovascular safety of the MAD includes a study of 37 patients showing that although total cholesterol and low-density lipoprotein (LDL) cholesterol increased over the first 3 months of MAD treatment, these values normalized within 1 year, including in patients treated with MAD for more than 3 years.

Primary Diet Recommendation

This news organization asked one of the authors of that study, Mackenzie C. Cervenka, MD, professor of neurology and medical director of the Adult Epilepsy Diet Center, Johns Hopkins Hospital, Baltimore, Maryland, to comment on the new research.

She said that she was “thrilled” to see more evidence showing that this diet therapy can be as effective for adults as for children. “This is a really important message to get out there.”

At her adult epilepsy diet center, the MAD is the “primary” diet recommended for patients who are resistant to seizure medication, are not tube fed, and are keen to try diet therapy, said Dr. Cervenka.

In her experience, the likelihood of having a 50% or greater seizure reduction is about 40% among medication-resistant patients, “so very similar to what they reported in that review,” she said.

However, she emphasizes to patients that “diet therapy is not meant to be monotherapy.”

Dr. Cervenka’s team is examining LDL cholesterol levels as well as LDL particle size in adults who have been on the MAD for 2 years. LDL particle size, she noted, is a better predictor of long-term cardiovascular health.

No conflicts of interest were reported.

A version of this article appeared on Medscape.com.

FROM AES 2023

CGRP receptor antagonist in migraine prodrome can stop headache, reduce severity

BARCELONA, SPAIN — In the randomized, placebo-controlled crossover PRODROME trial, treatment with ubrogepant (Ubrelvy) 100 mg, one of the new CGRP receptor antagonists, during the prodrome prevented the development of moderate/severe headache at both 24 hours and 48 hours post-dose. The medication also reduced headache of any intensity within 24 hours and functional disability compared with placebo.

“This represents a totally different way of treating a migraine attack – to treat it before the headache starts. This is a paradigm shift in the way we approach the acute treatment of migraine,” study investigator Peter Goadsby, MBBS, MD, PhD, professor of neurology at King's College London, UK, said in an interview.

The findings were presented at the 17th European Headache Congress (EHC) and were also recently published online in The Lancet.

A New Way to Manage Migraine?

The prodrome is usually the earliest phase of a migraine attack and is believed to be experienced by the vast majority of patients with migraine. It consists of various symptoms, including sensitivity to light, fatigue, mood changes, cognitive dysfunction, food cravings, and neck pain, which can occur several hours or days before headache onset.

Dr. Goadsby notes that, at present, there isn’t very much a patient can do about the prodrome.

“We advise patients if they feel an attack is coming not to do anything that might make it worse and make sure they have their acute treatment available for when the headache phase starts. So, we just advise people to prepare for the attack rather than doing anything specific to stop it. But with new data from this study, we now have something that can be done. Patients have an option,” he said.

Dr. Goadsby explained that currently patients are not encouraged to use acute migraine medications such as triptans in the prodrome phase.

“There is actually no evidence that taking a triptan during the prodromal phase works. The advice is to take a triptan as soon as the headache starts, but not before the headache starts.”

He noted that triptans, like most other medications used to treat acute migraine, also carry a risk of overuse, which can lead to medication overuse headache, “so we don’t like to encourage patients to increase the frequency of taking triptans for this reason.”

But ubrogepant and other members of the “gepant” class do not appear to carry the same risk of medication overuse problems. “Rather, the more a patient takes the less likely they are to get a headache as these drugs also have a preventative effect,” Dr. Goadsby said.

Major Reduction in Severity

The PRODROME trial was conducted at 75 sites in the United States in 518 patients who had at least a 1-year history of migraine with or without aura and a history of two to eight migraine attacks per month with moderate to severe headache in each of the 3 months before study entry.

Participants underwent a rigorous screening period during which they were required to show that they could identify prodromal symptoms that were reliably followed by migraine headache within 1-6 hours.

They were randomly assigned to treat their first qualifying prodrome event with placebo and their second with ubrogepant 100 mg, or vice versa, and were instructed to take the study drug at the onset of the prodrome event.

Efficacy assessments during the double-blind treatment period were recorded by participants in an electronic diary. On identifying a qualifying prodrome, participants recorded their prodromal symptoms and then reported the absence or presence of headache at regular intervals up to 48 hours after the study-drug dose. If a headache was reported, participants rated its intensity as mild, moderate, or severe and reported whether they took rescue medication to treat it.

The primary endpoint was absence of moderate or severe headache within 24 hours after the study-drug dose. This occurred after 46% of 418 qualifying prodrome events treated with ubrogepant and after 29% of 423 qualifying prodrome events treated with placebo (odds ratio, 2.09; 95% CI, 1.63-2.69; P < .0001).
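As a rough arithmetic check, the reported odds ratio can be approximately reproduced from the two rounded percentages alone. The minimal Python sketch below is illustrative only: it assumes a simple unadjusted comparison of event proportions and ignores the crossover pairing of events within patients, so it is not the trial's own analysis, and the helper names `odds` and `odds_ratio` are hypothetical.

```python
def odds(p: float) -> float:
    """Convert a proportion (strictly between 0 and 1) into odds, p / (1 - p)."""
    if not 0.0 < p < 1.0:
        raise ValueError("proportion must be strictly between 0 and 1")
    return p / (1.0 - p)


def odds_ratio(p_treated: float, p_control: float) -> float:
    """Crude (unadjusted) odds ratio comparing two proportions."""
    return odds(p_treated) / odds(p_control)


# Rounded proportions reported for the primary endpoint:
# absence of moderate/severe headache within 24 h of dosing.
p_ubrogepant = 0.46  # 46% of 418 ubrogepant-treated prodrome events
p_placebo = 0.29     # 29% of 423 placebo-treated prodrome events

crude_or = odds_ratio(p_ubrogepant, p_placebo)
print(f"crude odds ratio = {crude_or:.2f}")
```

Run as written, this prints `crude odds ratio = 2.09`, in line with the published estimate. Note that an odds ratio of about 2 does not mean the proportion of headache-free events doubled: the simple ratio of proportions is about 1.6 (46/29), because odds ratios exceed risk ratios when the outcome is common.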

“The incidence of moderate to severe headache was almost halved when ubrogepant was taken in the prodrome,” Dr. Goadsby reported.

Ubrogepant also showed similarly impressive results for the secondary endpoints of absence of moderate to severe headache within 48 hours post-dose and absence of headache of any intensity at 24 hours.

Little to No Disability

The researchers also evaluated functional ability, and more participants reported “no disability or able to function normally” during the 24 hours after treatment with ubrogepant than after placebo (OR, 1.66; P < .0001).

Other findings showed that the prodromal symptoms themselves, such as light sensitivity and cognitive dysfunction, were also reduced with ubrogepant.

Dr. Goadsby said he was pleased but not surprised by the results, as the “gepant” class of drugs is used both for the acute treatment of migraine and for prevention, although different agents have been approved for different indications in this regard.

“The ‘gepants’ are a class of medication that can be used in almost any way in migraine — to treat an acute migraine headache, to prevent migraine if taken chronically, and now we see that they can also stop a migraine from developing if taken during the initial prodromal phase. That’s unique for a migraine medication,” he said.

While the current study was conducted with ubrogepant, Dr. Goadsby suspects that any of the “gepants” would probably have a similar effect.

He noted that the prodromal phase of migraine has only just started to be explored, with functional imaging studies showing that brain changes occur during this phase.

Dr. Goadsby said the current study opens up a whole new area of interest, emphasizing the clinical value of identifying the prodrome in individuals with migraine, better characterizing its symptomatology, and understanding more about how to treat it.

“It’s the ultimate way of treating migraine early, and by taking this type of medication in the prodromal phase, patients may be able to stop having pain. That’s quite an implication,” he concluded.

The PRODROME study was funded by AbbVie. Dr. Goadsby reports personal fees from AbbVie.

A version of this article appeared on Medscape.com.

BARCELONA, SPAIN — In the randomized, placebo-controlled crossover PRODROME trial, treatment with ubrogepant (Ubrelvy) 100 mg, one of the new CGRP receptor antagonists, during the prodrome prevented the development of moderate/severe headache at both 24 hours and 48 hours post-dose. The medication also reduced headache of any intensity within 24 hours and functional disability compared with placebo.

“This represents a totally different way of treating a migraine attack – to treat it before the headache starts. This is a paradigm shift in the way we approach the acute treatment of migraine,” study investigator Peter Goadsby, MBBS, MD, PhD, professor of neurology at Kings College London, UK, said in an interview.

The findings were presented at 17th European Headache Congress (EHC) and were also recently published online in The Lancet.

A New Way to Manage Migraine?

The prodrome is usually the earliest phase of a migraine attack and is believed to be experienced by the vast majority of patients with migraine. It consists of various symptoms, including sensitivity to light, fatigue, mood changes, cognitive dysfunction, craving certain foods, and neck pain, which can occur several hours or days before onset.

Dr. Goadsby notes that, at present, there isn’t very much a patient can do about the prodrome.

“We advise patients if they feel an attack is coming not to do anything that might make it worse and make sure they have their acute treatment available for when the headache phase starts. So, we just advise people to prepare for the attack rather than doing anything specific to stop it. But with new data from this study, we now have something that can be done. Patients have an option,” he said.

Dr. Goadsby explained that currently patients are not encouraged to use acute migraine medications such as triptans in the prodrome phase.

“There is actually no evidence that taking a triptan during the prodromal phase works. The advice is to take a triptan as soon as the headache starts, but not before the headache starts.”

He noted that there is also the problem of medication overuse that is seen with triptans, and most other medications used to treat acute migraine, which leads to medication overuse headache, “so we don’t like to encourage patients to increase the frequency of taking triptans for this reason.”

But ubrogepant and other members of the “gepant” class do not seem to have the propensity for medication overuse problems. “Rather, the more a patient takes the less likely they are to get a headache as these drugs also have a preventative effect,” Dr. Goadsby said.

Major Reduction in Severity

The PRODROME trial was conducted at 75 sites in the United States in 518 patients who had at least a 1-year history of migraine with or without aura and a history of two to eight migraine attacks per month with moderate to severe headache in each of the 3 months before study entry.

Participants underwent a rigorous screening period during which they were required to show that they could identify prodromal symptoms that were reliably followed by migraine headache within 1-6 hours.

They were randomly assigned to receive either placebo to treat the first qualifying prodrome event and ubrogepant 100 mg to treat the second qualifying prodrome event or vice versa, with instructions to take the study drug at the onset of the prodrome event.

Efficacy assessments during the double-blind treatment period were recorded by the participant in an electronic diary. On identifying a qualifying prodrome, the patient recorded prodromal symptoms, and was then required to report the absence or presence of a headache at regular intervals up to 48 hours after the study drug dose. If a headache was reported, participants rated the intensity as mild, moderate, or severe and reported whether rescue medication was taken to treat it.

The primary endpoint was absence of moderate or severe intensity headache within 24 hours after study-drug dose. This occurred after 46% of 418 qualifying prodrome events that had been treated with ubrogepant and after 29% of 423 qualifying prodrome events that had been treated with placebo (odds ratio, 2.09; 95% CI, 1.63 - 2.69; P < .0001).

“The incidence of moderate to severe headache was almost halved when ubrogepant was taken in the prodrome,” Dr. Goadsby reported.

Ubrogepant also showed similar impressive results for the secondary endpoints in the absence of moderate to severe headache within 48 hours post-dose and the absence of any headache of any intensity at 24 hours.

Little to No Disability

The researchers also evaluated functional ability, and more participants reported “no disability or able to function normally” during the 24 hours after treatment with ubrogepant than after placebo (OR, 1.66; P < .0001).

Other findings showed that the prodromal symptoms themselves, such as light sensitivity and cognitive dysfunction, were also reduced with ubrogepant.

Dr. Goadsby said he was pleased but not surprised by the results, as the “gepant” class of drugs are used in both the acute treatment of migraine and as preventive agents, although different agents have been approved for different indications in this regard.

“The ‘gepants’ are a class of medication that can be used in almost any way in migraine — to treat an acute migraine headache, to prevent migraine if taken chronically, and now we see that they can also stop a migraine from developing if taken during the initial prodromal phase. That’s unique for a migraine medication,” he said.

While the current study was conducted with ubrogepant, Dr. Goadsby suspects that any of the “gepants” would probably have a similar effect.

He noted that the prodromal phase of migraine has only just started to be explored, with functional imaging studies showing that structural brain changes occur during this phase.

Dr. Goadsby said the current study opens up a whole new area of interest, emphasizing the clinical value of identifying the prodrome in individuals with migraine, better characterizing the symptomology of the prodrome and understanding more about how to treat it.

“It’s the ultimate way of treating migraine early, and by taking this type of medication in the prodromal phase, patients may be able to stop having pain. That’s quite an implication,” he concluded.

The PRODROME study was funded by AbbVie. Dr. Goadsby reports personal fees from AbbVie.

A version of this article appeared on Medscape.com.

BARCELONA, SPAIN — In the randomized, placebo-controlled crossover PRODROME trial, treatment with ubrogepant (Ubrelvy) 100 mg, one of the new CGRP receptor antagonists, during the prodrome prevented the development of moderate/severe headache at both 24 hours and 48 hours post-dose. The medication also reduced headache of any intensity within 24 hours and functional disability compared with placebo.

“This represents a totally different way of treating a migraine attack – to treat it before the headache starts. This is a paradigm shift in the way we approach the acute treatment of migraine,” study investigator Peter Goadsby, MBBS, MD, PhD, professor of neurology at Kings College London, UK, said in an interview.

The findings were presented at 17th European Headache Congress (EHC) and were also recently published online in The Lancet.

A New Way to Manage Migraine?

The prodrome is usually the earliest phase of a migraine attack and is believed to be experienced by the vast majority of patients with migraine. It consists of various symptoms, including sensitivity to light, fatigue, mood changes, cognitive dysfunction, craving certain foods, and neck pain, which can occur several hours or days before onset.

Dr. Goadsby notes that, at present, there isn’t very much a patient can do about the prodrome.

“We advise patients if they feel an attack is coming not to do anything that might make it worse and make sure they have their acute treatment available for when the headache phase starts. So, we just advise people to prepare for the attack rather than doing anything specific to stop it. But with new data from this study, we now have something that can be done. Patients have an option,” he said.

Dr. Goadsby explained that currently patients are not encouraged to use acute migraine medications such as triptans in the prodrome phase.

“There is actually no evidence that taking a triptan during the prodromal phase works. The advice is to take a triptan as soon as the headache starts, but not before the headache starts.”

He noted that triptans, like most other medications used to treat acute migraine, can also be overused, which leads to medication overuse headache, “so we don’t like to encourage patients to increase the frequency of taking triptans for this reason.”

But ubrogepant and other members of the “gepant” class do not seem to have the propensity for medication overuse problems. “Rather, the more a patient takes the less likely they are to get a headache as these drugs also have a preventative effect,” Dr. Goadsby said.

Major Reduction in Severity

The PRODROME trial was conducted at 75 sites in the United States in 518 patients who had at least a 1-year history of migraine with or without aura and a history of two to eight migraine attacks per month with moderate to severe headache in each of the 3 months before study entry.

Participants underwent a rigorous screening period during which they were required to show that they could identify prodromal symptoms that were reliably followed by migraine headache within 1-6 hours.

They were randomly assigned to receive either placebo to treat the first qualifying prodrome event and ubrogepant 100 mg to treat the second qualifying prodrome event or vice versa, with instructions to take the study drug at the onset of the prodrome event.

Efficacy assessments during the double-blind treatment period were recorded by the participant in an electronic diary. On identifying a qualifying prodrome, the patient recorded prodromal symptoms, and was then required to report the absence or presence of a headache at regular intervals up to 48 hours after the study drug dose. If a headache was reported, participants rated the intensity as mild, moderate, or severe and reported whether rescue medication was taken to treat it.

The primary endpoint was absence of moderate or severe intensity headache within 24 hours after study-drug dose. This occurred after 46% of 418 qualifying prodrome events that had been treated with ubrogepant and after 29% of 423 qualifying prodrome events that had been treated with placebo (odds ratio, 2.09; 95% CI, 1.63-2.69; P < .0001).
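For readers who want to see how the odds ratio relates to the two percentages, a minimal sketch in Python computes a crude (unadjusted) odds ratio from the reported proportions alone. It is an illustration only: the trial’s published estimate and 95% CI come from its own analysis of the crossover design, which this does not reproduce, and the helper names `odds` and `crude_odds_ratio` are hypothetical.

```python
def odds(p: float) -> float:
    """Convert a proportion (0 < p < 1) into odds: p / (1 - p)."""
    if not 0.0 < p < 1.0:
        raise ValueError("proportion must be strictly between 0 and 1")
    return p / (1.0 - p)


def crude_odds_ratio(p_treated: float, p_control: float) -> float:
    """Unadjusted odds ratio comparing two proportions (no model, no covariates)."""
    return odds(p_treated) / odds(p_control)


# Share of treated prodrome events with no moderate/severe headache within 24 h,
# as reported in the article: ubrogepant vs placebo.
ubrogepant = 0.46
placebo = 0.29

print(f"Crude odds ratio: {crude_odds_ratio(ubrogepant, placebo):.2f}")
```

With 46% vs 29%, this back-of-the-envelope calculation lands at about 2.09, close to the published figure, though that agreement should not be read as a reproduction of the trial’s statistical method.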

“The incidence of moderate to severe headache was almost halved when ubrogepant was taken in the prodrome,” Dr. Goadsby reported.

Ubrogepant also showed similarly impressive results for the secondary endpoints: absence of moderate to severe headache within 48 hours post-dose and absence of headache of any intensity within 24 hours.

Little to No Disability

The researchers also evaluated functional ability, and more participants reported “no disability or able to function normally” during the 24 hours after treatment with ubrogepant than after placebo (OR, 1.66; P < .0001).

Other findings showed that the prodromal symptoms themselves, such as light sensitivity and cognitive dysfunction, were also reduced with ubrogepant.

Dr. Goadsby said he was pleased but not surprised by the results, as the “gepant” class of drugs is used both for the acute treatment of migraine and for prevention, although different agents have been approved for different indications in this regard.

“The ‘gepants’ are a class of medication that can be used in almost any way in migraine — to treat an acute migraine headache, to prevent migraine if taken chronically, and now we see that they can also stop a migraine from developing if taken during the initial prodromal phase. That’s unique for a migraine medication,” he said.

While the current study was conducted with ubrogepant, Dr. Goadsby suspects that any of the “gepants” would probably have a similar effect.

He noted that the prodromal phase of migraine has only just started to be explored, with functional imaging studies showing that structural brain changes occur during this phase.

Dr. Goadsby said the current study opens up a whole new area of interest, emphasizing the clinical value of identifying the prodrome in individuals with migraine, better characterizing its symptomatology, and understanding more about how to treat it.

“It’s the ultimate way of treating migraine early, and by taking this type of medication in the prodromal phase, patients may be able to stop having pain. That’s quite an implication,” he concluded.

The PRODROME study was funded by AbbVie. Dr. Goadsby reports personal fees from AbbVie.

A version of this article appeared on Medscape.com.

FROM EHC 2023

Why Are Prion Diseases on the Rise?

This transcript has been edited for clarity.

In 1986, in Britain, cattle started dying.

The condition, quickly nicknamed “mad cow disease,” was clearly infectious, but the particular pathogen was difficult to identify. By 1993, 120,000 cattle in Britain were identified as being infected. As yet, no human cases had occurred and the UK government insisted that cattle were a dead-end host for the pathogen. By the mid-1990s, however, multiple human cases, attributable to ingestion of meat and organs from infected cattle, were discovered. In humans, variant Creutzfeldt-Jakob disease (CJD) was a media sensation — a nearly uniformly fatal, untreatable condition with a rapid onset of dementia, mobility issues characterized by jerky movements, and autopsy reports finding that the brain itself had turned into a spongy mess.

The United States banned UK beef imports in 1996 and only lifted the ban in 2020.

The disease was made all the more mysterious because the pathogen involved was not a bacterium, parasite, or virus, but a protein — or a proteinaceous infectious particle, shortened to “prion.”

Prions are misfolded proteins that aggregate in cells — in this case, in nerve cells. But what makes prions different from other misfolded proteins is that the misfolded protein catalyzes the conversion of its non-misfolded counterpart into the misfolded configuration. It creates a chain reaction, leading to rapid accumulation of misfolded proteins and cell death.

And, like a time bomb, we all have prion protein inside us. In its normally folded state, the function of prion protein remains unclear — knockout mice do okay without it — but it is also highly conserved across mammalian species, so it probably does something worthwhile, perhaps protecting nerve fibers.

Far more common than humans contracting mad cow disease is the condition known as sporadic CJD, responsible for 85% of all cases of prion-induced brain disease. The cause of sporadic CJD is unknown.

But one thing is known: Cases are increasing.

I don’t want you to freak out; we are not in the midst of a CJD epidemic. But it’s been a while since I’ve seen people discussing the condition — which remains as horrible as it was in the 1990s — and a new research letter appearing in JAMA Neurology brought it back to the top of my mind.

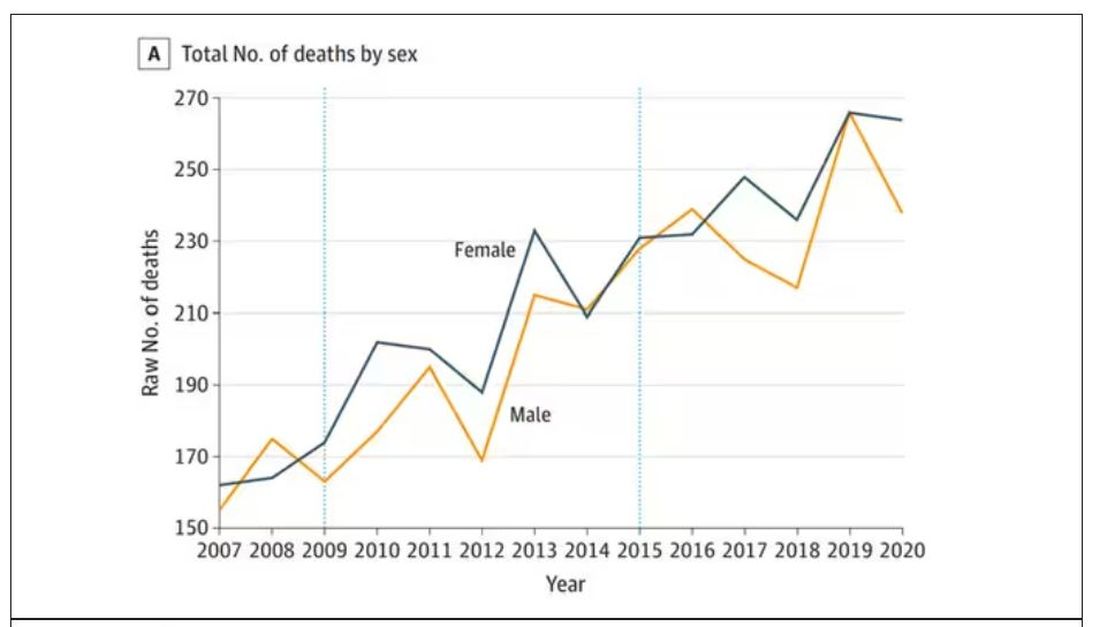

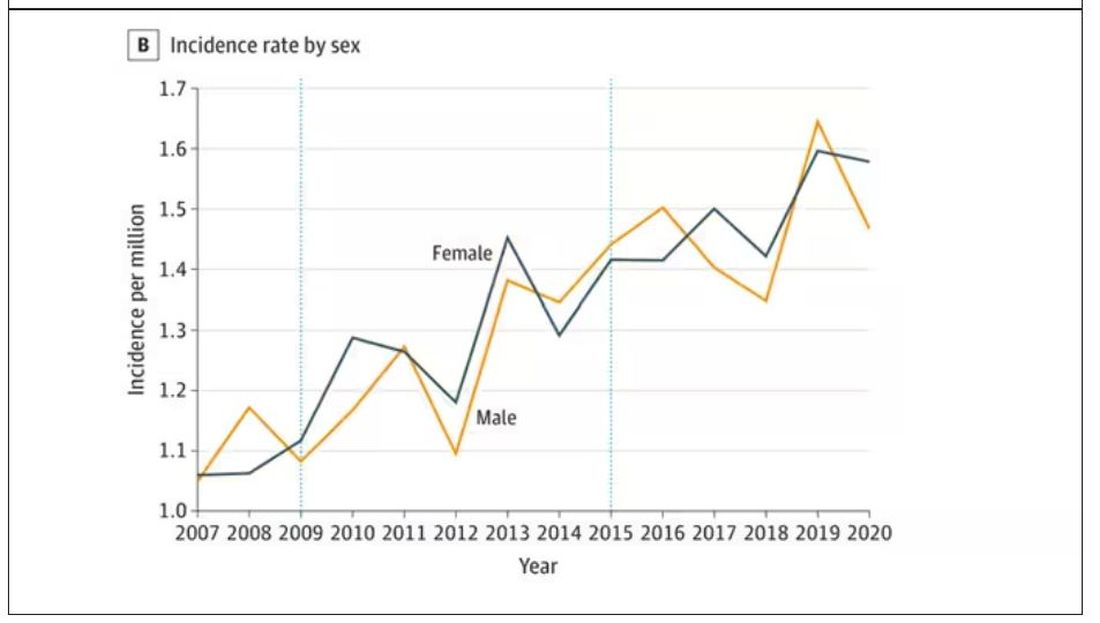

Researchers, led by Matthew Crane at Hopkins, used the CDC’s WONDER cause-of-death database, which pulls diagnoses from death certificates. Normally, I’m not a fan of using death certificates for cause-of-death analyses, but in this case I’ll give it a pass. Assuming that the diagnosis of CJD is made, it would be really unlikely for it not to appear on a death certificate.

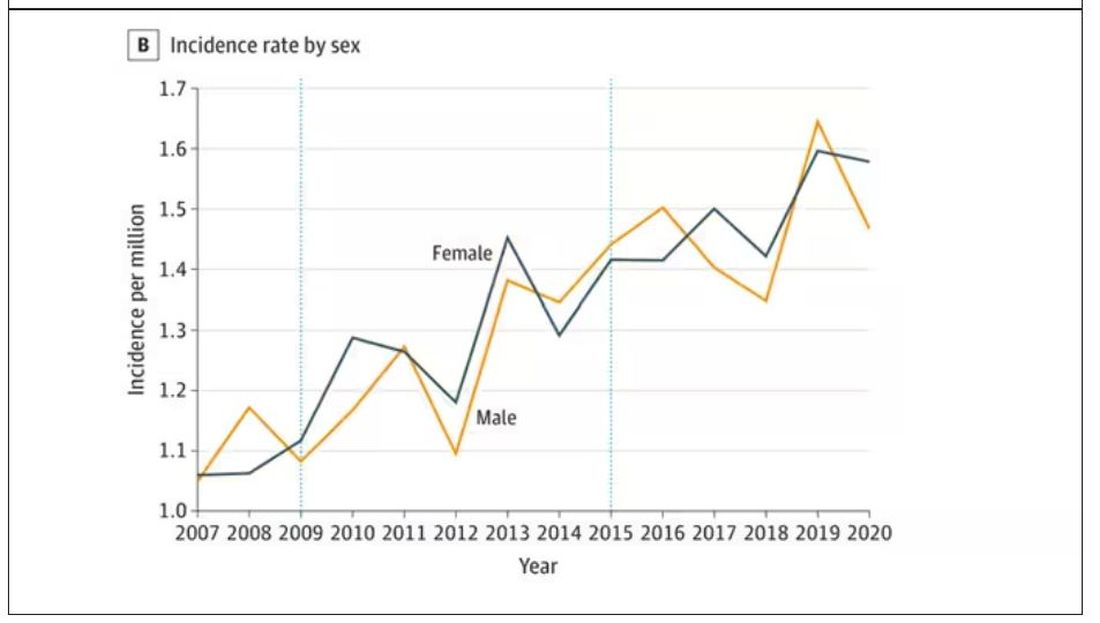

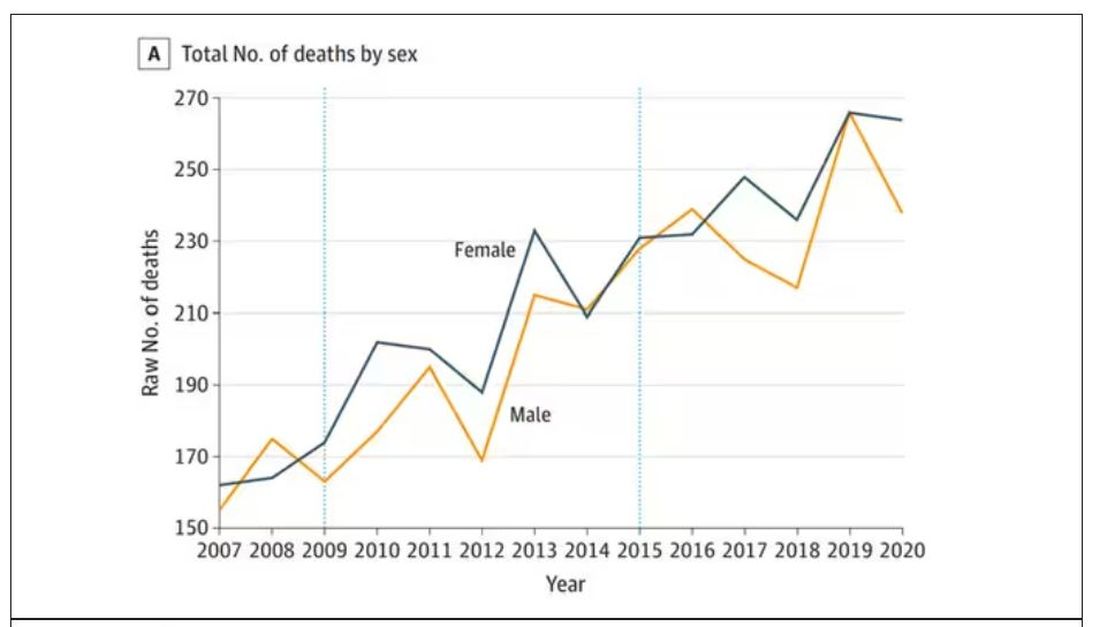

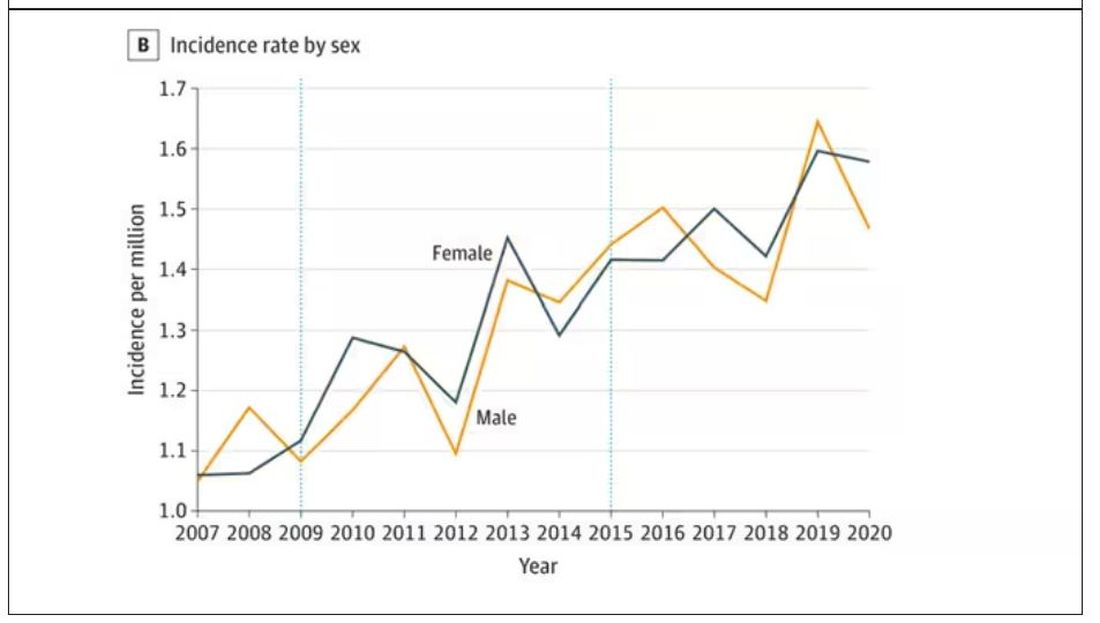

The main findings are seen here.

Note that we can’t tell whether these are sporadic CJD cases or variant CJD cases or even familial CJD cases; however, unless there has been a dramatic change in epidemiology, the vast majority of these will be sporadic.

The question is, why are there more cases?

Whenever this type of question comes up with any disease, there are basically three possibilities:

First, there may be an increase in the susceptible, or at-risk, population. In this case, we know that older people are at higher risk of developing sporadic CJD, and over time, the population has aged. To be fair, the authors adjusted for this and still saw an increase, though it was attenuated.

Second, we might be better at diagnosing the condition. A lot has happened since the mid-1990s, when the diagnosis was based more or less on symptoms. The advent of more sophisticated MRI protocols as well as a new diagnostic test called “real-time quaking-induced conversion testing” may mean we are just better at detecting people with this disease.

Third (and most concerning), a new exposure has occurred. What that exposure might be, where it might come from, is anyone’s guess. It’s hard to do broad-scale epidemiology on very rare diseases.

But given these findings, it seems that a bit more surveillance for this rare but devastating condition is well merited.

F. Perry Wilson, MD, MSCE, is an associate professor of medicine and public health and director of Yale’s Clinical and Translational Research Accelerator. His science communication work can be found in the Huffington Post, on NPR, and here on Medscape. He tweets @fperrywilson and his new book, How Medicine Works and When It Doesn’t, is available now.

F. Perry Wilson, MD, MSCE, has disclosed no relevant financial relationships.

A version of this article appeared on Medscape.com.

Is migraine really a female disorder?

BARCELONA, SPAIN — Migraine is widely considered a predominantly female disorder. Its frequency, duration, and severity tend to be higher in women, and women are also more likely than men to receive a migraine diagnosis. However, gender expectations, differences in the likelihood of self-reporting, and problems with how migraine is classified make it difficult to estimate its true prevalence in men and women.

Different Symptoms

Headache disorders are estimated to affect 50% of the general population; tension-type headache and migraine are the two most common. According to epidemiologic studies, migraine is more prevalent in women, with a female-to-male ratio of 3:1. There are numerous studies of why this might be, most of which focus largely on female-related factors, such as hormones and the menstrual cycle.

“Despite many years of research, there isn’t one clear factor explaining this substantial difference between women and men,” said Tobias Kurth of Charité – Universitätsmedizin Berlin, Germany. “So the question is: Are we missing something else?”

One factor in these perceived sex differences in migraine is that women seem to report their migraines differently from men, and they also have different symptoms. For example, women are more likely than men to report severe pain, and their migraine attacks are more often accompanied by photophobia, phonophobia, and nausea, whereas men’s migraines are more often accompanied by aura.

“By favoring female symptoms, the classification system may not be picking up male symptoms because they’re not being classified in the right way,” Dr. Kurth said, with one consequence being that migraine is underdiagnosed in men. “Before trying to understand the biological and behavioral reasons for these sex differences, we first need to consider these methodological challenges that we all apply knowingly or unknowingly.”

Christian Lampl, professor of neurology at Konventhospital der Barmherzigen Brüder Linz, Austria, and president of the European Headache Federation, said in an interview, “I’m convinced that this 3:1 ratio which has been stated for decades is wrong, but we still don’t have the data. The criteria we have [for classifying migraine] are useful for clinical trials, but they are useless for determining the male-to-female ratio.

“We need a new definition of migraine,” he added. “Migraine is an episode, not an attack. Attacks have a sudden onset, and migraine onset is not sudden — it is an episode with a headache attack.”

Inadequate Menopause Services

Professor Anne MacGregor of St. Bartholomew’s Hospital in London, United Kingdom, specializes in migraine and women’s health. She presented data showing that migraine is underdiagnosed in women, in part because the disorder receives inadequate attention from healthcare professionals at specialist menopause services.

Menopause is associated with an increased prevalence of migraine, but women do not discuss headache symptoms at specialist menopause services, Dr. MacGregor said.

She then described unpublished results from a survey of 117 women attending the specialist menopause service at St. Bartholomew’s Hospital. Among the respondents, 34% reported experiencing episodic migraine and an additional 8% reported having chronic migraine.

“Within this population of women who were not reporting headache as a symptom [to the menopause service until asked in the survey], 42% of them were positive for a diagnosis of migraine,” said Dr. MacGregor. “They were mostly relying on prescribed paracetamol and codeine, or buying it over the counter, and only 22% of them were receiving triptans.

“They are clearly being undertreated,” she added. “Part of this issue is that they didn’t spontaneously report headache as a menopause symptom, so they weren’t consulting for headache to their primary care physicians.”

Correct diagnosis by a consultant is a prerequisite for receiving appropriate migraine treatment. Yet, according to a US study published in 2012, only 45.5% of women with episodic migraine consulted a prescribing healthcare professional. Of those who consulted, 89% were diagnosed correctly, and only 68% of those received the appropriate treatment.
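Because each figure from the 2012 study applies to the subgroup that reached the previous step, the shares multiply. A minimal sketch, assuming that reading of the percentages and using only the numbers quoted above (the function `care_cascade` is hypothetical), shows roughly 27.5% of women with episodic migraine in that study reaching appropriate treatment.

```python
from typing import List, Tuple


def care_cascade(stages: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Return the cumulative share of the starting population reaching each stage.

    Assumes each fraction is a proportion of the group that reached the
    previous stage, so the shares are multiplied together in order.
    """
    cumulative: List[Tuple[str, float]] = []
    share = 1.0
    for label, fraction in stages:
        share *= fraction
        cumulative.append((label, share))
    return cumulative


# Figures for women with episodic migraine from the 2012 US study cited above.
stages = [
    ("consulted a prescribing clinician", 0.455),
    ("diagnosed correctly", 0.89),
    ("received appropriate treatment", 0.68),
]

for label, share in care_cascade(stages):
    print(f"{label}: {share:.1%} of all women with episodic migraine")
```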

A larger, more recent study confirmed that there is a massive unmet need for improving care in this patient population. The Chronic Migraine Epidemiology and Outcomes (CaMEO) Study, which analyzed data from nearly 90,000 participants, showed that just 4.8% of people with chronic migraine received consultation, correct diagnosis, and treatment, with 89% of women with chronic migraine left undiagnosed.

The OVERCOME Study further revealed that although many people with migraine were repeat consulters, they were consulting their physicians for other health problems.

“This makes it very clear that people in other specialties need to be more aware about picking up and diagnosing headache,” said Dr. MacGregor. “That’s where the real need is in managing headache. We have the treatments, but if the patients can’t access them, they’re not much good to them.”

A version of this article appeared on Medscape.com.

BARCELONA, SPAIN — Migraine is widely considered a predominantly female disorder. Its frequency, duration, and severity tend to be higher in women, and women are also more likely than men to receive a migraine diagnosis. However, gender expectations, differences in the likelihood of self-reporting, and problems with how migraine is classified make it difficult to estimate its true prevalence in men and women.

Different Symptoms

Headache disorders are estimated to affect 50% of the general population ; tension-type headache and migraine are the two most common. According to epidemiologic studies, migraine is more prevalent in women, with a female-to-male ratio of 3:1. There are numerous studies of why this might be, most of which focus largely on female-related factors, such as hormones and the menstrual cycle.

“Despite many years of research, there isn’t one clear factor explaining this substantial difference between women and men,” said Tobias Kurth of Charité – Universitätsmedizin Berlin, Germany. “So the question is: Are we missing something else?”

One factor in these perceived sex differences in migraine is that women seem to report their migraines differently from men, and they also have different symptoms. For example, women are more likely than men to report severe pain, and their migraine attacks are more often accompanied by photophobia, phonophobia, and nausea, whereas men’s migraines are more often accompanied by aura.

“By favoring female symptoms, the classification system may not be picking up male symptoms because they’re not being classified in the right way,” Dr. Kurth said, with one consequence being that migraine is underdiagnosed in men. “Before trying to understand the biological and behavioral reasons for these sex differences, we first need to consider these methodological challenges that we all apply knowingly or unknowingly.”

Christian Lampl, professor of neurology at Konventhospital der Barmherzigen Brüder Linz, Austria, and president of the European Headache Federation, said in an interview, “I’m convinced that this 3:1 ratio which has been stated for decades is wrong, but we still don’t have the data. The criteria we have [for classifying migraine] are useful for clinical trials, but they are useless for determining the male-to-female ratio.

“We need a new definition of migraine,” he added. “Migraine is an episode, not an attack. Attacks have a sudden onset, and migraine onset is not sudden — it is an episode with a headache attack.”

Inadequate Menopause Services

Professor Anne MacGregor of St. Bartholomew’s Hospital in London, United Kingdom, specializes in migraine and women’s health. She presented data showing that migraine is underdiagnosed in women; one reason being that the disorder receives inadequate attention from healthcare professionals at specialist menopause services.

Menopause is associated with an increased prevalence of migraine, but women do not discuss headache symptoms at specialist menopause services, Dr. MacGregor said.

She then described unpublished results from a survey of 117 women attending the specialist menopause service at St. Bartholomew’s Hospital. Among the respondents, 34% reported experiencing episodic migraine and an additional 8% reported having chronic migraine.

“Within this population of women who were not reporting headache as a symptom [to the menopause service until asked in the survey], 42% of them were positive for a diagnosis of migraine,” said Dr. MacGregor. “They were mostly relying on prescribed paracetamol and codeine, or buying it over the counter, and only 22% of them were receiving triptans.

“They are clearly being undertreated,” she added. “Part of this issue is that they didn’t spontaneously report headache as a menopause symptom, so they weren’t consulting for headache to their primary care physicians.”

Correct diagnosis by a consultant is a prerequisite for receiving appropriate migraine treatment. Yet, according to a US study published in 2012, only 45.5% of women with episodic migraine consulted a prescribing healthcare professional. Of those who consulted, 89% were diagnosed correctly, and only 68% of those received the appropriate treatment.

A larger, more recent study confirmed that there is a massive unmet need for improving care in this patient population. The Chronic Migraine Epidemiology and Outcomes (CaMEO) Study, which analyzed data from nearly 90,000 participants, showed that just 4.8% of people with chronic migraine received consultation, correct diagnosis, and treatment, with 89% of women with chronic migraine left undiagnosed.

The OVERCOME Study further revealed that although many people with migraine were repeat consulters, they were consulting their physicians for other health problems.

“This makes it very clear that people in other specialties need to be more aware about picking up and diagnosing headache,” said MacGregor. “That’s where the real need is in managing headache. We have the treatments, but if the patients can’t access them, they’re not much good to them.”

A version of this article appeared on Medscape.com.

BARCELONA, SPAIN — Migraine is widely considered a predominantly female disorder. Its frequency, duration, and severity tend to be higher in women, and women are also more likely than men to receive a migraine diagnosis. However, gender expectations, differences in the likelihood of self-reporting, and problems with how migraine is classified make it difficult to estimate its true prevalence in men and women.

Different Symptoms

Headache disorders are estimated to affect 50% of the general population ; tension-type headache and migraine are the two most common. According to epidemiologic studies, migraine is more prevalent in women, with a female-to-male ratio of 3:1. There are numerous studies of why this might be, most of which focus largely on female-related factors, such as hormones and the menstrual cycle.

“Despite many years of research, there isn’t one clear factor explaining this substantial difference between women and men,” said Tobias Kurth of Charité – Universitätsmedizin Berlin, Germany. “So the question is: Are we missing something else?”

One factor in these perceived sex differences in migraine is that women seem to report their migraines differently from men, and they also have different symptoms. For example, women are more likely than men to report severe pain, and their migraine attacks are more often accompanied by photophobia, phonophobia, and nausea, whereas men’s migraines are more often accompanied by aura.

“By favoring female symptoms, the classification system may not be picking up male symptoms because they’re not being classified in the right way,” Dr. Kurth said, with one consequence being that migraine is underdiagnosed in men. “Before trying to understand the biological and behavioral reasons for these sex differences, we first need to consider these methodological challenges that we all apply knowingly or unknowingly.”

Christian Lampl, professor of neurology at Konventhospital der Barmherzigen Brüder Linz, Austria, and president of the European Headache Federation, said in an interview, “I’m convinced that this 3:1 ratio which has been stated for decades is wrong, but we still don’t have the data. The criteria we have [for classifying migraine] are useful for clinical trials, but they are useless for determining the male-to-female ratio.

“We need a new definition of migraine,” he added. “Migraine is an episode, not an attack. Attacks have a sudden onset, and migraine onset is not sudden — it is an episode with a headache attack.”

Inadequate Menopause Services

Professor Anne MacGregor of St. Bartholomew’s Hospital in London, United Kingdom, specializes in migraine and women’s health. She presented data showing that migraine is underdiagnosed in women; one reason being that the disorder receives inadequate attention from healthcare professionals at specialist menopause services.

Menopause is associated with an increased prevalence of migraine, but women do not discuss headache symptoms at specialist menopause services, Dr. MacGregor said.

She then described unpublished results from a survey of 117 women attending the specialist menopause service at St. Bartholomew’s Hospital. Among the respondents, 34% reported experiencing episodic migraine and an additional 8% reported having chronic migraine.

“Within this population of women who were not reporting headache as a symptom [to the menopause service until asked in the survey], 42% of them were positive for a diagnosis of migraine,” said Dr. MacGregor. “They were mostly relying on prescribed paracetamol and codeine, or buying it over the counter, and only 22% of them were receiving triptans.

“They are clearly being undertreated,” she added. “Part of this issue is that they didn’t spontaneously report headache as a menopause symptom, so they weren’t consulting for headache to their primary care physicians.”

Correct diagnosis by a consultant is a prerequisite for receiving appropriate migraine treatment. Yet, according to a US study published in 2012, only 45.5% of women with episodic migraine consulted a prescribing healthcare professional. Of those who consulted, 89% were diagnosed correctly, and only 68% of those correctly diagnosed received appropriate treatment.
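Read as a care cascade, these figures compound. Assuming each percentage applies to the group remaining at the previous step, as the paragraph describes, the share of all women with episodic migraine who clear every step is the product of the three proportions. This is back-of-the-envelope arithmetic, not a figure reported by the study.

```latex
P(\text{consulted} \cap \text{correctly diagnosed} \cap \text{appropriately treated})
  = 0.455 \times 0.89 \times 0.68 \approx 0.275
```

On that reading, only about 27.5% of women with episodic migraine, a little over one in four, would end up on appropriate treatment.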

A larger, more recent study confirmed that there is a massive unmet need for improving care in this patient population. The Chronic Migraine Epidemiology and Outcomes (CaMEO) Study, which analyzed data from nearly 90,000 participants, showed that just 4.8% of people with chronic migraine received consultation, correct diagnosis, and treatment, with 89% of women with chronic migraine left undiagnosed.

The OVERCOME Study further revealed that although many people with migraine were repeat consulters, they were consulting their physicians for other health problems.

“This makes it very clear that people in other specialties need to be more aware about picking up and diagnosing headache,” said Dr. MacGregor. “That’s where the real need is in managing headache. We have the treatments, but if the patients can’t access them, they’re not much good to them.”

A version of this article appeared on Medscape.com.

FROM EHC 2023

Younger heart disease onset tied to higher dementia risk

TOPLINE:

Coronary heart disease (CHD) is associated with a 36% higher risk for dementia, and the risk is highest when CHD onset occurs before age 45, results of a large observational study show.

METHODOLOGY:

- The study included 432,667 of the more than 500,000 participants in the UK Biobank (mean age, 56.9 years); 50,685 (11.7%) had CHD, and 50,445 of these had data on age at CHD onset.

- Researchers divided participants into three groups according to age at CHD onset (below 45 years, 45-59 years, and 60 years and older), and carried out a propensity score matching analysis.

- Outcomes included all-cause dementia, Alzheimer’s disease (AD), and vascular dementia (VD).

- Covariates included age, sex, race, educational level, body mass index, low-density lipoprotein cholesterol, smoking status, alcohol intake, exercise, depressed mood, hypertension, diabetes, statin use, and apolipoprotein E4 status.

TAKEAWAY:

- During a median follow-up of 12.8 years, researchers identified 5876 cases of all-cause dementia, 2540 cases of AD, and 1220 cases of VD.

- Fully adjusted models showed participants with CHD had significantly higher risks than those without CHD of developing all-cause dementia (hazard ratio [HR], 1.36; 95% CI, 1.28-1.45; P < .001), AD (HR, 1.13; 95% CI, 1.02-1.24; P = .019), and VD (HR, 1.78; 95% CI, 1.56-2.02; P < .001). The higher risk for VD suggests CHD has a more profound influence on neuropathologic changes involved in this dementia type, said the authors.

- Those with CHD diagnosed at a younger age had higher risks of developing dementia (HR per 10-year decrease in age at CHD diagnosis: 1.25 [95% CI, 1.20-1.30] for all-cause dementia, 1.29 [95% CI, 1.20-1.38] for AD, and 1.22 [95% CI, 1.13-1.31] for VD; P < .001 for all).

- Propensity score matching analysis showed patients with CHD had significantly higher risks for dementia compared with matched controls, with the highest risk seen in patients diagnosed before age 45 (HR, 2.40; 95% CI, 1.79-3.20; P < .001), followed by those diagnosed between 45 and 59 years (HR, 1.46; 95% CI, 1.32-1.62; P < .001) and at or above 60 years (HR, 1.11; 95% CI, 1.03-1.19; P = .005), with similar results for AD and VD.
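Two pieces of arithmetic help in reading the hazard ratios above. First, a hazard ratio converts to a percentage excess hazard as (HR − 1) × 100%, which is how an HR of 1.36 for all-cause dementia becomes a 36% higher hazard. Second, if age at CHD diagnosis entered the model as a linear term (an assumption; the article does not describe the model specification), the per-10-year HR compounds multiplicatively over larger age gaps. Here Δ is the number of years earlier at diagnosis and HR₁₀ = 1.25; the 20-year case is an illustrative extrapolation, not a reported result.

```latex
\begin{aligned}
\text{excess hazard} &= (\mathrm{HR} - 1) \times 100\% = (1.36 - 1) \times 100\% = 36\% \\
\mathrm{HR}(\Delta) &= \mathrm{HR}_{10}^{\,\Delta/10}, \qquad
\mathrm{HR}(20) = 1.25^{20/10} = 1.25^{2} \approx 1.56
\end{aligned}
```

Under that assumption, a CHD diagnosis 20 years earlier would correspond to roughly a 56% higher hazard of all-cause dementia.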

IN PRACTICE:

The findings suggest “additional attention should be paid to the cognitive status of patients with CHD, especially the ones diagnosed with CHD at a young age,” the authors conclude, noting that “timely intervention, such as cognitive training, could be implemented once signs of cognitive deteriorations are detected.”

SOURCE:

The study was conducted by Jie Liang, BS, School of Nursing, Chinese Academy of Medical Sciences & Peking Union Medical College, Beijing, and colleagues. It was published online on November 29, 2023, in the Journal of the American Heart Association.

LIMITATIONS:

As an observational study, it can’t establish causality. Although the authors adjusted for many potential confounders, unknown risk factors that also contribute to CHD can’t be ruled out. Because the study excluded 69,744 participants, selection bias is possible. The study population was mostly White.

DISCLOSURES:

The study was supported by the National Natural Science Foundation of China, the Non-Profit Central Research Institute Fund of the Chinese Academy of Medical Sciences, and the China Medical Board. The authors have no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

Which migraine medications are most effective?

TOPLINE:

Triptans, ergots, and antiemetics are the most effective classes of acute migraine medication, new results from a large, real-world analysis of self-reported patient data show.

METHODOLOGY:

- Researchers analyzed nearly 11 million migraine attack records extracted from Migraine Buddy, an e-diary smartphone app, over a 6-year period.

- They evaluated self-reported treatment effectiveness for 25 acute migraine medications across seven classes: acetaminophen, NSAIDs, triptans, combination analgesics, ergots, antiemetics, and opioids.

- A two-level nested multivariate logistic regression model adjusted for within-subject dependency and for concomitant medications taken within each analyzed migraine attack.

- The final analysis included nearly 5 million medication-outcome pairs from 3.1 million migraine attacks in 278,000 medication users.

TAKEAWAY:

- Using ibuprofen as the reference, triptans, ergots, and antiemetics were the top three medication classes with the highest effectiveness (mean odds ratios [ORs], 4.80, 3.02, and 2.67, respectively).

- The next most effective medication classes were opioids (OR, 2.49), NSAIDs other than ibuprofen (OR, 1.94), the combination analgesic acetaminophen/acetylsalicylic acid/caffeine (OR, 1.69), and others (OR, 1.49).

- Acetaminophen (OR, 0.83) was the least effective.

- The most effective individual medications were eletriptan (Relpax) (OR, 6.1); zolmitriptan (Zomig) (OR, 5.7); and sumatriptan (Imitrex) (OR, 5.2).
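The odds ratios above compare the odds that a user rated a medication effective with the corresponding odds for ibuprofen, the reference. Because they are ratios of odds rather than of probabilities, an OR of 4.80 does not mean a triptan is 4.8 times as likely to work. The sketch below assumes a hypothetical ibuprofen success rate of 50% (not a study figure) to show the conversion; the published ORs come from an adjusted multilevel model, so this crude conversion is illustrative only. Here p_T and p_I denote the probabilities of a "effective" rating for a triptan and for ibuprofen.

```latex
\begin{aligned}
\mathrm{OR} &= \frac{p_{T} / (1 - p_{T})}{p_{I} / (1 - p_{I})} \\
p_{I} = 0.50 &\;\Rightarrow\; \text{odds}_{I} = 1, \quad
\text{odds}_{T} = 4.80 \times 1 = 4.80, \quad
p_{T} = \frac{4.80}{1 + 4.80} \approx 0.83
\end{aligned}
```

In that hypothetical, a triptan’s success probability of about 0.83 is roughly 1.7 times ibuprofen’s 0.50, far less than the 4.8-fold figure a naive reading of the OR might suggest.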

IN PRACTICE:

“Our findings that triptans, ergots, and antiemetics are the most effective classes of medications align with the guideline recommendations and offer generalizable insights to complement clinical practice,” the authors wrote.

SOURCE:

The study, with first author Chia-Chun Chiang, MD, Department of Neurology, Mayo Clinic, Rochester, Minnesota, was published online November 29 in Neurology.

LIMITATIONS:

The findings are based on subjective, user-reported ratings of effectiveness, and information on side effects, dosages, and formulations was not available. The newer migraine medication classes, gepants and ditans, were not included because of the relatively low number of treated attacks. The regression model did not include age, gender, pain intensity, or other migraine-associated symptoms, which could affect treatment effectiveness.

DISCLOSURES: