Long-term erratic sleep may foretell cognitive problems

CHARLOTTE, N.C. – Erratic sleep patterns over years or even decades, along with a patient’s age and history of depression, may be harbingers of cognitive impairment later in life, an analysis of decades of data from a large sleep study has found.

“What we were a little surprised to find in this model was that sleep duration, whether short, long or average, was not significant, but the sleep variability – the change in sleep across those time measurements – was significantly impacting the incidence of cognitive impairment,” Samantha Keil, PhD, a postdoctoral fellow at the University of Washington, Seattle, reported at the annual meeting of the Associated Professional Sleep Societies.

The researchers analyzed sleep and cognition data collected over decades on 1,104 adults who participated in the Seattle Longitudinal Study. Study participants ranged from age 55 to over 100, with almost 80% of the study cohort aged 65 and older.

The Seattle Longitudinal Study began gathering data in the 1950s. Participants in the study cohort underwent an extensive cognitive battery, which was added to the study in 1984 and administered every 5-7 years, and completed a health behavioral questionnaire (HBQ), which was added in 1993 and administered every 3-5 years, Dr. Keil said. The HBQ included a question on average nightly sleep duration.

The study used a multivariable Cox proportional hazards regression model to evaluate the overall effect of average sleep duration and changes in sleep duration over time on cognitive impairment. Covariates in the model included apolipoprotein E4 (APOE4) genotype, gender, years of education, ethnicity, and depression.

Dr. Keil said the model found, as expected, that education, APOE status, and depression were significantly associated with cognitive impairment (education: hazard ratio, 1.11; 95% confidence interval [CI], 1.02-1.21; P = .01; APOE status: HR, 2.08; 95% CI, 1.31-3.31; P < .005; depression: HR, 1.08; 95% CI, 1.04-1.13; P < .005). Importantly, when the researchers evaluated the duration, change, and variability of sleep, they found that increased sleep variability was significantly associated with cognitive impairment (HR, 3.15; 95% CI, 1.69-5.87; P < .005).
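
Cox models report hazard ratios on the exponentiated scale, so a 95% confidence interval is symmetric around the log hazard ratio rather than the hazard ratio itself. As a rough consistency check, the sketch below assumes the usual Wald interval, exp(β ± 1.96·SE), which is an assumption since the presentation did not describe how the intervals were computed, and backs out the log-scale standard error and an approximate two-sided P value from a reported HR and CI. The function name `wald_from_hr_ci` is hypothetical.

```python
import math


def wald_from_hr_ci(hr: float, lower: float, upper: float, z_crit: float = 1.96):
    """Back out log-scale SE, z statistic, and approximate two-sided P value
    from a hazard ratio and its 95% Wald confidence interval (assumed)."""
    log_hr = math.log(hr)
    # On the log scale the CI spans 2 * z_crit standard errors.
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = log_hr / se
    # Two-sided P value from the standard normal distribution, via erfc.
    p = math.erfc(abs(z) / math.sqrt(2))
    # The geometric mean of the CI limits should reproduce the point estimate.
    midpoint_hr = math.sqrt(lower * upper)
    return se, z, p, midpoint_hr


# Sleep variability estimate reported above: HR 3.15 (95% CI, 1.69-5.87).
se, z, p, mid = wald_from_hr_ci(3.15, 1.69, 5.87)
print(f"SE={se:.3f}, z={z:.2f}, P={p:.4f}, CI midpoint HR={mid:.2f}")
```

For the sleep variability estimate, the geometric midpoint of the interval lands back on roughly 3.15 and the recovered z statistic is about 3.6, giving a P value well below .005, which is consistent with what was reported.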

Under this analysis, “sleep variability over time and not median sleep duration was associated with cognitive impairment,” she said. Adding sleep variability to the model improved its concordance score, a measure of how well a model ranks participants by their risk of an outcome, from .63 to .73 (a value of .5 indicates the model is no better than random chance; a value of .7 or greater indicates a good model).
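
The concordance score for a Cox model is most commonly Harrell's C-index, although the presentation did not name the exact estimator, so treat that as an assumption. The minimal sketch below computes it on made-up toy data: among usable pairs, where the shorter follow-up time ends in an observed event, it counts how often the participant who developed impairment earlier was given the higher predicted risk. Tied times are simply skipped here, a simplification that full implementations handle more carefully.

```python
from itertools import combinations


def harrell_c_index(times, events, risk_scores):
    """Harrell's concordance index for right-censored survival data.

    times: follow-up time for each subject
    events: 1 if the outcome (e.g., cognitive impairment) was observed, 0 if censored
    risk_scores: model-predicted risk; higher means an earlier outcome is expected
    """
    concordant = 0.0
    usable = 0
    for i, j in combinations(range(len(times)), 2):
        # Order the pair so that subject a has the shorter follow-up time.
        a, b = (i, j) if times[i] < times[j] else (j, i)
        # Usable only if times differ and the shorter time ended in an observed event.
        if times[a] == times[b] or not events[a]:
            continue
        usable += 1
        if risk_scores[a] > risk_scores[b]:
            concordant += 1.0
        elif risk_scores[a] == risk_scores[b]:
            concordant += 0.5  # tied predictions count as half-concordant
    return concordant / usable if usable else float("nan")


# Toy data, invented for illustration.
times = [2, 4, 6, 8, 10]          # years to impairment or censoring
events = [1, 1, 0, 1, 0]          # 1 = impairment observed, 0 = censored
risk = [0.9, 0.7, 0.8, 0.3, 0.1]  # predicted risk from some model
print(harrell_c_index(times, events, risk))  # 0.875: 7 of 8 usable pairs ranked correctly
```

A model that assigns everyone the same risk scores exactly .5, matching the "no better than chance" benchmark in the text, while a perfect ranking scores 1.0.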

Identification of sleep variability as a sleep pattern of interest in longitudinal studies is important, Dr. Keil said, because simply evaluating mean or median sleep duration across time might not account for a subject’s variable sleep phenotype. Most importantly, further evaluation of sleep variability with a linear regression prediction analysis (F statistic 8.796, P < .0001, adjusted R-squared .235) found that increased age, depression, and sleep variability significantly predicted cognitive impairment 10 years downstream. “Longitudinal sleep variability is perhaps for the first time being reported as significantly associated with the development of downstream cognitive impairment,” Dr. Keil said.
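
The point that a median can hide a variable sleep phenotype is easy to illustrate with two hypothetical participants who report the same median nightly sleep across questionnaire waves. The sketch uses the within-person standard deviation of reported hours as a stand-in variability measure; that metric is an assumption for illustration, since the exact variability measure used in the study was not described, and the values are invented.

```python
from statistics import median, stdev

# Hypothetical self-reported average nightly sleep (hours) at successive
# questionnaire waves (roughly every 3-5 years). Values are invented.
participants = {
    "steady": [7.0, 7.0, 7.0, 7.5, 6.5],
    "erratic": [5.0, 9.0, 7.0, 5.5, 8.5],
}


def sleep_summary(hours):
    """Return (median, sample standard deviation) of reported sleep across waves."""
    return median(hours), stdev(hours)


for name, hours in participants.items():
    med, sd = sleep_summary(hours)
    print(f"{name}: median={med:.1f} h, SD across waves={sd:.2f} h")
```

Both participants have a median of 7 hours, so a model using median duration alone cannot tell them apart; the standard deviation (about 0.35 hours versus about 1.77 hours) separates them.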

What makes this study unique, Dr. Keil said in an interview, is that it used self-reported longitudinal data gathered at 3- to 5-year intervals for up to 25 years, allowing for the assessment of variation of sleep duration across this entire time frame. “If you could use that shift in sleep duration as a point of therapeutic intervention, that would be really exciting,” she said.

Future research will evaluate how sleep variability and cognitive function are affected by other variables gathered in the Seattle Longitudinal Study over the years, including diabetes and hypertension status, diet, alcohol and tobacco use, and marital and family status. Follow-up studies will investigate the impact of sleep variability on neuropathologic disease progression and lymphatic system impairment, Dr. Keil said.

A newer approach

By linking sleep variability and daytime functioning, the study employs a “newer approach,” said Joseph M. Dzierzewski, PhD, director of behavioral medicine concentration in the department of psychology at Virginia Commonwealth University in Richmond. “While some previous work has examined night-to-night fluctuation in various sleep characteristics and cognitive functioning, what differentiates the present study from these previous works is the duration of the investigation,” he said. The “richness of data” in the Seattle Longitudinal Study and how it tracks sleep and cognition over years make it “quite unique and novel.”

Future studies, he said, should be deliberate in how they evaluate sleep and neurocognitive function across years. “Disentangling short-term moment-to-moment and day-to-day fluctuation, which may be more reversible in nature, from long-term, enduring month-to-month or year-to-year fluctuation, which may be more permanent, will be important for continuing to advance our understanding of these complex phenomena,” Dr. Dzierzewski said. “An additional important area of future investigation would be to continue the hunt for a common biological factor underpinning both sleep variability and Alzheimer’s disease.” That, he said, may help identify potential intervention targets.

Dr. Keil and Dr. Dzierzewski have no relevant disclosures.

AT SLEEP 2022

Surprising link between herpes zoster and dementia

Herpes zoster does not appear to increase dementia risk – on the contrary, the viral infection may offer some protection, a large population-based study suggests.

“We were surprised by these results [and] the reasons for the decreased risk are unclear,” study author Sigrun Alba Johannesdottir Schmidt, MD, PhD, with Aarhus (Denmark) University Hospital, said in a news release.

The study was published online in Neurology.

Conflicting findings

Herpes zoster (HZ) is an acute, cutaneous viral infection caused by the reactivation of varicella-zoster virus (VZV). Previous population-based studies have reported both decreased and increased risks of dementia after having HZ.

It’s thought that HZ may contribute to the development of dementia through neuroinflammation, cerebral vasculopathy, or direct neural damage, but epidemiologic evidence is limited.

To investigate further, Dr. Schmidt and colleagues used Danish medical registries to identify 247,305 people who had visited a hospital for HZ or were prescribed antiviral medication for HZ over a 20-year period and matched them to 1,235,890 people who did not have HZ. For both cohorts, the median age was 64 years, and 61% were women.

Dementia was diagnosed in 9.7% of zoster patients and 10.3% of matched control persons during up to 21 years of follow-up.

Contrary to the researchers’ expectation, HZ was associated with a small (7%) decrease in the relative risk of all-cause dementia during follow-up (hazard ratio, 0.93; 95% confidence interval, 0.90-0.95).
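
The 7% figure follows directly from the hazard ratio: a hazard ratio of 0.93 means a hazard 7% lower than in matched controls. The short sketch below shows that arithmetic alongside the crude ratio of the cumulative proportions reported above (9.7% vs. 10.3%). The crude ratio ignores differences in follow-up time and censoring, so it is only a rough cross-check, not a substitute for the study's hazard ratio. The helper name is hypothetical.

```python
def relative_change_pct(ratio: float) -> float:
    """Percent change in risk (or hazard) implied by a ratio; negative means lower."""
    return (ratio - 1.0) * 100.0


hr = 0.93                 # hazard ratio reported for all-cause dementia
hr_ci = (0.90, 0.95)      # reported 95% confidence interval
crude_ratio = 9.7 / 10.3  # dementia proportions: zoster patients vs. matched controls

print(f"HR {hr}: {relative_change_pct(hr):.0f}% change in hazard")
print(f"CI bounds: {relative_change_pct(hr_ci[0]):.0f}% to {relative_change_pct(hr_ci[1]):.0f}%")
print(f"Crude proportion ratio: {crude_ratio:.3f}")
```

The whole interval sits below 1 (a 5% to 10% lower hazard), and the crude ratio of about 0.94 points in the same direction.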

There was no increased long-term risk of dementia in subgroup analyses, except possibly among people whose HZ involved the central nervous system (HR, 1.94; 95% CI, 0.78-4.80), an association reported in earlier studies.

However, the population attributable fraction of dementia caused by this rare complication is low (< 1%), suggesting that universal vaccination against VZV in the elderly has limited potential to reduce dementia risk, the investigators noted.
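
The population attributable fraction (PAF) combines how strongly an exposure raises risk with how common the exposure is, which is why a rare complication yields a small PAF even with a hazard ratio near 2. The sketch below uses Levin's formula, PAF = p(RR - 1) / (1 + p(RR - 1)), and treats the hazard ratio as an approximate relative risk. The exposure prevalences are hypothetical, because the article does not report how often HZ involves the central nervous system, and the investigators may have used a different PAF estimator.

```python
def levin_paf(prevalence: float, relative_risk: float) -> float:
    """Levin's population attributable fraction.

    prevalence: proportion of the population exposed (0-1)
    relative_risk: risk ratio for the exposure (a hazard ratio is used
    here only as an approximation)
    """
    excess = prevalence * (relative_risk - 1.0)
    return excess / (1.0 + excess)


hr_cns = 1.94  # point estimate for HZ with CNS involvement (95% CI, 0.78-4.80)

# Hypothetical prevalences of CNS-involving zoster; not taken from the study.
for prevalence in (0.001, 0.005, 0.01):
    print(f"prevalence {prevalence:.1%}: PAF = {levin_paf(prevalence, hr_cns):.2%}")
```

Even at a hypothetical exposure prevalence of 1%, the PAF stays just under 1%; note also that the reported interval for this subgroup (0.78-4.80) includes 1.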

Nonetheless, Dr. Schmidt said shingles vaccination should be encouraged in older people because it can prevent complications from the disease.

The research team acknowledged that the slightly decreased long-term risk of dementia, including Alzheimer’s disease, was “unexpected.” The reasons for this decreased risk are unclear, they said, although it could reflect missed diagnoses of shingles in people with undiagnosed dementia.

They were not able to examine whether antiviral treatment modifies the association between HZ and dementia and said that this topic merits further research.

The study was supported by the Edel and Wilhelm Daubenmerkls Charitable Foundation. The authors disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Analysis shows predictive capabilities of sleep EEG

CHARLOTTE, N.C. – Features extracted from sleep electroencephalography (EEG) recordings may help predict the risk of a range of adverse health outcomes, including dementia and death, a researcher reported at the annual meeting of the Associated Professional Sleep Societies. “Sleep EEGs contain decodable information about the risk of unfavorable outcomes,” said Haoqi Sun, PhD, an instructor of neurology at Massachusetts General Hospital, Boston, and lead study author. “The results suggest that it’s feasible to use sleep to identify people with high risk of unfavorable outcomes and it strengthens the concept of sleep as a window into brain and general health.”

The researchers performed a quantitative analysis of sleep data collected on 8,673 adults who had diagnostic sleep studies that included polysomnography (PSG). The analysis used ICD codes to consider these 11 health outcomes: dementia, mild cognitive impairment (MCI) or dementia, ischemic stroke, intracranial hemorrhage, atrial fibrillation, myocardial infarction, type 2 diabetes, hypertension, bipolar disorder, depression, and mortality.

Then, Dr. Sun explained, they extracted 86 spectral and time-domain features of REM and non-REM sleep from the sleep EEG recordings and analyzed those data after adjusting for eight covariates: age, sex, body mass index, and use of benzodiazepines, antidepressants, sedatives, antiseizure drugs, and stimulants.

Participants were partitioned into three sleep-quality groups: poor, average, and good. The outcome-wise mean prediction difference in 10-year cumulative incidence was 2.3% for the poor sleep group, 0.5% for the average sleep group, and 1.3% for the good sleep group.

The three outcomes with the greatest poor-to-average risk ratios were dementia (6.2; 95% confidence interval, 4.5-9.3), mortality (5.7; 95% CI, 5-7.5), and MCI or dementia (4; 95% CI, 3.2-4.9).

Ready for the clinic?

In an interview, Dr. Sun said the results demonstrated the potential of using EEG brain wave data to predict health outcomes on an individual basis, although he acknowledged that most of the 86 sleep features the researchers used are not readily available in the clinic.

He noted that the spectral features used in the study can be captured with software compatible with PSG. “From there you can identify the various bands, the different frequency ranges, and then you can easily see within this range whether a person has a higher power or lower power,” he said. However, the spindle and slow-oscillation features that the researchers used are beyond the reach of most clinics.
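
The band-power idea Dr. Sun describes can be sketched as follows: estimate the power spectrum of an EEG segment, then sum the power within a frequency range such as the alpha band. This minimal example applies a plain discrete Fourier transform to a synthetic signal. The 128-Hz sampling rate, the 8-12 Hz alpha and 4-8 Hz theta band edges, and the synthetic signal are illustrative assumptions, not the study's pipeline, which would more typically use windowed spectral estimates (for example, Welch's method) on artifact-cleaned recordings.

```python
import cmath
import math


def power_spectrum(signal, fs):
    """One-sided power spectrum via a direct discrete Fourier transform.

    Returns (frequency_hz, power) pairs for bins 0..N/2. The direct DFT is
    O(N^2), which is acceptable for a short illustrative segment.
    """
    n = len(signal)
    spectrum = []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        spectrum.append((k * fs / n, abs(coeff) ** 2 / n))
    return spectrum


def band_power(spectrum, low_hz, high_hz):
    """Sum spectral power for frequencies in [low_hz, high_hz)."""
    return sum(p for f, p in spectrum if low_hz <= f < high_hz)


fs = 128                              # assumed sampling rate (Hz)
t = [i / fs for i in range(2 * fs)]   # 2-second synthetic segment
# Synthetic "EEG": strong 10 Hz (alpha) component plus a weaker 6 Hz (theta) one.
eeg = [2.0 * math.sin(2 * math.pi * 10 * s) + 0.5 * math.sin(2 * math.pi * 6 * s) for s in t]

spec = power_spectrum(eeg, fs)
alpha = band_power(spec, 8, 12)
theta = band_power(spec, 4, 8)
print(f"alpha power={alpha:.1f}, theta power={theta:.1f}, alpha/theta={alpha / theta:.1f}")
```

Because both sine components complete a whole number of cycles in the 2-second window, each falls in a single frequency bin, and the alpha-to-theta power ratio comes out at 16 (the square of the 4:1 amplitude ratio). Comparing such band powers across people is the "higher power or lower power" comparison described above.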

Next steps

This research is in its early stages, Dr. Sun said, but at some point the data collected from sleep studies could be paired with machine learning to make the model workable for evaluating individual patients. “Our goal is to first make this individualized,” he said. “We want to minimize the noise in the recording and minimize the night-to-night variability in the findings. There is some clinical-informed approach and there is also some algorithm-informed approach where you can minimize the variation over time.”

The model also has the potential to predict outcomes, particularly with chronic diseases such as diabetes and dementia, well before a diagnosis is made, he said.

‘Fascinating’ and ‘provocative’

Donald Bliwise, PhD, professor of neurology at Emory Sleep Center in Atlanta, said the study was “fascinating; it’s provocative; it’s exciting and interesting,” but added, “Sleep is vital for health. That’s abundantly clear in a study like that, but trying to push it a little bit further with all of these 86 measurements of the EEG, I think it becomes complicated.”

The study methodology, particularly the use of cumulative incidence of various diseases, was laudable, he said, and simpler EEG-measured sleep features, such as alpha band power, “make intuitive sense.”

But it is less clear how the more sophisticated features the model used – for example, kurtosis of theta frequency or coupling between spindle and slow oscillation – rank in terms of sleep quality, he said, adding that the researchers had most likely done that analysis but could not fit it into the format of the presentation.

“Kurtosis of the theta frequency band we don’t get on everyone in the sleep lab,” Dr. Bliwise said. “We might be able to, but I don’t know how to quite plug that into a turnkey model.”
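
Kurtosis itself is a simple statistic: it describes how heavy-tailed a distribution is, with positive excess kurtosis signaling occasional extreme values. How the study defined "kurtosis of theta frequency" was not detailed in the presentation, so the sketch below shows only one plausible reading, the excess kurtosis of a series of per-epoch theta-band power values, computed with the standard moment formula. The epoch values are invented for illustration.

```python
from statistics import fmean


def excess_kurtosis(values):
    """Population excess kurtosis: m4 / m2**2 - 3 (0 for a normal distribution)."""
    mu = fmean(values)
    m2 = fmean((v - mu) ** 2 for v in values)  # second central moment
    m4 = fmean((v - mu) ** 4 for v in values)  # fourth central moment
    return m4 / m2 ** 2 - 3.0


# Hypothetical theta-band power per 30-second epoch (arbitrary units).
steady_theta = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0]
bursty_theta = [1.0, 1.0, 1.0, 1.0, 4.0, 1.0, 1.0, 1.0, 1.0, 1.0]

print(f"steady: {excess_kurtosis(steady_theta):.2f}")
print(f"bursty: {excess_kurtosis(bursty_theta):.2f}")
```

The evenly fluctuating series has negative excess kurtosis (about -0.96), while a single burst of theta power pushes it to about 5.1. The computation is easy; as Dr. Bliwise notes, the harder question is how such a feature maps onto a clinical interpretation.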

The clinical components of the study were conducted by M. Brandon Westover, MD, PhD, at Massachusetts General Hospital, and Robert J. Thomas, MD, at Beth Israel Deaconess Medical Center, both in Boston. The study received support from the American Academy of Sleep Medicine Foundation. Dr. Sun has no relevant disclosures. Dr. Bliwise has no disclosures.

CHARLOTTE, N.C. – , a researcher reported at the annual meeting of the Associated Professional Sleep Societies. “Sleep EEGs contain decodable information about the risk of unfavorable outcomes,” said Haoqi Sun, PhD, an instructor of neurology at Massachusetts General Hospital, Boston, and lead study author. “The results suggest that it’s feasible to use sleep to identify people with high risk of unfavorable outcomes and it strengthens the concept of sleep as a window into brain and general health.”

The researchers performed a quantitative analysis of sleep data collected on 8,673 adults who had diagnostic sleep studies that included polysomnography (PSG). The analysis used ICD codes to consider these 11 health outcomes: dementia, mild cognitive impairment (MCI) or dementia, ischemic stroke, intracranial hemorrhage, atrial fibrillation, myocardial infarction, type 2 diabetes, hypertension, bipolar disorder, depression, and mortality.

Then, Dr. Sun explained, they extracted 86 spectral and time-domain features of REM and non-REM sleep from sleep EEG recordings, and analyzed that data by adjusting for eight covariates including age, sex, body mass index, and use of benzodiazepines, antidepressants, sedatives, antiseizure drugs, and stimulants.

Participants were partitioned into three sleep-quality groups: poor, average, and good. The outcome-wise mean prediction difference in 10-year cumulative incidence was 2.3% for the poor sleep group, 0.5% for the average sleep group, and 1.3% for the good sleep group.

The outcomes with the three greatest poor to average risk ratios were dementia (6.2; 95% confidence interval, 4.5-9.3), mortality (5.7; 95% CI, 5-7.5) and MCI or dementia (4; 95% CI, 3.2-4.9).

Ready for the clinic?

In an interview, Dr. Sun said the results demonstrated the potential of using EEG brain wave data to predict health outcomes on an individual basis, although he acknowledged that most of the 86 sleep features the researchers used are not readily available in the clinic.

He noted the spectral features used in the study can be captured through software compatible with PSG. “From there you can identify the various bands, the different frequency ranges, and then you can easily see within this range whether a person has a higher power or lower power,” he said. However, the spindle and slow-oscillation features that the researchers used in the study are beyond the reach of most clinics.
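Band power, as Dr. Sun describes it, is the share of EEG signal power that falls within a given frequency range. The sketch below is a minimal illustration, not the study's feature pipeline: it computes relative band power from a naive discrete Fourier transform of a synthetic signal. The band edges in `BANDS_HZ` and the function names are hypothetical conventions chosen for illustration; real sleep-EEG software typically uses windowed estimates such as Welch's method (for example, `scipy.signal.welch`) over 30-second epochs.

```python
import cmath
import math

# Conventional band edges in Hz; an illustrative assumption, not the study's definitions.
BANDS_HZ = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 12.0),
    "sigma": (12.0, 16.0),
    "beta": (16.0, 30.0),
}


def band_power(signal, fs, low, high):
    """Periodogram power in [low, high) Hz via a naive O(N^2) DFT (short segments only)."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]          # remove the DC offset
    total = 0.0
    for k in range(1, n // 2 + 1):          # positive-frequency bins
        freq = k * fs / n
        if low <= freq < high:
            coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            total += abs(coeff) ** 2 / n
    return total


def relative_band_powers(signal, fs, bands=BANDS_HZ):
    """Each band's share of the total power summed over all listed bands."""
    powers = {name: band_power(signal, fs, lo, hi) for name, (lo, hi) in bands.items()}
    total = sum(powers.values())
    return {name: p / total for name, p in powers.items()}


# Synthetic 4-second "EEG" at 64 Hz: a 10 Hz (alpha) wave plus a weaker 2 Hz (delta) wave.
fs = 64
sig = [math.sin(2 * math.pi * 10 * t / fs) + 0.5 * math.sin(2 * math.pi * 2 * t / fs)
       for t in range(4 * fs)]
rel = relative_band_powers(sig, fs)
print({name: round(share, 3) for name, share in rel.items()})
```

Because the alpha component has twice the amplitude of the delta component, its power is four times larger, so about 80% of the total lands in the alpha band and about 20% in delta.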

Next steps

This research is in its early stages, Dr. Sun said, but at some point the data collected from sleep studies could be paired with machine learning to make the model workable for evaluating individual patients. “Our goal is to first make this individualized,” he said. “We want to minimize the noise in the recording and minimize the night-to-night variability in the findings. There is some clinical-informed approach and there is also some algorithm-informed approach where you can minimize the variation over time.”

The model also has the potential to predict outcomes, particularly with chronic diseases such as diabetes and dementia, well before a diagnosis is made, he said.

‘Fascinating’ and ‘provocative’

Donald Bliwise, PhD, professor of neurology at Emory Sleep Center in Atlanta, said the study was “fascinating; it’s provocative; it’s exciting and interesting,” but added, “Sleep is vital for health. That’s abundantly clear in a study like that, but trying to push it a little bit further with all of these 86 measurements of the EEG, I think it becomes complicated.”

The study methodology, particularly the use of cumulative incidence of various diseases, was laudable, he said, and simpler EEG-measured sleep features, such as alpha band power, “make intuitive sense.”

But it’s less clear how the more sophisticated features the study model used – for example, kurtosis of theta frequency or coupling between spindle and slow oscillation – rank in terms of sleep quality, he said, adding that the researchers have most likely done that analysis but couldn’t fit it into the format of the presentation.

“Kurtosis of the theta frequency band we don’t get on everyone in the sleep lab,” Dr. Bliwise said. “We might be able to, but I don’t know how to quite plug that into a turnkey model.”
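For readers unfamiliar with the term, kurtosis measures how heavy-tailed or spiky a set of values is. The article does not define the study's theta-kurtosis feature; assuming it is the kurtosis of theta-band-filtered EEG samples (the band-pass filtering step is not shown), the statistic itself can be sketched as follows. `excess_kurtosis` is a hypothetical helper name.

```python
def excess_kurtosis(values):
    """Population excess kurtosis: fourth central moment / variance**2 - 3 (0 for a Gaussian)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    if m2 == 0:
        raise ValueError("kurtosis is undefined for a constant signal")
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m4 / m2 ** 2 - 3.0


flat = [1, 2, 3, 4, 5]                   # evenly spread values: negative excess kurtosis
spiky = [0, 0, 0, 0, 0, 0, 0, 0, 0, 10]  # one large burst: positive excess kurtosis
print(round(excess_kurtosis(flat), 2), round(excess_kurtosis(spiky), 2))  # -1.3 5.11
```

A signal dominated by occasional large bursts scores high, while a smooth, evenly spread signal scores low, which is why the feature can capture something band power alone does not.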

The clinical components of the study were conducted by M. Brandon Westover, MD, PhD, at Massachusetts General Hospital, and Robert J. Thomas, MD, at Beth Israel Deaconess Medical Center, both in Boston. The study received support from the American Academy of Sleep Medicine Foundation. Dr. Sun has no relevant disclosures. Dr. Bliwise has no disclosures.

AT SLEEP 2022

Opioid use in the elderly a dementia risk factor?

in new findings that suggest exposure to these drugs may be another modifiable risk factor for dementia.

“Clinicians and others may want to consider that opioid exposure in those aged 75-80 increases dementia risk, and to balance the potential benefits of opioid use in old age with adverse side effects,” said Stephen Z. Levine, PhD, professor, department of community mental health, University of Haifa (Israel).

The study was published online in the American Journal of Geriatric Psychiatry.

Widespread use

Evidence points to a relatively high rate of opioid prescriptions among older adults. A Morbidity and Mortality Weekly Report noted 19.2% of the U.S. adult population filled an opioid prescription in 2018, with the rate in those over 65 more than double that of adults aged 20-24 years (25% vs. 11.2%).

Disorders and illnesses for which opioids might be prescribed, including cancer and some pain conditions, “are far more prevalent in old age than at a younger age,” said Dr. Levine.

This high rate of opioid use underscores the need to consider the risks of opioid use in old age, said Dr. Levine. “Unfortunately, studies of the association between opioid use and dementia risk in old age are few, and their results are inconsistent.”

The study included 91,307 Israeli citizens aged 60 and over without dementia who were enrolled in the Meuhedet Healthcare Services, a nonprofit health maintenance organization (HMO) serving 14% of the country’s population. Meuhedet has maintained an up-to-date dementia registry since 2002.

The average age of the study sample was 68.29 years at the start of the study (in 2012).

In Israel, opioids are prescribed for a 30-day period. In this study, opioid exposure was defined as opioid medication fills covering 60 days (or two prescriptions) within a 120-day interval.
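The exposure rule can be read as a sliding-window check over dispensing dates. The sketch below assumes each fill supplies 30 days and that a fill counts toward a 120-day window if it is dispensed within 120 days of the window's first fill; the study's exact windowing may differ, and `exposure_date` is a hypothetical helper.

```python
from datetime import date, timedelta

# Assumed parameters, taken from the article's description of the exposure rule.
DAYS_PER_FILL = 30      # Israeli opioid prescriptions cover 30 days
REQUIRED_DAYS = 60      # at least 60 days' supply (two fills) ...
WINDOW_DAYS = 120       # ... within a 120-day interval


def exposure_date(fill_dates, days_per_fill=DAYS_PER_FILL,
                  required_days=REQUIRED_DAYS, window_days=WINDOW_DAYS):
    """Return the dispensing date on which the exposure rule is first met, else None.

    Hypothetical reading of the rule: fills dispensed within `window_days` of the
    window's earliest fill are summed; exposure starts once they cover `required_days`.
    """
    fills = sorted(fill_dates)
    start = 0          # index of the earliest fill still inside the window
    supplied = 0       # days of supply dispensed within the current window
    for current in fills:
        supplied += days_per_fill
        # Slide the window forward until it spans less than `window_days`.
        while current - fills[start] >= timedelta(days=window_days):
            supplied -= days_per_fill
            start += 1
        if supplied >= required_days:
            return current
    return None


# Two fills 73 days apart meet the rule; two fills 151 days apart do not.
print(exposure_date([date(2013, 1, 1), date(2013, 3, 15)]))  # 2013-03-15
print(exposure_date([date(2013, 1, 1), date(2013, 6, 1)]))   # None
```

Anchoring the window at each fill, rather than at fixed calendar periods, means two fills that straddle a calendar boundary still count as exposure; a study using fixed periods could classify some patients differently.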

The primary outcome was incident dementia during follow-up from Jan. 1, 2013, to Oct. 30, 2017. The analysis controlled for a number of factors, including age, sex, smoking status, health conditions such as arthritis, depression, diabetes, osteoporosis, cognitive decline, vitamin deficiencies, cancer, cardiovascular conditions, and hospitalizations for falls.

Researchers also accounted for the competing risk of mortality.
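Accounting for competing mortality matters because people who die during follow-up can no longer develop dementia. The article does not say which method the authors used (Fine-Gray regression is one common choice); as a minimal illustration on invented toy data, the sketch below compares the Aalen-Johansen cumulative incidence of dementia with the naive 1 minus Kaplan-Meier estimate that treats deaths as ordinary censoring. The status coding and function names are hypothetical.

```python
from collections import Counter

CENSORED, DEMENTIA, DEATH = 0, 1, 2   # hypothetical status coding


def cumulative_incidence(records, cause=DEMENTIA):
    """Aalen-Johansen cumulative incidence of `cause` at the last follow-up time.

    records: list of (follow_up_time, status) pairs.
    """
    at_risk = len(records)
    event_free = 1.0   # probability of being free of any event just before time t
    cif = 0.0
    for t in sorted({time for time, _ in records}):
        counts = Counter(status for time, status in records if time == t)
        any_events = sum(n for status, n in counts.items() if status != CENSORED)
        # New cases of `cause` can only arise among people still event-free.
        cif += event_free * counts[cause] / at_risk
        event_free *= 1 - any_events / at_risk
        at_risk -= sum(counts.values())   # events and censorings leave the risk set
    return cif


def naive_one_minus_km(records, cause=DEMENTIA):
    """1 - Kaplan-Meier for `cause`, treating competing deaths as if they were censored."""
    at_risk = len(records)
    surv = 1.0
    for t in sorted({time for time, _ in records}):
        counts = Counter(status for time, status in records if time == t)
        surv *= 1 - counts[cause] / at_risk
        at_risk -= sum(counts.values())
    return 1 - surv


toy = [(1, DEMENTIA), (2, DEATH), (3, DEMENTIA), (4, CENSORED), (5, DEMENTIA)]
print(round(cumulative_incidence(toy), 3))   # 0.8
print(round(naive_one_minus_km(toy), 3))     # 1.0
```

On this toy data the naive estimate reaches 100% while the competing-risk estimate is 80%, showing how ignoring death can overstate dementia incidence in an older cohort.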

During the study, 3.1% of subjects were exposed to opioids at a mean age of 73.94 years, and 5.8% of subjects developed dementia at an average age of 78.07 years.

Increased dementia risk

The risk of incident dementia was significantly increased in those exposed to opioids versus unexposed individuals in the 75- to 80-year age group (adjusted hazard ratio, 1.39; 95% confidence interval, 1.01-1.92; z statistic = 2.02; P < .05).
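The reported z statistic can be roughly reproduced from the hazard ratio and its confidence interval, assuming the interval is a standard Wald interval on the log scale, that is, ln(HR) ± 1.96 × SE. The sketch below back-calculates the standard error, z, and a two-sided P value; the function name is hypothetical.

```python
import math


def z_from_ratio_ci(ratio, lower, upper, z_crit=1.959964):
    """Back-calculate SE(log ratio), z, and two-sided P from a ratio and its 95% CI.

    Assumes a Wald interval on the log scale: log(ratio) +/- z_crit * SE.
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(ratio) / se
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))   # P(|Z| > z) for a standard normal
    return se, z, p_two_sided


se, z, p = z_from_ratio_ci(1.39, 1.01, 1.92)   # adjusted HR for ages 75-80
print(f"SE = {se:.3f}, z = {z:.2f}, P = {p:.3f}")
```

This gives a z of about 2.01 and a P value just under .05; the small gap from the reported 2.02 is consistent with rounding of the published hazard ratio and interval. It also shows how close the lower bound of 1.01 sits to the null value of 1.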

The authors noted that the effect size for opioid exposure in this elderly age group is similar to that of other potentially modifiable risk factors for dementia, including body mass index and smoking.

The current study could not determine the biological explanation for the increased dementia risk among older opioid users. “Causal notions are challenging in observational studies and should be viewed with caution,” Dr. Levine noted.

However, a plausible mechanism highlighted in the literature is that opioids promote apoptosis of microglia and neurons that contribute to neurodegenerative diseases, he said.

The study included 14 sensitivity analyses, including those that looked at females, subjects older than 70, smokers, and groups with and without comorbid health conditions. The only sensitivity analysis that didn’t have similar findings to the primary analysis looked at dementia risk restricted to subjects without a vitamin deficiency.

“It’s reassuring that 13 of 14 sensitivity analyses found a significant association between opioid exposure and dementia risk,” said Dr. Levine.

Some prior studies did not show an association between opioid exposure and dementia risk. One possible reason for the discrepancy with the current findings is that the previous research didn’t account for age-specific opioid use effects, or the competing risk of mortality, said Dr. Levine.

Clinicians have a number of potential alternatives to opioids to treat various conditions including acetaminophen, non-steroidal anti-inflammatory drugs, amine reuptake inhibitors (ARIs), membrane stabilizers, muscle relaxants, topical capsaicin, botulinum toxin, cannabinoids, and steroids.

A limitation of the study was that it didn’t adjust for all possible comorbid health conditions, including vascular conditions, or for benzodiazepine use or surgical procedures.

In addition, since up to 50% of dementia cases are undetected, it’s possible some in the unexposed group may actually have undiagnosed dementia, thereby reducing the effect sizes in the results.

Reverse causality is also a possibility as the neuropathological process associated with dementia could have started prior to opioid exposure. In addition, the results are limited to prolonged opioid exposure.

Interpret with caution

Commenting on the study, David Knopman, MD, a neurologist at Mayo Clinic in Rochester, Minn., whose research involves late-life cognitive disorders, was skeptical.

“On the face of it, the fact that an association was seen only in one narrow age range – 75+ to 80 years – ought to raise serious suspicion about the reliability and validity of the claim that opioid use is a risk factor for dementia,” he said.

Although the researchers performed several sensitivity analyses, including accounting for mortality, “pharmacoepidemiological studies are terribly sensitive to residual biases” related to physician and patient choices related to medication use, added Dr. Knopman.

The claim that opioids are a dementia risk “should be viewed with great caution” and should not influence use of opioids where they’re truly indicated, he said.

“It would be a great pity if patients with pain requiring opioids avoid them because of fears about dementia based on the dubious relationship between age and opioid use.”

Dr. Levine and Dr. Knopman report no relevant financial disclosures.

A version of this article first appeared on Medscape.com.

FROM AMERICAN JOURNAL OF GERIATRIC PSYCHIATRY

Hearing, vision loss combo a colossal risk for cognitive decline

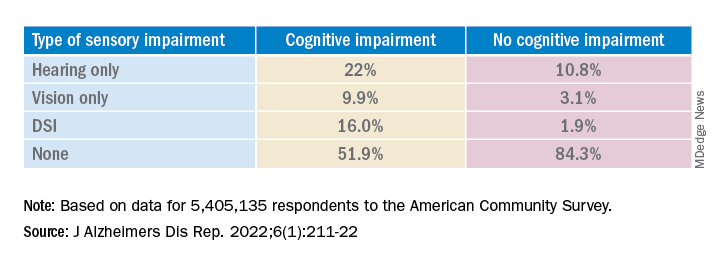

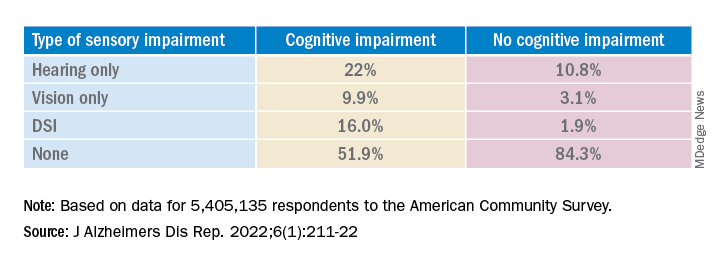

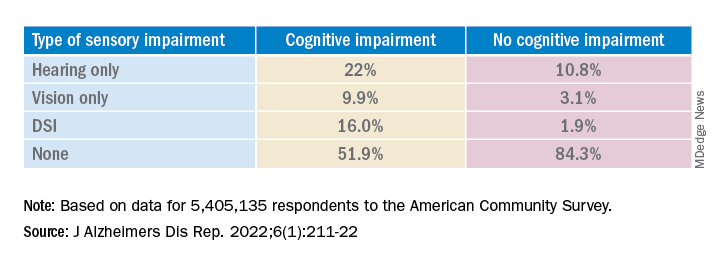

The combination of hearing loss and vision loss is linked to an eightfold increased risk of cognitive impairment, new research shows.

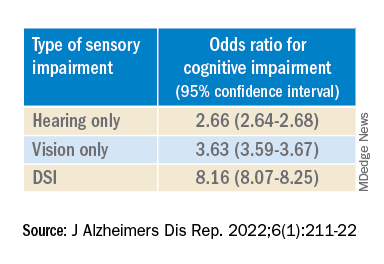

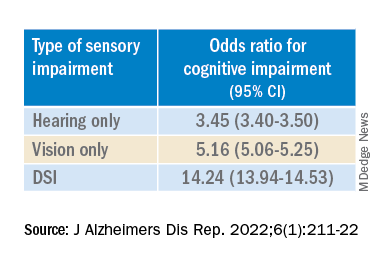

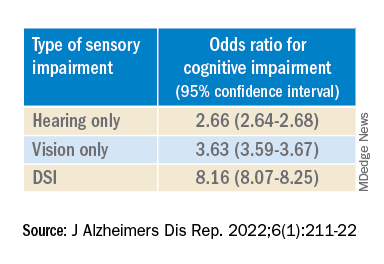

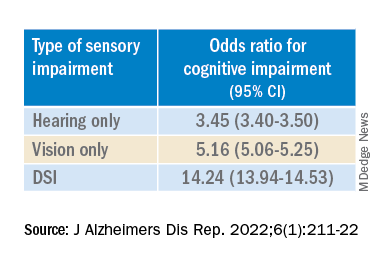

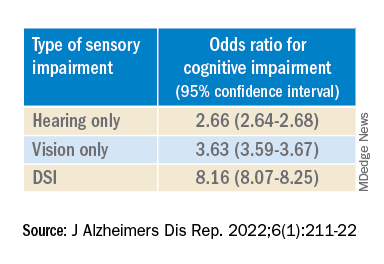

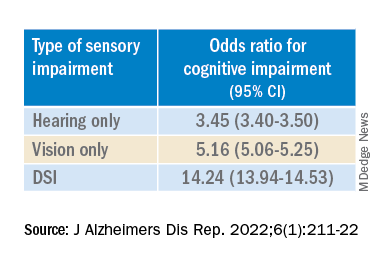

Investigators analyzed data on more than 5 million U.S. seniors. Adjusted results show that participants with hearing impairment alone had more than twice the odds of also having cognitive impairment, while those with vision impairment alone had more than triple the odds of cognitive impairment.

However, those with dual sensory impairment (DSI) had eightfold higher odds of cognitive impairment.

In addition, half of the participants with DSI also had cognitive impairment. Of those with cognitive impairment, 16% had DSI, compared with only about 2% of their peers without cognitive impairment.
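The percentages above can be linked to an odds ratio, because in a 2 × 2 table the odds ratio for exposure among cases versus noncases equals the odds ratio for the outcome among exposed versus unexposed. The sketch below is only a crude, unadjusted back-of-the-envelope calculation from the rounded figures (16% and about 2%); it is not the study's adjusted estimate, and the helper names are hypothetical.

```python
def odds(p):
    """Convert a proportion to odds."""
    return p / (1 - p)


def odds_ratio(p_exposed_among_cases, p_exposed_among_noncases):
    """Odds of exposure in cases vs. noncases (equal to the outcome odds ratio in a 2x2 table)."""
    return odds(p_exposed_among_cases) / odds(p_exposed_among_noncases)


# DSI prevalence: 16% among those with cognitive impairment, about 2% among those without.
crude_or = odds_ratio(0.16, 0.02)
print(round(crude_or, 1))  # 9.3
```

The crude figure of roughly 9.3 is in the same range as the adjusted eightfold estimate; adjustment for age, race, education, and income, rounding of the inputs, and a possibly different comparison group could all account for the difference.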

“The findings of the present study may inform interventions that can support older people with concurrent sensory impairment and cognitive impairment,” said lead author Esme Fuller-Thomson, PhD, professor, Factor-Inwentash Faculty of Social Work, University of Toronto.

“Special attention, in particular, should be given to those aged 65-74 who have serious hearing and/or vision impairment [because], if the relationship with dementia is found to be causal, such interventions can potentially mitigate the development of cognitive impairment,” said Dr. Fuller-Thomson, who is also director of the Institute for Life Course and Aging and a professor in the department of family and community medicine and faculty of nursing, all at the University of Toronto.

The findings were published online in the Journal of Alzheimer’s Disease Reports.

Sensory isolation

Hearing and vision impairment increase with age; it is estimated that one-third of U.S. adults between the ages of 65 and 74 experience hearing loss, and 4% experience vision impairment, the investigators note.

“The link between dual hearing loss and seeing loss and mental health problems such as depression and social isolation have been well researched, but we were very interested in the link between dual sensory loss and cognitive problems,” Dr. Fuller-Thomson said.

Additionally, “there have been several studies in the past decade linking hearing loss to dementia and cognitive decline, but less attention has been paid to cognitive problems among those with DSI, despite this group being particularly isolated,” she said. Existing research into DSI suggests an association with cognitive decline; the current investigators sought to expand on this previous work.

To do so, they used merged data from 10 consecutive waves from 2008 to 2017 of the American Community Survey (ACS), which was conducted by the U.S. Census Bureau. The ACS is a nationally representative sample of 3.5 million randomly selected U.S. addresses and includes community-dwelling adults and those residing in institutional settings.

Participants aged 65 or older (n = 5,405,135; 56.4% women) were asked yes/no questions regarding serious cognitive impairment, hearing impairment, and vision impairment. A proxy, such as a family member or nursing home staff member, provided answers for individuals not capable of self-report.

Potential confounding variables included age, race/ethnicity, sex, education, and household income.

Potential mechanisms

Results showed that, among those with cognitive impairment, there was a higher prevalence of hearing impairment, vision impairment, and DSI than among their peers without cognitive impairment; in addition, a lower percentage of these persons had no sensory impairment (P < .001).

The prevalence of DSI climbed with age, from 1.5% for respondents aged 65-74 years to 2.6% for those aged 75-84 and to 10.8% in those 85 years and older.

Individuals with higher levels of poverty also had a higher prevalence of DSI. Among those who had not completed high school, the prevalence of DSI was higher than among high school or university graduates (6.3% vs. 3.1% and 1.8%, respectively).

After controlling for age, race, education, and income, the researchers found “substantially” higher odds of cognitive impairment in those with vs. those without sensory impairments.

“The magnitude of the odds of cognitive impairment by sensory impairment was greatest for the youngest cohort (age 65-74) and lowest for the oldest cohort (age 85+),” the investigators wrote. Among participants in the youngest cohort, there was a “dose-response relationship” for those with hearing impairment only, visual impairment only, and DSI.

Because the study was observational, it “does not provide sufficient information to determine the reasons behind the observed link between sensory loss and cognitive problems,” Dr. Fuller-Thomson said. However, there are “several potential causal mechanisms [that] warrant future research.”

The “sensory deprivation hypothesis” suggests that DSI could cause cognitive deterioration because of decreased auditory and visual input. The “resource allocation hypothesis” posits that hearing- or vision-impaired older adults “may use more cognitive resources to accommodate for sensory deficits, allocating fewer cognitive resources for higher-order memory processes,” the researchers wrote. Hearing impairment “may also lead to social disengagement among older adults, hastening cognitive decline due to isolation and lack of stimulation,” they added.

Reverse causality is also possible. In the “cognitive load on perception” hypothesis, cognitive decline may lead to declines in hearing and vision because of “decreased resources for sensory processing.”

In addition, the association may be noncausal. “The ‘common cause hypothesis’ theorizes that sensory impairment and cognitive impairment may be due to shared age-related degeneration of the central nervous system ... or frailty,” Dr. Fuller-Thomson said.

Parallel findings

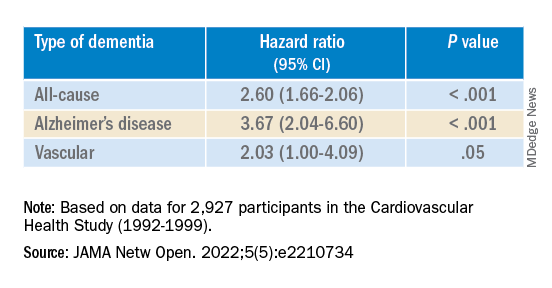

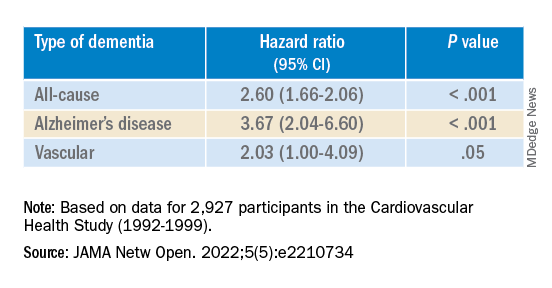

The results are similar to those from a study conducted by Phillip Hwang, PhD, of the department of anatomy and neurobiology, Boston University, and colleagues that was published online in JAMA Network Open.

They analyzed 8 years of follow-up data on 2,927 participants in the Cardiovascular Health Study (mean age, 74.6 years; 58.2% women).

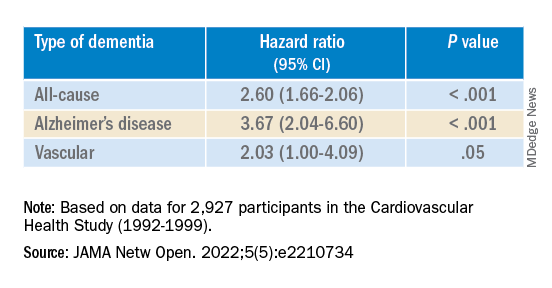

Compared with no sensory impairment, DSI was associated with increased risk for all-cause dementia and Alzheimer’s disease, but not with vascular dementia.

“Future work in health care guidelines could consider incorporating screening of sensory impairment in older adults as part of risk assessment for dementia,” Nicholas Reed, AuD, and Esther Oh, MD, PhD, both of Johns Hopkins University, Baltimore, wrote in an accompanying editorial.

Accurate testing

Commenting on both studies, Heather Whitson, MD, professor of medicine (geriatrics) and ophthalmology and director of the Duke University Center for the Study of Aging and Human Development, Durham, N.C., said both “add further strength to the evidence base, which has really converged in the last few years to support that there is a link between sensory health and cognitive health.”

However, “we still don’t know whether hearing/vision loss causes cognitive decline, though there are plausible ways that sensory loss could affect cognitive abilities like memory, language, and executive function,” she said.

Dr. Whitson, who was not involved with the research, is also codirector of the Duke/University of North Carolina Alzheimer’s Disease Research Center at Duke University, Durham, N.C., and the Durham VA Medical Center.

“The big question is whether we can improve patients’ cognitive performance by treating or accommodating their sensory impairments,” she said. “If safe and feasible things like hearing aids or cataract surgery improve cognitive health, even a little bit, it would be a huge benefit to society, because sensory loss is very common, and there are many treatment options,” Dr. Whitson added.

Dr. Fuller-Thomson emphasized that practitioners should “consider the full impact of sensory impairment on cognitive testing methods, as both auditory and visual testing methods may fail to take hearing and vision impairment into account.”

Thus, “when performing cognitive tests on older adults with sensory impairments, practitioners should ensure they are communicating audibly and/or using visual speech cues for hearing-impaired individuals, eliminating items from cognitive tests that rely on vision for those who are visually impaired, and using physical cues for individuals with hearing or dual sensory impairment, as this can help increase the accuracy of testing and prevent confounding,” she said.

The study by Fuller-Thomson et al. was funded by a donation from Janis Rotman. Its investigators have reported no relevant financial relationships. The study by Hwang et al. was funded by contracts from the National Heart, Lung, and Blood Institute, the National Institute of Neurological Disorders and Stroke, and the National Institute on Aging. Dr. Hwang reports no relevant financial relationships. The other investigators’ disclosures are listed in the original article. Dr. Reed received grants from the National Institute on Aging during the conduct of the study and has served on the advisory board of Neosensory outside the submitted work. Dr. Oh and Dr. Whitson report no relevant financial relationships.

A version of this article first appeared on Medscape.com.

The combination of hearing loss and vision loss is linked to an eightfold increased risk of cognitive impairment, new research shows.

Investigators analyzed data on more than 5 million U.S. seniors. Adjusted results show that participants with hearing impairment alone had more than twice the odds of also having cognitive impairment, while those with vision impairment alone had more than triple the odds of cognitive impairment.

Those with dual sensory impairment (DSI) had eightfold higher odds of cognitive impairment.

In addition, half of the participants with DSI also had cognitive impairment. Of those with cognitive impairment, 16% had DSI, compared with only about 2% of their peers without cognitive impairment.
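The "odds" language in these results can be made concrete with a little arithmetic. The sketch below computes a crude (unadjusted) odds ratio from the two rounded proportions quoted above: about 16% of people with cognitive impairment had DSI, versus about 2% of those without it. It is an illustration only, not the investigators' analysis. It assumes the rounded figures are exact and compares DSI with all other respondents rather than with those who had no sensory impairment. It also applies none of the adjustment for age, race/ethnicity, sex, education, and income used in the study. The function names are hypothetical. In a simple two-by-two table, the odds ratio for "DSI given cognitive impairment" equals the odds ratio for "cognitive impairment given DSI", so either framing gives the same number.

```python
def odds(p: float) -> float:
    """Convert a proportion p (0 < p < 1) into odds, p / (1 - p)."""
    return p / (1.0 - p)


def odds_ratio(p_exposed_cases: float, p_exposed_controls: float) -> float:
    """Crude (unadjusted) odds ratio from the share exposed in two groups.

    Here "exposed" means having DSI, "cases" are people with cognitive
    impairment, and "controls" are people without it (hypothetical framing
    for illustration only).
    """
    return odds(p_exposed_cases) / odds(p_exposed_controls)


# Rounded proportions reported in the article (assumed exact for this sketch).
crude_or = odds_ratio(0.16, 0.02)
print(f"Crude odds ratio: {crude_or:.1f}")  # prints "Crude odds ratio: 9.3"
```

The crude figure is of a similar magnitude to the reported eightfold adjusted estimate. The two are not directly comparable, however, because they use different reference groups and only the published estimate adjusts for confounders.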

“The findings of the present study may inform interventions that can support older people with concurrent sensory impairment and cognitive impairment,” said lead author Esme Fuller-Thomson, PhD, professor, Factor-Inwentash Faculty of Social Work, University of Toronto.

“Special attention, in particular, should be given to those aged 65-74 who have serious hearing and/or vision impairment [because], if the relationship with dementia is found to be causal, such interventions can potentially mitigate the development of cognitive impairment,” said Dr. Fuller-Thomson, who is also director of the Institute for Life Course and Aging and a professor in the department of family and community medicine and faculty of nursing, all at the University of Toronto.

The findings were published online in the Journal of Alzheimer’s Disease Reports.

Sensory isolation

Hearing and vision impairment increase with age; it is estimated that one-third of U.S. adults between the ages of 65 and 74 experience hearing loss, and 4% experience vision impairment, the investigators note.

“The link between dual hearing loss and seeing loss and mental health problems such as depression and social isolation have been well researched, but we were very interested in the link between dual sensory loss and cognitive problems,” Dr. Fuller-Thomson said.

Additionally, “there have been several studies in the past decade linking hearing loss to dementia and cognitive decline, but less attention has been paid to cognitive problems among those with DSI, despite this group being particularly isolated,” she said. Existing research into DSI suggests an association with cognitive decline; the current investigators sought to expand on this previous work.

To do so, they merged data from 10 consecutive annual waves (2008-2017) of the American Community Survey (ACS), conducted by the U.S. Census Bureau. The ACS is a nationally representative sample of 3.5 million randomly selected U.S. addresses and includes both community-dwelling adults and those living in institutional settings.

Participants aged 65 or older (n = 5,405,135; 56.4% women) were asked yes/no questions regarding serious cognitive impairment, hearing impairment, and vision impairment. A proxy, such as a family member or nursing home staff member, provided answers for individuals not capable of self-report.

Potential confounding variables included age, race/ethnicity, sex, education, and household income.

Potential mechanisms

Results showed that, compared with their peers without cognitive impairment, those with cognitive impairment had a higher prevalence of hearing impairment, vision impairment, and DSI, and a lower percentage of them had no sensory impairment (P < .001).

The prevalence of DSI climbed with age, from 1.5% for respondents aged 65-74 years to 2.6% for those aged 75-84 and to 10.8% in those 85 years and older.

Individuals with higher levels of poverty also had higher levels of DSI. The prevalence of DSI was higher among those who had not completed high school than among high school or university graduates (6.3% vs. 3.1% and 1.8%, respectively).

After controlling for age, race, education, and income, the researchers found “substantially” higher odds of cognitive impairment in those with vs. those without sensory impairments.