Adding palbociclib upped responses in previously treated MCL

An early study adding palbociclib to ibrutinib in previously treated patients with mantle cell lymphoma (MCL) showed a higher complete response rate than what has previously been reported for single-agent ibrutinib, according to investigators.

Results from the phase 1 trial (NCT02159755) support preclinical models, suggesting that the CDK4/6 inhibitor palbociclib may be able to help overcome resistance to ibrutinib, an inhibitor of Bruton’s tyrosine kinase (BTK).

These findings set the stage for an ongoing phase 2 multicenter study, reported lead author Peter Martin, MD, of Weill Cornell Medicine in New York and his colleagues.

The present study involved 27 patients with previously treated MCL, the investigators wrote in Blood. Of these, 21 were men and 6 were women, all of whom had adequate organ and bone marrow function, good performance status, and no previous treatment with CDK4/6 or BTK inhibitors.

Patients were randomly grouped into five dose levels of each drug: Ibrutinib doses ranged from 280-560 mg, and palbociclib from 75-125 mg. Ibrutinib was given daily and palbociclib was administered for 21 out of 28 days per cycle. Therapy continued until withdrawal, unacceptable toxicity, or disease progression.

The primary objective was to determine the phase 2 dose. Secondarily, the investigators sought to determine activity and toxicity profiles. The maximum tolerated doses were ibrutinib 560 mg daily plus palbociclib 100 mg on days 1-21 of each 28-day cycle.

Across all patients, the complete response rate was 37%, compared with 21% for ibrutinib monotherapy in a previous trial. About two-thirds of patients had a response of any kind, which aligns closely with the overall response rate previously reported for ibrutinib alone (67% vs. 68%). After a median follow-up of 25.6 months in survivors, the 2-year progression-free survival rate was 59.4%. The 2-year overall survival rate was 60.6%.

The dose-limiting toxicity was grade 3 rash, which occurred in two out of five patients treated at the highest doses. The most common grade 3 or higher toxicities were neutropenia (41%) and thrombocytopenia (30%), followed by hypertension (15%), febrile neutropenia (15%), lung infection (11%), fatigue (7%), upper respiratory tract infection (7%), hyperglycemia (7%), rash (7%), myalgia (7%), and increased alanine transaminase/aspartate aminotransferase (7%).

“Although BTK-inhibitor-based combinations appear promising, the degree to which they improve upon single-agent ibrutinib is unclear,” the investigators wrote, noting that a phase 2 trial (NCT03478514) is currently underway and uses the maximum tolerated doses.

The phase 1 trial was sponsored by the National Cancer Institute. Study funding was provided by the Sarah Cannon Fund at the HCA Foundation. The investigators reported financial relationships with Janssen, Gilead, AstraZeneca, Celgene, Karyopharm, and others.

SOURCE: Martin P et al. Blood. 2019 Jan 28. doi: 10.1182/blood-2018-11-886457.

FROM BLOOD

Key clinical point:

Major finding: The complete response rate for the combination treatment was 37%.

Study details: A prospective, phase 1 trial of 27 patients with previously treated MCL.

Disclosures: The trial was sponsored by the National Cancer Institute. Funding was provided by the Sarah Cannon Fund at the HCA Foundation. The investigators reported financial relationships with Janssen, Gilead, AstraZeneca, Celgene, Karyopharm, and others.

Source: Martin P et al. Blood. 2019 Jan 28. doi: 10.1182/blood-2018-11-886457.

Fitusiran is reversible during dosing suspension

PRAGUE – The effects of fitusiran, a novel small interfering RNA therapeutic that decreases antithrombin (AT) synthesis and bleeding in patients with hemophilia A or B, with or without inhibitors, reverse upon dosing cessation and return with resumption of dosing. That finding is based on data obtained before, during, and after a phase 2 study dosing suspension.

In September 2017, the fitusiran open-label extension study was suspended to investigate a fatal thrombotic event. The suspension was lifted 3 months later, and the trial continued with reduced doses of replacement factors or bypassing agents for breakthrough bleeds.

The pause in the study was used to gather data about the effects of dosing cessation and resumption, which were reported by coauthor Craig Benson, MD, of Sanofi Genzyme in Cambridge, Mass. Dr. Benson presented findings at the annual congress of the European Association for Haemophilia and Allied Disorders on behalf of lead author John Pasi, MD, PhD, of the Haemophilia Centre at the Royal London Hospital and colleagues.

Prior to the suspension, 28 patients with hemophilia A or B, with or without inhibitors, were given 50-80 mg of fitusiran for up to 20 months with a median dose duration of 11 months. During dosing suspension, the investigators measured AT levels, thrombin generation, and annualized bleeding rates (ABR).

“We can see that the antithrombin knockdown effect of fitusiran is reversible with dosing hold,” Dr. Benson said, referring to an upward trend of median AT level.

Within 4 months of stopping fitusiran, median AT level was 60% of normal. This level continued to rise to about 90% by month 7, before returning to normal at month 11.

“This is an AT recovery we had previously seen by a few subjects that had discontinued [fitusiran] prior to the clinical hold,” Dr. Benson said, “but here, with a much larger sample size, we see a fairly consistent AT recovery.”

As expected, while AT levels rose, thrombin generation showed an inverse trend. “We see a similar temporal pattern,” Dr. Benson said. “The bulk of thrombin generation decrease is seen by the 4th month off fitusiran.”

When patients restarted fitusiran after the suspension was lifted, AT levels dropped to about 30% of normal by month 1 and about 20% of normal from month 2 onward. Again, in an inverse manner, thrombin generation increased.

Clinically, changes in AT and thrombin generation before, during, and after study suspension were reflected in median ABR, which rose from 1.43 events per year prior to cessation to 6.47 events per year during treatment hold before falling to 1.25 events per year after restarting fitusiran.
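As a rough illustration of the size of these shifts, the reported median ABR values can be compared directly. This is only a sketch using the three medians quoted above; ratios of medians are not a formal effect estimate, and the variable names are illustrative rather than taken from the study.

```python
# Median annualized bleeding rates (events per year) as reported in the article.
abr_before_hold = 1.43   # on fitusiran, prior to dosing suspension
abr_during_hold = 6.47   # during the treatment hold
abr_after_restart = 1.25 # after fitusiran was restarted

# Fold increase in median ABR while dosing was suspended.
fold_increase_on_hold = round(abr_during_hold / abr_before_hold, 1)

# Percentage drop in median ABR after restarting, relative to the hold period.
pct_drop_after_restart = round(100 * (1 - abr_after_restart / abr_during_hold))

print(f"Median ABR rose about {fold_increase_on_hold}-fold during the hold")
print(f"Median ABR fell about {pct_drop_after_restart}% after restart")
```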

Overall, the results support the efficacy and reversibility of fitusiran. The agent is continuing to be studied in the phase 3 ATLAS trials.

The study was funded by Sanofi Genzyme and Alnylam. Dr. Benson is an employee of Sanofi Genzyme. Other investigators reported financial ties to Sanofi Genzyme, Alnylam, Baxalta, Octapharma, Pfizer, Shire, and others.

SOURCE: Pasi J et al. EAHAD 2019, Abstract OR16.

REPORTING FROM EAHAD 2019

Key clinical point:

Major finding: Median antithrombin returned to levels greater than 60% of normal 4 months after stopping fitusiran.

Study details: A phase 2, open-label study involving 28 patients with hemophilia A or B.

Disclosures: The study was funded by Sanofi Genzyme and Alnylam. Dr. Benson is an employee of Sanofi Genzyme. Other investigators reported financial affiliations with Sanofi Genzyme, Alnylam, Baxalta, Octapharma, Pfizer, Shire, and others.

Source: Pasi J et al. EAHAD 2019, Abstract OR16.

Targeted triplet shows potential for B-cell cancers

A triplet combination of targeted agents ublituximab, umbralisib, and ibrutinib may be a safe and effective regimen for patients with chronic lymphocytic leukemia/small lymphocytic lymphoma (CLL/SLL) and other B-cell malignancies, according to early study results.

The phase 1 trial had an overall response rate of 84% and a favorable safety profile, reported lead author Loretta J. Nastoupil, MD, of the University of Texas MD Anderson Cancer Center, Houston, and her colleagues. The results suggest that the regimen could eventually serve as a nonchemotherapeutic option for patients with B-cell malignancies.

“Therapeutic targeting of the B-cell receptor signaling pathway has revolutionized the management of B-cell lymphomas,” the investigators wrote in the Lancet Haematology. “Optimum combinations that result in longer periods of remission, possibly allowing for discontinuation of therapy, are needed.”

The present triplet combination included ublituximab, an anti-CD20 monoclonal antibody; ibrutinib, a Bruton tyrosine kinase inhibitor; and umbralisib, a phosphoinositide 3-kinase delta inhibitor.

A total of 46 patients with CLL/SLL or relapsed/refractory B-cell non-Hodgkin lymphoma received at least one dose of the combination in dose-escalation or dose-expansion study sections.

In the dose-escalation group (n = 24), ublituximab was given intravenously at 900 mg, ibrutinib was given orally at 420 mg for CLL and 560 mg for B-cell non-Hodgkin lymphoma, and umbralisib was given orally at three dose levels: 400 mg, 600 mg, and 800 mg.

In the dose-expansion group (n = 22), umbralisib was set at 800 mg while the other agents remained at the previous doses; treatment continued until intolerance or disease progression occurred. The investigators monitored efficacy and safety at defined intervals.

Results showed that 37 out of 44 evaluable patients (84%) had partial or complete responses to therapy.

Among the 22 CLL/SLL patients, there was a 100% overall response rate for both previously treated and untreated patients. Similarly, all three of the patients with marginal zone lymphoma responded, all six of the patients with mantle cell lymphoma responded, and five of seven patients with follicular lymphoma responded. However, only one of the six patients with diffuse large B-cell lymphoma had even a partial response.
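The subtype counts above can be tallied to check that they account for the overall figure of 37 responders among 44 evaluable patients. The sketch below uses only the numbers quoted in this article and assumes that all 22 CLL/SLL patients were evaluable, which the reported 100% response rate implies; the dictionary layout is illustrative.

```python
# (responders, evaluable patients) per disease subtype, as quoted in the article.
# Assumption: all 22 CLL/SLL patients were evaluable and all responded.
responses_by_subtype = {
    "CLL/SLL": (22, 22),
    "marginal zone lymphoma": (3, 3),
    "mantle cell lymphoma": (6, 6),
    "follicular lymphoma": (5, 7),
    "diffuse large B-cell lymphoma": (1, 6),
}

total_responders = sum(r for r, _ in responses_by_subtype.values())
total_evaluable = sum(n for _, n in responses_by_subtype.values())
overall_response_rate = round(100 * total_responders / total_evaluable)

for subtype, (responders, evaluable) in responses_by_subtype.items():
    print(f"{subtype}: {responders}/{evaluable}")
print(f"Overall: {total_responders}/{total_evaluable} ({overall_response_rate}%)")
```

The tally shows that the responses came mostly from CLL/SLL and the indolent lymphomas, while diffuse large B-cell lymphoma accounted for five of the seven nonresponders.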

The most common adverse events of any kind were diarrhea (59%), fatigue (50%), infusion-related reaction (43%), dizziness (37%), nausea (37%), and cough (35%). The most common grade 3 or higher adverse events were neutropenia (22%) and cellulitis (13%).

Serious adverse events were reported in 24% of patients; pneumonia, rash, sepsis, atrial fibrillation, and syncope occurred in two patients each; abdominal pain, pneumonitis, cellulitis, headache, skin infection, pleural effusion, upper gastrointestinal bleeding, pericardial effusion, weakness, and diarrhea occurred in one patient each. No adverse event–related deaths were reported.

“The findings of this study establish the tolerable safety profile of the ublituximab, umbralisib, and ibrutinib triplet regimen in chronic lymphocytic leukemia or small lymphocytic lymphoma and relapsed or refractory B-cell non-Hodgkin lymphoma,” the investigators wrote. “This triplet combination is expected to be investigated further in future clinical trials in different patient populations.”

The study was funded by TG Therapeutics. The authors reported financial relationships with TG Therapeutics and other companies.

SOURCE: Nastoupil LJ et al. Lancet Haematol. 2019 Feb;6(2):e100-9.

FROM LANCET HAEMATOLOGY

Key clinical point:

Major finding: Out of 44 patients, 37 (84%) achieved a partial or complete response to therapy.

Study details: A phase 1, multicenter, dose-escalation and dose-expansion trial involving 46 patients with chronic lymphocytic leukemia, small lymphocytic lymphoma, or relapsed/refractory B-cell non-Hodgkin lymphoma.

Disclosures: The study was funded by TG Therapeutics. The authors reported financial relationships with TG Therapeutics and other companies.

Source: Nastoupil LJ et al. Lancet Haematol. 2019 Feb;6(2):e100-9.

Inhibitor risk nears zero after 75 days in previously untreated hemophilia A

PRAGUE – For previously untreated patients (PUPs) with severe hemophilia A, the risk of developing factor VIII (FVIII) alloantibodies (inhibitors) becomes negligible after 75 exposure days, according to a recent study involving more than 1,000 infants.

This finding answers a long-standing and important question in the management of hemophilia A, reported lead author H. Marijke van den Berg, MD, PhD, of University Medical Centre in Utrecht, The Netherlands.

Inhibitor development is the biggest safety concern facing infants with severe hemophilia A because it affects 25%-35% of the patient population, but no previous studies have adequately described the associated risk profile, she noted.

“Most studies until now collected data until about 50 [exposure days] and not that far beyond,” Dr. van den Berg said at the annual congress of the European Association for Haemophilia and Allied Disorders. “So we were interested to see the serum plateau in our large cohort.”

Such a plateau would represent the time point at which risk of inhibitor development approaches zero.

Dr. van den Berg and her colleagues followed 1,038 PUPs with severe hemophilia A from first exposure to FVIII onward. Data were drawn from the PedNet Registry. From the initial group, 943 patients (91%) were followed until 50 exposure days, and 899 (87%) were followed until 75 exposure days.

Inhibitor development was defined by a minimum of two positive inhibitor titers. In addition to determining the point in time of inhibitor development, the investigators performed a survival analysis for inhibitor incidence and reported median ages at first exposure and at exposure day 75.

The results showed that 298 out of 300 instances of inhibitor development occurred within 75 exposure days, and no inhibitors developed between exposure day 75 and 150. The final two instances occurred at exposure day 249 and 262, both with a low titer.
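The proportions behind these figures can be rechecked with a few lines of arithmetic. The following is only an illustrative sketch: it uses the counts reported in this article, the variable names are hypothetical, and taking the 899 patients followed to 75 exposure days as the denominator for late inhibitors is an assumption made here, not a method stated by the investigators.

```python
# Counts as reported in the article; names are illustrative only.
enrolled = 1038
followed_to_50 = 943
followed_to_75 = 899
inhibitors_total = 300
inhibitors_within_75 = 298


def percent(part, whole):
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole


follow_up_50 = percent(followed_to_50, enrolled)   # share followed to 50 exposure days
follow_up_75 = percent(followed_to_75, enrolled)   # share followed to 75 exposure days
early_share = percent(inhibitors_within_75, inhibitors_total)

# Inhibitors arising after exposure day 75 (assumed denominator: patients
# still under follow-up at 75 exposure days).
late_cases = inhibitors_total - inhibitors_within_75
late_share = percent(late_cases, followed_to_75)

print(f"Followed to 50 exposure days: {follow_up_50:.0f}%")
print(f"Followed to 75 exposure days: {follow_up_75:.0f}%")
print(f"Inhibitors within 75 exposure days: {early_share:.1f}%")
print(f"Late inhibitors among those followed to day 75: {late_share:.2f}%")
```

Under that assumption, the two late cases amount to well under 1% of the patients followed to 75 exposure days.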

Median age at first exposure was 1.1 years, compared with 2.3 years at exposure day 75.

These findings suggest that risk of inhibitors is “near zero” after 75 exposure days and that risk is approaching zero just 1 year after first exposure to FVIII, she said.

The results from this study could affect the design of future clinical trials for PUPs.

“Our recommendation will be to continue frequent [inhibitor] testing until 75 exposure days,” Dr. van den Berg said.

The time frame involved is very short, so close monitoring should be feasible for investigators, she noted.

Dr. van den Berg said that additional data, including Kaplan-Meier curves, would “hopefully” be published in a journal soon.

Dr. van den Berg reported having no relevant financial disclosures.

SOURCE: van den Berg HM et al. EAHAD 2019, Abstract OR05.

REPORTING FROM EAHAD 2019

Key clinical point:

Major finding: Less than 1% of infants with severe hemophilia A developed inhibitors after 75 exposure days.

Study details: An observational study involving 1,038 previously untreated patients with severe hemophilia A, of whom 899 (87%) were followed until 75 exposure days.

Disclosures: Dr. van den Berg reported having no relevant financial disclosures.

Source: van den Berg HM et al. EAHAD 2019, Abstract OR05.

Hemophilia intracranial hemorrhage rates declining

PRAGUE – Despite improvements over the past 60 years, intracranial hemorrhage (ICH) remains a significant complication in hemophilia, occurring most frequently among patients with severe forms of the disease, according to a large-scale meta-analysis involving 56 studies and nearly 80,000 patients.

The consequences of a single incident of ICH can be irreparable and life-changing, so clinician knowledge of incidence rates and risk factors is essential, said lead author Anne-Fleur Zwagemaker, a PhD candidate from Amsterdam University Medical Center.

“Intracranial hemorrhage is one of the most severe and fearful complications in hemophilia,” Ms. Zwagemaker said in a presentation at the annual congress of the European Association for Haemophilia and Allied Disorders. “Our aim was to give more precise estimates of ICH numbers and risk factors in hemophilia.”

The review is notable for its scale and quality. After eliminating studies with fewer than 50 patients or other insufficiencies, the investigators were left with 56 studies conducted between 1960 and 2018, involving 79,818 patients with hemophilia. With a mean observation period of 12 years, the data encompassed almost 1 million person-years.
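The person-years figure follows from the patient count and the mean observation period. The sketch below is a rough check only: it assumes the 12-year mean applies per patient across the pooled cohort, which the presentation did not spell out, and the variable names are illustrative.

```python
# Figures as reported in the article; names are illustrative only.
patients = 79_818
mean_observation_years = 12  # assumed to be a per-patient mean

# Total follow-up time is approximately patients x mean years observed.
person_years = patients * mean_observation_years

print(f"Approximate follow-up: {person_years:,} person-years")
print(f"In millions: {person_years / 1_000_000:.2f}")
```

This gives roughly 0.96 million person-years, consistent with the "almost 1 million" described.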

Across all studies, 1,508 ICH events were reported. Incidence and mortality rates were 400 and 80 per 100,000 person-years, respectively.

To optimize accuracy, the investigators further restricted studies to those with a sample size of at least 365 patients, leading to a pooled incidence rate of 3.8%. Studies with relevant data showed that about half of the cases of ICH (48%) were spontaneous. Regarding most common bleed locations, about two-thirds were either subdural (30%) or intracerebral (32%).

Pooled incidence rates of ICH have decreased steadily over time, from 7%-8% during the 1960-1979 time period, to 5%-6% from 1980-1999, and most recently to about 3%.

Mortality rates during the same time periods decreased in a similar fashion, from 300, to 100, to 75 deaths per 100,000 person-years.

Additional analysis revealed an expected relationship between disease severity and likelihood of ICH. Mild cases of hemophilia had an ICH incidence rate of 0.9%, moderate cases had a rate of 1.3%, and severe cases topped the scale at 4.5%, entailing an incidence rate ratio of 2.7 between severe and nonsevere patients.

“I think our data show that in hemophilia, ICH is still a very important and frequent complication,” Ms. Zwagemaker said. “Luckily, we also see a decline in numbers, but I think it’s still very important that we identify those at risk in hemophilia and that we acknowledge it’s still a very important problem.”

Ms. Zwagemaker reported having no relevant financial disclosures.

SOURCE: Zwagemaker AF et al. EAHAD 2019, Abstract OR08.

REPORTING FROM EAHAD 2019

Key clinical point:

Major finding: The incidence rate of ICH was approximately 7%-8% from 1960 to 1979, compared with approximately 3% from 2000 to 2018.

Study details: A review of 56 studies conducted between 1960 and 2018, involving 79,818 patients with hemophilia.

Disclosures: Ms. Zwagemaker reported having no relevant financial disclosures.

Source: Zwagemaker AF et al. EAHAD 2019, Abstract OR08.

Daratumumab disappoints in non-Hodgkin lymphoma trial

Daratumumab is safe but ineffective for the treatment of patients with relapsed or refractory non-Hodgkin lymphoma (NHL) and CD38 expression of at least 50%, according to findings from a recent phase 2 trial.

Unfortunately, the study met headwinds early on, when initial screening of 112 patients with available tumor samples showed that only about half (56%) had CD38 expression of at least 50%, reported lead author Gilles Salles, MD, PhD, of Claude Bernard University in Lyon, France, and his colleagues. The cutoff was based on preclinical models suggesting that daratumumab-induced cytotoxicity depends on a high level of CD38 expression.

“Only 36 [patients] were eligible for study enrollment, questioning the generalizability of the study population,” the investigators wrote in Clinical Lymphoma, Myeloma & Leukemia.

Of these 36 patients, 15 had diffuse large B-cell lymphoma (DLBCL), 16 had follicular lymphoma (FL), and 5 had mantle cell lymphoma (MCL). Median CD38 expression was 70%. Patients were given 16 mg/kg of IV daratumumab once a week for two cycles, then every 2 weeks for four cycles, and finally on a monthly basis. Cycles were 28 days long. The primary endpoint was overall response rate. Safety and pharmacokinetics were also evaluated.

Results were generally disappointing, with responses occurring in two patients (12.5%) with FL and one patient (6.7%) with DLBCL. No patients with MCL responded before the study was terminated. On a more encouraging note, 10 of 16 patients with FL maintained stable disease.
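The cohort-level response rates can be reproduced from the patient counts given above. This is an illustrative sketch only: the dictionary layout and names are hypothetical, and the combined rate across all 36 patients is derived here from the reported counts rather than quoted from the investigators.

```python
# (responders, cohort size) per lymphoma subtype, as reported in the article.
cohorts = {
    "FL": (2, 16),
    "DLBCL": (1, 15),
    "MCL": (0, 5),
}

# Response rate per cohort, as a percentage rounded to one decimal place.
rates = {
    name: round(100 * responders / size, 1)
    for name, (responders, size) in cohorts.items()
}

# Derived figures (not quoted in the article): pooled rate across cohorts
# and the share of FL patients who maintained stable disease.
total_responders = sum(responders for responders, _ in cohorts.values())
total_patients = sum(size for _, size in cohorts.values())
pooled_rate = round(100 * total_responders / total_patients, 1)
fl_stable_disease = round(100 * 10 / 16, 1)

for name, rate in rates.items():
    print(f"{name}: {rate}%")
print(f"All cohorts: {pooled_rate}% ({total_responders}/{total_patients})")
print(f"FL stable disease: {fl_stable_disease}%")
```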

“All 16 patients in the FL cohort had progressed/relapsed on their prior treatment regimen; therefore, the maintenance of stable disease in the FL cohort may suggest some clinical benefit of daratumumab in this subset of NHL,” the investigators wrote.

Pharmacokinetics and safety data were similar to those from multiple myeloma studies of daratumumab; no new safety signals or instances of immunogenicity were encountered. The most common grade 3 or higher treatment-related adverse event was thrombocytopenia, which occurred in 11.1% of patients. Infusion-related reactions occurred in 72.2% of patients, but none were grade 4 and only three reactions were grade 3.

The investigators suggested that daratumumab may still play a role in NHL treatment, but not as a single agent.

“It is possible that daratumumab-based combination therapy would have allowed for more responses to be achieved within the current study,” the investigators wrote. “NHL is an extremely heterogeneous disease and the identification of predictive biomarkers and molecular genetics may provide new personalized therapies.”

The study was funded by Janssen Research & Development; two study authors reported employment by Janssen. Others reported financial ties to Janssen, Celgene, Roche, Gilead, Novartis, Amgen, and others.

SOURCE: Salles G et al. Clin Lymphoma Myeloma Leuk. 2019 Jan 2. doi: 10.1016/j.clml.2018.12.013.

FROM CLINICAL LYMPHOMA, MYELOMA & LEUKEMIA

Key clinical point:

Major finding: The overall response rate was 12.5% for patients with follicular lymphoma and 6.7% for diffuse large B-cell lymphoma (DLBCL). There were no responders in the mantle cell lymphoma cohort.

Study details: An open-label, phase 2 trial involving 15 patients with diffuse large B-cell lymphoma, 16 patients with follicular lymphoma, and 5 patients with mantle cell lymphoma.

Disclosures: The study was funded by Janssen Research & Development; two study authors reported employment by Janssen. Others reported financial ties to Janssen, Celgene, Roche, Gilead, Novartis, Amgen, and others.

Source: Salles G et al. Clin Lymphoma Myeloma Leuk. 2019 Jan 2. doi: 10.1016/j.clml.2018.12.013.

Combo appears to overcome aggressive L-NN-MCL

Some patients with aggressive leukemic nonnodal mantle cell lymphoma (L-NN-MCL) respond very well to combination therapy with rituximab and ibrutinib, according to two case reports.

Both patients, who had aggressive L-NN-MCL and P53 abnormalities, remain free of disease 18 months after treatment with rituximab/ibrutinib and autologous stem cell transplantation (ASCT), reported Shahram Mori, MD, PhD, of the Florida Hospital Cancer Institute in Orlando, and his colleagues.

The findings suggest that P53 gene status in L-NN-MCL may have a significant impact on prognosis and treatment planning. There are currently no guidelines for risk stratifying L-NN-MCL patients.

“Although the recognition of L-NN-MCL is important to avoid overtreatment, there appears to be a subset of patients who either have a more aggressive form or disease that has transformed to a more aggressive form who present with symptomatic disease and/or cytopenias,” the investigators wrote in Clinical Lymphoma, Myeloma & Leukemia.

The investigators described two such cases in their report. Both patients had leukocytosis with various other blood cell derangements and splenomegaly without lymphadenopathy.

The first patient was a 53-year-old African American man with L-NN-MCL and a number of genetic aberrations, including loss of the P53 gene. After two cycles of rituximab with bendamustine proved ineffective, he was switched to rituximab with cyclophosphamide, vincristine, doxorubicin, and dexamethasone with high-dose methotrexate and cytarabine. This regimen was also ineffective, and his white blood cell count kept rising.

His course improved when he was switched to ibrutinib 560 mg daily and rituximab 375 mg/m² monthly. Within 2 months of starting therapy, his blood abnormalities normalized, and bone marrow biopsy at the end of treatment revealed complete remission without evidence of minimal residual disease. The patient remains in complete remission 18 months after ASCT.

The second patient was a 49-year-old Hispanic man with L-NN-MCL. He had missense mutations in TP53 and KMT2A (MLL), a frameshift mutation in BCOR, and a t(11;14) translocation. Ibrutinib/rituximab was started immediately. After 1 month, his blood levels began to normalize. After five cycles, bone marrow biopsy showed complete remission with no evidence of minimal residual disease. Like the first patient, the second patient remains in complete remission 18 months after ASCT.

“To our knowledge, these are the first two cases of L-NN-MCL with P53 gene mutations/alterations that were successfully treated with a combination of rituximab and ibrutinib,” the investigators wrote. “Our two cases confirm the previous studies by Chapman-Fredricks et al, who also noted P53 gene mutation or deletion is associated with the aggressive course.”

The researchers reported having no financial disclosures.

SOURCE: Mori S et al. Clin Lymphoma Myeloma Leuk. 2019 Feb;19(2):e93-7.

Some patients with aggressive leukemic nonnodal mantle cell lymphoma (L-NN-MCL) respond very well to combination therapy with rituximab and ibrutinib, according to two case reports.

Both patients, who had aggressive L-NN-MCL and P53 abnormalities, remain free of disease 18 months after treatment with rituximab/ibrutinib and autologous stem cell transplantation (ASCT), reported Shahram Mori, MD, PhD, of the Florida Hospital Cancer Institute in Orlando, and his colleagues.

The findings suggest that P53 gene status in L-NN-MCL may have a significant impact on prognosis and treatment planning. There are currently no guidelines for risk stratifying L-NN-MCL patients.

“Although the recognition of L-NN-MCL is important to avoid overtreatment, there appears to be a subset of patients who either have a more aggressive form or disease that has transformed to a more aggressive form who present with symptomatic disease and/or cytopenias,” the investigators wrote in Clinical Lymphoma, Myeloma & Leukemia.

The investigators described two such cases in their report. Both patients had leukocytosis with various other blood cell derangements and splenomegaly without lymphadenopathy.

The first patient was a 53-year-old African American man with L-NN-MCL and a number of genetic aberrations, including loss of the P53 gene. After two cycles of rituximab with bendamustine proved ineffective, he was switched to rituxan with cyclophosphamide, vincristine, adriamycin, and dexamethasone with high-dose methotrexate and cytarabine. This regimen was also ineffective and his white blood cell count kept rising.

His story changed for the better when the patient was switched to ibrutinib 560 mg daily and rituximab 375 mg/m2 monthly. Within 2 months of starting therapy, his blood abnormalities normalized, and bone marrow biopsy at the end of treatment revealed complete remission without evidence of minimal residual disease. The patient remains in complete remission 18 months after ASCT.

The second patient was a 49-year-old Hispanic man with L-NN-MCL. He had missense mutations in TP53 and KMT2A (MLL), a frameshift mutation in BCOR, and a t(11;14) translocation. Ibrutinib/rituximab was started immediately. After 1 month, his blood levels began to normalize. After five cycles, bone marrow biopsy showed complete remission with no evidence of minimal residual disease. Like the first patient, the second patient remains in complete remission 18 months after ASCT.

“To our knowledge, these are the first two cases of L-NN-MCL with P53 gene mutations/alterations that were successfully treated with a combination of rituximab and ibrutinib,” the investigators wrote. “Our two cases confirm the previous studies by Chapman-Fredricks et al, who also noted P53 gene mutation or deletion is associated with the aggressive course.”

The researchers reported having no financial disclosures.

SOURCE: Mori S et al. Clin Lymphoma Myeloma Leuk. 2019 Feb;19(2):e93-7.

FROM CLINICAL LYMPHOMA, MYELOMA & LEUKEMIA

Key clinical point:

Major finding: Two patients with aggressive L-NN-MCL and P53 abnormalities who were treated with rituximab/ibrutinib and autologous stem cell transplantation remain free of disease 18 months later.

Study details: Two case reports.

Disclosures: The authors reported having no financial disclosures.

Source: Mori S et al. Clin Lymphoma Myeloma Leuk. 2019 Feb;19(2):e93-7.

Functional MRI detects consciousness after brain damage

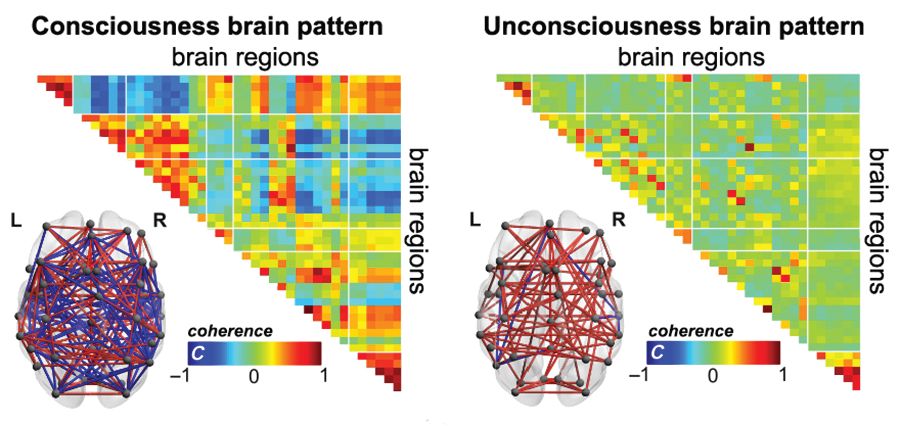

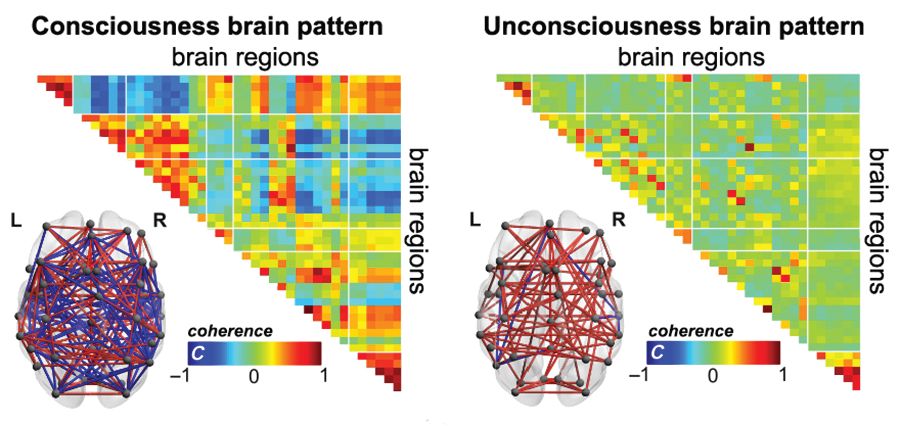

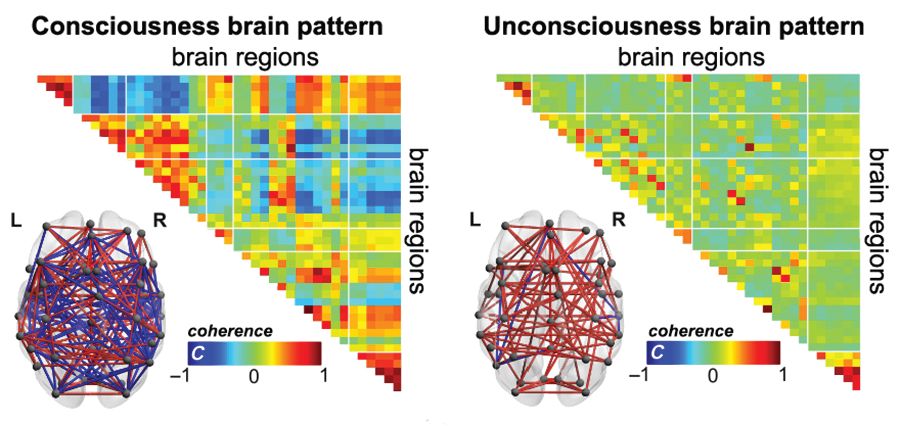

Functional MRI can measure patterns of connectivity to determine levels of consciousness in nonresponsive patients with brain injury, according to results from a multicenter, cross-sectional, observational study.

Blood oxygen level–dependent (BOLD) fMRI showed that brain-wide coordination patterns of high complexity became increasingly common moving from unresponsive patients to those with minimal consciousness to healthy individuals, reported lead author Athena Demertzi, PhD, of GIGA Research Institute at the University of Liège in Belgium, and her colleagues.

“Finding reliable markers indicating the presence or absence of consciousness represents an outstanding open problem for science,” the investigators wrote in Science Advances.

In medicine, an fMRI-based measure of consciousness could supplement behavioral assessments of awareness and guide therapeutic strategies; more broadly, image-based markers could help elucidate the nature of consciousness itself.

“We postulate that consciousness has specific characteristics that are based on the temporal dynamics of ongoing brain activity and its coordination over distant cortical regions,” the investigators wrote. “Our hypothesis stems from the common stance of various contemporary theories which propose that consciousness relates to a dynamic process of self-sustained, coordinated brain-scale activity assisting the tuning to a constantly evolving environment, rather than in static descriptions of brain function.”

There is a need for a reliable way of distinguishing consciousness from unconscious states, the investigators said. “Given that nonresponsiveness can be associated with a variety of brain lesions, varying levels of vigilance, and covert cognition, we highlight the need to determine a common set of features capable of accounting for the capacity to sustain conscious experience.”

To search for patterns of brain signal coordination that correlate with consciousness, four independent research centers performed BOLD fMRI scans of participants at rest or under anesthesia with propofol. Of 159 total participants, 47 were healthy individuals and 112 were patients who, based on standardized behavioral assessments, were either in a vegetative state, also known as unresponsive wakefulness syndrome (UWS), or in a minimally conscious state (MCS). The main data analysis, which included 125 participants, assessed BOLD fMRI signal coordination between six brain networks known to have roles in cognitive and functional processes.

The researchers’ analysis revealed four distinct and recurring brain-wide coordination patterns ranging on a scale from highest activity (pattern 1) to lowest activity (pattern 4). Pattern 1, which exhibited the most long-distance edges, spatial complexity, efficiency, and community structure, became increasingly common when moving from UWS patients to MCS patients to healthy control individuals (UWS < MCS < HC, Spearman rank correlation between rate and group, rho = 0.7, P less than 1 × 10⁻¹⁶).

In contrast, pattern 4, characterized by low interareal coordination, showed the inverse trend; it became less common when moving from UWS patients to MCS patients to healthy individuals (UWS > MCS > HC, Spearman rank correlation between rate and group, rho = –0.6, P less than 1 × 10⁻¹¹). Although patterns 2 and 3 occurred with equal frequency across all groups, the investigators noted that switching between patterns was most common and predictably sequential in healthy individuals, versus patients with UWS, who were least likely to switch patterns. A total of 23 patients who were scanned under propofol anesthesia were equally likely to exhibit pattern 4, regardless of health status, suggesting that pattern 4 depends upon fixed anatomical pathways. Results were not affected by scanning site or other patient characteristics, such as age, gender, etiology, or chronicity.
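
For readers unfamiliar with the statistic, the sketch below shows how a Spearman rank correlation between an ordered group label and a pattern's occurrence rate could be computed. It is only an illustration and not the investigators' analysis code: the group coding (UWS = 0, MCS = 1, HC = 2), the function names, and every rate value are hypothetical, made up purely to show a rising trend (as reported for pattern 1) and a falling trend (as reported for pattern 4).

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in rx))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in ry))
    return cov / (spread_x * spread_y)


# Hypothetical data: ordinal group code per participant (assumed coding:
# UWS = 0, MCS = 1, HC = 2) and invented pattern occurrence rates.
group = [0, 0, 0, 1, 1, 1, 2, 2, 2]
pattern1_rate = [0.05, 0.10, 0.08, 0.12, 0.20, 0.15, 0.25, 0.30, 0.22]
pattern4_rate = [0.60, 0.55, 0.58, 0.45, 0.40, 0.50, 0.30, 0.25, 0.35]

rho_pattern1 = spearman_rho(group, pattern1_rate)  # rate rises with group
rho_pattern4 = spearman_rho(group, pattern4_rate)  # rate falls with group
print(f"pattern 1 rho = {rho_pattern1:.2f}, pattern 4 rho = {rho_pattern4:.2f}")
```

A positive rho means the rate tends to rise from UWS to MCS to HC, and a negative rho means it tends to fall. Because the statistic uses only ranks, it captures a monotonic trend without assuming that the relationship is linear.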

“We conclude that these patterns of transient brain signal coordination are characteristic of conscious and unconscious brain states,” the investigators wrote, “warranting future research concerning their relationship to ongoing conscious content, and the possibility of modifying their prevalence by external perturbations, both in healthy and pathological individuals, as well as across species.”

The study was funded by a James S. McDonnell Foundation Collaborative Activity Award, INSERM, the Belgian National Funds for Scientific Research, the Canada Excellence Research Chairs program, and others. The authors declared having no conflicts of interest.

SOURCE: Demertzi A et al. Sci Adv. 2019 Feb 6. doi: 10.1126/sciadv.aat7603.
