
The Adoption of an Online Journal Club to Improve Research Dissemination and Social Media Engagement Among Hospitalists

Clinicians, educators, and medical journals are increasingly using the social media outlet, Twitter, as a medium to connect and engage with their colleagues. In particular, online journal clubs have created a space for the timely discussion of research, creation of online communities, and dissemination of research.

Social media-based journal clubs are thought to be one way in which journals can leverage the power of social networks so that researchers can engage with a diverse range of end users4 (including bedside clinicians, administrators, and patients). Several examples of these models exist. For example, #GeriMedJC acts as a complementary, synchronous chat that takes place at the same time as a live, in-person journal club. #NephJC offers multiple 1-hour chats per month and provides an in-depth summary and analysis of each article, while #UroJC is an asynchronous discussion that takes place over 48 hours. Few data exist to describe whether any of these programs produce measurable improvements in indicators of engagement or dissemination of results.

In 2015, the Journal of Hospital Medicine (JHM) began producing a Twitter-based journal club as a means to connect and engage the Hospital Medicine community and allow for discussion and rapid exchange of information and opinions around a specific clinical topic. This study aims to describe the implementation of the first journal-sponsored, Twitter-based online journal club and ascertain its impact on both Twitter and journal metrics.

METHODS

#JHMChat was launched in October 2015, and was initially held every 2-3 months until January 2017, when chats began to take place monthly. Each 1-hour chat focused on a recently published article in JHM, was moderated by a JHM social media editor (C.M.W., V.M.A.), and included at least 1 study author or guest expert. Articles were chosen by the social media editors based on the following criteria: (1) attractiveness to possible participants, (2) providing topic variety within the journal club series, and (3) sustainability and topic conduciveness to the online chat model. Chats were held at 9 PM EST in order to engage hospitalists across all US time zones and on different days to accommodate authors’ availability. All sessions were framed by 3-4 questions intended to encourage discussion and presented to chat participants at spaced intervals so as to stimulate a current of responses.

Chats were promoted by way of the JHM (@JHospMedicine, 3400 followers) and Society of Hospital Medicine (SHM; @SHMLive, 5800 followers) Twitter feeds beginning 1 month prior to each session. Visual Abstracts5,6 were used to publicize the sessions, also via Twitter, starting in February 2017.

Continuing Medical Education (CME) credits were offered through the SHM to registered participants, starting in July 2016.7 All sessions were cosponsored by the American Board of Internal Medicine (ABIM) Foundation and the Costs of Care Organization, a non-profit organization aimed at improving healthcare value.

Twitter Metrics

After each session, the following Twitter-based engagement metrics were obtained using the Symplur© Healthcare Hashtag project:8 total number of participants and tweets, tweets/participant, and total impressions (calculated as the number of tweets from each participant multiplied by the number of followers that participant currently had, then summed across all participants). Simply put, impressions can be thought of as the number of times a single tweet makes it into someone else’s Twitter feed. To avoid artificially inflated metrics, all metrics were obtained 2 hours after the end of the journal club. Participants were defined as anyone who posted an original tweet or retweeted during the session and were encouraged to tag their tweets with the hashtag #JHMChat for post-discussion indexing and measurement. Because authors’ or guests’ popularity on Twitter may influence participation rates, we also assessed the number of followers for each participating author. Spearman’s rank correlation was calculated (Microsoft Excel™) where appropriate.
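The impressions calculation described above can be illustrated with a minimal Python sketch. It is not the Symplur implementation; the `Participant` class, the `session_metrics` function, and the handles and counts are hypothetical and serve only to show the arithmetic (tweets multiplied by followers for each participant, summed over all participants).

```python
from dataclasses import dataclass


@dataclass
class Participant:
    handle: str     # hypothetical Twitter handle
    tweets: int     # tweets tagged with the session hashtag
    followers: int  # follower count at the time of measurement


def session_metrics(participants):
    """Return the per-session engagement metrics named in the text."""
    total_tweets = sum(p.tweets for p in participants)
    # Impressions: each participant's tweets times their followers, summed.
    impressions = sum(p.tweets * p.followers for p in participants)
    n = len(participants)
    return {
        "participants": n,
        "tweets": total_tweets,
        "tweets_per_participant": total_tweets / n if n else 0.0,
        "impressions": impressions,
    }


# Illustrative (made-up) session with three participants.
example = [
    Participant("@a", 10, 500),
    Participant("@b", 3, 12000),
    Participant("@c", 1, 40),
]
metrics = session_metrics(example)
print(metrics)
```

In this made-up session, the participant with 12,000 followers contributes most of the impressions despite posting only 3 tweets, which shows how a few accounts with large followings can dominate the metric.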

Altmetrics and Page Views

As a means to measure exposure and dissemination external to Twitter, we assessed the change (“Delta”) in each article’s Altmetric score,9 a digital-based metric that quantifies the attention received by a scientific publication on various online platforms including news, blogs, and social media. Delta Altmetric scores were calculated as the difference between the scores on the day of the session and 2 weeks after the respective session, with higher scores indicating greater global online discussion. By measuring the Altmetric score on the day of the discussion, we established a baseline score for comparison purposes. Additionally, this allowed us to better attribute any changes that may have occurred to the discussion itself.
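As a rough illustration of the Delta Altmetric calculation, the following Python sketch subtracts the session-day baseline from the 2-week score and summarizes the differences. The scores are invented for the example, and the use of the sample standard deviation is an assumption, since the text does not state which form was used.

```python
from statistics import mean, stdev


def delta_altmetric(baseline, followup):
    """Change in Altmetric score: 2-week score minus session-day score."""
    return followup - baseline


# Hypothetical (baseline, 2-week follow-up) scores for three articles.
scores = [(12, 20), (45, 50), (30, 62)]
deltas = [delta_altmetric(b, f) for b, f in scores]

mean_delta = mean(deltas)
sd_delta = stdev(deltas)  # sample SD; an assumption, not stated in the text
print(deltas, mean_delta, round(sd_delta, 1))
```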

Additionally, using information provided by the journal publisher (John Wiley & Sons Publishing) in 2016, we assessed the effect of #JHMChat on the number of article page views on the JHM website relative to the release of the electronic Table of Contents (eTOC). The eTOC release was chosen as it is historically associated with a high number of page views. In order to isolate the effect of #JHMChat, we only reviewed months in which #JHMChat was not held within 3 days of the eTOC release. Because JHM changed publishers in January 2017, we only assessed page view data on 2016 sessions, as the new publisher lacked enhanced search optimization to obtain these data.

Thematic Analysis

In addition to the above measurements, a thematic analysis of each article was conducted to assess any common themes that would influence our chosen metrics. Themes were assessed and ascribed by one author (C.M.W.) and verified by another (V.M.A.).

Participant and Author Experience

To assess the participant experience, we reviewed responses to a post-session CME questionnaire that assessed (1) overall quality, (2) comprehensiveness of the discussion, (3) whether the participant would recommend the chat to a colleague, and (4) whether participation would lead to practice-changing measures. CME registration for each session was also quantified. Finally, each participating author was asked to fill out an electronic post-chat survey (SurveyMonkey®) meant to assess the authors’ experience with the journal club (Appendix).

RESULTS

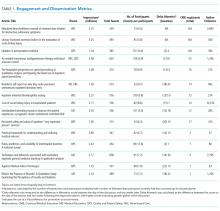

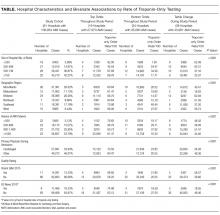

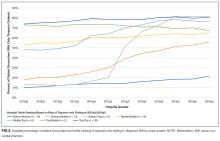

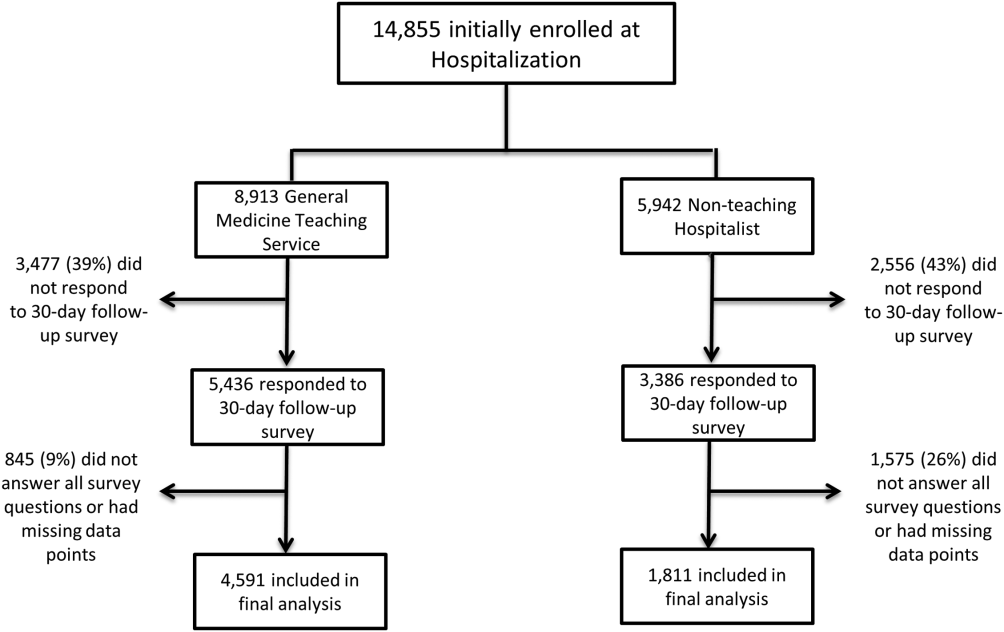

Between October 2015 and November 2017, a total of 15 sessions were held with a mean of 2.17 (±0.583) million impressions/session, 499 (±129) total tweets/session, and 73 (±24) participants/session (compared to a range of 21-58 participants/session from other online journal clubs, where reported) with 7.2 (±2.0) tweets/participant (Table 1). The total number of participants for all sessions was 1096. Participating authors had on average 1389 (±2714) followers, ranging from a low of 37 to a high of 10,376 (Appendix). No correlation between author following and number of participants (r = 0.19), impressions (r = 0.05), or change in Altmetric score (r = 0.17) was seen.
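For readers unfamiliar with the statistic, Spearman's rank correlation is the Pearson correlation of the ranked values. The sketch below is a plain-Python illustration of that definition, not the Excel procedure used in the study; the function names and the follower and participant counts are hypothetical.

```python
from math import sqrt


def average_ranks(values):
    """Assign 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole group of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up author follower counts and session participant counts.
followers = [37, 250, 1200, 10376, 600]
participants = [80, 55, 70, 90, 60]
rho = spearman(followers, participants)
print(round(rho, 2))
```

Because the statistic depends only on ranks, a single author with a very large following (such as the 10,376 in this made-up list) does not dominate the result the way it would in a Pearson correlation on raw counts.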

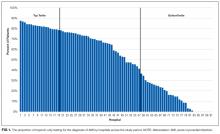

Thematic analysis revealed 3 predominant themes among the chosen articles: Value-based care (VBC), Quality and Patient Safety (QPS), and Medical Education (ME). Articles focused on VBC had the greatest number of impressions (mean ±SD: 2.61 ± 0.55 million) and participants (mean ±SD: 90 ± 12), while QPS articles had the fewest impressions (mean ±SD: 1.71 ± 0.59 million) and number of participants (mean ±SD: 47 ± 16). The mean increase in the Altmetric score among all discussed articles was 14 (±12), from an average baseline of 30 (±37). Medical Education-themed articles appeared to garner the greatest increase in Altmetric scores, averaging an increase of 32 points, compared with an average baseline score of 31 (±32). In contrast, VBC and QPS articles averaged an increase of 8.6 and 8.4 points, from average baselines of 55 (±53) and 17 (±13), respectively. A 2-month analysis of JHM articles not included in these discussions, in which Altmetric scores were measured in the same way as those from the discussion, revealed a baseline Altmetric score of 27 (±24) with an average increase of 8 (±6) 2 weeks following the chat.

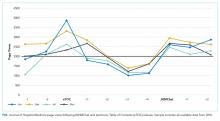

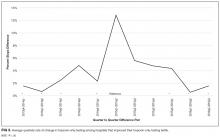

Four articles met the inclusion criteria for page view analysis and suggested that article page views increased to similar levels as the eTOC release (mean: 2668 vs. 2998, respectively; P = .35) (Figure). These increases equate to a 33% and 50% increase in average daily page views (2002) for the chat and eTOC release, respectively.
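The percentage increases quoted above follow from the reported means and the average daily page views. A short Python check of that arithmetic, with `percent_increase` as a hypothetical helper name:

```python
def percent_increase(observed, baseline):
    """Relative increase of observed over baseline, in percent."""
    return (observed - baseline) / baseline * 100


baseline_daily = 2002  # average daily page views reported in the text
chat = percent_increase(2668, baseline_daily)  # mean page views after chats
etoc = percent_increase(2998, baseline_daily)  # mean page views after eTOC

print(round(chat), round(etoc))  # rounds to 33 and 50
```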

Ninety-three percent (14/15) of the participating authors responded to the post-discussion survey. All strongly agreed (5/5 on a Likert scale) that the venue allowed for an in-depth discussion about processes and challenges in conducting the study and allowed for greater dissemination and visibility of their work (5/5). Additionally, authors agreed that the journal club was a valuable experience for themselves (4.88/5) and other practitioners (4.88/5). Most agreed that the journal club allowed them to share their work with a different group of participants than usual (4.75/5) and that the experience changed how they would discuss their manuscripts in the future (4.75/5; Table 2).

DISCUSSION

The Twitter-based journal club #JHMChat appears to increase social media awareness and dissemination of journal articles and was considered a useful engagement platform by both authors and participants.

Articles with a focus on VBC and ME had the greatest impact on dissemination metrics, particularly, total impressions and Altmetric scores, respectively. Given the strong presence and interest in these topics within Twitter and social media, these findings are not surprising.10,11 For example, over the past several years, the VBC movement has taken shape and grown alongside the expansion of social media, thus giving a space for this community to grow and engage. Of note, the cosponsorship relationship with the ABIM Foundation (which works closely with the Choosing Wisely™ campaign) and the Costs of Care Organization could have influenced the participation and dissemination rates of VBC articles. Medical education articles were also popular and appeared to have increased uptake after chats, based on their Altmetric scores. This may be explained by the fact that medical educators have long utilized social media as a means to connect and engage within their community.12–14 It is also possible that the use of Twitter by trainees (residents, students) may have driven some of the dissemination of ME articles, as this group may not be regular subscribers to JHM.

Online journal clubs offer distinct advantages over traditional in-person journal clubs. First, online journal clubs allow for increased connectivity among online communities, bringing together participants from different geographic areas with diverse training and clinical experiences. Subsequently, this allows for the rapid exchange of both personal and organizational approaches to the topic of discussion.15–17 Second, online journal clubs allow for continual access to the discussion material. For example, while the metrics used in this study only assessed active, synchronous participation, anecdotal evidence and feedback to the authors suggest that many individuals passively engaged by following along or reviewed the chat feed post hoc at their convenience. This asynchronous access is a quality not found in more traditional journal club formats. Finally, because online journal clubs commonly operate with a flattened hierarchy,18 they can break down access barriers to both the researchers who performed the study and thought leaders who commonly participate.17

Several lessons were gleaned in the production and management of this online journal club. On the implementation side, promotion, preparation, and continued organization of an online journal club require a fair amount of work. In this case, the required time and resources were provided by 2 social media editors in addition to administrative assistance from the SHM. The high attrition rate of online journal clubs over the years attests to these difficulties.24 Additionally, finding incentives to attract and sustain participation can be difficult, as we noted that neither CME nor author popularity (based on their Twitter following) appeared to influence engagement metrics (number of participants, total tweets, and tweets/participant). We also found that partnering with other journal club communities, in particular #NephJC, led to greater participation rates and impressions. Thus, leveraging connections and topics that span clinical domains may be one way to improve and broaden engagement within these forums. Finally, feedback from participants revealed that the timing of the journal club and the inability to have in-depth discussions, a characteristic commonly associated with traditional journal clubs, were problematic.

This study has several limitations. First, the metrics used to assess social media engagement and dissemination can be easily skewed. For instance, the activity of 1 or 2 individuals with large followings can dramatically influence the number of impressions, giving a falsely elevated sense of broad dissemination. Conversely, there may have been some participants who did not use the #JHMChat hashtag, thus leading to an underestimation in these metrics. Second, while we report total impressions as a measure of dissemination, this metric represents possible interactions and does not guarantee interaction or visualization of that tweet. Additionally, we were unable to characterize our participants and their participation rates over time, as this information is not made available through Symplur© analytics. Third, our page view assessment was limited to 2016 sessions only; therefore, these data may not be an accurate reflection of the impact of #JHMChat on this metric. Fourth, given the marginal response rate to our CME questionnaire, a selection bias could have occurred. Finally, whether social media discussions such as online journal clubs act as leading indicators for future citations remains unclear, as some research has shown an association between increased Altmetric scores and increased citation rates,19-21 while others have not.22,23 Our study was not equipped to assess this correlation.

CONCLUSION

Online journal clubs create new opportunities to connect, engage, and disseminate medical research. These developing forums provide journal editors, researchers, patients, and clinicians with a means to connect and discuss research in ways that were not previously possible. In order to continue to evolve and grow, future research in online journal clubs should explore the downstream effects on citation rates, clinical uptake, and participant knowledge after the sessions.

Acknowledgments

The authors would like to thank Felicia Steele for her assistance in organizing and promoting the chats. Additionally, the authors would like to thank all the authors, guests and participants who took time from their families, work, and daily lives to participate in these activities. Your time and presence were truly appreciated.

Disclosures

The authors of this article operate as the Social Media Editors (C.M.W., V.M.A.) and the Editor-in-Chief (A.A.) for the Journal of Hospital Medicine. Dr. Wray had full access to all the data in the project and takes responsibility for the integrity of the data and the accuracy of the data analysis.

References

1. Topf JM, Sparks MA, Phelan PJ, et al. The evolution of the journal club: from Osler to Twitter. Am J Kidney Dis Off J Natl Kidney Found. 2017;69(6):827-836. doi: 10.1053/j.ajkd.2016.12.012.

2. Thangasamy IA, Leveridge M, Davies BJ, Finelli A, Stork B, Woo HH. International urology journal club via Twitter: 12-month experience. Eur Urol. 2014;66(1):112-117. doi: 10.1016/j.eururo.2014.01.034.

3. Gardhouse AI, Budd L, Yang SYC, Wong CL. #GeriMedJC: the Twitter complement to the traditional-format geriatric medicine journal club. J Am Geriatr Soc. 2017;65(6):1347-1351. doi: 10.1111/jgs.14920.

4. Duque L. How academics and researchers can get more out of social media. Harvard Business Review. https://hbr.org/2016/06/how-academics-and-researchers-can-get-more-out-of-social-media. Accessed November 9, 2017.

5. Wray CM, Arora VM. #VisualAbstract: a revolution in communicating science? Ann Surg. 2017;266(6):e49-e50. doi: 10.1097/SLA.0000000000002339.

6. Ibrahim AM. Seeing is believing: using visual abstracts to disseminate scientific research. Am J Gastroenterol. 2017:ajg2017268. doi: 10.1038/ajg.2017.268.

7. #JHMChat. http://shm.hospitalmedicine.org/acton/media/25526/jhmchat. Accessed November 9, 2017.

8. #JHMChat-healthcare social media. Symplur. https://www.symplur.com/search/%23JHMChat. Accessed November 9, 2017.

9. Altmetric. Altmetric. https://www.altmetric.com/. Accessed November 9, 2017.

10. value-based healthcare | Symplur. https://www.symplur.com/topic/value-based-healthcare/. Accessed November 17, 2017.

11. medical education | Symplur. https://www.symplur.com/topic/medical-education/. Accessed November 17, 2017.

12. Sterling M, Leung P, Wright D, Bishop TF. The use of social media in graduate medical education: a systematic review. Acad Med. 2017;92(7):1043. doi: 10.1097/ACM.0000000000001617.

13. Davis WM, Ho K, Last J. Advancing social media in medical education. CMAJ Can Med Assoc J. 2015;187(8):549-550. doi: 10.1503/cmaj.141417.

14. Hillman T, Sherbino J. Social media in medical education: a new pedagogical paradigm? Postgrad Med J. 2015;91(1080):544-545. doi: 10.1136/postgradmedj-2015-133686.

15. Gerds AT, Chan T. Social media in hematology in 2017: dystopia, utopia, or somewhere in-between? Curr Hematol Malig Rep. 2017;12(6):582-591. doi: 10.1007/s11899-017-0424-8.

16. Mehta N, Flickinger T. The times they are a-changin’: academia, social media and the JGIM Twitter Journal Club. J Gen Intern Med. 2014;29(10):1317-1318. doi: 10.1007/s11606-014-2976-9.

17. Chan T, Trueger NS, Roland D, Thoma B. Evidence-based medicine in the era of social media: scholarly engagement through participation and online interaction. CJEM. 2017:1-6. doi: 10.1017/cem.2016.407.

18. Utengen A. The flattening of healthcare: breaking down of barriers in healthcare social media-twitter visualized. https://www.symplur.com/shorts/the-flattening-of-healthcare-twitter-visualized/. Accessed November 8, 2017.

19. Thelwall M, Haustein S, Larivière V, Sugimoto CR. Do altmetrics work? Twitter and ten other social web services. PloS One. 2013;8(5):e64841. doi: 10.1371/journal.pone.0064841.

20. Peoples BK, Midway SR, Sackett D, Lynch A, Cooney PB. Twitter predicts citation rates of ecological research. PloS One. 2016;11(11):e0166570. doi: 10.1371/journal.pone.0166570.

21. Eysenbach G. Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. J Med Internet Res. 2011;13(4):e123. doi: 10.2196/jmir.2012.

22. Winter JCF de. The relationship between tweets, citations, and article views for PLOS ONE articles. Scientometrics. 2015;102(2):1773-1779. doi: 10.1007/s11192-014-1445-x.

23. Haustein S, Peters I, Sugimoto CR, Thelwall M, Larivière V. Tweeting biomedicine: an analysis of tweets and citations in the biomedical literature. J Assoc Inf Sci Technol. 2014;65(4):656-669. doi: 10.1002/asi.23101.

24. Journal club. In: Wikipedia. 2017. https://en.wikipedia.org/w/index.php?title=Journal_club&oldid=807037773. Accessed November 9, 2017.

Clinicians, educators, and medical journals are increasingly using the social media outlet, Twitter, as a medium to connect and engage with their colleagues. In particular, online journal clubs have created a space for the timely discussion of research, creation of online communities, and dissemination of research.

Social media-based journal clubs are thought to be one way in which journals can leverage the power of social networks so that researchers can engage with a diverse range of end users4 (including bedside clinicians, administrators, and patients). Several examples of these models exist. For example, #GeriMedJC acts as a complimentary, synchronous chat that takes place at the same time as a live, in-person journal club. #NephJC offers multiple 1-hour chats per month and provides an in-depth summary and analysis of each article, while #UroJC is an asynchronous discussion that takes place over 48 hours. Few data exist to describe whether any of these programs produce measurable improvements in indicators of engagement or dissemination of results.

In 2015, the Journal of Hospital Medicine (JHM) began producing a Twitter-based journal club as a means to connect and engage the Hospital Medicine community and allow for discussion and rapid exchange of information and opinions around a specific clinical topic. This study aims to describe the implementation of the first Journal-sponsored, Twitter-based online journal club and ascertain its impact on both Twitter and journal metrics.

METHODS

#JHMChat was launched in October 2015, and was initially held every 2-3 months until January 2017, when chats began to take place monthly. Each 1-hour chat focused on a recently published article in JHM, was moderated by a JHM social media editor (C.M.W., V.M.A.), and included at least 1 study author or guest expert. Articles were chosen by the social media editors based on the following criteria: (1) attractiveness to possible participants, (2) providing topic variety within the journal club series, and (3) sustainability and topic conduciveness to the online chat model. Chats were held at 9 PM EST in order to engage hospitalists across all US time zones and on different days to accommodate authors’ availability. All sessions were framed by 3-4 questions intended to encourage discussion and presented to chat participants at spaced intervals so as to stimulate a current of responses.

Chats were promoted by way of the JHM (@JHospMedicine, 3400 followers) and Society of Hospital Medicine (SHM; @SHMLive, 5800 followers) Twitter feeds beginning 1 month prior to each session. Visual Abstracts5,6 were used to publicize the sessions, also via Twitter, starting in February 2017.

Continuing Medical Education (CME) credits were offered through the SHM to registered participants, starting in July 2016.7 All sessions were cosponsored by the American Board of Internal Medicine (ABIM) Foundation and the Costs of Care Organization, a non-profit organization aimed at improving healthcare value.

Twitter Metrics

After each session, the following Twitter-based engagement metrics were obtained using the Symplur© Healthcare Hashtag project;8 total number of participants and tweets, tweets/participant, and total impressions (calculated as the number of tweets from each participant multiplied by the number of followers that participant currently had then summed up for all participants). Simply put, impressions can also be thought of as the number of times a single Tweet makes it into someone else’s Twitter feed. So as to avoid artificially inflated metrics, all were obtained 2 hours after the end of the journal club. Participants were defined as anyone who posted an original tweet or retweeted during the session and were encouraged to tag their tweets with the hashtag #JHMChat for post-discussion indexing and measurement. Because authors’ or guests’ popularity on Twitter may influence participation rates, we also assessed the number of followers for each participating author. Spearman’s rank correlation was calculated (Microsoft ExcelTM) where appropriate.

Altmetrics and Page Views

As a means to measure exposure and dissemination external to Twitter, we assessed the change (“Delta”) in the each article’s Altmetric score9, a digital-based metric that quantifies the attention received by a scientific publication on various online platforms including news, blogs, and social media. Delta Altmetric scores were calculated as the difference between the scores on the day of the session and 2 weeks after the respective session, with higher scores indicating greater global online discussion. By measuring the Altmetric score on the day of the discussion, we established a baseline score for comparison purposes. Additionally, this allowed us to better attribute any changes that may have occurred to the discussion itself.

Additionally, using information provided by the journal publisher (John Wiley & Sons Publishing) in 2016, we assessed the effect of #JHMChat on the number of article page views on the JHM website relative to the release of the electronic Table of Contents (eTOC). The eTOC release was chosen as it is historically associated with a high number of page views. In order to isolate the effect of #JHMChat, we only reviewed months in which #JHMChat was not held within 3 days of the eTOC release. Because JHM changed publishers in January 2017, we only assessed page view data on 2016 sessions, as the new publisher lacked enhanced search optimization to obtain these data.

Thematic Analysis

In addition to the above measurements, a thematic analysis of each article was conducted to assess any common themes that would influence our chosen metrics. Themes were assessed and ascribed by one author (C.M.W.) and verified by another (V.M.A.).

Participant and Author Experience

To assess the participant experience, responses to a post-session CME questionnaire that assessed (1) overall quality, (2) comprehensiveness of the discussion, (3) whether the participant would recommend the chat to a colleague, and (4) whether participation would lead to practice-changing measures were reviewed. Registration of each session for CME was also quantified. Finally, each participating author was asked to fill out an electronic post-chat survey (SurveyMonkey®) meant to assess the authors’ experience with the journal club (Appendix).

RESULTS

Between October 2015 and November 2017, a total of 15 sessions were held with a mean of 2.17 (±0.583) million impressions/session, 499 (±129) total tweets/session, and 73 (±24) participants/session (compared to a range of 21-58 participants/session from other online journal clubs, where reported) with 7.2 (±2.0) tweets/participant (Table 1). The total number of participants for all sessions was 1096. Participating authors had on average 1389 (±2714) followers, ranging from a low of 37 to a high of 10,376 (Appendix). No correlation between author following and number of participants (r = 0.19), impressions (r = 0.05), or change in Altmetric score (r = 0.17) was seen.

Thematic analysis revealed 3 predominant themes among the chosen articles: Value-based care (VBC), Quality and Patient Safety (QPS), and Medical Education (ME). Articles focused on VBC had the greatest number of impressions (mean ±SD: 2.61 ± 0.55 million) and participants (mean ±SD: 90 ± 12), while QPS articles had the fewest impressions (mean ±SD: 1.71 ± 0.59 million) and number of participants (mean ±SD: 47 ± 16). The mean increase in the Altmetric score among all discussed articles was 14 (±12), from an average baseline of 30 (±37). Medical Education-themed articles appeared to garner the greatest increase in Altmetric scores, averaging an increase of 32 points, compared with an average baseline score of 31 (±32). In contrast, VBC and QPS articles averaged an increase of 8.6 and 8.4 points, from average baselines of 55 (±53) and 17 (±13), respectively. A 2-month analysis of JHM articles not included in these discussions, in which Altmetric scores were measured in the same way as those from the discussion, revealed a baseline Altmetric score of 27 (±24) with an average increase of 8 (±6) 2 weeks following the chat.

Four articles met the inclusion criteria for page view analysis and suggested that article page views increased to similar levels as the eTOC release (mean: 2668 vs. 2998, respectively; P = .35) (Figure). These increases equate to a 33% and 50% increase in average daily page views (2002) for the chat and eTOC release, respectively.

Ninety-three percent (14/15) of the participating authors responded to the post-discussion survey. All strongly agreed (5/5 on a Likert scale) that the venue allowed for an in-depth discussion about processes and challenges in conducting the study and allowed for greater dissemination and visibility of their work (5/5). Additionally, authors agreed that the journal club was a valuable experience for themselves (4.88/5) and other practitioners (4.88/5). Most agreed that the journal club allowed them to share their work with a different group of participants than usual (4.75/5) and that the experience changed how they would discuss their manuscripts in the future (4.75/5.0); Table 2).

DISCUSSION

The Twitter-based journal club #JHMChat appears to increase social media awareness and dissemination of journal articles and was considered a useful engagement platform by both authors and participants.

Articles with a focus on VBC and ME had the greatest impact on dissemination metrics, particularly, total impressions and Altmetric scores, respectively. Given the strong presence and interest in these topics within Twitter and social media, these findings are not surprising.10,11 For example, over the past several years, the VBC movement has taken shape and grown alongside the expansion of social media, thus giving a space for this community to grow and engage. Of note, the cosponsorship relationship with the ABIM Foundation (which works closely with the Choosing Wisely™ campaign) and the Costs of Care Organization could have influenced the participation and dissemination rates of VBC articles. Medical education articles were also popular and appeared to have increased uptake after chats, based on their Altmetric scores. This may be explained by the fact that medical educators have long utilized social media as a means to connect and engage within their community.12–14 It is also possible that the use of Twitter by trainees (residents, students) may have driven some of the dissemination of ME articles, as this group may not be regular subscribers to JHM.

Online journal clubs offer distinct advantages over traditional in-person journal clubs. First, online journal clubs allow for increased connectivity among online communities, bringing together participants from different geographic areas with diverse training and clinical experiences. Subsequently, this allows for the rapid exchange of both personal and organizational approaches to the topic of discussion.15–17 Second, online journal clubs allow for continual access to the discussion material. For example, while the metrics used in this study only assessed active, synchronous participation, anecdotal evidence and feedback to the authors suggests that many individuals passively engaged by following along or reviewed the chat feed post hoc at their convenience. This asynchronous access is a quality not found in more traditional journal club formats. Finally, because online journal clubs commonly operate with a flattened hierarchy,18 they can break down access barriers to both the researchers who performed the study and thought leaders who commonly participate.17

Several insightful lessons were gleaned in the production and management of this online journal club. On the implementation side, promotion, preparation, and continued organization of an online journal club requires a fair amount of work. In this case, the required time and resources were provided by 2 social media editors in addition to administrative assistance from the SHM. The high attrition rate of online journal clubs over the years attests to these difficulties.24 Additionally, finding incentives to attract and sustain participation can be difficult, as we noted that neither CME nor author popularity (based on their Twitter following) appeared to influence engagement metrics (number of participants, total tweets, and tweets/participant). We also found that partnering with other journal club communities, in particular #NephJC, lead to greater participation rates and impressions. Thus, leveraging connections and topics that span clinical domains may be one way to improve and broaden engagement within these forums. Finally, feedback from participants revealed that the timing of the journal club and the inability to have in-depth discussions, a characteristic commonly associated with traditional journal clubs, were problematic.

This study has several limitations. First, the metrics used to assess social media engagement and dissemination can be easily skewed. For instance, the activity of 1 or 2 individuals with large followings can dramatically influence the number of impressions, giving a falsely elevated sense of broad dissemination. Conversely, there may have been some participants who did not use the #JHMChat hashtag, thus leading to an underestimation of these metrics. Second, while we report total impressions as a measure of dissemination, this metric represents possible interactions and does not guarantee that a given tweet was actually seen or engaged with. Additionally, we were unable to characterize our participants and their participation rates over time, as this information is not made available through Symplur© analytics. Third, our page view assessment was limited to 2016 sessions only; therefore, these data may not be an accurate reflection of the impact of #JHMChat on this metric. Fourth, given the marginal response rate to our CME questionnaire, a selection bias could have occurred. Finally, whether social media discussions such as online journal clubs act as leading indicators for future citations remains unclear, as some research has shown an association between increased Altmetric scores and increased citation rates,19–21 while other research has not.22,23 Our study was not equipped to assess this correlation.
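
The first limitation can be illustrated with a minimal sketch. It assumes the impressions definition given in the Methods (each participant's tweet count multiplied by that participant's follower count, summed over all participants). The session data, the handle names, and the helper functions `total_impressions` and `top_share` are hypothetical and are not drawn from Symplur© or from the study data.

```python
from typing import Dict, Tuple

# Maps a participant handle to (tweets posted in the session, follower count).
Activity = Dict[str, Tuple[int, int]]


def total_impressions(activity: Activity) -> int:
    """Sum of tweets x followers over all participants."""
    return sum(tweets * followers for tweets, followers in activity.values())


def top_share(activity: Activity, k: int = 1) -> float:
    """Fraction of total impressions contributed by the k largest contributors."""
    per_user = sorted((t * f for t, f in activity.values()), reverse=True)
    total = sum(per_user)
    return sum(per_user[:k]) / total if total else 0.0


# Hypothetical session: nine participants with 400 followers each,
# plus one account with 50,000 followers. Everyone posts 5 tweets.
session: Activity = {f"user{i}": (5, 400) for i in range(9)}
session["big_account"] = (5, 50_000)

print(total_impressions(session))      # 268000
print(round(top_share(session), 3))    # 0.933
```

In this made-up session, a single account contributes roughly 93% of the impressions while posting only one-tenth of the tweets, which is the kind of skew described above.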

CONCLUSION

Online journal clubs create new opportunities to connect, engage, and disseminate medical research. These developing forums provide journal editors, researchers, patients, and clinicians with a means to connect and discuss research in ways that were not previously possible. For these forums to continue to evolve and grow, future research on online journal clubs should explore the downstream effects on citation rates, clinical uptake, and participant knowledge after the sessions.

Acknowledgments

The authors would like to thank Felicia Steele for her assistance in organizing and promoting the chats. Additionally, the authors would like to thank all the authors, guests, and participants who took time from their families, work, and daily lives to participate in these activities. Your time and presence were truly appreciated.

Disclosures

The authors of this article serve as the Social Media Editors (C.M.W., V.M.A.) and the Editor-in-Chief (A.A.) for the Journal of Hospital Medicine. Dr. Wray had full access to all the data in the project and takes responsibility for the integrity of the data and the accuracy of the data analysis.

1. Topf JM, Sparks MA, Phelan PJ, et al. The evolution of the journal club: from Osler to Twitter. Am J Kidney Dis. 2017;69(6):827-836. doi: 10.1053/j.ajkd.2016.12.012.

2. Thangasamy IA, Leveridge M, Davies BJ, Finelli A, Stork B, Woo HH. International urology journal club via Twitter: 12-month experience. Eur Urol. 2014;66(1):112-117. doi: 10.1016/j.eururo.2014.01.034.

3. Gardhouse AI, Budd L, Yang SYC, Wong CL. #GeriMedJC: the Twitter complement to the traditional-format geriatric medicine journal club. J Am Geriatr Soc. 2017;65(6):1347-1351. doi: 10.1111/jgs.14920.

4. Duque L. How academics and researchers can get more out of social media. Harvard Business Review. https://hbr.org/2016/06/how-academics-and-researchers-can-get-more-out-of-social-media. Accessed November 9, 2017.

5. Wray CM, Arora VM. #VisualAbstract: a revolution in communicating science? Ann Surg. 2017;266(6):e49-e50. doi: 10.1097/SLA.0000000000002339.

6. Ibrahim AM. Seeing is believing: using visual abstracts to disseminate scientific research. Am J Gastroenterol. 2017:ajg2017268. doi: 10.1038/ajg.2017.268.

7. #JHMChat. http://shm.hospitalmedicine.org/acton/media/25526/jhmchat. Accessed November 9, 2017.

8. #JHMChat-healthcare social media. Symplur. https://www.symplur.com/search/%23JHMChat. Accessed November 9, 2017.

9. Altmetric. Altmetric. https://www.altmetric.com/. Accessed November 9, 2017.

10. value-based healthcare | Symplur. https://www.symplur.com/topic/value-based-healthcare/. Accessed November 17, 2017.

11. medical education | Symplur. https://www.symplur.com/topic/medical-education/. Accessed November 17, 2017.

12. Sterling M, Leung P, Wright D, Bishop TF. The use of social media in graduate medical education: a systematic review. Acad Med. 2017;92(7):1043. doi: 10.1097/ACM.0000000000001617.

13. Davis WM, Ho K, Last J. Advancing social media in medical education. CMAJ. 2015;187(8):549-550. doi: 10.1503/cmaj.141417.

14. Hillman T, Sherbino J. Social media in medical education: a new pedagogical paradigm? Postgrad Med J. 2015;91(1080):544-545. doi: 10.1136/postgradmedj-2015-133686.

15. Gerds AT, Chan T. Social media in hematology in 2017: dystopia, utopia, or somewhere in-between? Curr Hematol Malig Rep. 2017;12(6):582-591. doi: 10.1007/s11899-017-0424-8.

16. Mehta N, Flickinger T. The times they are a-changin’: academia, social media and the JGIM Twitter Journal Club. J Gen Intern Med. 2014;29(10):1317-1318. doi: 10.1007/s11606-014-2976-9.

17. Chan T, Trueger NS, Roland D, Thoma B. Evidence-based medicine in the era of social media: scholarly engagement through participation and online interaction. CJEM. 2017:1-6. doi: 10.1017/cem.2016.407.

18. Utengen A. The flattening of healthcare: breaking down of barriers in healthcare social media-twitter visualized. https://www.symplur.com/shorts/the-flattening-of-healthcare-twitter-visualized/. Accessed November 8, 2017.

19. Thelwall M, Haustein S, Larivière V, Sugimoto CR. Do altmetrics work? Twitter and ten other social web services. PLoS One. 2013;8(5):e64841. doi: 10.1371/journal.pone.0064841.

20. Peoples BK, Midway SR, Sackett D, Lynch A, Cooney PB. Twitter predicts citation rates of ecological research. PLoS One. 2016;11(11):e0166570. doi: 10.1371/journal.pone.0166570.

21. Eysenbach G. Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. J Med Internet Res. 2011;13(4):e123. doi: 10.2196/jmir.2012.

22. de Winter JCF. The relationship between tweets, citations, and article views for PLOS ONE articles. Scientometrics. 2015;102(2):1773-1779. doi: 10.1007/s11192-014-1445-x.

23. Haustein S, Peters I, Sugimoto CR, Thelwall M, Larivière V. Tweeting biomedicine: an analysis of tweets and citations in the biomedical literature. J Assoc Inf Sci Technol. 2014;65(4):656-669. doi: 10.1002/asi.23101.

24. Journal club. In: Wikipedia. 2017. https://en.wikipedia.org/w/index.php?title=Journal_club&oldid=807037773. Accessed November 9, 2017.

© 2018 Society of Hospital Medicine

FYI: This Message Will Interrupt You – Texting Impact on Clinical Learning Environment

Fifteen years ago, beepers with 5-digit call-back numbers were the norm. Pushing a call light button outside the patient’s room to flag the desk clerk that a new order had been hand-written was all part of the lived experience of residency. Using that as our baseline, we have clearly come a long way in the way that we communicate with other clinicians in hospitals. Communication among the patient care team in the digital age predominantly involves bidirectional messaging using mobile devices. The approach is both immediate and convenient. Mobile devices can improve work efficiency, patient safety, and quality of care, but their main advantage may be real-time bedside decision support.1,2 However, the widespread use of mobile devices for communication in healthcare is not without its concerns. First and foremost, there has been abundant literature around short message service (SMS) use in the healthcare setting, and there are concerns surrounding both threats to privacy and the prevalence and impact of interruptions in clinical care.

The first SMS was sent in 1992.3 Text messaging has since become ubiquitous, even in healthcare, raising concerns around the protection of patient health information under the Health Insurance Portability and Accountability Act (HIPAA). Interestingly, the United States Department of Health and Human Services Office for Civil Rights, the enforcer of HIPAA, is tech neutral on the subject.3 Multiple studies have assessed physician stances on SMS communication in the healthcare setting using routine, non-HIPAA-compliant mobile phones. Overall, 60%-80% of respondents admitted to using SMS in patient care, while in another study, 72% and 80% of Internal Medicine residents surveyed found SMS to be the most efficient form of communication and overall preferred method of communication, respectively.3,4 Interestingly, 82.5% of those same residents preferred the hospital-based alphanumeric paging system for security purposes, even though Freundlich et al. make a compelling argument that unidirectional alphanumeric paging systems are most certainly less HIPAA compliant, lacking encryption and password protection.5 Newer platforms that enable HIPAA-compliant messaging are promising, although they may not be fully adopted by clinical teams without full-scale implementation in hospitals.6

In addition to privacy concerns with SMS applications on mobile phones, interruptions in healthcare (be it from phone calls, emails, text messages, or in-person conversations) are common. In fact, communication researcher Enrico Coiera has famously described healthcare communication as “interrupt-driven.”7 Prior work has shown that frequent interruptions in the healthcare setting can lead to medication prescription errors, errors in computerized physician order entry, and even surgical procedural errors.8-10

While studies have focused on interruptions in clinical care in the healthcare setting, little is known about how education may be compromised by interruptions due to mobile devices. Text messaging during dedicated conference time can lead to inadequate learning and a sense of frustration among residents. In this issue of the Journal of Hospital Medicine, Mendel et al. performed a quality improvement study involving eight academic inpatient clinical training units with the aim of reducing nonurgent text messages during education rounds.11 Their unique interventions included learning sessions, posters, adding alerts to the digital communication platform, and alternative messaging options. Of four sequential interventions, a message alerting the sender that they will be interrupting educational rounds and suggesting a “delayed send” or “send as an FYI” showed the greatest impact, reducing the number of text interruptions per team per educational hour from 0.81 to 0.59 (95% CI 0.51-0.67). When comparing a four-week pre-intervention sample with a four-week end-intervention sample, the percentage of nonurgent messages decreased from 82% to 68% (P < .01).

While these results are promising, challenges to large-scale implementation of such a program exist. Buy-in from the ancillary healthcare team is critical for such interventions to succeed and be sustained. Such a program also places a burden of “point triage” on healthcare team members, who must assess the patient situation, determine the level of urgency, and decide whether to interrupt immediately, delay the interruption, or send an FYI message. For example, in the study by Mendel et al.,11 it is noteworthy that urgent patient care issues were mislabeled as “FYI” in 2% of patients. While this is a seemingly low rate, even one of these mislabeled messages could result in significant adverse patient outcomes and should be considered a “never event.” Finally, the study used a messaging platform with programming flexibility and IT personnel to assist. This could be cost prohibitive for some programs, especially if rolled out to an entire institution.

Communication is critical for effective patient care; unfortunately, the timing of such communication is often chaotic rather than orderly. Text message communication can introduce interruptions into all aspects of patient care and education, not only dedicated learning conferences. If the goal is for all residents to attend all conferences, it seems impossible (and likely dangerous) to eliminate all messaging interruptions during conference hours. Nevertheless, it is worth noting that Mendel et al. have moved us creatively toward that goal with a multifaceted approach.11 Future work should address more downstream outcomes, such as objective resident learning retention and adverse patient events relative to the number of interruptions per educational hour. If such studies showed improved learning outcomes and fewer adverse patient events, the next step would be to further strengthen and refine their protocol with real-time and scheduled feedback sessions between providers and other patient care team members, in addition to the continued search for additional innovative approaches. In addition, incorporating artificial intelligence or predictive modeling may help us delineate when an interruption is warranted, for example, when a patient is at high clinical risk without intervention. Likewise, human factors research may help us understand the best way to time and execute an interruption to minimize the risk to clinical care or education. After all, the ideal system would not eliminate interruptions entirely but would allow clinicians to know when someone should be interrupted and when they do not need to be interrupted.

Disclosures

The authors have no financial relationships relevant to this article to disclose.

1. Berner ES, Houston TK, Ray MN, et al. Improving ambulatory prescribing safety with a handheld decision support system: a randomized controlled trial. J Am Med Inform Assoc. 2006;13(2):171-179. doi: 10.1197/jamia.M1961.

2. Sintchenko V, Iredell JR, Gilbert GL, et al. Handheld computer-based decision support reduces patient length of stay and antibiotic prescribing in critical care. J Am Med Inform Assoc. 2005;12(4):398-402. doi: 10.1197/jamia.M1798.

3. Drolet BC. Text messaging and protected health information: what is permitted? JAMA. 2017;317(23):2369-2370. doi: 10.1001/jama.2017.5646.

4. Prochaska MT, Bird AN, Chadaga A, Arora VM. Resident use of text messaging for patient care: ease of use or breach of privacy? JMIR Med Inform. 2015;3(4):e37. doi: 10.2196/medinform.4797.

5. Samora JB, Blazar PE, Lifchez SD, et al. Mobile messaging communication in health care: rules, regulations, penalties, and safety of provider use. JBJS Rev. 2018;6(3):e4. doi: 10.2106/JBJS.RVW.17.00070.

6. Freundlich RE, Freundlich KL, Drolet BC. Pagers, smartphones, and HIPAA: finding the best solution for electronic communication of protected health information. J Med Syst. 2017;42(1):9. doi: 10.1007/s10916-017-0870-9.

7. Coiera E. Clinical communication: a new informatics paradigm. In: Proceedings of the American Medical Informatics Association Autumn Symposium. 1996;17-21.

8. Feuerbacher RL, Funk KH, Spight DH, et al. Realistic distractions and interruptions that impair simulated surgical performance by novice surgeons. Arch Surg. 2012;147(11):1026-1030. doi: 10.1001/archsurg.2012.1480.

9. Agency for Healthcare Research and Quality–Patient Safety Network (AHRQ-PSNet). Cases & Commentaries. Order Interrupted by Text: Multitasking Mishap. December 2011. Commentary by John Halamka, MD, MS. https://psnet.ahrq.gov/webmm/case/257/order-interrupted-by-text-multitasking-mishap

10. Westbrook JI, Raban MZ, Walter SR, et al. Task errors by emergency physicians are associated with interruptions, multitasking, fatigue and working memory capacity: a prospective, direct observation study [published online ahead of print January 9, 2018]. BMJ Qual Saf. doi: 10.1136/bmjqs-2017-007333.

11. Mendel A, Lott A, Lo L, et al. A matter of urgency: reducing clinical text message interruptions during educational sessions. J Hosp Med. 2018;13(9):616-622. doi: 10.12788/jhm.2959.

Fifteen years ago, beepers with 5-digit call-back numbers were the norm. Pushing a call light button outside the patient’s room to flag the desk clerk that a new order had been hand-written was all part of the lived experience of residency. Using that as our baseline, we have clearly come a long way in how we communicate with other clinicians in hospitals. Communication among the patient care team in the digital age predominantly involves bidirectional messaging using mobile devices. The approach is both immediate and convenient. Mobile devices can improve work efficiency, patient safety, and quality of care, but their main advantage may be real-time bedside decision support.1,2 However, the widespread use of mobile devices for communication in healthcare is not without its concerns. An abundant literature on short message service (SMS) use in the healthcare setting raises concerns about both threats to privacy and the prevalence and impact of interruptions in clinical care.

The first SMS was sent in 1992.3 Text messaging since then has become ubiquitous, even in healthcare, raising concerns around the protection of patient health information under the Health Insurance Portability and Accountability Act (HIPAA). Interestingly, the United States Department of Health and Human Services Office for Civil Rights, enforcer of HIPAA, is tech neutral on the subject.3 Multiple studies have assessed physician stances on SMS communication in the healthcare setting using routine, non-HIPAA-compliant mobile phones. Overall, 60%-80% of respondents admitted to using SMS in patient care, while in another study, 72% and 80% of Internal Medicine residents surveyed found SMS to be the most efficient form of communication and overall preferred method of communication, respectively.3,4 Interestingly, 82.5% of those same residents preferred the hospital-based alphanumeric paging system for security purposes, even though Freundlich et al. make a compelling argument that unidirectional alphanumeric paging systems are most certainly less HIPAA compliant, lacking encryption and password protection.5 Newer platforms that enable HIPAA-compliant messaging are promising, although they may not be fully adopted by clinical teams without full-scale implementation in hospitals.6

In addition to privacy concerns with SMS applications on mobile phones, interruptions in healthcare – be it from phone calls, emails, text messages, or in-person conversations – are common. In fact, communication researcher Enrico Coiera has famously described healthcare communication as “interrupt-driven.”7 Prior work has shown that frequent interruptions in the healthcare setting can lead to medication prescription errors, errors in computerized physician order entry, and even surgical procedural errors.8-10

While studies have focused on interruptions in clinical care in the healthcare setting, little is known about how education may be compromised by interruptions due to mobile devices. Text messaging during dedicated conference time can lead to inadequate learning and a sense of frustration among residents. In this issue of the Journal of Hospital Medicine, Mendel et al. performed a quality improvement study involving eight academic inpatient clinical training units with the aim of reducing nonurgent text messages during education rounds.11 Their unique interventions included learning sessions, posters, adding alerts to the digital communication platform, and alternative messaging options. Of four sequential interventions, a message alerting the sender that they will be interrupting educational rounds and suggesting a “delayed send” or “send as an FYI” showed the greatest impact, reducing the number of text interruptions per team per educational hour from 0.81 to 0.59 (95% CI 0.51-0.67). When comparing a four-week pre-intervention sample with a four-week end-intervention sample, the percentage of nonurgent messages decreased from 82% to 68% (P < .01).
