
HAC Diagnosis Code and MS‐DRG Assignment

One financial incentive to improve quality of care is the Centers for Medicare and Medicaid Services' (CMS) policy of withholding additional payment for certain adverse events that are classified as hospital‐acquired conditions (HACs).[1, 2, 3] HACs are specific conditions that occur during the hospital stay and presumably could have been prevented.[4, 5, 6] Under the CMS policy, if an HAC occurs during a patient's stay, that condition is not counted in the Medicare Severity Diagnosis‐Related Group (MS‐DRG) assignment.

The MS‐DRG assigned to a patient discharge determines reimbursement. Each MS‐DRG is assigned a weight, which adjusts for the fact that treating different conditions consumes different resources and incurs different costs. Groups of patients who are expected to require above‐average resources have a higher weight than those who require fewer resources, and assignment to a higher‐weighted MS‐DRG results in a higher payment. In some cases, including the diagnosis code of an HAC in the determination of the MS‐DRG results in a higher complexity level and a higher DRG weight. The policy is designed to shift the incremental costs associated with treating the HAC to the hospital. As of October 2009, there were 10 HACs included in the CMS nonpayment program (see Supporting Table 1 in the online version of this article). CMS expanded the list of HACs to include 13 conditions in 2013.

| Variable | MS‐DRG Change, No. (%) or Mean ± SD, N=980 | No MS‐DRG Change, No. (%) or Mean ± SD, N=6,047 | P Value |
|---|---|---|---|
| Patient sociodemographic characteristics | | | |
| Age, y | 62.7 ± 18.9 | 57.5 ± 21.9 | <0.001 |
| Race | | | 0.024 |
| White | 687 (70.1) | 4,006 (66.3) | |
| Black | 166 (16.9) | 1,100 (18.2) | |
| Hispanic | 45 (4.6) | 416 (6.9) | |
| Other | 82 (8.4) | 525 (8.7) | |
| Sex | | | <0.001 |
| Male | 441 (45.0) | 3,298 (54.5) | |
| Female | 539 (55.0) | 2,749 (45.5) | |
| Payer | | | <0.001 |
| Commercial | 279 (28.5) | 1,609 (26.6) | |
| Medicaid | 88 (9.0) | 910 (15.1) | |
| Medicare | 532 (54.3) | 3,003 (49.7) | |
| Self‐pay/charity | 52 (5.3) | 331 (5.5) | |
| Other | 29 (3.0) | 194 (3.2) | |
| Severity of illness | | | <0.001 |
| Minor | 50 (5.1) | 71 (1.2) | |
| Moderate | 216 (22.0) | 359 (5.9) | |
| Major | 599 (61.1) | 1,318 (21.8) | |
| Extreme | 115 (11.7) | 4,299 (71.1) | |
| Patient clinical characteristics | | | |
| Number of ICD‐9 diagnosis codes per patient | 13.7 ± 6.0 | 20.2 ± 6.6 | <0.001 |
| MS‐DRG weight | 2.9 ± 2.1 | 5.9 ± 6.1 | <0.001 |
| Hospital characteristics | | | |
| Mean number of ICD‐9 diagnosis codes per patient per hospital | 8.5 ± 1.4 | 8.6 ± 1.4 | 0.280 |
| Total hospital discharges | 15,957 ± 6,553 | 16,857 ± 6,634 | <0.001 |
| HACs per 1,000 discharges | 9.8 ± 3.7 | 10.2 ± 3.7 | <0.001 |
| Hospital‐acquired condition | | | |
| Type of HAC | | | <0.001 |
| Pressure ulcer | 334 (34.1) | 1,599 (26.4) | |
| Falls/trauma | 96 (9.8) | 440 (7.3) | |
| Catheter‐associated UTI | 19 (1.9) | 215 (3.6) | |
| Vascular catheter infection | 26 (2.7) | 1,179 (19.5) | |
| DVT/pulmonary embolism | 448 (45.7) | 2,145 (35.5) | |
| Other conditions | 57 (5.8) | 469 (7.8) | |
| HAC position | | | <0.001 |
| 2nd code | 850 (86.7) | 697 (11.5) | |
| 3rd code | 45 (4.6) | 739 (12.2) | |
| 4th code | 30 (3.1) | 641 (10.6) | |
| 5th code | 15 (1.5) | 569 (9.4) | |
| 6th code or higher | 40 (4.1) | 3,401 (56.2) | |

Withholding additional reimbursement for an HAC has been controversial. One area of debate is that the assignment of an HAC may be imprecise, in part because of variation in how physicians document in the medical record.[1, 2, 6, 7, 8, 9] Coding is derived from documentation in physician notes and is the primary mechanism for assigning International Classification of Diseases, 9th Revision, Clinical Modification (ICD‐9) diagnosis codes to the patient's encounter. The coding process begins with health information technicians (ie, medical record coders) reviewing all medical record documentation to assign ICD‐9 diagnosis and procedure codes.[10] Principal and secondary diagnoses are identified according to defined criteria for the hospital setting. In the MS‐DRG system, secondary diagnoses can be further classified as complications or comorbidities, which can affect reimbursement. The MS‐DRG is then determined from these diagnosis and procedure codes. Physician documentation is the main source of data for hospital billing, because coders must assign codes based on what is documented in the chart. If key medical detail is missing or language is ambiguous, coding can be inaccurate, which may lead to inappropriate compensation.[11]

Accurate and complete ICD‐9 diagnosis and procedure coding is essential for correct MS‐DRG assignment and reimbursement.[12] Physicians may influence coding prioritization either by overemphasizing a diagnosis or by downplaying the significance of new findings. In addition, unless the physician uses specific, accurate, and accepted terminology, the diagnosis may not appear in the list of diagnosis codes at all. Nonstandard abbreviations in the medical record may lead coders to omit key diagnoses. Finally, when clinicians use qualified diagnoses such as "rule out" or "probable," the final coded diagnosis may not be accurate.[10]

Although the CMS policy creates a financial incentive for hospitals to improve quality, the extent to which the policy actually impacts reimbursement across multiple HACs has not been quantified. Additionally, if HACs, as a policy initiative, reflect actual quality of care, then the position of the ICD‐9 code should not affect MS‐DRG assignment. In this study, we evaluated the extent to which MS‐DRG assignment would have been influenced by the presence of an HAC and tested the association of the position of an HAC in the list of ICD‐9 diagnosis codes with changes in MS‐DRG assignment.

METHODS

Study Population

This study was a retrospective analysis of all patients discharged between October 2007 and April 2008 from member hospitals included in the University HealthSystem Consortium (UHC) Clinical Data Base. The data set was limited to discharge records with at least 1 of the 10 HACs for which CMS no longer provides additional reimbursement (see Supporting Table 1 in the online version of this article). The presence of an HAC was identified from the corresponding ICD‐9 diagnosis and procedure codes.

Data Source

UHC's Clinical Data Base is a database of patient discharge‐level administrative data used primarily for billing purposes. UHC's Clinical Data Base provides comparative data for in‐hospital healthcare outcomes using encounter‐level and line‐item transactional information from each member organization. UHC is a nonprofit alliance of 116 academic medical centers and 276 of their affiliated hospitals.

Dependent Variable: Change in MS‐DRG Assignment

The dependent variable was a change in MS‐DRG assignment. Change was determined by comparing the MS‐DRG assigned when the HAC's ICD‐9 diagnosis code was treated as a no‐payment event and excluded from the determination (ie, post‐policy DRG) with the MS‐DRG that would have been assigned had the HAC been included in the determination (ie, pre‐policy DRG). The list of ICD‐9 diagnosis codes was entered into MS‐DRG grouping software with the ICD‐9 diagnosis code for each HAC in the same position in which it was presented to CMS. Up to 29 secondary ICD‐9 diagnosis and procedure codes were entered, but the analyses of the association with HAC position used the first 9 diagnosis and 6 procedure codes processed by CMS, because only codes in these positions could have changed the MS‐DRG assigned during the study period. If the 2 MS‐DRGs (pre‐policy and post‐policy) did not match, the case was classified as having a change in MS‐DRG assignment (MS‐DRG change).
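The pre‐policy versus post‐policy comparison can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the grouping software used in the study. `toy_heart_failure_grouper` is a hypothetical stand‐in that handles only the heart failure and shock family (MS‐DRGs 291, 292, and 293). Its CC/MCC sets use invented placeholder codes rather than CMS's official lists. Dropping the HAC from the secondary diagnoses is a simplification of how the policy disregards it.

```python
from typing import Callable, Sequence, Set

# A grouper maps (diagnosis codes, procedure codes) to an MS-DRG number.
# Diagnosis position 1 is the principal diagnosis (assumed non-empty list).
Grouper = Callable[[Sequence[str], Sequence[str]], int]

MAX_DX = 9  # diagnosis positions processed by CMS in the study period
MAX_PX = 6  # procedure positions processed by CMS in the study period

# Hypothetical placeholder codes; NOT the official CMS CC/MCC lists.
TOY_MCC = {"AIR_EMBOLISM", "OTHER_MCC"}
TOY_CC = {"OTHER_CC"}


def toy_heart_failure_grouper(dx: Sequence[str], px: Sequence[str]) -> int:
    """Hypothetical grouper for the heart failure and shock family only."""
    secondary = set(dx[1:])
    if secondary & TOY_MCC:
        return 291  # with MCC
    if secondary & TOY_CC:
        return 292  # with CC
    return 293  # without CC or MCC


def drg_changed(dx: Sequence[str], px: Sequence[str],
                hac_codes: Set[str], grouper: Grouper) -> bool:
    """Return True if disregarding the HAC code(s) changes the MS-DRG."""
    dx = list(dx)[:MAX_DX]  # only the first 9 diagnosis codes are processed
    px = list(px)[:MAX_PX]  # only the first 6 procedure codes are processed
    pre_policy = grouper(dx, px)  # HAC may count toward CC/MCC status
    # Post-policy: the HAC is disregarded (modeled here by dropping it).
    post_dx = [dx[0]] + [code for code in dx[1:] if code not in hac_codes]
    post_policy = grouper(post_dx, px)
    return pre_policy != post_policy


if __name__ == "__main__":
    hac = {"AIR_EMBOLISM"}
    # The HAC is the only MCC: 291 before the policy, 293 after.
    print(drg_changed(["HEART_FAILURE", "AIR_EMBOLISM"], [], hac,
                      toy_heart_failure_grouper))  # prints True
    # Another MCC keeps the case in 291, so nothing changes.
    print(drg_changed(["HEART_FAILURE", "OTHER_MCC", "AIR_EMBOLISM"], [], hac,
                      toy_heart_failure_grouper))  # prints False
```

The 9‐code truncation matters in this sketch. An HAC sequenced in position 10 or later is never seen by the grouper, so it cannot change the MS‐DRG.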

Independent variables included coding variables, patient characteristics, and hospital characteristics. Coding variables included the total number of ICD‐9 diagnosis codes recorded in the discharge record, the absolute position of the HAC ICD‐9 diagnosis code in the order of all diagnosis codes, the weight for the actual MS‐DRG, and the specific type of HAC. The absolute position of the HAC was included in the analysis as a categorical variable (second, third, fourth, fifth, and sixth position or higher). Patient‐level characteristics included sociodemographic characteristics, clinical factors, and severity of illness (minor, moderate, major, extreme).[6] Hospital‐level characteristics were also included.
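A helper for deriving the categorical position variable might look like the following. `hac_position_category` is a hypothetical function. It counts positions from 1, with the principal diagnosis first. The text does not say how records with more than one HAC were handled, so the sketch assumes the first‐listed HAC is used.

```python
from typing import Optional, Sequence, Set

# Labels for positions 2-5; every later position is pooled into one category.
POSITION_LABELS = {2: "2nd", 3: "3rd", 4: "4th", 5: "5th"}


def hac_position_category(dx: Sequence[str], hac_codes: Set[str]) -> Optional[str]:
    """Return the categorical position of the first-listed HAC code.

    Position 1 is the principal diagnosis, so an HAC can first appear in
    position 2. Returns None when no secondary HAC code is present.
    """
    for position, code in enumerate(dx, start=1):
        if position >= 2 and code in hac_codes:
            return POSITION_LABELS.get(position, "6th or higher")
    return None


print(hac_position_category(["PRINCIPAL", "OTHER", "HAC"], {"HAC"}))  # 3rd
```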

Statistical Analysis

Means and standard deviations or frequencies and percentages were used to describe the variables. A χ² test was used to test for differences in the absolute position of the HAC by change in MS‐DRG assignment (change/no change). In addition, χ² tests were used to test for differences in each of the other categorical independent variables by change in MS‐DRG assignment, and t tests were used to test for differences in the continuous variables.
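The bivariate tests can be illustrated with the HAC position and age rows of Table 1. The study used SAS, so this standard‐library Python sketch is illustrative only. The χ² p‐value uses the closed‐form chi‐square upper tail for an even number of degrees of freedom (the position table has 4). The continuous comparison uses a Welch‐type standard error with a normal approximation to the t distribution. That choice is an assumption, because the text does not say which t test was used, and it is reasonable only at sample sizes this large.

```python
import math
from typing import Sequence, Tuple


def pearson_chi2(table: Sequence[Sequence[float]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for chi-square with an even df.

    Closed form: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)**i / i!
    """
    if df <= 0 or df % 2:
        raise ValueError("this closed form needs a positive even df")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def two_sample_test_from_summary(m1: float, sd1: float, n1: int,
                                 m2: float, sd2: float, n2: int) -> Tuple[float, float]:
    """Welch-type statistic from summary data; two-sided p via normal approx."""
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    stat = (m1 - m2) / se
    p_value = math.erfc(abs(stat) / math.sqrt(2.0))
    return stat, p_value


# HAC position (2nd, 3rd, 4th, 5th, 6th or higher) by MS-DRG change, Table 1.
position_table = [
    [850, 45, 30, 15, 40],       # MS-DRG change
    [697, 739, 641, 569, 3401],  # no MS-DRG change
]
chi2_stat, chi2_df = pearson_chi2(position_table)
chi2_p = chi2_sf_even_df(chi2_stat, chi2_df)

# Age, y (mean and SD) by MS-DRG change, Table 1.
age_stat, age_p = two_sample_test_from_summary(62.7, 18.9, 980, 57.5, 21.9, 6047)
```

Both comparisons give P<0.001, in line with Table 1. Because the table's summary statistics are rounded, the recomputed statistics are approximate.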

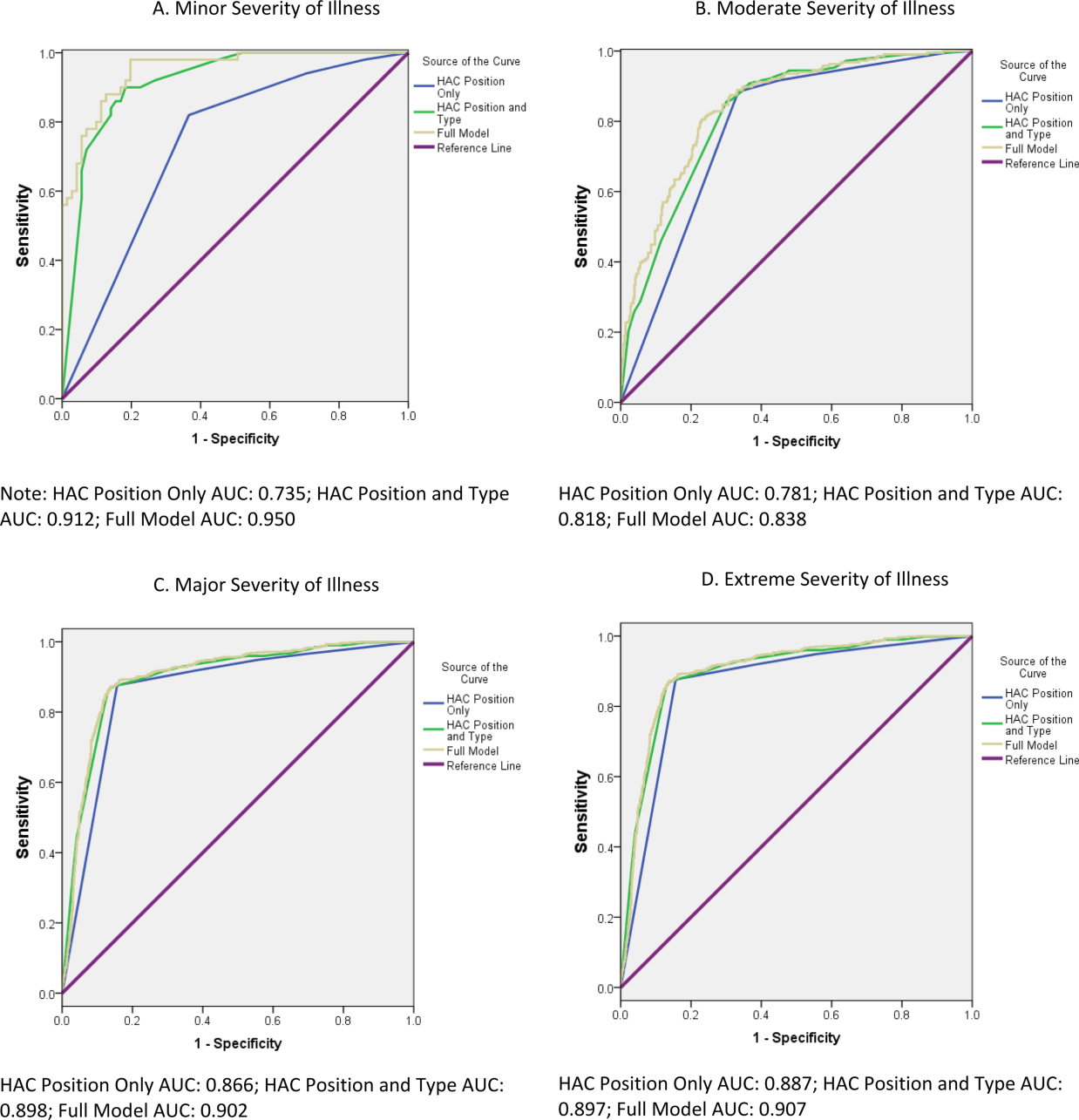

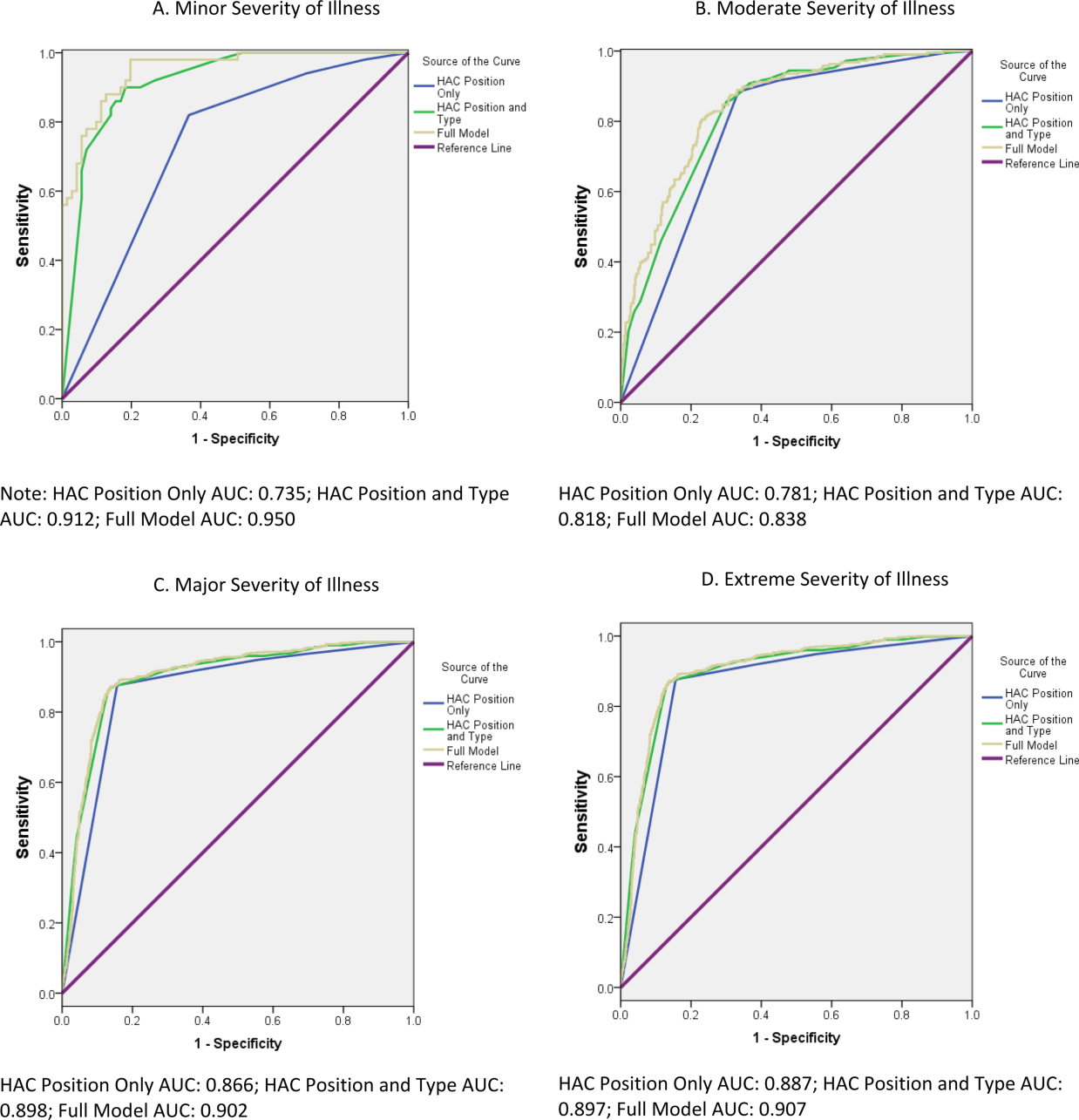

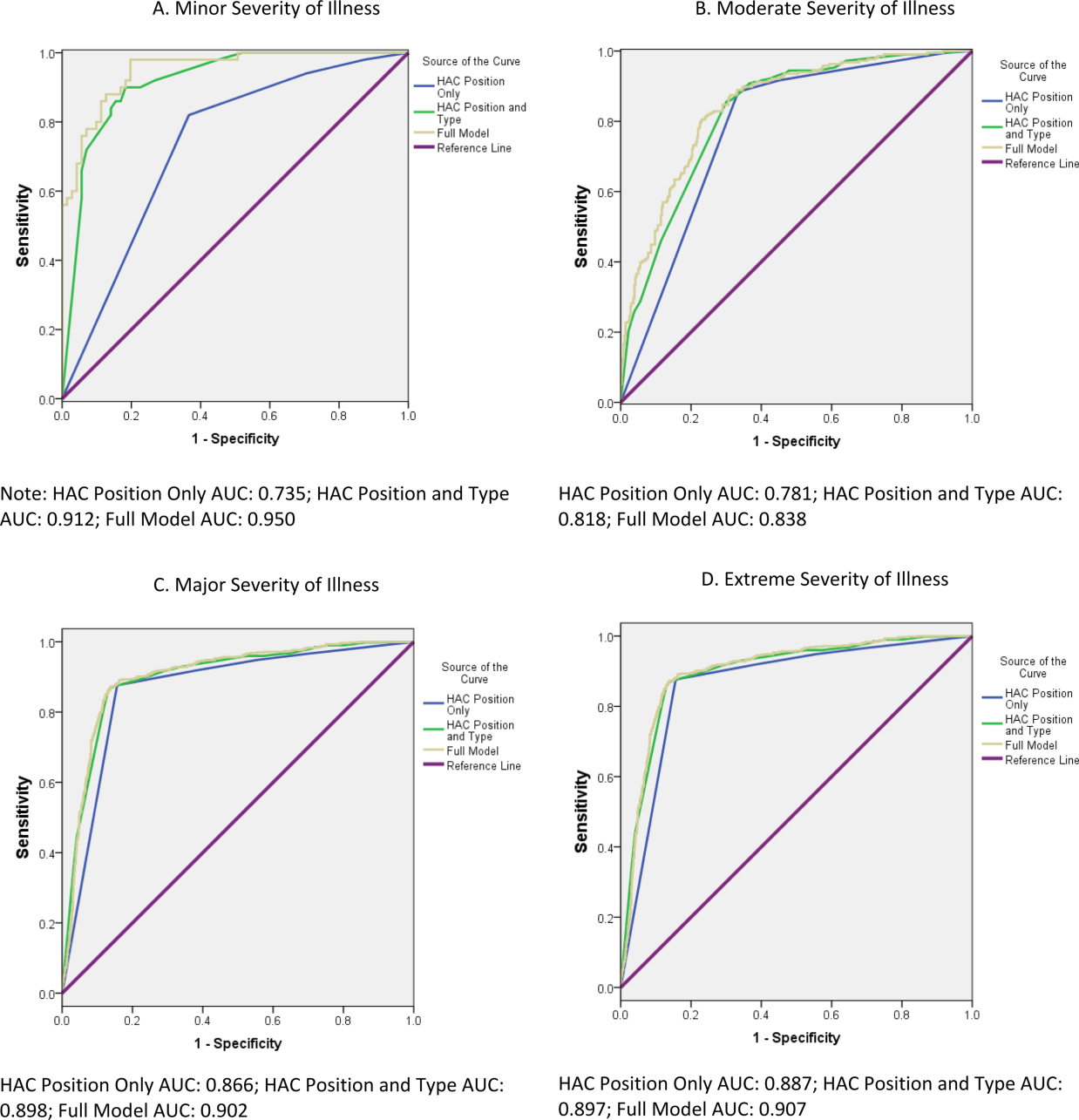

Two multivariable binary logistic regression models were fit to test the relationship between change in MS‐DRG assignment and the absolute position of the HAC. Both models adjusted for the coding variables, patient characteristics, and hospital characteristics that were associated with change in MS‐DRG assignment in the bivariate analysis. The first model tested the relationship between change in MS‐DRG and position of the HAC without accounting for the specific type of HAC. The second included both position and the specific type of HAC. Receiver operating characteristic (ROC) curves were developed for each model to evaluate predictive accuracy. Additionally, analyses were stratified by severity of illness, and the areas under the ROC curves for 3 models were compared to determine whether predictive accuracy increased with the inclusion of variables other than HAC position. The first of these models included HAC position only, the second added type of HAC, and the third added the other coding variables and patient‐ and hospital‐level variables.
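The areas under the ROC curves can be computed directly from each model's predicted probabilities. The sketch below assumes those probabilities have already been produced by a fitted logistic regression, and the model fitting itself is not shown. It uses the Mann-Whitney interpretation of the AUC, and the example inputs are hypothetical.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via its Mann-Whitney interpretation.

    The AUC equals the probability that a randomly chosen case with an
    MS-DRG change (label 1) receives a higher predicted score than a
    randomly chosen case without one (label 0); ties count as one half.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one case in each outcome group")
    concordant = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                concordant += 1.0
            elif p == n:
                concordant += 0.5
    return concordant / (len(positives) * len(negatives))


# Hypothetical predicted probabilities from a fitted logistic model.
example_labels = [1, 1, 0, 0]
example_scores = [0.9, 0.4, 0.5, 0.1]
print(roc_auc(example_labels, example_scores))  # 0.75
```

The pairwise loop is simple but quadratic in the number of cases. A rank‐based version gives the same value in O(n log n) time for larger data sets.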

Two sensitivity analyses were performed to test the robustness of the results. The first analysis tested the sensitivity of the results to the specification of comorbid disease burden, as measured by number of diagnosis codes. We used Elixhauser's method[13] for identifying comorbid conditions to create binary variables indicating the presence or absence of 29 distinct comorbid conditions, then calculated the total number of comorbid conditions. The binary logistic regression model was refit, with the total number of comorbid conditions in place of the number of diagnosis codes. An additional binary logistic regression model was fit that included the individual comorbid conditions that were associated with change in MS‐DRG assignment in a bivariate analysis (P<0.05). The second sensitivity analysis evaluated whether hospital‐level variation in coding practices explained change in MS‐DRG assignment using a hierarchical binary logistic regression model that included hospital as a random effect.
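The first sensitivity analysis can be sketched in two steps. The first step counts the Elixhauser conditions flagged for each discharge. The second screens individual conditions by their bivariate association with MS‐DRG change at P<0.05. The sketch assumes the 29 binary Elixhauser flags have already been derived from ICD‐9 codes, and those code mappings are not reproduced here. The text does not name the bivariate test, so the screen assumes an uncorrected Pearson χ² test. The hierarchical random‐effects model is not sketched.

```python
import math
from typing import Dict, List, Sequence


def comorbidity_count(flags: Dict[str, bool]) -> int:
    """Number of comorbid conditions flagged as present for one discharge."""
    return sum(1 for present in flags.values() if present)


def chi2_2x2_p(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square p-value (1 df, no continuity correction).

    Layout:             change   no change
      condition present    a         b
      condition absent     c         d
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 1.0  # a margin is empty, so no association can be tested
    stat = n * (a * d - b * c) ** 2 / denom
    return math.erfc(math.sqrt(stat / 2.0))  # P(chi-square with 1 df > stat)


def screen_conditions(patients: Sequence[Dict[str, bool]],
                      changed: Sequence[bool],
                      alpha: float = 0.05) -> List[str]:
    """Names of conditions associated with MS-DRG change at P < alpha."""
    if not patients:
        return []
    selected = []
    for name in sorted(patients[0]):
        a = b = c = d = 0
        for flags, y in zip(patients, changed):
            if flags[name]:
                if y:
                    a += 1
                else:
                    b += 1
            elif y:
                c += 1
            else:
                d += 1
        if chi2_2x2_p(a, b, c, d) < alpha:
            selected.append(name)
    return selected
```

The count from `comorbidity_count` would replace the number of diagnosis codes in the refit model. The names returned by `screen_conditions` would enter the alternative model as individual binary covariates.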

All statistical analyses were conducted using the SAS version 9.2 statistical software package (SAS Institute Inc., Cary, NC). The Rush University Medical Center Institutional Review Board approved the study protocol.

RESULTS

Of the 954,946 discharges from UHC academic medical centers, 7,027 patients (0.7%) had an HAC. Of the patients with an HAC, 6,047 did not have a change in MS‐DRG assignment, whereas 980 patients (13.8%) did. Patients with a change in MS‐DRG assignment differed significantly from those without a change on all patient‐level characteristics and on all but 1 hospital characteristic (Table 1). The variable with the largest absolute difference between those with and without a change in MS‐DRG was the absolute position of the HAC: 86.7% of those with an MS‐DRG change had their HAC in the second position, compared with only 11.5% of those without a change.

After controlling for patient and hospital characteristics, an HAC in the second position in the list of ICD‐9 codes was associated with the greatest likelihood of a change in MS‐DRG assignment (OR: 40.52, P<0.001) (Table 2). Each additional ICD‐9 code was associated with lower odds of an MS‐DRG change (OR: 0.97, P=0.004), indicating that patients with more secondary diagnosis codes were less likely to have an MS‐DRG change. After the individual HACs were added to the regression model, the second position remained associated with the likelihood of a change in MS‐DRG assignment (results not shown). However, adding type of HAC did not improve the predictive accuracy of the model. The area under the ROC curve was 0.94 in both models, indicating high predictive power.

| Variable | Odds Ratio | P Value |
|---|---|---|
| Minor severity of illness | 6.80 | <0.001 |
| Moderate severity of illness | 5.52 | <0.001 |
| Major severity of illness | 8.02 | <0.001 |
| Number of ICD‐9 diagnosis codes per patient | 0.97 | 0.004 |
| HAC ICD‐9 diagnosis code in 2nd position | 40.52 | <0.001 |
| HAC ICD‐9 diagnosis code in 3rd position | 1.82 | 0.009 |
| HAC ICD‐9 diagnosis code in 4th position | 1.72 | 0.032 |
| HAC ICD‐9 diagnosis code in 5th position | 1.15 | 0.662 |
| Area under the ROC curve | 0.94 | <0.001* |
| Area under the ROC curve, model with patient sociodemographic characteristics only | 0.85 | |
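Because a logistic model is additive on the log‐odds scale, a per‐code odds ratio compounds multiplicatively across codes. As a worked illustration using the rounded estimate in Table 2 (so the result is approximate), a difference of $k$ diagnosis codes corresponds to

$$
\mathrm{OR}_{k} = \mathrm{OR}_{1}^{\,k}, \qquad \mathrm{OR}_{10} = 0.97^{10} \approx 0.74 .
$$

Holding the other covariates fixed, 10 additional codes would be associated with roughly 26% lower odds of an MS‐DRG change.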

The proportion of cases with a change in MS‐DRG by severity of illness is reported in Table 3. The largest proportion of cases with a change in MS‐DRG was in the minor severity of illness category (41.3%), whereas only 2.6% of cases with extreme severity of illness had a change in MS‐DRG. Figure 1 shows ROC curves stratified by severity of illness. Figure 1A illustrates the ROC curves for the 121 (1.7%) patients with minor severity of illness. The area under the ROC curve for the model including HAC position only was 0.74, indicating moderate predictive power. Including HAC type increased the area under the curve to 0.91, and including sociodemographic characteristics increased it further to 0.95. Figures 1B, 1C, and 1D illustrate the ROC curves for moderate, major, and extreme severity of illness. For more severe illness, the predictive accuracy of the models with only HAC position was similar to that of the full models, indicating that HAC position alone had high predictive power for change in MS‐DRG assignment.

| Variable | No. | % With MS‐DRG Change Within Category |
|---|---|---|
| Severity of illness | | |
| Minor | 121 | 41.3 |
| Moderate | 575 | 37.6 |
| Major | 1,917 | 31.3 |
| Extreme | 4,414 | 2.6 |

In a sensitivity analysis that evaluated the robustness of our results to the specification of disease burden, including the number of comorbid conditions did not improve the predictive accuracy of the model. Including individual comorbid conditions rather than the number of diagnosis codes attenuated the odds ratio (OR) for HAC position (OR: 40.5 in the original model vs OR: 32.9 in the model with individual comorbid conditions). However, the improvement in predictive accuracy was small (area under the ROC curve = 0.936 in the original model vs 0.943 in the model with individual conditions, P<0.001) (results not shown). In a second sensitivity analysis using a hierarchical logistic regression model with hospital random effects, hospital‐level variation in coding practices did not attenuate the relationship between HAC position and MS‐DRG change (results not shown).

DISCUSSION

This study investigated the association between a change in MS‐DRG assignment and the position of the ICD‐9 diagnosis code for an HAC in a sample of patients discharged from US academic medical centers. We found that MS‐DRG assignment would have changed for only 14% of patients with an HAC. Our results are consistent with those of Teufack et al.,[14] who estimated the economic impact of CMS' HAC policy for neurosurgery services at a single hospital to be 0.007% of overall net revenues. Nevertheless, the majority of hospitals have increased their efforts to prevent the HACs included in CMS' policy.[15] At the same time, most hospitals have not increased their budgets for preventing HACs. Instead, they have reallocated resources from nontargeted HACs to those included in CMS' policy.

The low proportion of records affected by the policy may be partially explained by the fact that CMS' policy affects reimbursement only for MS‐DRGs with multiple severity levels. For example, heart failure has 3 levels of reimbursement in the MS‐DRG system (Table 4). Before CMS' policy, a heart failure patient with an air embolism as an HAC would have been classified in the most severe MS‐DRG (291). After implementation, the same patient would be classified in the least severe MS‐DRG (293) if no other complication or comorbidity (CC) or major complication or comorbidity (MCC) was present. Chest pain has only 1 level, so reimbursement for a patient with an HAC who is classified in the chest pain MS‐DRG would not be affected by CMS' policy. Most hospitalized patients are clinically complex, and the proportion of complex patients will continue to increase over time as less complex care shifts to the ambulatory setting. Complex patients are more likely to have other CCs or MCCs that keep them in the same MS‐DRG. For that reason, the relative effectiveness of CMS' policy is likely to diminish as care continues to shift to the ambulatory setting.

| Variable | MS‐DRG | DRG Weight |
|---|---|---|
| Heart failure and shock | | |
| With major complications or comorbidities (MCC) | 291 | 1.5062 |
| With complications or comorbidities (CC) | 292 | 0.9952 |
| Without CC or MCC | 293 | 0.6718 |
| Chest pain | 313 | 0.5992 |
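To make the heart failure example concrete, the sketch below applies the Table 4 weights under a simplified payment model in which payment equals a base rate multiplied by the MS‐DRG weight. `HYPOTHETICAL_BASE_RATE` is an illustrative round number, not a CMS figure. Actual inpatient payments include further adjustments, such as the wage index and outlier payments, that are ignored here.

```python
# Relative weights for the MS-DRGs listed in Table 4.
DRG_WEIGHTS = {
    291: 1.5062,  # heart failure and shock with MCC
    292: 0.9952,  # heart failure and shock with CC
    293: 0.6718,  # heart failure and shock without CC or MCC
    313: 0.5992,  # chest pain (single severity level)
}

# Illustrative dollar amount only; NOT an actual CMS base rate.
HYPOTHETICAL_BASE_RATE = 5000.0


def simplified_payment(drg: int, base_rate: float = HYPOTHETICAL_BASE_RATE) -> float:
    """Payment as base rate x relative weight, ignoring all other adjustments."""
    return base_rate * DRG_WEIGHTS[drg]


pre_policy = simplified_payment(291)   # air embolism HAC counted as the MCC
post_policy = simplified_payment(293)  # HAC disregarded, no other CC or MCC
shortfall = pre_policy - post_policy
relative_shortfall = 1 - DRG_WEIGHTS[293] / DRG_WEIGHTS[291]
print(f"{shortfall:.2f} {relative_shortfall:.1%}")  # 4172.00 55.4%
```

The relative shortfall (about 55%) depends only on the weights, not on the assumed base rate. A chest pain case has a single weight, so an HAC cannot change its payment in this model.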

Patient discharges with an HAC diagnosis code in the second position were substantially more likely to have a change in MS‐DRG assignment than cases with an HAC listed lower in the final list of diagnosis codes. It is perhaps not surprising that MS‐DRG assignment is most likely to change when the HAC is in the second position, because an ICD‐9 diagnosis code in this position is more likely to be a major complication or comorbidity. When an HAC is listed lower in the list of ICD‐9 diagnosis codes, the patient likely had another major complication or comorbidity in the second position that would have kept the case in the same MS‐DRG. Our results suggest that, under CMS' policy, physicians and hospitals caring for patients with less complex illness will bear a greater financial burden from an HAC than providers whose patients sustain the exact same HAC but have more complex underlying conditions.

These results raise further concerns about the ability of CMS' payment policy to improve quality. One criticism of CMS' policy is that not all HACs are preventable. If they are not preventable, payment reductions under the policy would be punitive rather than incentivizing. For example, in their study of central catheter‐associated bloodstream infections and catheter‐associated urinary tract infections, Lee et al. found no change in infection rates after implementation of CMS' policy.[16] As such, some have suggested that HACs should not be used to determine reimbursement and that CMS should abandon its current nonpayment policy.[4, 17] Our findings echo this criticism, given that the financial penalty for an HAC depends on whether a patient is more or less complex.

Because coding emanates from physician documentation, a uniform documentation process is needed to ensure consistent coding practices.[1, 2, 7, 9] This is not the case, however. Some hospitals comanage documentation to refine or maximize the number of ICD‐9 diagnosis and procedure codes. Furthermore, documentation practices differ among individual physicians. If variation in physician documentation and coding leads to fewer ICD‐9 codes for an encounter, the chance that an HAC will change the MS‐DRG assignment increases.

Another source of variation in coding practices identified in this study was code sequencing. Although guidelines for appropriate ICD‐9 diagnosis coding currently exist, individual subjectivity remains. The most essential step in the coding process is identifying the principal diagnosis by extrapolating from physician documentation and clinical data. For example, when a patient is admitted for chest pain and subsequent evaluation determines that the patient experienced a myocardial infarction, myocardial infarction becomes the principal diagnosis. Based on that principal diagnosis, coders must select the relevant secondary diagnoses. The process involves a series of steps that must be followed exactly to ensure accurate coding.[12] However, there are no guidelines that coding personnel must follow to sequence secondary diagnoses, except that MCCs and CCs are listed before other secondary diagnoses. Ultimately, the order in which these codes are assigned may result in unfavorable variation in MS‐DRG assignment.[1, 2, 4, 7, 8, 9, 17]

There are a number of limitations to this study. First, our cohort included only UHC‐affiliated academic medical centers, which may not represent all acute‐care hospitals and their coding practices. Second, our data are for discharges prior to implementation of the policy. However, this allowed us to analyze the anticipated impact of the policy before any direct or indirect changes in coding that may have occurred in response to CMS' policy. Third, the number of diagnosis codes accepted by CMS was expanded from 9 to 25 in 2011. Future analyses that include MS‐DRG classifications with the expanded number of diagnosis codes should be conducted to validate our findings and determine whether any changes have occurred over time. Fourth, it is not known whether low illness severity scores reflect patient or hospital characteristics. If they reflect patient characteristics, then CMS' policy will disproportionately affect hospitals caring for less severely ill patients. Alternatively, hospital coding practice may explain more of the variation in the number of ICD‐9 codes (and thus severity of illness). In that case, using HACs to adjudicate reimbursement as an incentive for quality of care will be flawed, because there is no standard position for HACs on a longer diagnosis list. Finally, we did not evaluate the change in DRG weight with the reassignment of MS‐DRG if the HAC had been included in the calculation. Future work should evaluate whether the impact of the policy differs by change in MS‐DRG weight.

CONCLUSION

Under CMS' current policy, hospitals and physicians caring for patients who have lower severity of illness and an HAC will be penalized disproportionately more than those caring for more complex, sicker patients with the identical HAC. If HACs are in fact indicators of a hospital's quality of care, then the CMS policy will likely do little to foster improved quality unless two conditions are met. First, variation in coding practice must be reduced. Second, the policy must be modified so that its effect on reimbursement is independent of severity of illness.

Disclosures

The authors acknowledge the financial support for data acquisition from the Rush University College of Health Sciences. The authors report no conflicts of interest.

- Centers for Medicare and Medicaid Services. Hospital‐acquired conditions (present on admission indicator). Available at: http://www.cms.hhs.gov/HospitalAcqCond/05_Coding.asp#TopOfPage. Updated 2012. Accessed September 20, 2012.

- Centers for Medicare and Medicaid Services. Hospital‐acquired conditions: coding. Available at: http://www.cms.gov/Medicare/Medicare‐Fee‐for‐Service‐Payment/HospitalAcqCond/Coding.html. Updated 2012. Accessed February 2, 2012.

- ICD‐9‐CM 2009 Coders' Desk Reference for Procedures. Eden Prairie, MN: Ingenix; 2009.

- , , , . Hospital complications: linking payment reduction to preventability. Jt Comm J Qual Patient Saf. 2009;35(5):283–285.

- , , , et al. Change in MS‐DRG assignment and hospital reimbursement as a result of Centers for Medicare

One financial incentive to improve quality of care is the Centers for Medicare and Medicaid Services' (CMS) policy to not pay additionally for certain adverse events that are classified as hospital‐acquired conditions (HACs).[1, 2, 3] HACs are specific conditions that occur during the hospital stay and presumably could have been prevented.[4, 5, 6] Under the CMS policy, if an HAC occurs during a patient's stay, that condition is not included in the Medicare Severity Diagnosis‐Related Group (MS‐DRG) assignment.

The MS‐DRG assigned to a patient discharge determines reimbursement. Each MS‐DRG is assigned a weight, which is used to adjust for the fact that the treatment of different conditions consume different resources and have difference costs. Groups of patients who are expected to require above‐average resources have a higher weight than those who require fewer resources, and higher‐weighted MS‐DRG assignment results in a higher payment. In some cases, the inclusion of the diagnosis code of an HAC in the determination of the MS‐DRG results in a higher complexity level and higher DRG weight. The policy is designed to shift the incremental costs associated with treating the HAC to the hospital. As of October 2009, there were 10 HACs included in the CMS nonpayment program (see Supporting Table 1 in the online version of this article). CMS expanded the list of HACs to include 13 conditions in 2013.

| Variable | MS‐DRG Change, No. (%) or MSD, N=980 | No MS‐DRG Change, No. (%) or MSD, N=6,047 | P Value |

|---|---|---|---|

| |||

| Patient sociodemographic characteristics | |||

| Age, y | 62.718.9 | 57.521.9 | <0.001 |

| Race | |||

| White | 687 (70.1) | 4,006 (66.3) | 0.024 |

| Black | 166 (16.9) | 1,100 (18.2) | |

| Hispanic | 45 (4.6) | 416 (6.9) | |

| Other | 82 (8.4) | 525 (8.7) | |

| Sex | <0.001 | ||

| Male | 441 (45.0) | 3,298 (54.5) | |

| Female | 539 (55.0) | 2,749 (45.5) | |

| Payer | <0.001 | ||

| Commercial | 279 (28.5) | 1,609 (26.6) | |

| Medicaid | 88 (9.0) | 910 (15.1) | |

| Medicare | 532 (54.3) | 3,003 (49.7) | |

| Self‐pay/charity | 52 (5.3) | 331 (5.5) | |

| Other | 29 (3.0) | 194 (3.2) | |

| Severity of illness | <0.001 | ||

| Minor | 50 (5.1) | 71 (1.2) | |

| Moderate | 216 (22.0) | 359 (5.9) | |

| Major | 599 (61.1) | 1,318 (21.8) | |

| Extreme | 115 (11.7) | 4,299 (71.1) | |

| Patient clinical characteristics | |||

| Number of ICD‐9 diagnosis codes per patient | 13.76.0 | 20.26.6 | <0.001 |

| MS‐DRG weight | 2.92.1 | 5.96.1 | <0.001 |

| Hospital characteristics | |||

| Mean number of ICD‐9 diagnosis codes per patient per hospital | 8.51.4 | 8.61.4 | 0.280 |

| Total hospital discharges | 15,9576,553 | 16,8576,634 | <0.001 |

| HACs per 1,000 discharges | 9.83.7 | 10.23.7 | <0.001 |

| Hospital‐acquired condition | |||

| Type of HAC | <0.001 | ||

| Pressure ulcer | 334 (34.1) | 1,599 (26.4) | |

| Falls/trauma | 96 (9.8) | 440 (7.3) | |

| Catheter‐associated UTI | 19 (1.9) | 215 (3.6) | |

| Vascular catheter infection | 26 (2.7) | 1,179 (19.5) | |

| DVT/pulmonary embolism | 448 (45.7) | 2,145 (35.5) | |

| Other conditions | 57 (5.8) | 469 (7.8) | |

| HAC position | <0.001 | ||

| 2nd code | 850 (86.7) | 697 (11.5) | |

| 3rd code | 45 (4.6) | 739 (12.2) | |

| 4th code | 30 (3.1) | 641 (10.6) | |

| 5th code | 15 (1.5) | 569 (9.4) | |

| 6th code or higher | 40 (4.1) | 3,401 (56.2) | |

Withholding additional reimbursement for an HAC has been controversial. One area of debate is that the assignment of an HAC may be imprecise, in part due to the variation in how physicians document in the medical record.[1, 2, 6, 7, 8, 9] Coding is derived from documentation in physician notes and is the primary mechanism for assigning International Classification of Diseases, 9th Revision, Clinical Modification (ICD‐9) diagnosis codes to the patient's encounter. The coding process begins with health information technicians (ie, medical record coders) reviewing all medical record documentation to assign diagnosis and procedure codes using the ICD‐9 codes.[10] Primary and secondary diagnoses are determined by certain definitions in the hospital setting. Secondary diagnoses can be further separated into complications or comorbidities in the MS‐DRG system, which can affect reimbursement. The MS‐DRG is then determined using these diagnosis and procedure codes. Physician documentation is the principal source of data for hospital billing, because health information technicians (ie, medical record coders) must assign a code based on what is documented in the chart. If key medical detail is missing or language is ambiguous, then coding can be inaccurate, which may lead to inappropriate compensation.[11]

Accurate and complete ICD‐9 diagnosis and procedure coding is essential for correct MS‐DRG assignment and reimbursement.[12] Physicians may influence coding prioritization by either over‐emphasizing a patient diagnosis or by downplaying the significance of new findings. In addition, unless the physician uses specific, accurate, and accepted terminology, the diagnosis may not even appear in the list of diagnosis codes. Medical records with nonstandard abbreviations may result in coder‐omission of key diagnoses. Finally, when clinicians use qualified diagnoses such as rule‐out or probable, the final diagnosis coded may not be accurate.[10]

Although the CMS policy creates a financial incentive for hospitals to improve quality, the extent to which the policy actually impacts reimbursement across multiple HACs has not been quantified. Additionally, if HACsas a policy initiativereflect actual quality of care, then the position of the ICD‐9 code should not affect MS‐DRG assignment. In this study we evaluated the extent to which MS‐DRG assignment would have been influenced by the presence of an HAC and tested the association of the position of an HAC in the list of ICD‐9 diagnosis codes with changes in MS‐DRG assignment.

METHODS

Study Population

This study was a retrospective analysis of all patients discharged from hospital members of the University HealthSystem Consortium's (UHC) Clinical Data Base between October 2007 and April 2008. The data set was limited to patient discharge records with at least 1 of 10 HACs for which CMS no longer provides additional reimbursement (see Supporting Table 1 in the online version of this article). The presence of an HAC was indicated by the corresponding diagnosis code using the ICD‐9 diagnosis and procedure codes.

Data Source

UHC's Clinical Data Base is a database of patient discharge‐level administrative data used primarily for billing purposes. UHC's Clinical Data Base provides comparative data for in‐hospital healthcare outcomes using encounter‐level and line‐item transactional information from each member organization. UHC is a nonprofit alliance of 116 academic medical centers and 276 of their affiliated hospitals.

Dependent Variable: Change in MS‐DRG Assignment

The dependent variable was a change in MS‐DRG assignment. MS‐DRG assignment was calculated by comparing the MS‐DRG assigned when the HAC's ICD‐9 diagnosis code was considered a no‐payment event and was not included in the determination (ie, post‐policy DRG) with the MS‐DRG that would have been assigned when the HAC was not included in the determination (ie, pre‐policy DRG). The list of ICD‐9 diagnosis codes was entered into MS‐DRG grouping software with the ICD‐9 diagnosis code for each HAC in the identical position presented to CMS. Up to 29 secondary ICD‐9 diagnosis and procedure codes were entered, but the analyses of association on the position of the HAC used the first 9 diagnosis and 6 procedure codes processed by CMS, as only codes in these positions would have changed the MS‐DRG assigned during the study time period. If the 2 MS‐DRGs (pre‐policy DRG and post‐policy DRG) did not match, the case was classified as having a change in MS‐DRG assignment (MS‐DRG change).

Independent variables included coding variables, patient characteristics, and hospital characteristics. Coding variables included the total number of ICD‐9 diagnosis codes recorded in the discharge record, the absolute position of the HAC's ICD‐9 diagnosis code among all diagnosis codes, the weight of the actual MS‐DRG, and the specific type of HAC. The absolute position of the HAC was analyzed as a categorical variable (second, third, fourth, fifth, and sixth or higher position). Patient‐level characteristics included sociodemographic characteristics, clinical factors, and severity of illness (minor, moderate, major, extreme).[6] Hospital‐level characteristics were also included.
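The two coding variables that describe the diagnosis list can be derived as sketched below. The helper names are hypothetical. Positions are 1‐based, with position 1 being the principal diagnosis. If a record lists more than one HAC, the sketch uses the first HAC listed. That rule is an assumption, because the text does not say how multiple HACs were handled.

```python
from typing import AbstractSet, Sequence

# Category labels for the study's HAC position variable (1-based positions).
_POSITION_LABELS = {2: "2nd", 3: "3rd", 4: "4th", 5: "5th"}


def hac_position_category(position: int) -> str:
    """Map a 1-based diagnosis position to the HAC position category."""
    if position < 2:
        raise ValueError("an HAC is expected among the secondary diagnoses")
    return _POSITION_LABELS.get(position, "6th or higher")


def coding_variables(dx_codes: Sequence[str], hac_codes: AbstractSet[str]) -> dict:
    """Number of diagnosis codes and HAC position category for one record.

    Assumption: if several HAC codes are present, the first one listed is used.
    """
    positions = [i for i, code in enumerate(dx_codes, start=1) if code in hac_codes]
    if not positions:
        raise ValueError("record contains no HAC diagnosis code")
    return {
        "n_dx_codes": len(dx_codes),
        "hac_position": hac_position_category(positions[0]),
    }


if __name__ == "__main__":
    # Placeholder codes, not real ICD-9 codes.
    print(coding_variables(["PRINCIPAL", "OTHER", "HAC_CODE"], {"HAC_CODE"}))
```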

Statistical Analysis

Means and standard deviations or frequencies and percentages were used to describe the variables. A χ² test was used to test the association between the absolute position of the HAC and change in MS‐DRG assignment (change/no change). Additional χ² tests were used to test for differences in each of the other categorical independent variables by change in MS‐DRG assignment, and t tests were used to test for differences in the continuous variables.
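As a check, the χ² test of HAC position can be recomputed from the HAC position counts reported in Table 1. The sketch below is a plain‐Python illustration, not the SAS procedure used in the study. Its p‐value helper uses the exact closed form of the χ² survival function, which exists only for even degrees of freedom; the 2 × 5 position table has 4. The t statistic is computed from the Table 1 means and standard deviations using Welch's unequal‐variance form. The text does not say which t test variant was used, so that choice is an assumption.

```python
import math

# HAC position counts from Table 1 (2nd, 3rd, 4th, 5th, 6th or higher).
HAC_POSITION_COUNTS = [
    [850, 45, 30, 15, 40],        # MS-DRG change (N=980)
    [697, 739, 641, 569, 3401],   # no MS-DRG change (N=6,047)
]


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def chi_square_sf_even_dof(x, dof):
    """Exact upper-tail p-value; this closed form needs even degrees of freedom."""
    if dof % 2:
        raise ValueError("closed form requires even degrees of freedom")
    half = x / 2.0
    term, total = 1.0, 1.0
    for k in range(1, dof // 2):
        term *= half / k          # (x/2)^k / k!
        total += term
    return math.exp(-half) * total


def welch_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Welch t statistic from group means, SDs, and sizes."""
    return (m1 - m2) / math.sqrt(s1 ** 2 / n1 + s2 ** 2 / n2)


if __name__ == "__main__":
    stat, dof = chi_square_independence(HAC_POSITION_COUNTS)
    print(f"chi-square = {stat:.1f}, df = {dof}, p = {chi_square_sf_even_dof(stat, dof):.3g}")
    age_t = welch_t_from_summary(62.7, 18.9, 980, 57.5, 21.9, 6047)
    print(f"Welch t for age = {age_t:.2f}")
```

Both statistics are far beyond conventional critical values, which is consistent with the P<0.001 reported for HAC position and age in Table 1.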

Two multivariable binary logistic regression models were fit to test the relationship between change in MS‐DRG assignment and the absolute position of the HAC. Both models adjusted for the coding variables, patient characteristics, and hospital characteristics that were associated with change in MS‐DRG assignment in the bivariate analysis. The first model tested the relationship between change in MS‐DRG and position of the HAC without accounting for the specific type of HAC; the second included both position and the specific type of HAC. Receiver operating characteristic (ROC) curves were developed for each model to evaluate predictive accuracy. Additionally, analyses were stratified by severity of illness, and the areas under the ROC curves for 3 models were compared to determine whether predictive accuracy increased with the inclusion of variables other than HAC position. The first model included HAC position only, the second added type of HAC, and the third added the other coding variables and patient‐ and hospital‐level variables.
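The study fit its models in SAS 9.2, and the sketch below does not reproduce those models. It shows only how the area under the ROC curve summarizes predictive accuracy, assuming each discharge already has a predicted probability of MS‐DRG change from some fitted model. The AUC is the probability that a randomly chosen discharge with a change receives a higher predicted probability than a randomly chosen discharge without one. The function name is hypothetical.

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC via the Mann-Whitney formulation.

    scores: predicted probabilities of an MS-DRG change (from any fitted model).
    labels: 1 if the discharge had an MS-DRG change, else 0.
    A tie between a case with and a case without a change counts as half a win.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one case with and one without a change")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Hypothetical predicted probabilities for four discharges.
    print(roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # -> 0.75
```

The pairwise loop makes 980 × 6,047 (about 5.9 million) comparisons at this study's size, which is still fast enough. A rank‐based version runs in O(n log n). Formally comparing AUCs from models fit to the same patients requires a test for correlated ROC curves, which this sketch does not include.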

Two sensitivity analyses were performed to test the robustness of the results. The first analysis tested the sensitivity of the results to the specification of comorbid disease burden, as measured by number of diagnosis codes. We used Elixhauser's method[13] for identifying comorbid conditions to create binary variables indicating the presence or absence of 29 distinct comorbid conditions, then calculated the total number of comorbid conditions. The binary logistic regression model was refit, with the total number of comorbid conditions in place of the number of diagnosis codes. An additional binary logistic regression model was fit that included the individual comorbid conditions that were associated with change in MS‐DRG assignment in a bivariate analysis (P<0.05). The second sensitivity analysis evaluated whether hospital‐level variation in coding practices explained change in MS‐DRG assignment using a hierarchical binary logistic regression model that included hospital as a random effect.
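The difference between counting diagnosis codes and counting comorbid conditions can be illustrated with the sketch below. The category map is a hypothetical, deliberately incomplete stand‐in for illustration only. The published Elixhauser definitions use detailed ICD‐9‐CM code lists and DRG‐based exclusions for each category, and none of those are reproduced here. The sketch builds one binary indicator per category and sums the indicators, as described above.

```python
from typing import Dict, Iterable

# Hypothetical, incomplete map from comorbidity category to ICD-9-CM code
# prefixes, for illustration only (not the published Elixhauser definitions).
ILLUSTRATIVE_COMORBIDITY_PREFIXES = {
    "congestive_heart_failure": ("428",),
    "diabetes": ("250",),
    "chronic_pulmonary_disease": ("491", "492", "496"),
}


def comorbidity_flags(secondary_dx: Iterable[str]) -> Dict[str, bool]:
    """Binary presence/absence indicator for each comorbidity category."""
    codes = list(secondary_dx)
    return {
        category: any(code.startswith(prefixes) for code in codes)
        for category, prefixes in ILLUSTRATIVE_COMORBIDITY_PREFIXES.items()
    }


def comorbidity_count(secondary_dx: Iterable[str]) -> int:
    """Number of distinct comorbidity categories present (not number of codes)."""
    return sum(comorbidity_flags(secondary_dx).values())


if __name__ == "__main__":
    dx = ["428.0", "428.1", "250.00", "999.1"]
    print(len(dx), comorbidity_count(dx))  # 4 codes, 2 categories
```

Two heart failure codes count once as a comorbid condition but twice as diagnosis codes. This is why the two specifications of disease burden can behave differently in the regression.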

All statistical analyses were conducted using the SAS version 9.2 statistical software package (SAS Institute Inc., Cary, NC). The Rush University Medical Center Institutional Review Board approved the study protocol.

RESULTS

Of the 954,946 discharges from UHC academic medical centers, 7027 patients (0.7%) had an HAC. Of these, 6047 had no change in MS‐DRG assignment, whereas 980 (13.9%) had a change in MS‐DRG assignment. Patients with a change in MS‐DRG assignment were significantly different from those without a change on all patient‐level characteristics and all but 1 hospital characteristic (Table 1). The variable with the largest absolute difference between those with and without a change in MS‐DRG was the absolute position of the HAC: 86.7% of those with an MS‐DRG change had their HAC in the second position, compared with only 11.5% of those without a change.

After controlling for patient and hospital characteristics, an HAC in the second position in the list of ICD‐9 codes was associated with the greatest likelihood of a change in MS‐DRG assignment (odds ratio 40.52, P<0.001) (Table 2). Each additional ICD‐9 diagnosis code decreased the odds of an MS‐DRG change (odds ratio 0.97, P=0.004), indicating that having more secondary diagnosis codes was associated with a lower likelihood of an MS‐DRG change. After the individual HACs were added to the regression model, the second position remained associated with the likelihood of a change in MS‐DRG assignment (results not shown). The predictive accuracy of the model did not improve, however, with the addition of type of HAC: the area under the ROC curve was 0.94 in both models, indicating high predictive power.

| Variable | Odds Ratio | P Value |
|---|---|---|
| Minor severity of illness | 6.80 | <0.001 |
| Moderate severity of illness | 5.52 | <0.001 |
| Major severity of illness | 8.02 | <0.001 |
| Number of ICD‐9 diagnosis codes per patient | 0.97 | 0.004 |
| HAC ICD‐9 diagnosis code in 2nd position | 40.52 | <0.001 |
| HAC ICD‐9 diagnosis code in 3rd position | 1.82 | 0.009 |
| HAC ICD‐9 diagnosis code in 4th position | 1.72 | 0.032 |
| HAC ICD‐9 diagnosis code in 5th position | 1.15 | 0.662 |
| Area under the ROC curve | 0.94 | <0.001* |
| Area under the ROC curve, model with patient sociodemographic characteristics only | 0.85 | |
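The odds ratios in Table 2 can be read through the standard logistic regression identity below. This is a worked illustration, not part of the original analysis. The table does not state the reference category for HAC position; the sixth‐or‐higher position is assumed here.

$$
\ln\frac{\Pr(\text{change})}{1-\Pr(\text{change})} = \beta_0 + \sum_k \beta_k x_k, \qquad \mathrm{OR}_k = e^{\beta_k}
$$

$$
\beta_{\text{2nd}} = \ln 40.52 \approx 3.70, \qquad 0.97^{10} \approx 0.74, \qquad 0.97^{\,20.2-13.7} = 0.97^{6.5} \approx 0.82
$$

Holding other covariates fixed, 10 additional diagnosis codes multiply the odds of an MS‐DRG change by about 0.74. The 6.5‐code difference in mean number of codes between the groups in Table 1 corresponds to a factor of about 0.82. Odds ratios multiply odds, not probabilities, so an odds ratio of 40.52 does not imply a 40‐fold higher probability of change.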

The proportion of cases with a change in MS‐DRG by severity of illness is reported in Table 3. The largest proportion of cases with a change in MS‐DRG was in the minor severity of illness category (41.3%), whereas only 2.6% of cases with an extreme severity of illness had a change in MS‐DRG. Figure 1 shows ROC curves stratified by severity of illness. Figure 1A shows the ROC curves for the 121 (1.7%) patients with minor severity of illness. The area under the ROC curve for the model including HAC position only was 0.74, indicating moderate predictive power. Adding HAC type increased the area under the curve to 0.91, and adding sociodemographic characteristics increased it further to 0.95. Figures 1B through 1D show the ROC curves for moderate, major, and extreme severity of illness, respectively. For these more severe categories, the predictive accuracy of the models with only HAC position was similar to that of the full models, indicating that HAC position alone had high predictive power for change in MS‐DRG assignment.

| Variable | No. | Within‐Category Percent With MS‐DRG Change |
|---|---|---|
| Severity of illness | | |
| Minor | 121 | 41.3 |
| Moderate | 575 | 37.6 |
| Major | 1,917 | 31.3 |
| Extreme | 4,414 | 2.6 |

In a sensitivity analysis that evaluated the robustness of our results to the specification of disease burden, substituting the number of comorbid conditions did not improve the predictive accuracy of the model. Including individual comorbid conditions rather than the number of diagnosis codes attenuated the odds ratio (OR) for HAC position (OR: 40.5 in the original model vs OR: 32.9 in the model with individual comorbid conditions). However, the improvement in predictive accuracy was small (area under the ROC curve=0.936 in the original model vs 0.943 in the model with individual conditions, P<0.001) (results not shown). In a second sensitivity analysis using a hierarchical logistic regression model with hospital random effects, hospital‐level variation in coding practices did not attenuate the relationship between HAC position and MS‐DRG change (results not shown).

DISCUSSION

This study investigated the association between change in MS‐DRG assignment and the position of the ICD‐9 diagnosis codes for HACs in a sample of patients discharged from US academic medical centers. We found that only 14% of discharges with an HAC would have had a change in MS‐DRG assignment. Our results are consistent with those of Teufack et al.,[14] who estimated the economic impact of CMS' HAC policy for neurosurgery services at a single hospital to be 0.007% of overall net revenues. Nevertheless, the majority of hospitals have increased their efforts to prevent HACs that are included in CMS' policy.[15] At the same time, most hospitals have not increased their budgets for preventing HACs and instead have reallocated resources from nontargeted HACs to those included in CMS' policy.

The low proportion of records affected by the policy may be partially explained by the fact that CMS' policy affects reimbursement only for MS‐DRGs with multiple severity levels. For example, heart failure has 3 levels of reimbursement in the MS‐DRG system (Table 4). Before CMS' policy, a heart failure patient with an air embolism as an HAC would have been classified in the most severe MS‐DRG (291). After implementation, the same patient would be classified in the least severe MS‐DRG (293) if no other complication or comorbidity (CC) or major complication or comorbidity (MCC) were present. Chest pain has only 1 level, so reimbursement for a patient with an HAC who is classified in the chest pain MS‐DRG would not be affected by CMS' policy. Most hospitalized patients have complex conditions, and the proportion of complex inpatients will continue to increase over time as less complex care shifts to the ambulatory setting. Because the policy affects payment mainly for less complex patients, its relative effectiveness is likely to diminish as this shift continues.

| Variable | MS‐DRG | DRG Weight |
|---|---|---|
| Heart failure and shock | | |
| With major complications and comorbidities | 291 | 1.5062 |
| With complications and comorbidities | 292 | 0.9952 |
| Without complications and comorbidities or major complications and comorbidities | 293 | 0.6718 |
| Chest pain | 313 | 0.5992 |
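The weights in Table 4 can be used to sketch how much payment is at stake when an HAC no longer counts toward MS‐DRG assignment. The sketch assumes a simplified payment of base rate × DRG weight, with a hypothetical base rate of $5,000. Actual Medicare inpatient payments include further adjustments (for example, wage index and outlier payments) that are not modeled here. The function names are hypothetical.

```python
# MS-DRG relative weights reproduced from Table 4.
DRG_WEIGHTS = {291: 1.5062, 292: 0.9952, 293: 0.6718, 313: 0.5992}


def simplified_payment(drg: int, base_rate: float) -> float:
    """Simplified payment: hospital base rate x DRG relative weight.

    Assumption: ignores wage-index, outlier, and other payment adjustments.
    """
    return base_rate * DRG_WEIGHTS[drg]


def hac_payment_loss(pre_drg: int, post_drg: int, base_rate: float) -> float:
    """Payment forgone when the HAC no longer counts toward MS-DRG assignment."""
    return simplified_payment(pre_drg, base_rate) - simplified_payment(post_drg, base_rate)


def relative_loss(pre_drg: int, post_drg: int) -> float:
    """Loss as a fraction of the pre-policy payment (independent of base rate)."""
    return 1.0 - DRG_WEIGHTS[post_drg] / DRG_WEIGHTS[pre_drg]


if __name__ == "__main__":
    base = 5000.0  # hypothetical base rate in dollars
    print(hac_payment_loss(291, 293, base))  # heart failure: HAC was the only MCC
    print(hac_payment_loss(313, 313, base))  # chest pain: single level, no change
    print(round(relative_loss(291, 293), 3))
```

Under these assumptions, the heart failure patient in the example loses about 55% of the pre‐policy payment, because 1 − 0.6718/1.5062 ≈ 0.554. The chest pain patient loses nothing, whatever base rate is used.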

Patient discharges with a diagnosis code for an HAC in the second position were substantially more likely to have a change in MS‐DRG assignment than those with an HAC listed lower in the final list of diagnosis codes. Perhaps it is not surprising that MS‐DRG assignment is most likely to change when the HAC is in the second position, because an ICD‐9 diagnosis code in this position is more likely to be a major complication or comorbidity. For HACs listed lower in the list of ICD‐9 diagnosis codes, the patient likely had another major complication or comorbidity in the second position that would have kept the discharge in the same MS‐DRG. Our results suggest that physicians and hospitals caring for patients with less complex illness will bear a higher financial burden from an HAC under CMS' policy than providers whose patients sustain the exact same HAC but have more complex underlying conditions.

These results raise further concerns about the ability of CMS' payment policy to improve quality. One criticism of CMS' policy is that not all HACs are preventable. If they are not preventable, payment reductions under the policy would be punitive rather than incentivizing. In their study of central catheter‐associated bloodstream infections and catheter‐associated urinary tract infections, for example, Lee et al. found no change in infection rates after implementation of CMS' policy.[16] As such, some have suggested that HACs should not be used to determine reimbursement and that CMS should abandon its current nonpayment policy.[4, 17] Our findings add to this criticism, because the financial penalty for an HAC depends on whether a patient is more or less complex.

Because coding emanates from physician documentation, a uniform documentation process is needed to ensure consistent coding practices.[1, 2, 7, 9] This is not the case, however. Some hospitals comanage documentation to refine or maximize the number of ICD‐9 diagnosis and procedure codes, and documentation practices also differ among individual physicians. If variation in physician documentation and coding leads to fewer ICD‐9 codes for an encounter, the chance that an HAC will change the MS‐DRG assignment increases.

Another source of variation in coding practices found in this study was code sequencing. Although guidelines for appropriate ICD‐9 diagnosis coding exist, individual subjectivity remains. The most important step in the coding process is identifying the principal diagnosis from physician documentation and clinical data. For example, when a patient is admitted for chest pain and further evaluation shows that the patient experienced a myocardial infarction, myocardial infarction becomes the principal diagnosis. Based on that principal diagnosis, coders must select the relevant secondary diagnoses. The process involves a series of steps that must be followed exactly to ensure accurate coding.[12] However, no guidelines govern how coding personnel sequence secondary diagnoses, except that MCCs and CCs are listed before other secondary diagnoses. Ultimately, the order in which these codes are assigned may result in unfavorable variation in MS‐DRG assignment.[1, 2, 4, 7, 8, 9, 17]

This study has a number of limitations. First, our cohort included only UHC‐affiliated academic medical centers, which may not represent all acute‐care hospitals and their coding practices. Second, our data are for discharges before implementation of the policy. This allowed us to analyze the anticipated impact of the policy before any direct or indirect changes in coding that may have occurred in response to it. Additionally, the number of diagnosis codes accepted by CMS was expanded from 9 to 25 in 2011. Future analyses that include MS‐DRG classifications with the expanded number of diagnosis codes should be conducted to validate our findings and determine whether any changes have occurred over time. Third, it is not known whether low illness severity scores reflect patient or hospital characteristics. If they reflect patient characteristics, then CMS' policy will disproportionately affect hospitals caring for less severely ill patients. Alternatively, hospital coding practice may explain more of the variation in the number of ICD‐9 codes (and thus severity of illness). In that case, using HACs to adjust reimbursement as a quality incentive will be flawed, because there is no standard position for HACs on a longer diagnosis list. Finally, we did not evaluate the change in DRG weight that would have resulted from MS‐DRG reassignment had the HAC been included in the calculation. Future work should evaluate whether the impact of the policy differs by change in MS‐DRG weight.

CONCLUSION

Under CMS' current policy, hospitals and physicians caring for patients who have lower severity of illness and an HAC will be penalized disproportionately more than those caring for more complex, sicker patients with the identical HAC. If HACs are in fact indicators of a hospital's quality of care, then the CMS policy will likely do little to foster improved quality unless 2 changes are made. Coding practice variation must be reduced, and the policy must be modified so that its impact on reimbursement is independent of severity of illness.

Disclosures

The authors acknowledge the financial support for data acquisition from the Rush University College of Health Sciences. The authors report no conflicts of interest.

Although the CMS policy creates a financial incentive for hospitals to improve quality, the extent to which the policy actually impacts reimbursement across multiple HACs has not been quantified. Additionally, if HACsas a policy initiativereflect actual quality of care, then the position of the ICD‐9 code should not affect MS‐DRG assignment. In this study we evaluated the extent to which MS‐DRG assignment would have been influenced by the presence of an HAC and tested the association of the position of an HAC in the list of ICD‐9 diagnosis codes with changes in MS‐DRG assignment.

METHODS

Study Population

This study was a retrospective analysis of all patients discharged from hospital members of the University HealthSystem Consortium's (UHC) Clinical Data Base between October 2007 and April 2008. The data set was limited to patient discharge records with at least 1 of 10 HACs for which CMS no longer provides additional reimbursement (see Supporting Table 1 in the online version of this article). The presence of an HAC was indicated by the corresponding diagnosis code using the ICD‐9 diagnosis and procedure codes.

Data Source

UHC's Clinical Data Base is a database of patient discharge‐level administrative data used primarily for billing purposes. UHC's Clinical Data Base provides comparative data for in‐hospital healthcare outcomes using encounter‐level and line‐item transactional information from each member organization. UHC is a nonprofit alliance of 116 academic medical centers and 276 of their affiliated hospitals.

Dependent Variable: Change in MS‐DRG Assignment

The dependent variable was a change in MS‐DRG assignment. MS‐DRG assignment was calculated by comparing the MS‐DRG assigned when the HAC's ICD‐9 diagnosis code was considered a no‐payment event and was not included in the determination (ie, post‐policy DRG) with the MS‐DRG that would have been assigned when the HAC was not included in the determination (ie, pre‐policy DRG). The list of ICD‐9 diagnosis codes was entered into MS‐DRG grouping software with the ICD‐9 diagnosis code for each HAC in the identical position presented to CMS. Up to 29 secondary ICD‐9 diagnosis and procedure codes were entered, but the analyses of association on the position of the HAC used the first 9 diagnosis and 6 procedure codes processed by CMS, as only codes in these positions would have changed the MS‐DRG assigned during the study time period. If the 2 MS‐DRGs (pre‐policy DRG and post‐policy DRG) did not match, the case was classified as having a change in MS‐DRG assignment (MS‐DRG change).

Independent variables included in this analysis were coding variables and patient characteristics. Coding variables included the total number of ICD‐9 diagnosis codes recorded in the discharge record, absolute position of the HAC ICD‐9 diagnosis code in the order of all diagnosis codes, weight for the actual MS‐DRG, and specific type of HAC. The absolute position of the HAC was included in the analysis as a categorical variable (second position, third, fourth, fifth, and sixth position and higher). In addition, patient‐level characteristics including sociodemographic characteristics, clinical factors and severity of illness (minor, moderate, major, extreme),[6] and hospital‐level characteristics.

Statistical Analysis

Means and standard deviations or frequencies and percentages were used to describe the variables. A 2 test was used to test for differences in the absolute position of the HAC with change in MS‐DRG assignment (change/no change). In addition, 2 tests were used to test for differences in each of the other categorical independent variables with change in MS‐DRG assignment; t tests were used to test for differences in the continuous variables with change in MS‐DRG assignment.

Two multivariable binary logistic regression models were fit to test the relationship between change in MS‐DRG assignment with the absolute position of the HAC, adjusting for coding variables, patient characteristics, and hospital characteristics that were associated with change in MS‐DRG assignment in the bivariate analysis. The first model tested the relationship between change in MS‐DRG and position of the HAC, without accounting for the specific type of HAC, and the second tested the relationship including both position and the specific type of HAC. Receiver operating characteristic (ROC) curves were developed for each model to evaluate the predictive accuracy. Additionally, analyses were stratified by severity of illness, and the areas under the ROC curves for 3 models were compared to determine whether the predictive accuracy increased with the inclusion of variables other than HAC position. The first model included HAC position only, the second model added type of HAC, and the third model added other coding variables and patient‐ and hospital‐level variables.

Two sensitivity analyses were performed to test the robustness of the results. The first analysis tested the sensitivity of the results to the specification of comorbid disease burden, as measured by number of diagnosis codes. We used Elixhauser's method[13] for identifying comorbid conditions to create binary variables indicating the presence or absence of 29 distinct comorbid conditions, then calculated the total number of comorbid conditions. The binary logistic regression model was refit, with the total number of comorbid conditions in place of the number of diagnosis codes. An additional binary logistic regression model was fit that included the individual comorbid conditions that were associated with change in MS‐DRG assignment in a bivariate analysis (P<0.05). The second sensitivity analysis evaluated whether hospital‐level variation in coding practices explained change in MS‐DRG assignment using a hierarchical binary logistic regression model that included hospital as a random effect.

All statistical analyses were conducted using the SAS version 9.2 statistical software package (SAS Institute Inc., Cary, NC). The Rush University Medical Center Institutional Review Board approved the study protocol.

RESULTS

Of the 954,946 discharges from UHC academic medical centers, 7027 patients (0.7%) had an HAC. Of the patients with an HAC, 6047 did not change MS‐DRG assignment, whereas 980 patients (13.8%) had a change in MS‐DRG assignment. Patients with a change in MS‐DRG assignment were significantly different from those without a change in MS‐DRG assignment on all patient‐level characteristics and all but 1 hospital characteristic (Table 1). The variable with the largest absolute difference between those with and without a change in MS‐DRG was the actual position of the HAC; 86.7% of those with an MS‐DRG change had their HAC in the second position, whereas those without a change had only 11.5% in the second position.

After controlling for patient and hospital characteristics, an HAC in the second position in the list of ICD‐9 codes was associated with the greatest likelihood of a change in MS‐DRG assignment (P<0.001) (Table 2). Each additional ICD‐9 code decreased the odds of an MS‐DRG change (P=0.004), demonstrating that having more secondary diagnosis codes was associated with a lesser likelihood of an MS‐DRG change. After including the individual HACs in the regression model, the second position remained associated with the likelihood of a change in MS‐DRG assignment (results not shown). The predictive accuracy of our model did not improve, however, with the addition of type of HAC. The area under the ROC curve was 0.94 in both models, indicating high predictive power.

| Intercept | Odds Ratio | P Value |

|---|---|---|

| ||

| Minor severity of illness | 6.80 | <0.001 |

| Moderate severity of illness | 5.52 | <0.001 |

| Major severity of illness | 8.02 | <0.001 |

| Number of ICD‐9 diagnosis codes per patient | 0.97 | 0.004 |

| HAC ICD‐9 diagnosis code in 2nd position | 40.52 | <0.001 |

| HAC ICD‐9 diagnosis code in 3rd position | 1.82 | 0.009 |

| HAC ICD‐9 diagnosis code in 4th position | 1.72 | 0.032 |

| HAC ICD‐9 diagnosis code in 5th position | 1.15 | 0.662 |

| Area under the ROC curve | 0.94 | <0.001* |

| Area under the ROC curve, model with patient socio‐demographic characteristics only | 0.85 | |

The proportion of cases with a change in MS‐DRG by severity of illness is reported in Table 3. The largest proportion of cases with a change in MS‐DRG was in the minor severity of illness category (41.3%), whereas only 2.6% of cases with an extreme severity of illness had a change in MS‐DRG. Figure 1 shows ROC curves stratified by severity of illness. Figure 1A illustrates the ROC curves for the 121 (1.7%) patients with minor severity of illness. The area under the ROC curve for the model including HAC position only was 0.74, indicating moderate predictive power. The inclusion of HAC type increased the predictive power to 0.91, and inclusion of sociodemographic characteristics further increased the predictive power to 0.95. Figure 1BD illustrates the ROC curves for moderate, major, and extreme severities of illness. For more severe illnesses, the predictive accuracy of the models with only HAC position were similar to the full models, demonstrating that HAC position alone had a high predictive power for change in MS‐DRG assignment.

| Variable | No. | Within Category Percent With MS‐DRG Change |

|---|---|---|

| ||

| Severity of illness | ||

| Minor | 121 | 41.3 |

| Moderate | 575 | 37.6 |

| Major | 1,917 | 31.3 |

| Extreme | 4,414 | 2.6 |

In a sensitivity analysis that evaluated the robustness of our results to the specification of disease burden, inclusion of the number of comorbid conditions did not improve the predictive accuracy of the model. Although inclusion of individual comorbid conditions rather than number of diagnosis codes attenuated the odds ratio (OR) for HAC position (OR: 40.5 in the original model vs OR: 32.9 in the model with individual comorbid conditions), the improvement of the predictive accuracy of the model was small (area under the ROC curve=0.936 in the original model vs 0.943 in the model with individual conditions, P<0.001) (results not shown). In a sensitivity analysis using a hierarchical logistic regression model that included hospital random effects, hospital‐level variation in coding practices did not attenuate the relationship between HAC position and MS‐DRG change (results not shown).

DISCUSSION

This study investigated the association of a change in MS‐DRG assignment and position of the ICD‐9 diagnosis codes for HACs in a sample of patients discharged from US academic medical centers. We found that only 14% of the MS‐DRGs for patients with an HAC would have experienced a change in DRG assignment. Our results are consistent with those of Teufack et al.,[14] who estimated the economic impact of CMS' HAC policy for neurosurgery services at a single hospital to be 0.007% of overall net revenues. Nevertheless, the majority of hospitals have increased their efforts to prevent HACs that are included in CMS' policy.[15] At the same time, most hospitals have not increased their budgets for preventing HACs, and instead have reallocated resources from nontargeted HACs to those included in CMS' policy.

The low proportion of records affected by the policy may be partially explained by the fact that CMS' policy only affects reimbursement for MS‐DRGs with multiple severity levels. For example, heart failure has 3 levels of reimbursement in the MS‐DRG system (Table 4). Prior to CMS' policy, a heart failure patient with an air embolism as an HAC would have been classified in the most severe MS‐DRG (291), whereas after implementation the patient would be classified in the least severe MS‐DRG (293) if no other complication or comorbidity (CC) or major complication or comorbidity (MCC) was present. Chest pain has only 1 level, so reimbursement for a patient with an HAC who is classified in the chest pain MS‐DRG would not be affected by CMS' policy. Most hospitalized patients have complex conditions, and the proportion of such patients will continue to increase over time as less complex care shifts to the ambulatory setting. The relative effectiveness of CMS' policy is therefore likely to diminish as this shift continues.

| Variable | MS‐DRG | DRG Weight |
|---|---|---|
| Heart failure and shock | | |
| With major complications and comorbidities | 291 | 1.5062 |
| With complications and comorbidities | 292 | 0.9952 |
| Without complications or major complications and comorbidities | 293 | 0.6718 |
| Chest pain | 313 | 0.5992 |
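The heart failure example in Table 4 can be turned into a rough payment comparison. The sketch below assumes a simplified payment of relative weight times a single base rate; the $5,000 base rate is hypothetical, and real inpatient payments also apply wage‐index, outlier, teaching, and disproportionate‐share adjustments that are omitted here.

```python
# MS-DRG relative weights for heart failure and shock (Table 4)
WEIGHTS = {291: 1.5062, 292: 0.9952, 293: 0.6718}

BASE_RATE = 5000.0  # hypothetical base rate in dollars, for illustration only


def payment(drg, base_rate=BASE_RATE):
    """Simplified payment: relative weight x base rate (no adjustments)."""
    return WEIGHTS[drg] * base_rate


with_hac = payment(291)     # HAC (e.g. air embolism) counted as an MCC
without_hac = payment(293)  # HAC excluded and no other CC or MCC present
print(f"{with_hac:.2f} {without_hac:.2f} {with_hac - without_hac:.2f}")
# 7531.00 3359.00 4172.00
```

Because the payment is proportional to the weight in this simplification, the shift from MS‐DRG 291 to 293 removes about 0.83 weight units, or more than half of the payment, whatever base rate is assumed.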

Patient discharges with a diagnosis code for an HAC in the second position were substantially more likely to have a change in MS‐DRG assignment than cases with an HAC listed lower in the final list of diagnosis codes. This is perhaps not surprising, because an ICD‐9 diagnosis code in the second position is more likely to be a major complication or comorbidity. When an HAC is listed lower in the list of ICD‐9 diagnosis codes, the patient likely had another major complication or comorbidity listed in the second position that would have kept the discharge in the same MS‐DRG. Our results suggest that physicians and hospitals caring for patients with lower complexity of illness will bear a greater financial burden from an HAC under CMS' policy than providers whose patients sustain the exact same HAC but have more complex underlying medical conditions.
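The mechanism described above can be sketched for the heart failure MS‐DRGs. The function below is a simplified, hypothetical grouper: it picks MS‐DRG 291, 292, or 293 from the CC/MCC status of the secondary diagnoses, optionally dropping HAC codes first. It ignores CC exclusion lists and POA indicators, and the diagnosis labels are placeholders rather than real ICD‐9‐CM codes.

```python
from dataclasses import dataclass


@dataclass
class SecondaryDx:
    code: str            # placeholder label, not a real ICD-9-CM code
    level: str           # "MCC", "CC", or "none"
    is_hac: bool = False


def heart_failure_drg(secondary, exclude_hacs=True):
    """Return MS-DRG 291, 292, or 293 for a heart failure discharge.

    With exclude_hacs=True, HAC codes are dropped before checking for an
    MCC or CC, which mirrors the effect of the nonpayment rule.
    """
    counted = [d for d in secondary if not (exclude_hacs and d.is_hac)]
    levels = {d.level for d in counted}
    if "MCC" in levels:
        return 291
    if "CC" in levels:
        return 292
    return 293


# The first list element is the second diagnosis position
# (position 1 is the principal diagnosis, heart failure).
case_a = [SecondaryDx("hac_mcc", "MCC", is_hac=True), SecondaryDx("other", "none")]
case_b = [SecondaryDx("other_mcc", "MCC"), SecondaryDx("hac_mcc", "MCC", is_hac=True)]

for name, case in (("case_a", case_a), ("case_b", case_b)):
    print(name, heart_failure_drg(case, exclude_hacs=False), heart_failure_drg(case))
# case_a 291 293
# case_b 291 291
```

The grouping logic itself does not look at order; position matters only indirectly. Because MCCs and CCs tend to be sequenced first, an HAC in the second position usually means no other MCC precedes it (case_a), while an HAC listed lower often sits behind another MCC that keeps the discharge in the same MS‐DRG (case_b).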

These results raise further concerns about the ability of CMS' payment policy to improve quality. One criticism of the policy is that not all HACs are preventable. To the extent that they are not preventable, payment reductions under the policy would be punitive rather than incentivizing. In their study of central catheter‐associated bloodstream infections and catheter‐associated urinary tract infections, for example, Lee et al. found no change in infection rates after implementation of CMS' policy.[16] As such, some have suggested that HACs should not be used to determine reimbursement and that CMS should abandon its current nonpayment policy.[4, 17] Our findings echo this criticism, given that the financial penalty for an HAC depends on whether a patient is more or less complex.

Because coding emanates from physician documentation, a uniform documentation process would be needed to ensure consistent coding practices.[1, 2, 7, 9] This is not the case, however: some hospitals comanage documentation to refine or maximize the number of ICD‐9 diagnosis and procedure codes, and documentation practices also differ among individual physicians. If variation in physician documentation and coding leads to fewer ICD‐9 codes being recorded for an encounter, the chance that an HAC will change the MS‐DRG assignment increases.

Another source of variation in coding practices found in this study was code sequencing. Although guidelines for appropriate ICD‐9 diagnosis coding currently exist, individual subjectivity remains. The most essential step in the coding process is identifying the principal diagnosis by deriving it from physician documentation and clinical data. For example, when a patient is admitted for chest pain and further evaluation shows that the patient experienced a myocardial infarction, myocardial infarction becomes the principal diagnosis. Based on that principal diagnosis, coders must select the relevant secondary diagnoses. The process involves a series of steps that must be followed exactly to ensure accurate coding.[12] Apart from listing MCCs and CCs before other secondary diagnoses, there are no guidelines that coding personnel must follow to sequence secondary diagnoses. Ultimately, the order in which these codes are assigned may result in unfavorable variation in MS‐DRG assignment.[1, 2, 4, 7, 8, 9, 17]
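The one sequencing rule mentioned above, MCCs and CCs before other secondary diagnoses, can be sketched as a stable sort. The tier labels and code names are hypothetical; the point is that a stable sort preserves the coder's original order within each tier, which is exactly the part that no guideline fixes.

```python
# Sort priority: MCCs first, then CCs, then all other secondary diagnoses
TIER_RANK = {"MCC": 0, "CC": 1, "none": 2}


def sequence_secondary(codes):
    """Order (code, tier) pairs by tier.

    sorted() is stable, so codes in the same tier keep the order in which
    the coder entered them.
    """
    return sorted(codes, key=lambda c: TIER_RANK[c[1]])


codes = [("A", "none"), ("B", "CC"), ("C", "MCC"), ("D", "CC")]
print(sequence_secondary(codes))
# [('C', 'MCC'), ('B', 'CC'), ('D', 'CC'), ('A', 'none')]
```

If a record contains several MCCs, including an HAC, which one lands in the second position depends entirely on the coder's entry order.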

There are a number of limitations to this study. First, our cohort included only UHC‐affiliated academic medical centers, which may not represent all acute‐care hospitals and their coding practices. Our data are for discharges prior to implementation of the policy, which allowed us to analyze the anticipated impact of the policy before any direct or indirect changes in coding that may have occurred in response to it. Additionally, the number of diagnosis codes accepted by CMS was expanded from 9 to 25 in 2011. Future analyses that include MS‐DRG classifications with the expanded number of diagnosis codes should be conducted to validate our findings and determine whether any changes have occurred over time. It is also not known whether low illness severity scores reflect patient or hospital characteristics. If they reflect patient characteristics, then CMS' policy will disproportionately affect hospitals taking care of less severely ill patients. Alternatively, if hospital coding practice explains more of the variation in the number of ICD‐9 codes (and thus severity of illness), then using HACs to adjudicate reimbursement as an incentive for quality of care will be flawed, as there is no standard position for HACs on a longer diagnosis list. Finally, we did not evaluate the change in DRG weight that would have resulted from MS‐DRG reassignment had the HAC been included in the calculation. Future work should evaluate whether the impact of the policy differs by the size of the change in MS‐DRG weight.

CONCLUSION

Under CMS' current policy, hospitals and physicians caring for patients who have lower severity of illness and an HAC will be penalized disproportionately more than those caring for more complex, sicker patients with the identical HAC. If HACs are in fact indicators of a hospital's quality of care, then the CMS policy will likely do little to foster improved quality unless coding practice variation is reduced and the policy is modified so that its impact on reimbursement is independent of severity of illness.

Disclosures

The authors acknowledge the financial support for data acquisition from the Rush University College of Health Sciences. The authors report no conflicts of interest.

- Centers for Medicare and Medicaid Services. Hospital‐acquired conditions (present on admission indicator). Available at: http://www.cms.hhs.gov/HospitalAcqCond/05_Coding.asp#TopOfPage. Updated 2012. Accessed September 20, 2012.

- Centers for Medicare and Medicaid Services. Hospital‐acquired conditions: coding. Available at: http://www.cms.gov/Medicare/Medicare‐Fee‐for‐Service‐Payment/HospitalAcqCond/Coding.html. Updated 2012. Accessed February 2, 2012.

- ICD‐9‐CM 2009 Coders' Desk Reference for Procedures. Eden Prairie, MN: Ingenix; 2009.

- , , , . Hospital complications: linking payment reduction to preventability. Jt Comm J Qual Patient Saf. 2009;35(5):283–285.

- , , , et al. Change in MS‐DRG assignment and hospital reimbursement as a result of Centers for Medicare

© 2014 Society of Hospital Medicine

Diagnosis Discrepancies and LOS

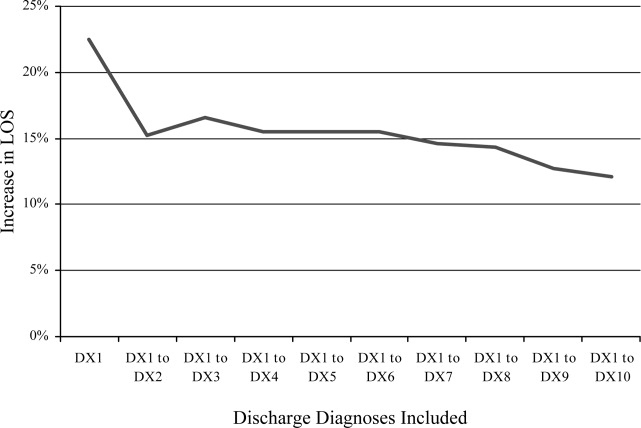

Recent research has found that adding clinical data to administrative data improves the accuracy of predicting inpatient mortality.[1, 2] Pine et al.[1] showed that including present on admission (POA) codes and numerical laboratory data resulted in substantially better‐fitting risk adjustment models than those based on administrative data alone. Even with the improvement from POA codes, risk adjustment models are still imperfect, and severity adjustment alone does not explain differences in mortality as well as we would hope.[2]

The addition of POA codes improves prediction of mortality because they distinguish between conditions that were present at the time of admission and conditions that were acquired during the hospitalization, but it is not known whether the addition of these codes is related to other measures of hospital performance, such as differences in length of stay (LOS). Which factors related to the patient's clinical condition at the time of hospital admission drive differences in outcomes?
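The distinction that POA codes make can be sketched as a split of diagnosis codes by their POA indicator. The indicator values below (Y, N, U, W, and an exempt value) are the ones used on inpatient claims; grouping W with Y as "present" follows CMS' HAC payment convention and is an assumption here, since a risk model could reasonably treat W differently. The diagnosis labels are placeholders.

```python
# POA indicator values on inpatient claims:
#   Y = present at admission, W = clinically undetermined,
#   N = not present at admission, U = documentation insufficient,
#   "1" or blank = exempt from POA reporting
POA_PRESENT = {"Y", "W"}   # assumption: grouped as in CMS' HAC payment rule
POA_ACQUIRED = {"N", "U"}  # treated as arising during the stay


def split_by_poa(diagnoses):
    """Split (code, poa) pairs into admission conditions, conditions
    acquired in the hospital, and exempt or unreported codes."""
    present, acquired, other = [], [], []
    for code, poa in diagnoses:
        if poa in POA_PRESENT:
            present.append(code)
        elif poa in POA_ACQUIRED:
            acquired.append(code)
        else:
            other.append(code)
    return present, acquired, other


dx = [("dx_a", "Y"), ("dx_b", "N"), ("dx_c", "W"), ("dx_d", "U"), ("dx_e", "1")]
present, acquired, other = split_by_poa(dx)
print(present, acquired, other)
# ['dx_a', 'dx_c'] ['dx_b', 'dx_d'] ['dx_e']
```

A risk‐adjustment model built this way would draw its comorbidity covariates only from the first list, so that complications arising during the stay do not inflate a hospital's expected outcomes.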

A patient's admission diagnosis may be an important piece of information that accounts for differences in hospital care. A patient's diagnosis at the time of hospital admission leads to the initial course of treatment. If the admitting diagnosis is inaccurate, a physician may spend critical time following a course of unneeded treatment until the correct diagnosis is made (reflected by a discrepancy between the admitting and discharge diagnosis codes). This discrepancy may be a marker of the fact that, while some patients are admitted to the hospital for treatment of a previously diagnosed condition, other patients require a diagnostic workup to determine the clinical problem.
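A discrepancy between the admitting and discharge diagnosis codes could be flagged in several ways. The sketch below shows one simple, hypothetical rule that compares ICD‐9‐CM codes at the 3‐character category level; it is an illustrative assumption, not the definition used in this study. The example codes follow the chest pain and myocardial infarction scenario (786.50, chest pain, unspecified; 410.71, subendocardial infarction, initial episode).

```python
def diagnosis_discrepancy(admit_code, discharge_code, level=3):
    """Return True when the admitting and principal discharge ICD-9-CM
    codes differ in their first `level` characters (3 = category).

    Comparing at the category level is an assumption for illustration:
    it treats 428.0 and 428.33 (both heart failure) as concordant.
    """
    def norm(code):
        return code.replace(".", "").strip().upper()[:level]

    return norm(admit_code) != norm(discharge_code)


print(diagnosis_discrepancy("786.50", "410.71"))  # chest pain -> MI: True
print(diagnosis_discrepancy("428.0", "428.33"))   # same category: False
```

A coarser comparison, such as by diagnostic group, would flag fewer cases, and a full 5‐digit comparison would flag more, so the choice of level largely determines how many discrepancies are found.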

A discrepancy may also reflect poor systems for documenting critical information and may result in delays in care, with potentially serious health consequences.[3, 4] If diagnosis discrepancy is a marker of difficult‐to‐diagnose cases that leads to delays in care, we may be able to improve our understanding of perceived differences in the production of high‐quality medical care and proactively identify cases that need more attention at admission, so that necessary care is provided as quickly as possible.

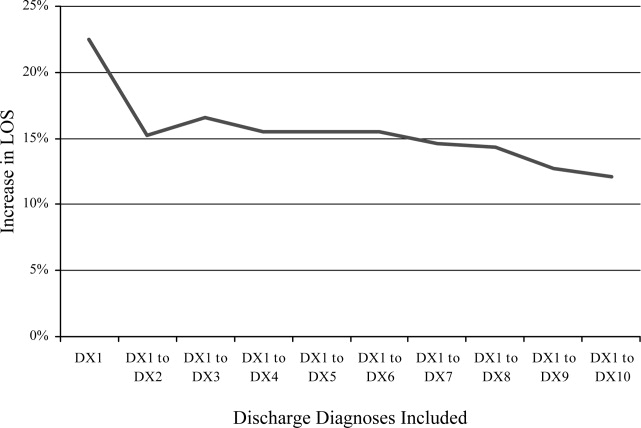

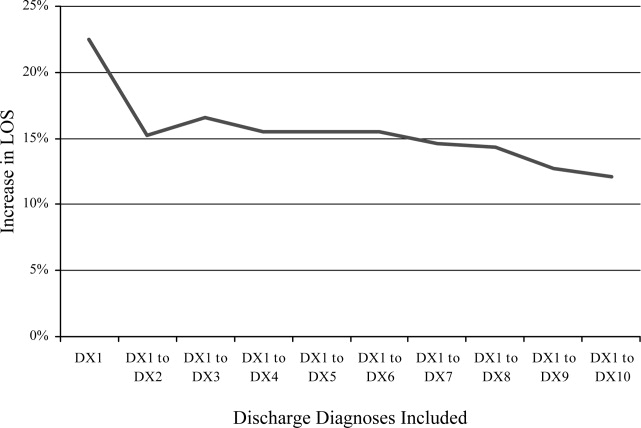

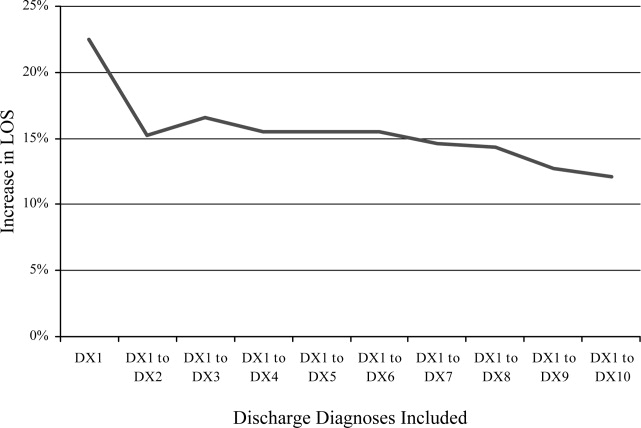

Almost universally, comparisons of hospital performance are risk‐adjusted to account for differences in case mix and severity across institutions. These risk‐adjustment models rely on discharge diagnoses to adjust for clinical differences among patients, even though recent research has shown that models using discharge diagnoses alone are inadequate predictors of variation in mortality among hospitals. While the findings of Pine et al.[1] suggest the need to add certain clinical information, such as laboratory values, to improve these models, this information may be costly for some institutions to collect and report. We aimed to explore whether other simple‐to‐measure factors, which are independent of the quality of care provided and routinely collected by hospitals' electronic information systems, can be used to improve risk‐adjustment models. To assess the potential of other routinely collected diagnostic information to explain differences in health outcomes, this study examined whether a discrepancy between the admission and discharge diagnoses was associated with hospital LOS.

Patients and Methods

Patient Population

The sample included all patients aged 18 years and older who were admitted to and discharged from the general medicine units at Rush University Medical Center between July 2005 and June 2006. We further limited the sample to patients who were admitted via the emergency department (ED) or directly by their physician, excluding patients with scheduled admissions, for whom LOS may vary little, and patients transferred from other hospitals. We also excluded patients admitted directly to the intensive care units. Patients who were transferred to an intensive care unit during their stay were retained; only a small percentage of cases (1.2%) fit this designation, and we did not explore the effects of this clinical situation because of the small numbers involved. Our aim was to assemble a sample of patients whose admission was more likely to be for an episodic and diagnostically complex set of symptoms and signs.
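The inclusion and exclusion criteria above can be expressed as a single filter. This is a minimal sketch over a hypothetical record layout: the field names, the admission‐source labels, and the exact start and end days of the study window are assumptions, since the text gives only months.

```python
from dataclasses import dataclass
from datetime import date

STUDY_START = date(2005, 7, 1)   # "July 2005"; exact day assumed
STUDY_END = date(2006, 6, 30)    # "June 2006"; exact day assumed


@dataclass
class Encounter:
    """Hypothetical encounter record; field names are illustrative."""
    age: int
    unit: str              # e.g. "general_medicine"
    admit_source: str      # e.g. "ED", "physician", "scheduled", "transfer"
    admit_date: date
    discharge_date: date
    admitted_to_icu: bool  # admitted directly to an intensive care unit


def in_cohort(e):
    """Apply the sample criteria described in the text."""
    return (
        e.age >= 18
        and e.unit == "general_medicine"
        and e.admit_source in {"ED", "physician"}  # drops scheduled and transfers
        and STUDY_START <= e.admit_date
        and e.discharge_date <= STUDY_END
        and not e.admitted_to_icu  # later ICU transfers are kept
    )


ed_case = Encounter(67, "general_medicine", "ED", date(2005, 9, 3), date(2005, 9, 7), False)
transfer = Encounter(54, "general_medicine", "transfer", date(2006, 1, 10), date(2006, 1, 15), False)
print(in_cohort(ed_case), in_cohort(transfer))  # True False
```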

Diagnosis Discrepancy