Breast arterial calcification, low bone mass predict CAD in women

Breast arterial calcification (BAC) and low bone mass (LBM) were strongly linked to risk of coronary artery disease in asymptomatic women, according to a cross-sectional registry study.

The results suggest that breast arterial calcification – easily visible on every mammogram – “provides an independent and incremental predictive value over conventional risk factors,” wrote Yeonyee E. Yoon, MD, and colleagues from Seoul National University Bundang Hospital in Seongnam, South Korea.

In addition, the findings show that “atherosclerosis imaging allows a more direct visualization of the cumulative effects of all risk determinants in an individual patient,” the researchers noted. The report is in JACC: Cardiovascular Imaging.

The researchers evaluated 2,100 asymptomatic women aged at least 40 years in the Women Health Registry Study for Bone, Breast, and Coronary Artery Disease (BBC study). All underwent a self-referred health evaluation that included simultaneous dual-energy x-ray absorptiometry (DXA), coronary computed tomography angiography (CCTA), and digital mammography. The researchers estimated the 10-year risk of atherosclerotic cardiovascular disease (ASCVD) using the Korean Risk Prediction Model (KRPM) and the Pooled Cohort Equations (PCE).

Overall, Dr. Yoon and colleagues found that 199 women (9.5%) had BAC and 716 (34.1%) had LBM; 235 (11.2%) had coronary artery calcification (CAC) and 328 (15.6%) had coronary artery plaque (CAP). BAC presence was associated with CAC (unadjusted odds ratio, 3.54), and the association strengthened with BAC severity, with ORs of 2.84 for mild and 5.50 for severe BAC scores. All associations were statistically significant at P less than .001.

LBM also had a positive link with CAC that grew with its severity. Specifically, the odds ratio of CAC with osteopenia, defined as a T-score between –1.0 and –2.5, was 2.06, and that for osteoporosis, defined as a T-score at or below –2.5, was 3.21. All links were significant at P less than .001.

BAC and LBM were also significantly tied to coronary artery plaque: mild (OR, 2.61) and moderate (OR, 4.15) BAC severity, as well as osteopenia (OR, 1.76) and osteoporosis (OR, 2.82), were each significantly associated with CAP (all P less than .001).

In a multivariable analysis, BAC presence and BAC score remained significantly associated with CAC and CAP after adjustment for 10-year ASCVD risk. In receiver operating characteristic curve analyses of the KRPM and PCE for predicting CAC and CAP, adding BAC significantly increased the area under the curve (AUC) (0.71 vs. 0.64), and adding BAC presence also significantly increased the AUC in the KRPM analysis (0.64 vs. 0.61).

“Being able to predict CAC or CAP presence in an individual patient based on the presence and severity of BAC in addition to the use of conventional risk stratification algorithms may help clinicians decide when to recommend further cardiac tests and how aggressive interventions to prescribe in order to prevent the onset of clinical CAD,” the researchers noted.

The researchers acknowledged that all participants were self-referred, so the results may not be generalizable to a broader population. The study’s retrospective nature is also a limitation, they wrote. However, they said they are planning a future trial to determine whether identifying BAC and LBM in women without symptoms of CAD provides a long-term clinical benefit.

This study was supported by the Basic Science Research Program through the National Research Foundation of Korea; the Ministry of Science, ICT, and Future Planning; and the Seoul National University Bundang Hospital Research Fund. The authors report no relevant financial disclosures.

SOURCE: Yoon YE et al. JACC: Cardiovasc Imaging. 2018 Aug 15. doi: 10.1016/j.jcmg.2018.07.004.

FROM JACC: CARDIOVASCULAR IMAGING

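Key clinical point: Breast arterial calcification and low bone mass were associated with subclinical coronary artery disease in asymptomatic women.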

Major finding: Breast arterial calcification (BAC) and low bone mass (LBM) were associated with coronary artery calcification (odds ratios, 3.54 and 2.22, respectively) and with coronary atherosclerotic plaque (ORs, 3.02 and 1.92, respectively).

Study details: A cross-sectional study of 2,100 women analyzed for subclinical coronary artery disease in the Bone, Breast, and CAD health registry study.

Disclosures: This study was supported by the Basic Science Research Program through the National Research Foundation of Korea; the Ministry of Science, ICT, and Future Planning; and the Seoul National University Bundang Hospital Research Fund. The authors report no relevant financial disclosures.

Source: Yoon YE et al. JACC: Cardiovasc Imaging. 2018 Aug 15. doi: 10.1016/j.jcmg.2018.07.004.

Aggressive drainage regimen may promote spontaneous pleurodesis

Compared with patients who underwent symptom-guided drainage, patients who underwent once-daily drainage had similar breathlessness scores but a higher rate of spontaneous pleurodesis and better quality of life scores, according to research published in the Lancet Respiratory Medicine.

“In patients in whom pleurodesis is an important goal (e.g., those undertaking strategies involving an indwelling pleural catheter plus pleurodesing agents), aggressive drainage should be done for at least 60 days,” Sanjeevan Muruganandan, FRACP, MBBS, from Sir Charles Gairdner Hospital in Perth, Australia, and his colleagues wrote in their study. “Future studies will need to establish if more aggressive (e.g., twice daily) regimens for the initial phase could further enhance success rates.”

Dr. Muruganandan and his colleagues evaluated 87 patients with symptomatic malignant pleural effusions at 11 centers in Australia, New Zealand, Hong Kong, and Malaysia between July 2015 and January 2017 in the randomized controlled AMPLE-2 trial, in which patients received either once-daily (43 patients) or symptom-guided (44 patients) drainage for 60 days, with 6 months of follow-up. Patients were excluded if they had a pleural infection, were pregnant, had a previous pneumonectomy or ipsilateral lobectomy, had “significant loculations likely to preclude effective fluid drainage,” or had an estimated survival of less than 3 months. Cancer type (mesothelioma vs. nonmesothelioma) was used as a minimization factor during randomization.

At 60 days, the geometric mean daily breathlessness score was 13.1 mm (95% confidence interval, 9.8-17.4) in the aggressive daily drainage group and 17.3 mm (95% CI, 13.0-22.0) in the symptom-guided drainage group, a difference that was not statistically significant. In the aggressive drainage group, 16 of 43 patients (37.2%) achieved spontaneous pleurodesis at 60 days, compared with 5 of 44 patients (11.4%) in the symptom-guided drainage group (P = .0049). At 6 months, 19 of 43 patients (44.2%) in the aggressive drainage group had spontaneous pleurodesis, compared with 7 of 44 patients (15.9%) in the symptom-guided drainage group (P = .004; hazard ratio, 3.287; 95% CI, 1.396-7.740; P = .0065).
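The pleurodesis percentages can be rechecked directly from the counts. The minimal Python sketch below assumes that the 60-day symptom-guided count is 5 of 44 patients, the only count consistent with the reported 11.4% (11 of 44 would be 25.0%). The crude risk ratio it prints is a simple ratio of two proportions, not the hazard ratio the investigators reported from their time-to-event analysis; the helper name `pct` is illustrative.

```python
# Recheck the AMPLE-2 spontaneous pleurodesis proportions from the reported counts.
# Assumption: the 60-day symptom-guided count is 5/44, inferred from the reported 11.4%.

def pct(events: int, total: int) -> float:
    """Return events/total as a percentage rounded to one decimal place."""
    return round(100 * events / total, 1)

results = {
    "60 days, daily drainage": (16, 43),
    "60 days, symptom-guided": (5, 44),
    "6 months, daily drainage": (19, 43),
    "6 months, symptom-guided": (7, 44),
}

for label, (events, total) in results.items():
    print(f"{label}: {events}/{total} = {pct(events, total)}%")

# Crude 60-day risk ratio (daily vs. symptom-guided); this is NOT the
# hazard ratio of 3.287 reported from the time-to-event analysis.
crude_rr_60d = (16 / 43) / (5 / 44)
print(f"Crude 60-day risk ratio: {crude_rr_60d:.2f}")
```

The crude 60-day ratio works out to roughly 3.3, in the same range as the reported hazard ratio, although the two measures answer different questions.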

Rates of severe adverse events were similar: 11 of 43 patients (25.6%) in the aggressive drainage group and 12 of 44 patients (27.3%) in the symptom-guided drainage group reported a severe adverse event. There were no significant differences in mortality, pain scores, or hospital stay between the groups. Regarding quality of life, patients in the aggressive drainage group reported better scores on the EuroQol 5-Dimension 5-Level (EQ-5D-5L) assessment (estimated mean, 0.713; 95% CI, 0.647-0.779) than did patients in the symptom-guided group (0.601; 95% CI, 0.536-0.667), an estimated difference in means of 0.112 (95% CI, 0.0198-0.204; P = .0174).

The investigators suggested that aggressive drainage may have some unmeasured benefits. “Daily removal of the fluid might have provided benefits in symptoms not captured with our breathlessness and pain measurements. The higher pleurodesis rate, with resultant freedom from fluid (and symptom) recurrence and of the catheter, might have contributed to the better reported quality of life. Additionally, it has been suggested that indwelling pleural catheter drainage gives patients an important sense of control when they are feeling helpless with their advancing cancer.”

They concluded, “For patients whose primary care aim is palliation (e.g., those with very limited life expectancy or significant trapped lung where pleurodesis is unlikely), our data show that symptom-guided drainage offers an effective means of breathlessness control without the inconvenience and costs of daily drainages. The ability to predict the likelihood of pleurodesis will help guide the choice of regimen and should be a topic of future studies.”

Three authors reported serving on the advisory board of CareFusion/BD, two authors reported an educational grant from Rocket Medical (UK), and one author reported an educational grant from CareFusion/BD. The other authors reported no relevant conflicts of interest.

SOURCE: Muruganandan S et al. Lancet Respir Med. 2018 July 20. doi: 10.1016/S2213-2600(18)30288-1.

FROM THE LANCET RESPIRATORY MEDICINE

Key clinical point: There was no significant difference between groups in mean daily breathlessness scores, but more patients in the daily drainage group achieved spontaneous pleurodesis, compared with those in the symptom-guided group.

Major finding: Of the patients in the daily drainage group, 37.2% achieved spontaneous pleurodesis, compared with 11.4% in the symptom-guided group, at 60 days.

Study details: A randomized, multicenter, open-label trial of 87 patients enrolled between July 2015 and January 2017 at 11 centers in Australia, New Zealand, Hong Kong, and Malaysia in the AMPLE-2 study.

Disclosures: Three authors report serving on the advisory board of CareFusion/BD, two authors report an educational grant from Rocket Medical (UK), and one author reports an educational grant from CareFusion/BD. The other authors report no relevant conflicts of interest.

Source: Muruganandan S et al. Lancet Respir Med. 2018 July 20. doi: 10.1016/S2213-2600(18)30288-1.

Labor induction at 39 weeks reduced cesarean rate for low-risk, first-time mothers

Nulliparous women whose labor was induced at 39 weeks did not have a higher risk of adverse perinatal outcomes and had a lower risk of cesarean delivery, compared with women who received expectant management, results that researchers say contrast with traditional recommendations for perinatal care, according to a study published in the New England Journal of Medicine.

“These findings contradict the conclusions of multiple observational studies that have suggested that labor induction is associated with an increased risk of adverse maternal and perinatal outcomes,” William A. Grobman, MD, the Arthur Hale Curtis, MD, Professor of Obstetrics and Gynecology at Northwestern University in Chicago, and his colleagues wrote. “These studies, however, compared women who underwent labor induction with those who had spontaneous labor, which is not a comparison that is useful to guide clinical decision making.”

Dr. Grobman and his colleagues evaluated the deliveries of 3,062 women who underwent labor induction between 39 weeks and 39 weeks 4 days of gestation and compared them with the outcomes of 3,044 women who received expectant management until 40 weeks 5 days of gestation. Women in both groups had a singleton fetus, had no indication for early delivery, and did not plan to deliver by cesarean section. The participants were assessed at about 38 weeks of gestation and randomly assigned to labor induction or expectant management in a multicenter, randomized, controlled, parallel-group, unmasked trial conducted at maternal-fetal medicine departments in 41 hospitals participating in the Eunice Kennedy Shriver National Institute of Child Health and Human Development network. Women were screened between March 2014 and August 2017.

The primary outcome was a composite of adverse perinatal outcomes: perinatal death, respiratory support, an Apgar score of 3 or less at 5 minutes, hypoxic-ischemic encephalopathy, seizure, infection, meconium aspiration syndrome, birth trauma, intracranial or subgaleal hemorrhage, or hypotension requiring vasopressor support. The principal secondary outcome was cesarean delivery; other secondary outcomes included neonatal or intensive care, infection, postpartum hospital stay, and hypertension, among others.

Dr. Grobman and his colleagues found that 132 neonates (4.3%) in the induction group and 164 (5.4%) in the expectant-management group experienced the primary composite outcome (relative risk, 0.80; 95% confidence interval, 0.64-1.00; P = .049).

Regarding secondary outcomes, the risk of cesarean delivery was significantly lower in the induction group than in the expectant-management group (18.6% vs. 22.2%; RR, 0.84; 95% CI, 0.76-0.93; P less than .001). Women in the induction group also had a significantly lower risk of hypertensive disorders of pregnancy (9.1% vs. 14.1%; RR, 0.64; 95% CI, 0.56-0.74; P less than .001). The investigators said women who underwent induced labor had lower scores on a 10-point Likert scale, were more likely to have “extensions of the uterine incision during cesarean delivery,” perceived that they had “more control” during delivery, and had a shorter postpartum hospital stay, compared with women who received expectant management. However, women in the induction group also had a longer stay in the labor and delivery unit, they said.
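A relative risk is simply the event rate in the induction group divided by the event rate in the expectant-management group, so the figures above can be rechecked by hand. The minimal Python sketch below uses the reported counts for the primary outcome and, because only percentages are given for the secondary outcomes, the rounded percentages for those; the helper name `relative_risk` is illustrative, and these are crude ratios rather than the trial's own analysis.

```python
# Recheck crude relative risks from the figures reported for the 39-week induction trial.

def relative_risk(risk_induction: float, risk_expectant: float) -> float:
    """Crude relative risk: event rate with induction / event rate with expectant management."""
    return risk_induction / risk_expectant

# Primary composite outcome, from reported counts and group sizes.
primary_induction = 132 / 3062
primary_expectant = 164 / 3044
rr_primary = relative_risk(primary_induction, primary_expectant)

# Secondary outcomes: only rounded percentages are reported, so these are approximate.
rr_cesarean = relative_risk(0.186, 0.222)
rr_hypertension = relative_risk(0.091, 0.141)

print(f"Primary composite: {primary_induction:.1%} vs {primary_expectant:.1%}, RR {rr_primary:.2f}")
print(f"Cesarean delivery: RR {rr_cesarean:.2f}")
print(f"Hypertensive disorders: RR {rr_hypertension:.2f}")
```

Working from rounded percentages gives about 0.65 for hypertensive disorders rather than the reported 0.64, a reminder that published ratios are computed from unrounded counts.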

The researchers noted the limitations in this study, which included its unmasked design, lack of power to detect infrequent outcome differences, and the lack of information surrounding labor induction at 39 weeks in low-risk nulliparous women.

“These results suggest that policies aimed at the avoidance of elective labor induction among low-risk nulliparous women at 39 weeks of gestation are unlikely to reduce the rate of cesarean delivery on a population level; the trial provides information that can be incorporated into discussions that rely on principles of shared decision making,” Dr. Grobman and his colleagues wrote.

This study was funded by the Eunice Kennedy Shriver National Institute of Child Health and Human Development. One author, Dr. Silver, reports receiving personal fees from Gestavision. The other authors report no relevant financial disclosures.

SOURCE: Grobman WA et al. N Engl J Med. 2018 Aug 9. doi: 10.1056/NEJMoa1800566.

Of the more than 50,000 women screened for the study by Grobman et al., more than 44,000 were excluded, including more than 16,000 who declined to participate in the trial. Further, the study participants tended to be younger and included a higher proportion of black or Hispanic women than the general population of mothers in the United States, Michael F. Greene, MD, said in a related editorial.

“Readers can only speculate as to why so many women declined to participate in the trial and what implications the demographics of the participants may have for the generalizability of the trial results and the acceptability of elective induction of labor at 39 weeks among women in the United States more generally,” Dr. Greene said. “If induction at 39 weeks becomes a widely popular option, busy obstetrical centers will need to find new ways to accommodate larger numbers of women with longer lengths of stay in the labor and delivery unit.”

Nevertheless, the study reflects a “public preference for a less interventionist approach” to delivery, Dr. Greene said, and that interest is supported by available data. He cited a meta-analysis of 20 randomized trials that found inducing labor at 39 weeks may reduce perinatal mortality without increasing the risk of operative delivery. He also noted that a randomized trial from the United Kingdom found that inducing labor at 39 weeks in 619 women aged at least 35 years did not affect the participants’ perception of delivery or increase the number of operative deliveries.

“These results across multiple obstetrical centers in the United States, however, should reassure women that elective induction of labor at 39 weeks is a reasonable choice that is very unlikely to result in poorer obstetrical outcomes,” he said.

Dr. Greene is chief of obstetrics and gynecology at Massachusetts General Hospital in Boston. He reported no relevant conflicts of interest. These comments summarize his editorial accompanying the article by Dr. Grobman and his associates (N Engl J Med. 2018 Aug 9;379[6]:580-1).

Nulliparous women who were induced at 39 weeks did not have a higher risk of adverse perinatal outcomes but did have a lower risk of cesarean delivery, compared with women who received expectant management, results that the researchers say contrast with traditional recommendations for perinatal care, according to a study published in the New England Journal of Medicine.

“These findings contradict the conclusions of multiple observational studies that have suggested that labor induction is associated with an increased risk of adverse maternal and perinatal outcomes,” William A. Grobman, MD, the Arthur Hale Curtis, MD, Professor of Obstetrics and Gynecology at Northwestern University in Chicago, and his colleagues wrote. “These studies, however, compared women who underwent labor induction with those who had spontaneous labor, which is not a comparison that is useful to guide clinical decision making.”

Dr. Grobman and his colleagues compared the deliveries of 3,062 women assigned to labor induction between 39 weeks and 39 weeks 4 days of gestation with those of 3,044 women assigned to expectant management until 40 weeks 5 days of gestation. Women in both groups had a singleton fetus, no indication for early delivery, and no plan to deliver by cesarean section. Participants were assessed at about 38 weeks of gestation and randomly assigned to labor induction or expectant management as part of a multicenter, randomized, controlled, parallel-group, unmasked trial in 41 maternal-fetal medicine departments at hospitals participating in the Eunice Kennedy Shriver National Institute of Child Health and Human Development network. Women were screened between March 2014 and August 2017.

The primary outcome was a composite of perinatal death, respiratory support, an Apgar score of 3 or less at 5 minutes, hypoxic-ischemic encephalopathy, seizure, infection, meconium aspiration syndrome, birth trauma, intracranial or subgaleal hemorrhage, or hypotension requiring vasopressor support. The principal secondary outcome was cesarean delivery; other secondary outcomes included neonatal intensive care, infection, length of postpartum hospital stay, and hypertension, among others.

Dr. Grobman and his colleagues found that the primary composite outcome occurred in 132 neonates (4.3%) in the induction group and 164 (5.4%) in the expectant-management group (relative risk, 0.80; 95% confidence interval, 0.64-1.00; P = .049).
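For readers who want to see where that relative risk comes from, the short Python sketch below recomputes it from the reported event counts, using a standard unadjusted confidence interval on the log scale (the Wald method). This is an illustrative calculation, not the investigators' analysis, which may have used a different or adjusted method; this simple version does, however, reproduce the published figures. The function name `relative_risk_ci` is hypothetical.

```python
import math


def relative_risk_ci(events_a: int, n_a: int, events_b: int, n_b: int, z: float = 1.96):
    """Relative risk of group A vs. group B with an unadjusted log-scale (Wald) 95% CI."""
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    rr = risk_a / risk_b
    # Standard error of log(RR) for two independent binomial proportions
    se_log_rr = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Composite adverse perinatal outcome: induction (132 of 3,062) vs. expectant management (164 of 3,044)
rr, lower, upper = relative_risk_ci(132, 3062, 164, 3044)
print(f"RR {rr:.2f} (95% CI, {lower:.2f}-{upper:.2f})")  # prints: RR 0.80 (95% CI, 0.64-1.00)
```

Because the upper bound lands almost exactly on 1.00, the result sits at the edge of conventional statistical significance, which is consistent with the reported P value of .049.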

Regarding secondary outcomes, the risk of cesarean delivery was significantly lower in the induction group (18.6%) than in the expectant-management group (22.2%) (RR, 0.84; 95% CI, 0.76-0.93; P less than .001). Hypertensive disorders of pregnancy were also significantly less frequent in the induction group (9.1%) than in the expectant-management group (14.1%) (RR, 0.64; 95% CI, 0.56-0.74; P less than .001). The investigators said women who underwent induced labor had lower scores on a 10-point Likert scale, were more likely to have “extensions of the uterine incision during cesarean delivery,” perceived that they had “more control” during delivery, and had a shorter postpartum hospital stay than women who received expectant management. However, women in the induction group had a longer stay in the labor and delivery unit, they said.
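The cesarean-delivery figures can also be read on an absolute scale. The Python sketch below assumes only the rounded percentages quoted above; it recovers the reported relative risk and then derives an absolute risk reduction and a number needed to treat. Those last two are back-of-envelope figures that the investigators did not report, so they should be treated as approximate.

```python
# Rounded cesarean-delivery rates quoted in the article
induction_rate = 0.186
expectant_rate = 0.222

# Relative risk: ratio of the two rates (reported as 0.84)
relative_risk = induction_rate / expectant_rate

# Derived, not reported: absolute difference and number needed to treat
absolute_risk_reduction = expectant_rate - induction_rate
number_needed_to_treat = 1 / absolute_risk_reduction

print(f"RR  = {relative_risk:.2f}")                                      # RR  = 0.84
print(f"ARR = {absolute_risk_reduction * 100:.1f} percentage points")   # ARR = 3.6 percentage points
print(f"NNT = {number_needed_to_treat:.0f}")                            # NNT = 28
```

Under these assumptions, roughly 28 low-risk nulliparous women would need to be induced at 39 weeks to avoid one cesarean delivery.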

The researchers noted that the study’s limitations included its unmasked design, its lack of power to detect differences in infrequent outcomes, and the limited information available on labor induction at 39 weeks in low-risk nulliparous women.

“These results suggest that policies aimed at the avoidance of elective labor induction among low-risk nulliparous women at 39 weeks of gestation are unlikely to reduce the rate of cesarean delivery on a population level; the trial provides information that can be incorporated into discussions that rely on principles of shared decision making,” Dr. Grobman and his colleagues wrote.

This study was funded by the Eunice Kennedy Shriver National Institute of Child Health and Human Development. Dr. Silver reports receiving personal fees from Gestavision. The other authors report no relevant financial disclosures.

SOURCE: Grobman WA et al. N Engl J Med. 2018 Aug 9. doi: 10.1056/NEJMoa1800566.

FROM NEW ENGLAND JOURNAL OF MEDICINE

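Key clinical point: Labor induction at 39 weeks in low-risk nulliparous women lowered the rate of cesarean delivery without increasing the risk of adverse perinatal outcomes.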

Major finding: 18.6% of women in the induced labor group underwent cesarean delivery, compared with 22.2% in the expectant management group.

Study details: A multicenter randomized, controlled, parallel-group, unmasked trial of 6,106 women from 41 maternal-fetal medicine departments in hospitals participating in the Eunice Kennedy Shriver National Institute of Child Health and Human Development network screened between March 2014 and August 2017.

Disclosures: This study was funded by the Eunice Kennedy Shriver National Institute of Child Health and Human Development. Dr. Silver reports receiving personal fees from Gestavision. The other authors report no relevant financial disclosures.

Source: Grobman WA et al. N Engl J Med. 2018 Aug 9. doi: 10.1056/NEJMoa1800566.

New MS criteria may create more false positives

The revised 2017 McDonald criteria for multiple sclerosis (MS) have led to more diagnoses in patients with clinically isolated syndrome (CIS), but a new study suggests that the criteria may also produce more false-positive MS diagnoses among patients with less severe disease.

“In our data, specificity of the 2017 criteria was significantly lower than for the 2010 criteria,” Roos M. van der Vuurst de Vries, MD, from the department of neurology at Erasmus Medical Center in Rotterdam, the Netherlands, and her colleagues wrote in JAMA Neurology. “Earlier data showed that the previous McDonald criteria lead to a higher number of MS diagnoses in patients who will not have a second attack.”

Dr. van der Vuurst de Vries and her colleagues analyzed data from 229 patients with a CIS who underwent an MRI of the spinal cord to assess for dissemination in space (DIS); of these, 180 patients were scored for both DIS and dissemination in time (DIT) and had a “baseline MRI scan that included T1 images after gadolinium administration or scans that did not show any T2 hyperintense lesions.” Some patients also underwent a baseline lumbar puncture if clinically required.

Patients were assessed using both the 2010 and 2017 McDonald criteria for MS, and diagnostic performance was measured as sensitivity, specificity, positive and negative predictive values, and accuracy at 1, 3, and 5 years of follow-up. “The most important addition is that the new criteria allow MS diagnosis when the MRI scan meets criteria for DIS and unique oligoclonal bands (OCB) are present in [cerebrospinal fluid], even in absence of DIT on the MRI scan,” the researchers wrote. “The other major difference is that not only asymptomatic but also symptomatic lesions can be used to demonstrate DIS and DIT on MRI. Furthermore, cortical lesions can be used to demonstrate dissemination in space.”

The researchers found that 124 patients (54%) met the 2010 DIS criteria, of whom 74 (60%) went on to develop clinically definite MS, while 149 patients (65%) met the 2017 DIS criteria, of whom 89 (60%) developed clinically definite MS. Of the 180 patients scored for DIT, 46 (26%) met the 2010 DIT criteria, and 33 of them (72%) were diagnosed with clinically definite MS; 126 (70%) met the 2017 DIT criteria, and 76 of them (60%) developed clinically definite MS. The sensitivity of the 2010 criteria was 36% (95% confidence interval, 27%-47%), versus 68% for the 2017 criteria (95% CI, 57%-77%; P less than .001). However, the specificity of the 2017 criteria was lower (61%; 95% CI, 50%-71%) than that of the 2010 criteria (85%; 95% CI, 76%-92%; P less than .001). The 2017 criteria also yielded more MS diagnoses at baseline than the 2010 criteria did, but among patients diagnosed with MS at baseline, 14 of 22 (64%) under the 2010 criteria and 26 of 48 (54%) under the 2017 criteria had a second attack within 5 years.
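The percentages in the paragraph above follow directly from the reported counts. The Python sketch below recomputes them; the share of criteria-positive patients who went on to clinically definite MS (CDMS) is, by definition, the positive predictive value of meeting that criterion set. Sensitivity and specificity would also require counts of criteria-negative patients, which the article does not give, so the sketch does not attempt them. The function and variable names are illustrative.

```python
def positive_predictive_value(true_pos: int, false_pos: int) -> float:
    """Share of criteria-positive patients who went on to the outcome (here, CDMS)."""
    return true_pos / (true_pos + false_pos)


# Counts quoted in the article: (patients scored, patients meeting criteria,
# criteria-positive patients who developed clinically definite MS)
reported = {
    "2010 DIS": (229, 124, 74),
    "2017 DIS": (229, 149, 89),
    "2010 DIT": (180, 46, 33),
    "2017 DIT": (180, 126, 76),
}

results = {}
for label, (scored, met, cdms) in reported.items():
    pct_meeting = round(100 * met / scored)
    # Patients who met the criteria but did not develop CDMS count as false positives
    pct_ppv = round(100 * positive_predictive_value(cdms, met - cdms))
    results[label] = (pct_meeting, pct_ppv)
    print(f"{label}: {pct_meeting}% met criteria; {pct_ppv}% of them developed CDMS")
```

The output shows the pattern the authors describe: the 2017 criteria classify more patients as positive, while the share of positives who go on to CDMS stays the same or falls.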

The study was supported by the Dutch Multiple Sclerosis Research Foundation. One or more authors received compensation from Teva, Merck, Roche, Sanofi Genzyme, Biogen, and Novartis in the form of honoraria, for advisory board membership, as travel grants, or for participation in trials.

SOURCE: van der Vuurst de Vries RM et al. JAMA Neurol. 2018 Aug 6. doi: 10.1001/jamaneurol.2018.2160.

FROM JAMA NEUROLOGY

Key clinical point: The 2017 McDonald criteria for MS may diagnose more patients with a clinically isolated syndrome, but their lower specificity means they may also capture more patients who do not have a second attack.

Major finding: The sensitivity for the 2017 criteria was greater at 68%, compared with 36% in the 2010 criteria; however, specificity was significantly lower in the 2017 criteria at 61%, compared with 85% in the 2010 criteria.

Data source: An original study of 229 patients from the Netherlands with CIS who underwent an MRI scan within 3 months of symptoms.

Disclosures: The study was supported by the Dutch Multiple Sclerosis Research Foundation. One or more authors received compensation from Teva, Merck, Roche, Sanofi Genzyme, Biogen, and Novartis in the form of honoraria, for advisory board membership, as travel grants, or for participation in trials.

Source: van der Vuurst de Vries RM et al. JAMA Neurol. 2018 Aug 6. doi: 10.1001/jamaneurol.2018.2160.

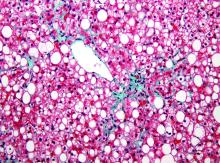

Weight gain linked to progression of fibrosis in NAFLD patients

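Weight gain was associated with progression of fibrosis in patients with nonalcoholic fatty liver disease (NAFLD), while weight loss was associated with a lower risk of progression, according to recent research published in Clinical Gastroenterology and Hepatology.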

Researchers evaluated 40,700 Korean adults (aged 18 years or older) with NAFLD who underwent health screenings during 2002-2016, with a median follow-up of 6 years. Patients were grouped into quintiles of weight change: weight loss (quintile 1, a loss of 2.3 kg or more; quintile 2, a loss of 0.6-2.2 kg), stable weight (quintile 3, a loss of 0.5 kg to a gain of 0.6 kg), and weight gain (quintile 4, a gain of 0.7-2.1 kg; quintile 5, a gain of 2.2 kg or more). Patients were followed from baseline until fibrosis progression or their last visit, with follow-up calculated in person-years, and the aspartate aminotransferase to platelet ratio index (APRI) was used to assess fibrosis. Body mass index was categorized using criteria specific to Asian populations: underweight, less than 18.5 kg/m²; normal weight, 18.5-22.9 kg/m²; overweight, 23-24.9 kg/m²; and obese, 25 kg/m² or higher.
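The grouping rules described above can be written as two small classification functions. This is a minimal sketch, assuming weight change is recorded to the nearest 0.1 kg (which closes the apparent gaps between the stated quintile ranges) and using the Asian-specific BMI cutoffs listed; the function names are hypothetical, and in the study itself the quintile boundaries were derived from the cohort's own weight-change distribution.

```python
def weight_change_quintile(change_kg: float) -> int:
    """Map a weight change in kg (negative = loss) to the study's quintile ranges."""
    change = round(change_kg, 1)  # assumption: weights recorded to the nearest 0.1 kg
    if change <= -2.3:
        return 1  # loss of 2.3 kg or more
    if change <= -0.6:
        return 2  # loss of 0.6-2.2 kg
    if change <= 0.6:
        return 3  # stable: loss of 0.5 kg to gain of 0.6 kg
    if change <= 2.1:
        return 4  # gain of 0.7-2.1 kg
    return 5      # gain of 2.2 kg or more


def asian_bmi_category(weight_kg: float, height_m: float) -> str:
    """Classify BMI (kg/m²) with the Asian-specific cutoffs described in the study."""
    bmi = weight_kg / height_m ** 2
    if bmi < 18.5:
        return "underweight"
    if bmi < 23:
        return "normal weight"
    if bmi < 25:
        return "overweight"
    return "obese"


print(weight_change_quintile(-3.0), weight_change_quintile(0.2), weight_change_quintile(2.5))  # 1 3 5
print(asian_bmi_category(70, 1.70))  # BMI about 24.2 -> overweight
```

Note how much lower these BMI cutoffs are than the conventional thresholds of 25 and 30 kg/m²: a 70-kg adult who is 1.70 m tall counts as overweight here.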

“Our findings from mostly asymptomatic, relatively young individuals with ultrasonographically detected steatosis, possibly reflecting low-risk NAFLD patients, are less likely to be affected by survivor bias and biases related to comorbidities, compared with previous findings from cohorts of high-risk groups that underwent liver biopsy,” Seungho Ryu, MD, PhD, from Kangbuk Samsung Hospital in Seoul, South Korea, and colleagues wrote in the study.

During 275,451.5 person-years of follow-up, 5,454 participants progressed from a low APRI to an intermediate or high APRI, the researchers said. Compared with the stable-weight group, the hazard ratios for APRI progression were 0.68 (95% confidence interval, 0.62-0.74) in the first weight-change quintile and 0.86 (95% CI, 0.78-0.94) in the second. Conversely, weight gain was associated with a higher risk of APRI progression in the fourth quintile (HR, 1.17; 95% CI, 1.07-1.28) and the fifth quintile (HR, 1.71; 95% CI, 1.58-1.85).
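The counts above also give a crude incidence rate of fibrosis progression for the cohort as a whole, and the hazard ratios can be restated as percentage changes in hazard. The Python sketch below does both; the crude rate is unadjusted, and the researchers' hazard ratios come from time-to-event models that cannot be reconstructed from this rate alone.

```python
events = 5_454            # participants progressing to an intermediate or high APRI
person_years = 275_451.5  # total follow-up reported by the researchers

# Crude (unadjusted) incidence rate of APRI progression
rate_per_1000 = events / person_years * 1_000
print(f"Crude rate: {rate_per_1000:.1f} per 1,000 person-years")  # Crude rate: 19.8 per 1,000 person-years

# Reported hazard ratios vs. the stable-weight quintile, restated as % change in hazard
hazard_ratios = {"quintile 1": 0.68, "quintile 2": 0.86, "quintile 4": 1.17, "quintile 5": 1.71}
relative_change = {label: round((hr - 1) * 100) for label, hr in hazard_ratios.items()}
for label, pct in relative_change.items():
    print(f"{label}: {pct:+d}% hazard vs. stable weight")
```

Read this way, the largest weight gain was associated with a roughly 71% higher hazard of APRI progression, and the largest weight loss with a roughly 32% lower hazard.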

After multivariable adjustment, the risk of APRI progression was higher among patients with a BMI of 23-24.9 kg/m² (HR, 1.13; 95% CI, 1.02-1.26), 25-29.9 kg/m² (HR, 1.41; 95% CI, 1.28-1.55), or 30 kg/m² or higher (HR, 2.09; 95% CI, 1.86-2.36), compared with patients with a BMI of 18.5-22.9 kg/m².

Limitations of the study included the use of ultrasonography in place of liver biopsy for diagnosing NAFLD and the use of APRI to predict fibrosis in individuals with NAFLD, researchers said.

“APRI has demonstrated a reasonable utility as a noninvasive method for the prediction of histologically confirmed advanced fibrosis,” Dr. Ryu and colleagues wrote. “Nonetheless, we acknowledge that there is no currently available longitudinal data to support the use of worsening noninvasive fibrosis markers as an indicator of histological progression of fibrosis stage over time.”

Other limitations included the study’s retrospective design, the lack of data on medication use and dietary intake, and limited generalizability, since the cohort consisted of young, healthy, mostly Korean employees of companies and local government organizations. Nonetheless, the researchers said clinicians should encourage patients with NAFLD to maintain a healthy weight to avoid progression of fibrosis.

The authors reported no relevant financial disclosures.

SOURCE: Kim Y et al. Clin Gastroenterol Hepatol. 2018. doi: 10.1016/j.cgh.2018.07.006.

according to recent research published in Clinical Gastroenterology and Hepatology.

Researchers evaluated 40,700 Korean adults (minimum age, 18 years) with NAFLD who underwent health screenings during 2002-2016 with a median 6-year follow-up. Patients were categorized and placed into weight quintiles based on whether they lost weight (quintile 1, 2.3-kg or greater weight loss; quintile 2, 2.2-kg to 0.6-kg weight loss), gained weight (quintile 4, 0.7- to 2.1-kg weight gain; quintile 5, at least 2.2-kg or greater weight gain) or whether their weight remained stable (quintile 3, 0.5-kg weight loss to 0.6-kg weight gain). Researchers followed patients from baseline to fibrosis progression or last visit, calculated as person-years, and used the aspartate aminotransferase to platelet ratio index (APRI) to measure outcomes. They defined body mass index based on criteria specific to Asian populations, with underweight categorized as less than 18.5 kg/m2, normal weight as 18.5-23 kg/m2, overweight as 23-25 kg/m2, and obese as at least 25 kg/m2.

“Our findings from mostly asymptomatic, relatively young individuals with ultrasonographically detected steatosis, possibly reflecting low-risk NAFLD patients, are less likely to be affected by survivor bias and biases related to comorbidities, compared with previous findings from cohorts of high-risk groups that underwent liver biopsy,” Seungho Ryu, MD, PhD, from Kangbuk Samsung Hospital in Seoul, South Korea, and colleagues wrote in the study.

There were 5,454 participants who progressed from a low APRI to an intermediate or high APRI within 275,451.5 person-years, researchers said. Compared with the stable-weight group, hazard ratios for APRI progression in the first weight-change quintile were 0.68 (95% confidence interval, 0.62-0.74) and 0.86 in the second weight-change quintile (95% CI, 0.78-0.94). In the weight-gain groups, an increase in weight was associated with APRI progression in the fourth quintile (HR, 1.17; 95% CI, 1.07-1.28) and fifth quintile (HR, 1.71; 95% CI, 1.58-1.85) groups.

After multivariable adjustment, there was an increase in APRI progression among patients with BMIs between 23 and 24.9 kg/m2 (HR, 1.13; 95% CI, 1.02-1.26), between 25 and 29.9 kg/m2 (HR, 1.41; 95% CI, 1.28-1.55), and greater than or equal to 30 kg/m2 (HR, 2.09; 95% CI, 1.86-2.36) compared with patients with a BMI between 18.5 and 22.9 kg/m2,.

Limitations of the study included the use of ultrasonography in place of liver biopsy for diagnosing NAFLD and the use of APRI to predict fibrosis in individuals with NAFLD, researchers said.

“APRI has demonstrated a reasonable utility as a noninvasive method for the prediction of histologically confirmed advanced fibrosis,” Dr. Ryu and colleagues wrote. “Nonetheless, we acknowledge that there is no currently available longitudinal data to support the use of worsening noninvasive fibrosis markers as an indicator of histological progression of fibrosis stage over time.”

Other limitations included the study’s retrospective design, lack of availability of medication use and dietary intake, and lack of generalization based on a young, healthy population of mostly Korean employees who were employed by companies or local government. However, researchers said clinicians should encourage their patients with NAFLD to maintain a healthy weight to avoid progression of fibrosis.

The authors reported no relevant financial disclosures.

SOURCE: Kim Y et al. Clin Gastroenterol Hepatol. 2018. doi: 10.1016/j.cgh.2018.07.006.

FROM CLINICAL GASTROENTEROLOGY AND HEPATOLOGY

Key clinical point: Obesity and weight gain were linked to progression of fibrosis in adults with NAFLD.

Major finding: The degree of weight change was associated with the risk of fibrosis progression; compared with patients whose weight remained stable, patients in weight-gain quintiles 4 and 5 had hazard ratios of 1.17 and 1.71, respectively.

Data source: A retrospective study of 40,700 Korean adults with NAFLD who underwent health screenings during 2002-2016 with a median 6-year follow-up.

Disclosures: The authors reported no relevant financial disclosures.

Source: Kim Y et al. Clin Gastroenterol Hepatol. 2018. doi: 10.1016/j.cgh.2018.07.006.

Opioid use has not declined meaningfully

Opioid use has not significantly declined over the past 10 years despite efforts to educate prescribers about the risks of opioid abuse, with over half of disabled Medicare beneficiaries using opioids each year, according to a recent retrospective cohort study published in the BMJ.

“We found very high prevalence of opioid use and opioid doses in disabled Medicare beneficiaries, most likely reflecting the high burden of illness in this population,” Molly M. Jeffery, PhD, from the Mayo Clinic, Rochester, Minn., and her associates wrote in their study.

The investigators evaluated pharmaceutical and medical claims data from 48 million individuals who were commercially insured or enrolled in Medicare Advantage, including both beneficiaries eligible because of age (65 years or older) and those younger than 65 years who were eligible because of disability. The researchers found that 52% of disabled Medicare beneficiaries, 26% of aged Medicare beneficiaries, and 14% of commercially insured patients used opioids each year during the study period.

In the commercially insured group, quarterly opioid prevalence fluctuated little, and the average daily dose was 17 morphine milligram equivalents (MME) during 2011-2016; 6% of patients used opioids in a given quarter at both the beginning and end of the study. In the aged Medicare group, quarterly opioid prevalence increased from 11% at the beginning of the study period to 14% at the end, and the average daily dose rose from 18 MME in 2011 to 20 MME in 2016.

Among commercial beneficiaries, those aged 45-54 years had the highest prevalence of opioid use, researchers said. The disabled Medicare group had the largest increases in both opioid prevalence and average daily dose: prevalence rose from 26% in 2007 to 39% in 2016, and the average daily dose rose from 53 MME to 56 MME over the same period.

“Doctors and patients should consider whether long-term opioid use is improving the patient’s ability to function and, if not, should consider other treatments either as an addition or replacement to opioid use,” Dr. Jeffery and her colleagues wrote. “Evidence-based approaches are needed to improve both the safety of opioid use and patient outcomes including pain management and ability to function.”

The researchers noted limitations of the study, including the exclusion of people covered by Medicaid or fee-for-service Medicare and of uninsured people. In addition, the data did not indicate the prevalence of chronic pain or the duration of pain in the study population, they said.

The authors reported no relevant financial disclosures.

SOURCE: Jeffery MM et al. BMJ. 2018 Aug 1. doi: 10.1136/bmj.k2833.

FROM THE BMJ

Key clinical point: Opioid use was most prevalent among disabled Medicare Advantage beneficiaries.

Major finding: Of those studied, annual prevalence of opioid use was 14% for commercial beneficiaries, 26% for aged Medicare beneficiaries, and 52% for disabled Medicare beneficiaries.

Study details: An observational cohort study of claims data from 48 million people who had commercial insurance or Medicare Advantage between January 2007 and December 2016.

Disclosures: The authors reported no relevant financial disclosures.

Source: Jeffery MM et al. BMJ. 2018 Aug 1. doi: 10.1136/bmj.k2833.

Autoimmune connective tissue disease predicted by interferon status, family history

Patients at risk of autoimmune connective tissue disease who progressed to actual disease had elevated interferon scores and a family history of autoimmune rheumatic disease, suggesting the interferon scores could be used to predict disease progression, according to results from a prospective, observational study published in Annals of the Rheumatic Diseases.