Age-related cognitive decline not inevitable?

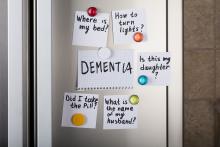

Investigators found that despite the presence of neuropathologies associated with Alzheimer’s disease (AD), many centenarians maintained high levels of cognitive performance.

“Cognitive decline is not inevitable,” senior author Henne Holstege, PhD, assistant professor, Amsterdam Alzheimer Center and Clinical Genetics, Amsterdam University Medical Center, said in an interview.

“At 100 years or older, high levels of cognitive performance can be maintained for several years, even when individuals are exposed to risk factors associated with cognitive decline,” she said.

The study was published online Jan. 15 in JAMA Network Open.

Escaping cognitive decline

Dr. Holstege said her interest in researching aging and cognitive health was inspired by the “fascinating” story of Hendrikje van Andel-Schipper, who died at age 115 in 2005 “completely cognitively healthy.” Van Andel-Schipper’s mother, who died at age 100, also was cognitively intact at the end of her life.

“I wanted to know how it is possible that some people can completely escape all aspects of cognitive decline while reaching extreme ages,” Dr. Holstege said.

To discover the secret to cognitive health in the oldest old, Dr. Holstege initiated the 100-Plus Study, a cohort study of cognitively healthy centenarians.

The investigators conducted extensive neuropsychological testing and collected blood and fecal samples to examine “the myriad factors that influence physical health, including genetics, neuropathology, blood markers, and the gut microbiome, to explore the molecular and neuropsychologic constellations associated with the escape from cognitive decline.”

The goal of the research was to investigate “to what extent centenarians were able to maintain their cognitive health after study inclusion, and to what extent this was associated with genetic, physical, or neuropathological features,” she said.

The study included 330 centenarians who completed one or more neuropsychological assessments. Neuropathologic studies were available for 44 participants.

To assess baseline cognitive performance, the researchers administered a wide array of neurocognitive tests, as well as the Mini–Mental State Examination, from which mean z scores for cognitive domains were calculated.
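Domain z scores are commonly computed by standardizing each raw test score against a reference mean and standard deviation, then averaging the standardized scores of the tests that belong to each domain. The article does not describe the study's norms or how tests were grouped, so the sketch below uses hypothetical test names, reference values, and domain assignments (`NORMS`, `DOMAINS`, `INVERTED`) purely for illustration.

```python
from statistics import mean

# Hypothetical reference norms: test name -> (reference mean, reference SD).
# Illustrative values only, not the study's norms.
NORMS = {
    "word_recall": (20.0, 5.0),
    "story_recall": (12.0, 4.0),
    "digit_span": (10.0, 2.0),
    "trail_making_a": (60.0, 20.0),
}

# Hypothetical grouping of tests into cognitive domains.
DOMAINS = {
    "memory": ["word_recall", "story_recall"],
    "attention": ["digit_span", "trail_making_a"],
}

# Tests where a higher raw score means worse performance (e.g., seconds to finish).
INVERTED = {"trail_making_a"}


def z_score(test: str, raw: float) -> float:
    """Standardize a raw score against its reference mean and SD."""
    ref_mean, ref_sd = NORMS[test]
    z = (raw - ref_mean) / ref_sd
    # Flip the sign so that a higher z always means better performance.
    return -z if test in INVERTED else z


def domain_scores(raw_scores: dict[str, float]) -> dict[str, float]:
    """Average the z scores of the completed tests in each domain."""
    result = {}
    for domain, tests in DOMAINS.items():
        zs = [z_score(t, raw_scores[t]) for t in tests if t in raw_scores]
        if zs:  # skip domains with no completed tests
            result[domain] = mean(zs)
    return result


print(domain_scores({"word_recall": 15.0, "story_recall": 14.0, "digit_span": 9.0}))
# {'memory': -0.25, 'attention': -0.5}
```

Flipping the sign for timed tests keeps every z score oriented the same way, so averaging within a domain is meaningful; tests a participant did not complete are simply left out of that domain's mean.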

Additional factors in the analysis included sex, age, APOE status, cognitive reserve, physical health, and whether participants lived independently.

At autopsy, the researchers assessed amyloid-beta (A-beta) levels, the intracellular accumulation of phosphorylated tau protein in neurofibrillary tangles (NFTs), and neuritic plaque (NP) load.

Resilience and cognitive reserve

At baseline, the median age of the centenarians (n = 330; 72.4% women) was 100.5 years (interquartile range, 100.2-101.7). A little over half (56.7%) lived independently, and the majority had good vision (65%) and hearing (56.4%). Most (78.8%) were able to walk independently, and 37.9% had reached the highest International Standard Classification of Education level, postsecondary education.

The researchers found “varying degrees of neuropathology” in the brains of the 44 donors, including A-beta, NFTs, and NPs.

Follow-up for the analysis of cognitive trajectories ranged from 0 to 4 years (median, 1.6 years).

Assessments of all cognitive domains showed no decline, with the exception of a “slight” decrement in memory function (beta −.10 SD per year; 95% confidence interval, −.14 to −.05 SD; P < .001).
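A beta of −.10 SD per year is a slope: the expected change in the memory z score for each additional year of follow-up, which over the median follow-up of 1.6 years amounts to roughly 0.16 SD. The article does not describe the study's statistical model; the sketch below is a simplification that fits an ordinary least-squares slope to one hypothetical participant's repeated z scores, whereas longitudinal cohort analyses often pool all participants in a mixed-effects model.

```python
def ols_slope(years: list[float], z_scores: list[float]) -> float:
    """Least-squares slope of z score on time, in SD per year."""
    n = len(years)
    if n < 2 or n != len(z_scores):
        raise ValueError("need at least two paired observations")
    mean_t = sum(years) / n
    mean_z = sum(z_scores) / n
    # Covariance of time and score divided by the variance of time.
    sxy = sum((t - mean_t) * (z - mean_z) for t, z in zip(years, z_scores))
    sxx = sum((t - mean_t) ** 2 for t in years)
    if sxx == 0:
        raise ValueError("observations must span more than one time point")
    return sxy / sxx


# Hypothetical participant: memory z scores at inclusion and two annual follow-ups.
years = [0.0, 1.0, 2.0]
memory_z = [0.20, 0.10, 0.00]
print(f"slope: {ols_slope(years, memory_z):.2f} SD/year")  # slope: -0.10 SD/year

# Expected change over the median follow-up (1.6 years) at the reported beta.
print(f"change over 1.6 years: {-0.10 * 1.6:.2f} SD")  # change over 1.6 years: -0.16 SD
```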

Cognitive performance was associated with measures of physical health and cognitive reserve, such as greater independence in performing activities of daily living, as assessed by the Barthel index (beta .37 SD per year; 95% CI, .24-.49; P < .001), and higher educational level (beta .41 SD per year; 95% CI, .29-.53; P < .001).

Although postmortem examination revealed neuropathologic “hallmarks” of AD in the centenarians’ brains, these changes were not associated with cognitive performance or rate of decline.

Carrying an APOE epsilon-4 or APOE epsilon-3 allele also was not significantly associated with cognitive performance or decline, suggesting that the “effects of APOE alleles are exerted before the age of 100 years,” the authors noted.

“Our findings suggest that after reaching age 100 years, cognitive performance remains relatively stable during ensuing years. Therefore, these centenarians might be resilient or resistant against different risk factors of cognitive decline,” the authors wrote. They also speculated that resilience may be attributable to greater cognitive reserve.

“Our preliminary data indicate that approximately 60% of the chance to reach 100 years old is heritable. Therefore, to get a better understanding of which genetic factors associate with the prolonged maintenance of cognitive health, we are looking into which genetic variants occur more commonly in centenarians compared to younger individuals,” said Dr. Holstege.

“Of course, more research needs to be performed to get a better understanding of how such genetic elements might sustain brain health,” she added.

A ‘landmark study’

Commenting on the study in an interview, Thomas Perls, MD, MPH, professor of medicine, Boston University, called it a “landmark” study in research on exceptional longevity in humans.

Dr. Perls, the author of an accompanying editorial, noted that “one cannot absolutely assume a certain level of disability or risk for disease just because a person has achieved extreme age – in fact, if anything, their ability to achieve much older ages likely indicates that they have resistance or resilience to aging-related problems.”

Understanding the mechanism of the resilience could lead to treatment or prevention of AD, said Dr. Perls, who was not involved in the research.

“People have to be careful about ageist myths and attitudes and not have the ageist idea that the older you get, the sicker you get, because many individuals disprove that,” he cautioned.

The study was supported by Stichting Alzheimer Nederland and Stichting VUmc Fonds. Research from the Alzheimer Center Amsterdam is part of the neurodegeneration research program of Amsterdam Neuroscience. Dr. Holstege and Dr. Perls reported having no relevant financial relationships. The other authors’ disclosures are listed on the original article.

A version of this article first appeared on Medscape.com.

Simple blood test plus AI may flag early-stage Alzheimer’s disease

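A simple blood test combined with artificial intelligence (AI) may flag early-stage Alzheimer’s disease, raising the prospect of early intervention when effective treatments become available.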

In a study, investigators used six AI platforms, including Deep Learning, to assess epigenomic biomarkers in blood leukocytes. They found more than 150 epigenetic differences between participants with Alzheimer’s disease and those without it.

All of the AI platforms were effective in predicting Alzheimer’s disease. Deep Learning analysis of intragenic cytosine-phosphate-guanine sites (CpGs) achieved a sensitivity and specificity of 97%.
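Sensitivity is the share of people with the disease whom a test correctly flags, and specificity is the share of people without the disease whom it correctly clears. The sketch below computes both from paired true and predicted labels; the labels are hypothetical, not the study's data.

```python
def sensitivity_specificity(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Return (sensitivity, specificity) for binary labels, where 1 = disease."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # cases correctly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # cases missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # controls correctly cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # controls wrongly flagged
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical example: 4 cases and 4 controls, one error in each group.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
print(sensitivity_specificity(y_true, y_pred))  # (0.75, 0.75)
```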

“It’s almost as if the leukocytes have become a newspaper to tell us, ‘This is what is going on in the brain,’” lead author Ray Bahado-Singh, MD, chair of the department of obstetrics and gynecology, Oakland University, Auburn Hills, Mich., said in a news release.

The researchers noted that the findings, if replicated in future studies, may help in providing Alzheimer’s disease diagnoses “much earlier” in the disease process. “The holy grail is to identify patients in the preclinical stage so effective early interventions, including new medications, can be studied and ultimately used,” Dr. Bahado-Singh said.

“This certainly isn’t the final step in Alzheimer’s research, but I think this represents a significant change in direction,” he told attendees at a press briefing.

The findings were published online March 31 in PLOS ONE.

Silver tsunami

The investigators noted that Alzheimer’s disease is often diagnosed when the disease is in its later stages, after irreversible brain damage has occurred. “There is currently no cure for the disease, and the treatment is limited to drugs that attempt to treat symptoms and have little effect on the disease’s progression,” they noted.

Coinvestigator Khaled Imam, MD, director of geriatric medicine for Beaumont Health in Michigan, pointed out that although MRI and lumbar puncture can identify Alzheimer’s disease early, these procedures are expensive, invasive, or both.

“Having biomarkers in the blood ... and being able to identify [Alzheimer’s disease] years before symptoms start, hopefully we’d be able to intervene early on in the process of the disease,” Dr. Imam said.

It is estimated that the number of Americans aged 85 and older will triple by 2050. This impending “silver tsunami,” which will come with a commensurate increase in Alzheimer’s disease cases, makes it even more important to be able to diagnose the disease early on, he noted.

The study included 24 individuals with late-onset Alzheimer’s disease (70.8% women; mean age, 83 years) and 24 who were deemed “cognitively healthy” (66.7% women; mean age, 80 years). About 500 ng of genomic DNA was extracted from whole-blood samples from each participant.

Samples were analyzed with the Infinium MethylationEPIC BeadChip array and examined for methylation markers that would “indicate the disease process has started,” the researchers noted.

In addition to Deep Learning, the five other AI platforms were the Support Vector Machine, Generalized Linear Model, Prediction Analysis for Microarrays, Random Forest, and Linear Discriminant Analysis.

These platforms were used to assess epigenomic changes in leukocytes. The researchers also used Ingenuity Pathway Analysis to examine the biological pathways associated with those changes.

Significant “chemical changes”

Results showed that the Alzheimer’s disease group had 152 significantly differentially methylated CpGs in 171 genes in comparison with the non-Alzheimer’s disease group (false discovery rate P value < .05).
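A false discovery rate threshold limits the expected proportion of false positives among the CpG sites declared significant when close to a million sites are tested at once. The article does not name the correction procedure; the sketch below assumes the widely used Benjamini-Hochberg method and applies it to a handful of made-up P values.

```python
def benjamini_hochberg(p_values: list[float]) -> list[float]:
    """Return Benjamini-Hochberg adjusted P values (q values) in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest P first
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P value down so adjusted values never increase with rank.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        q = p_values[i] * m / rank
        running_min = min(running_min, q)
        adjusted[i] = running_min
    return adjusted


# Hypothetical raw P values for six CpG sites.
raw = [0.001, 0.008, 0.039, 0.041, 0.20, 0.74]
q = benjamini_hochberg(raw)
print([i for i, v in enumerate(q) if v < 0.05])  # [0, 1]
```

Note that sites 2 and 3 pass an unadjusted .05 cutoff but not the FDR threshold, which is the point of the correction when many sites are tested.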

As a whole, using intragenic and intergenic/extragenic CpGs, the AI platforms were effective in predicting who had Alzheimer’s disease (area under the curve [AUC], ≥ 0.93). Using intragenic markers, the AUC for Deep Learning was 0.99.
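The AUC can be read as the probability that a randomly chosen person with Alzheimer’s disease receives a higher classifier score than a randomly chosen person without it, so 0.5 is chance and 1.0 is perfect separation. The sketch below computes it directly from that pairwise definition (equivalent to the Mann-Whitney U statistic, with ties counted as one half); the scores are hypothetical.

```python
def auc(case_scores: list[float], control_scores: list[float]) -> float:
    """Probability that a random case outscores a random control (ties count 0.5)."""
    if not case_scores or not control_scores:
        raise ValueError("need at least one case and one control")
    wins = 0.0
    for c in case_scores:
        for k in control_scores:
            if c > k:
                wins += 1.0
            elif c == k:
                wins += 0.5
    return wins / (len(case_scores) * len(control_scores))


# Hypothetical classifier scores (higher = more likely Alzheimer's disease).
cases = [0.91, 0.85, 0.77, 0.40]
controls = [0.30, 0.52, 0.12, 0.45]
print(auc(cases, controls))  # 0.875
```

The double loop compares every case with every control, which is trivial at this study's size (24 × 24 = 576 pairs); rank-based formulas compute the same value more efficiently for large samples.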

“We looked at close to a million different sites, and we saw some chemical changes that we know are associated with alteration or change in gene function,” Dr. Bahado-Singh said.

Altered genes that were found in the Alzheimer’s disease group included CR1L, CTSV, S1PR1, and LTB4R – all of which “have been previously linked with Alzheimer’s disease and dementia,” the researchers noted. They also found the methylated genes CTSV and PRMT5, both of which have been previously associated with cardiovascular disease.

“A significant strength of our study is the novelty, i.e., the use of blood leukocytes to accurately detect Alzheimer’s disease and also for interrogating the pathogenesis of Alzheimer’s disease,” the investigators wrote.

Dr. Bahado-Singh said that the test let them identify changes in cells in the blood, “giving us a comprehensive account not only of the fact that the brain is being affected by Alzheimer’s disease but it’s telling us what kinds of processes are going on in the brain.

“Normally you don’t have access to the brain. This gives us a simple blood test to get an ongoing reading of the course of events in the brain – and potentially tell us very early on before the onset of symptoms,” he added.

Cautiously optimistic

During the question-and-answer session following his presentation at the briefing, Dr. Bahado-Singh reiterated that the research was at a very early stage and that the team could not yet make clinical recommendations. However, he added, “There was evidence that DNA methylation change could likely precede the onset of abnormalities in the cells that give rise to the disease.”

Coinvestigator Stewart Graham, PhD, director of Alzheimer’s research at Beaumont Health, added that although the initial study findings led to some excitement for the team, “we have to be very conservative with what we say.”

He noted that the findings need to be replicated in a more diverse population. Still, “we’re excited at the moment and looking forward to seeing what the future results hold,” Dr. Graham said.

Dr. Bahado-Singh said that if larger studies confirm the findings and the test is viable, it would make sense to use it as a screen for individuals older than 65. He noted that because of the aging of the population, “this subset of individuals will constitute a larger and larger fraction of the population globally.”

Still early days

Commenting on the findings, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, noted that the investigators used an “interesting” diagnostic process.

“It was a unique approach to looking at and trying to understand what might be some of the biological underpinnings and using these tools and technologies to determine if they’re able to differentiate individuals with Alzheimer’s disease” from those without Alzheimer’s disease, said Dr. Snyder, who was not involved with the research.

“Ultimately, we want to know who is at greater risk, who may have some of the changing biology at the earliest time point so that we can intervene to stop the progression of the disease,” she said.

She pointed out that a number of types of biomarker tests are currently under investigation, many of which are measuring different outcomes. “And that’s what we want to see going forward. We want to have as many tools in our toolbox that allow us to accurately diagnose at that earliest time point,” Dr. Snyder said.

“At this point, [the current study] is still pretty early, so it needs to be replicated and then expanded to larger groups to really understand what they may be seeing,” she added.

Dr. Bahado-Singh, Dr. Imam, Dr. Graham, and Dr. Snyder have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

, raising the prospect of early intervention when effective treatments become available.

In a study, investigators used six AI methodologies, including Deep Learning, to assess blood leukocyte epigenomic biomarkers. They found more than 150 genetic differences among study participants with Alzheimer’s disease in comparison with participants who did not have Alzheimer’s disease.

All of the AI platforms were effective in predicting Alzheimer’s disease. Deep Learning’s assessment of intragenic cytosine-phosphate-guanines (CpGs) had sensitivity and specificity rates of 97%.

“It’s almost as if the leukocytes have become a newspaper to tell us, ‘This is what is going on in the brain,’ “ lead author Ray Bahado-Singh, MD, chair of the department of obstetrics and gynecology, Oakland University, Auburn Hills, Mich., said in a news release.

The researchers noted that the findings, if replicated in future studies, may help in providing Alzheimer’s disease diagnoses “much earlier” in the disease process. “The holy grail is to identify patients in the preclinical stage so effective early interventions, including new medications, can be studied and ultimately used,” Dr. Bahado-Singh said.

“This certainly isn’t the final step in Alzheimer’s research, but I think this represents a significant change in direction,” he told attendees at a press briefing.

The findings were published online March 31 in PLOS ONE.

Silver tsunami

The investigators noted that Alzheimer’s disease is often diagnosed when the disease is in its later stages, after irreversible brain damage has occurred. “There is currently no cure for the disease, and the treatment is limited to drugs that attempt to treat symptoms and have little effect on the disease’s progression,” they noted.

Coinvestigator Khaled Imam, MD, director of geriatric medicine for Beaumont Health in Michigan, pointed out that although MRI and lumbar puncture can identify Alzheimer’s disease early on, the processes are expensive and/or invasive.

“Having biomarkers in the blood ... and being able to identify [Alzheimer’s disease] years before symptoms start, hopefully we’d be able to intervene early on in the process of the disease,” Dr. Imam said.

It is estimated that the number of Americans aged 85 and older will triple by 2050. This impending “silver tsunami,” which will come with a commensurate increase in Alzheimer’s disease cases, makes it even more important to be able to diagnose the disease early on, he noted.

The study included 24 individuals with late-onset Alzheimer’s disease (70.8% women; mean age, 83 years); 24 were deemed to be “cognitively healthy” (66.7% women; mean age, 80 years). About 500 ng of genomic DNA was extracted from whole-blood samples from each participant.

The researchers used the Infinium MethylationEPIC BeadChip array, and the samples were then examined for markers of methylation that would “indicate the disease process has started,” they noted.

In addition to Deep Learning, the five other AI platforms were the Support Vector Machine, Generalized Linear Model, Prediction Analysis for Microarrays, Random Forest, and Linear Discriminant Analysis.

These platforms were used to assess leukocyte genome changes. To predict Alzheimer’s disease, the researchers also used Ingenuity Pathway Analysis.

Significant “chemical changes”

Results showed that the Alzheimer’s disease group had 152 significantly differentially methylated CpGs in 171 genes in comparison with the non-Alzheimer’s disease group (false discovery rate P value < .05).

As a whole, using intragenic and intergenic/extragenic CpGs, the AI platforms were effective in predicting who had Alzheimer’s disease (area under the curve [AUC], ≥ 0.93). Using intragenic markers, the AUC for Deep Learning was 0.99.

“We looked at close to a million different sites, and we saw some chemical changes that we know are associated with alteration or change in gene function,” Dr. Bahado-Singh said.

Altered genes that were found in the Alzheimer’s disease group included CR1L, CTSV, S1PR1, and LTB4R – all of which “have been previously linked with Alzheimer’s disease and dementia,” the researchers noted. They also found the methylated genes CTSV and PRMT5, both of which have been previously associated with cardiovascular disease.

“A significant strength of our study is the novelty, i.e. the use of blood leukocytes to accurately detect Alzheimer’s disease and also for interrogating the pathogenesis of Alzheimer’s disease,” the investigators wrote.

Dr. Bahado-Singh said that the test let them identify changes in cells in the blood, “giving us a comprehensive account not only of the fact that the brain is being affected by Alzheimer’s disease but it’s telling us what kinds of processes are going on in the brain.

“Normally you don’t have access to the brain. This gives us a simple blood test to get an ongoing reading of the course of events in the brain – and potentially tell us very early on before the onset of symptoms,” he added.

Cautiously optimistic

During the question-and-answer session following his presentation at the briefing, Dr. Bahado-Singh reiterated that they are at a very early stage in the research and were not able to make clinical recommendations at this point. However, he added, “There was evidence that DNA methylation change could likely precede the onset of abnormalities in the cells that give rise to the disease.”

Coinvestigator Stewart Graham, PhD, director of Alzheimer’s research at Beaumont Health, added that although the initial study findings led to some excitement for the team, “we have to be very conservative with what we say.”

He noted that the findings need to be replicated in a more diverse population. Still, “we’re excited at the moment and looking forward to seeing what the future results hold,” Dr. Graham said.

Dr. Bahado-Singh said that if larger studies confirm the findings and the test is viable, it would make sense to use it as a screen for individuals older than 65. He noted that because of the aging of the population, “this subset of individuals will constitute a larger and larger fraction of the population globally.”

Still early days

Commenting on the findings, Heather Snyder, PhD, vice president of medical and scientific relations at the Alzheimer’s Association, noted that the investigators used an “interesting” diagnostic process.

“It was a unique approach to looking at and trying to understand what might be some of the biological underpinnings and using these tools and technologies to determine if they’re able to differentiate individuals with Alzheimer’s disease” from those without Alzheimer’s disease, said Dr. Snyder, who was not involved with the research.

“Ultimately, we want to know who is at greater risk, who may have some of the changing biology at the earliest time point so that we can intervene to stop the progression of the disease,” she said.

She pointed out that several types of biomarker tests are currently under investigation, many of which measure different outcomes. “And that’s what we want to see going forward. We want to have as many tools in our toolbox that allow us to accurately diagnose at that earliest time point,” Dr. Snyder said.

“At this point, [the current study] is still pretty early, so it needs to be replicated and then expanded to larger groups to really understand what they may be seeing,” she added.

Dr. Bahado-Singh, Dr. Imam, Dr. Graham, and Dr. Snyder have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM PLOS ONE

A paleolithic raw bar, and the human brush with extinction

This essay is adapted from the newly released book, “A History of the Human Brain: From the Sea Sponge to CRISPR, How Our Brain Evolved.”

“He was a bold man that first ate an oyster.” – Jonathan Swift

That man or, just as likely, that woman, may have done so out of necessity. It was either eat this glistening, gray blob of briny goo or perish.

Beginning 190,000 years ago, a glacial age we identify today as Marine Isotope Stage 6, or MIS6, had set in, cooling and drying out much of the planet. There was widespread drought, leaving the African plains a harsher, more barren substrate for survival – an arena of competition, desperation, and starvation for many species, including ours. Some estimates have the sapiens population dipping to just a few hundred people during MIS6. Like other apes today, we were an endangered species. But through some nexus of intelligence, ecological exploitation, and luck, we managed. Anthropologists argue over what part of Africa would’ve been hospitable enough to rescue sapiens from Darwinian oblivion. Arizona State University archaeologist Curtis Marean, PhD, believes the continent’s southern shore is a good candidate.

For 2 decades, Dr. Marean has overseen excavations at a site called Pinnacle Point on the South African coast. The region has over 9,000 plant species, including the world’s most diverse population of geophytes, plants with underground energy-storage organs such as bulbs, tubers, and rhizomes. These subterranean stores are rich in calories and carbohydrates, and, by virtue of being buried, are protected from most other species (save the occasional tool-wielding chimpanzee). They are also adapted to cold climates and, when cooked, easily digested. All in all, a coup for hunter-gatherers.

The other enticement at Pinnacle Point could be found with a few easy steps toward the sea. Mollusks. Geological samples from MIS6 show South Africa’s shores were packed with mussels, oysters, clams, and a variety of sea snails. We almost certainly turned to them for nutrition.

Dr. Marean’s research suggests that, sometime around 160,000 years ago, at least one group of sapiens began supplementing their terrestrial diet by exploiting the region’s rich shellfish beds. This is the oldest evidence to date of humans consistently feasting on seafood – easy, predictable, immobile calories. No hunting required. As inland Africa dried up, learning to shuck mussels and oysters was a key adaptation to coastal living, one that supported our later migration out of the continent.

Dr. Marean believes the change in behavior was possible thanks to our already keen brains, which supported an ability to track tides, especially spring tides. Spring tides occur twice a month with each new and full moon and result in the greatest difference between high and low tidewaters. The people of Pinnacle Point learned to exploit this cycle. “By tracking tides, we would have had easy, reliable access to high-quality proteins and fats from shellfish every 2 weeks as the ocean receded,” he says. “Whereas you can’t rely on land animals to always be in the same place at the same time.” Work by Jan De Vynck, PhD, a professor at Nelson Mandela University in South Africa, supports this idea, showing that foraging shellfish beds under optimal tidal conditions can yield a staggering 3,500 calories per hour!

“I don’t know if we owe our existence to seafood, but it was certainly important for the population [that Dr.] Curtis studies. That place is full of mussels,” said Ian Tattersall, PhD, curator emeritus with the American Museum of Natural History in New York.

“And I like the idea that during a population bottleneck we got creative and learned how to focus on marine resources.” Innovations, Dr. Tattersall explained, typically occur in small, fixed populations. Large populations have too much genetic inertia to support radical innovation; the status quo is enough to survive. “If you’re looking for evolutionary innovation, you have to look at smaller groups.”

MIS6 wasn’t the only near-extinction in our past. During the Pleistocene epoch, roughly 2.5 million to 12,000 years ago, humans tended to maintain a small population, hovering around a million and later growing to maybe 8 million at most. Periodically, our numbers dipped as climate shifts, natural disasters, and food shortages brought us dangerously close to extinction. Modern humans are descended from the hardy survivors of these bottlenecks.

One especially dire stretch occurred around 1 million years ago. Our effective population (the number of breeding individuals) shriveled to around 18,000, smaller than that of other apes at the time. Worse, our genetic diversity – the insurance policy on evolutionary success and the ability to adapt – plummeted. A similar near-extinction may have occurred around 75,000 years ago, the result of a massive volcanic eruption in Sumatra.

Our smarts and adaptability helped us endure these tough times – omnivorism helped us weather scarcity.

A sea of vitamins

Both Dr. Marean and Dr. Tattersall agree that the sapiens hanging on in southern Africa couldn’t have lived entirely on shellfish.

Most likely they also spent time hunting and foraging roots inland, making pilgrimages to the sea during spring tides. Dr. Marean believes coastal cuisine may have allowed a paltry human population to hang on until climate change led to more hospitable terrain. He’s not entirely sold on the idea that marine life was necessarily a driver of human brain evolution.

By the time we incorporated seafood into our diets, we were already smart, our brains shaped through millennia of selection for intelligence. “Being a marine forager requires a certain degree of sophisticated smarts,” he said. It requires tracking the lunar cycle and planning excursions to the coast at the right times. Shellfish were simply another source of calories.

Unless you ask Michael Crawford.

Dr. Crawford is a professor at Imperial College London and a strident believer that our brains are those of sea creatures. Sort of.

In 1972, he copublished a paper concluding that the brain is structurally and functionally dependent on an omega-3 fatty acid called docosahexaenoic acid, or DHA. The human brain is composed of nearly 60% fat, so it’s not surprising that certain fats are important to brain health. Nearly 50 years after Dr. Crawford’s study, omega-3 supplements are now a multi-billion-dollar business.

Omega-3s, or more formally, omega-3 polyunsaturated fatty acids (PUFAs), are essential fats, meaning they aren’t produced by the body and must be obtained through diet. We get them from vegetable oils, nuts, seeds, and animals that eat such things. But take an informal poll, and you’ll find most people probably associate omega fatty acids with fish and other seafood.

In the 1970s and 1980s, scientists took notice of the low rates of heart disease in Eskimo communities. Research linked their cardiovascular health to a high-fish diet (fish don’t produce omega-3s themselves; they obtain them from algae), and eventually the medical and scientific communities began to rethink fat. Study after study found omega-3 fatty acids to be healthy. They were linked with a lower risk for heart disease and overall mortality. All those decades of parents forcing various fish oils on their grimacing children now had some science behind them. There is such a thing as a good fat.

Omega-3s also matter for the brain, especially DHA and eicosapentaenoic acid, or EPA. Omega fats provide structure to neuronal cell membranes and are crucial in neuron-to-neuron communication. They increase levels of a protein called brain-derived neurotrophic factor (BDNF), which supports neuronal growth and survival. A growing body of evidence shows omega-3 supplementation may slow down the process of neurodegeneration, the gradual deterioration of the brain that results in Alzheimer’s disease and other forms of dementia.

Popping a daily omega-3 supplement or, better still, eating a seafood-rich diet, may increase blood flow to the brain. In 2019, the International Society for Nutritional Psychiatry Research recommended omega-3s as an adjunct therapy for major depressive disorder. PUFAs appear to reduce the risk for and severity of mood disorders such as depression and to boost attention in children with ADHD as effectively as drug therapies.

Many researchers claim there would’ve been plenty of DHA available on land to support early humans, and marine foods were just one of many sources.

Not Dr. Crawford.

He believes not only that brain development and function depend on DHA but also that DHA sourced from the sea was critical to mammalian brain evolution. “The animal brain evolved 600 million years ago in the ocean and was dependent on DHA, as well as compounds such as iodine, which is also in short supply on land,” he said. “To build a brain, you need these building blocks, which were rich at sea and on rocky shores.”

Dr. Crawford cites his early biochemical work showing DHA isn’t readily accessible from the muscle tissue of land animals. Using DHA tagged with a radioactive isotope, he and his colleagues in the 1970s found that “ready-made” DHA, like that found in shellfish, is incorporated into the developing rat brain with 10-fold greater efficiency than the DHA obtainable from plants and land animals, where it exists as its metabolic precursor, alpha-linolenic acid. “I’m afraid the idea that ample DHA was available from the fats of animals on the savanna is just not true,” he counters. According to Dr. Crawford, our tiny, wormlike ancestors were able to evolve primitive nervous systems and flit through the silt thanks to the abundance of healthy fat to be had by living in the ocean and consuming algae.

For over 40 years, Dr. Crawford has argued that rising rates of mental illness are a result of post–World War II dietary changes, especially the move toward land-sourced food and the medical community’s subsequent support of low-fat diets. He feels that omega-3s from seafood were critical to humans’ rapid neural march toward higher cognition, and are therefore critical to brain health. “The continued rise in mental illness is an incredibly important threat to mankind and society, and moving away from marine foods is a major contributor,” said Dr. Crawford.

University of Sherbrooke (Que.) physiology professor Stephen Cunnane, PhD, tends to agree that aquatically sourced nutrients were crucial to human evolution. It’s the importance of coastal living he’s not sure about. He believes hominins would’ve incorporated fish from lakes and rivers into their diet for millions of years. In his view, it wasn’t just omega-3s that contributed to our big brains, but a cluster of nutrients found in fish: iodine, iron, zinc, copper, and selenium among them. “I think DHA was hugely important to our evolution and brain health but I don’t think it was a magic bullet all by itself,” he said. “Numerous other nutrients found in fish and shellfish were very probably important, too, and are now known to be good for the brain.”

Dr. Marean agrees. “Accessing the marine food chain could have had a huge impact on fertility, survival, and overall health, including brain health, in part, due to the high return on omega-3 fatty acids and other nutrients.” But, he speculates, before MIS6, hominins would have had access to plenty of brain-healthy terrestrial nutrition, including meat from animals that consumed omega-3–rich plants and grains.

Dr. Cunnane agrees with Dr. Marean to a degree. He’s confident that higher intelligence evolved gradually over millions of years as mutations inching the cognitive needle forward conferred survival and reproductive advantages – but he maintains that certain advantages like, say, being able to shuck an oyster, allowed an already intelligent brain to thrive.

Foraging marine life in the waters off Africa likely played an important role in keeping some of our ancestors alive and supported our subsequent propagation throughout the world. By this point, the human brain was already a marvel of consciousness and computing, not too dissimilar to the one we carry around today.

In all likelihood, Pleistocene humans got their nutrients and calories wherever they could. If we lived inland, we hunted. Maybe we speared the occasional catfish. We sourced nutrients from fruits, leaves, and nuts. A few times a month, those of us near the coast enjoyed a feast of mussels and oysters.

Dr. Stetka is an editorial director at Medscape.com, a former neuroscience researcher, and a nonpracticing physician. A version of this article first appeared on Medscape.


Foraging marine life in the waters off Africa likely played an important role in keeping some of our ancestors alive and supported our subsequent spread throughout the world. By this point, the human brain was already a marvel of consciousness and computing, not too dissimilar to the one we carry around today.

Pleistocene humans most likely got their nutrients and calories wherever they could. If we lived inland, we hunted. Maybe we speared the occasional catfish. We sourced nutrients from fruits, leaves, and nuts. A few times a month, those of us near the coast enjoyed a feast of mussels and oysters.

Dr. Stetka is an editorial director at Medscape.com, a former neuroscience researcher, and a nonpracticing physician. A version of this article first appeared on Medscape.

This essay is adapted from the newly released book, “A History of the Human Brain: From the Sea Sponge to CRISPR, How Our Brain Evolved.”

“He was a bold man that first ate an oyster.” – Jonathan Swift

That man or, just as likely, that woman, may have done so out of necessity. It was either eat this glistening, gray blob of briny goo or perish.

Beginning 190,000 years ago, a glacial age we identify today as Marine Isotope Stage 6, or MIS6, set in, cooling and drying out much of the planet. There was widespread drought, leaving the African plains a harsher, more barren substrate for survival – an arena of competition, desperation, and starvation for many species, including ours. Some estimates have the sapiens population dipping to just a few hundred people during MIS6. Like other apes today, we were an endangered species. But through some nexus of intelligence, ecological exploitation, and luck, we managed. Anthropologists argue over what part of Africa would’ve been hospitable enough to rescue sapiens from Darwinian oblivion. Arizona State University archaeologist Curtis Marean, PhD, believes the continent’s southern shore is a good candidate.

For 2 decades, Dr. Marean has overseen excavations at a site called Pinnacle Point on the South African coast. The region has over 9,000 plant species, including the world’s most diverse population of geophytes, plants with underground energy-storage organs such as bulbs, tubers, and rhizomes. These subterranean stores are rich in calories and carbohydrates, and, by virtue of being buried, are protected from most other species (save the occasional tool-wielding chimpanzee). They are also adapted to cold climates and, when cooked, easily digested. All in all, a coup for hunter-gatherers.

The other enticement at Pinnacle Point could be found with a few easy steps toward the sea. Mollusks. Geological samples from MIS6 show South Africa’s shores were packed with mussels, oysters, clams, and a variety of sea snails. We almost certainly turned to them for nutrition.

Dr. Marean’s research suggests that, sometime around 160,000 years ago, at least one group of sapiens began supplementing their terrestrial diet by exploiting the region’s rich shellfish beds. This is the oldest evidence to date of humans consistently feasting on seafood – easy, predictable, immobile calories. No hunting required. As inland Africa dried up, learning to shuck mussels and oysters was a key adaptation to coastal living, one that supported our later migration out of the continent.

Dr. Marean believes the change in behavior was possible thanks to our already keen brains, which supported an ability to track tides, especially spring tides. Spring tides occur twice a month with each new and full moon and result in the greatest difference between high and low tidewaters. The people of Pinnacle Point learned to exploit this cycle. “By tracking tides, we would have had easy, reliable access to high-quality proteins and fats from shellfish every 2 weeks as the ocean receded,” he says. “Whereas you can’t rely on land animals to always be in the same place at the same time.” Work by Jan De Vynck, PhD, a professor at Nelson Mandela University in South Africa, supports this idea, showing that foraging shellfish beds under optimal tidal conditions can yield a staggering 3,500 calories per hour!

“I don’t know if we owe our existence to seafood, but it was certainly important for the population [that Dr. Marean] studies. That place is full of mussels,” said Ian Tattersall, PhD, curator emeritus with the American Museum of Natural History in New York.

“And I like the idea that during a population bottleneck we got creative and learned how to focus on marine resources.” Innovations, Dr. Tattersall explained, typically occur in small, fixed populations. Large populations have too much genetic inertia to support radical innovation; the status quo is enough to survive. “If you’re looking for evolutionary innovation, you have to look at smaller groups.”

MIS6 wasn’t the only near-extinction in our past. During the Pleistocene epoch, roughly 2.5 million to 12,000 years ago, humans tended to maintain a small population, hovering around a million and later growing to maybe 8 million at most. Periodically, our numbers dipped as climate shifts, natural disasters, and food shortages brought us dangerously close to extinction. Modern humans are descended from the hardy survivors of these bottlenecks.

One especially dire stretch occurred around 1 million years ago. Our effective population (the number of breeding individuals) shriveled to around 18,000, smaller than that of other apes at the time. Worse, our genetic diversity – the insurance policy on evolutionary success and the ability to adapt – plummeted. A similar near-extinction may have occurred around 75,000 years ago, the result of a massive volcanic eruption in Sumatra.

Our smarts and adaptability helped us endure these tough times, and omnivorism helped us weather scarcity.

A sea of vitamins

Both Dr. Marean and Dr. Tattersall agree that the sapiens hanging on in southern Africa couldn’t have lived entirely on shellfish.

Most likely they also spent time hunting and foraging for roots inland, making pilgrimages to the sea during spring tides. Dr. Marean believes coastal cuisine may have allowed a paltry human population to hang on until climate change led to more hospitable terrain. He’s not convinced that marine life was necessarily a driver of human brain evolution.

By the time we incorporated seafood into our diets we were already smart, our brains shaped through millennia of selection for intelligence. “Being a marine forager requires a certain degree of sophisticated smarts,” he said. It requires tracking the lunar cycle and planning excursions to the coast at the right times. Shellfish were simply another source of calories.

Unless you ask Michael Crawford.

Dr. Crawford is a professor at Imperial College London and a strident believer that our brains are those of sea creatures. Sort of.

In 1972, he copublished a paper concluding that the brain is structurally and functionally dependent on an omega-3 fatty acid called docosahexaenoic acid, or DHA. The human brain is composed of nearly 60% fat, so it’s not surprising that certain fats are important to brain health. Nearly 50 years after Dr. Crawford’s study, omega-3 supplements are now a multi-billion-dollar business.

Omega-3s, or more formally, omega-3 polyunsaturated fatty acids (PUFAs), are essential fats, meaning they aren’t produced by the body and must be obtained through diet. We get them from vegetable oils, nuts, seeds, and animals that eat such things. But take an informal poll, and you’ll find most people probably associate omega fatty acids with fish and other seafood.

In the 1970s and 1980s, scientists took notice of the low rates of heart disease in Eskimo communities. Research linked their cardiovascular health to a high-fish diet (though fish cannot produce omega-3s, they source them from algae), and eventually the medical and scientific communities began to rethink fat. Study after study found omega-3 fatty acids to be healthy. They were linked with a lower risk for heart disease and overall mortality. All those decades of parents forcing various fish oils on their grimacing children now had some science behind them. There is such a thing as a good fat.
