Cancer patients’ caregivers may carry greater burden

©ASCO/Todd Buchanan 2016

SAN FRANCISCO—New research suggests caring for a loved one with cancer may be more burdensome than caring for a loved one with a different condition.

Researchers analyzed data from “Caregiving in the U.S. 2015,” an online panel study of unpaid adult caregivers.

The team compared cancer and non-cancer caregivers to determine similarities and differences in characteristics and experiences.

The findings were presented at the 2016 Palliative Care in Oncology Symposium (abstract 4).

The study included 1248 caregivers who were 18 or older when surveyed and who provided care to an adult patient. Seven percent of these caregivers were caring for patients with cancer.

Cancer caregivers reported spending more hours per week providing care than non-cancer caregivers—32.9 and 23.9 hours, respectively.

In addition, cancer caregivers were more likely than other caregivers to communicate with healthcare professionals (82% and 62%, respectively), monitor and adjust patients’ care (76% and 66%, respectively), and advocate on behalf of patients (62% and 49%, respectively).

Despite high levels of engagement with providers, cancer caregivers were nearly twice as likely as non-cancer caregivers to report needing more help and information with making end-of-life decisions—40% and 21%, respectively.
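To check “nearly twice as likely” and the other comparisons, you can divide each cancer-caregiver figure by its non-cancer counterpart. The sketch below does this with the reported numbers. It computes simple ratios of proportions, which may differ from the statistics the investigators reported, such as odds ratios. The subgroup size implied by “seven percent” is only approximate because the percentage is itself rounded.

```python
# Figures as reported for cancer vs non-cancer caregivers.
# Percentages are shares of each group; hours are hours of care per week.
REPORTED: dict[str, tuple[float, float]] = {
    "hours of care per week": (32.9, 23.9),
    "communicate with healthcare professionals (%)": (82, 62),
    "monitor and adjust patients' care (%)": (76, 66),
    "advocate on behalf of patients (%)": (62, 49),
    "need more help with end-of-life decisions (%)": (40, 21),
}


def ratio(cancer: float, non_cancer: float) -> float:
    """How many times larger the cancer-caregiver figure is
    (a plain ratio, not an odds ratio)."""
    return cancer / non_cancer


def approx_count(total: int, percent: float) -> int:
    """Rough subgroup size implied by a percentage of a total."""
    return round(total * percent / 100)


if __name__ == "__main__":
    for label, (cancer, other) in REPORTED.items():
        print(f"{label}: {ratio(cancer, other):.2f}x")
    # 7% is itself a rounded figure, so this is only an approximate head count.
    print("approx. cancer caregivers:", approx_count(1248, 7))
```

For end-of-life decisions, 40 / 21 is about 1.90, which is consistent with “nearly twice as likely.” Seven percent of 1248 is roughly 87 caregivers.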

“Our research demonstrates the ripple effect that cancer has on families and patient support systems,” said study investigator Erin Kent, PhD, of the National Cancer Institute in Rockville, Maryland.

“Caregiving can be extremely stressful and demanding—physically, emotionally, and financially. The data show we need to do a better job of supporting these individuals, as their wellbeing is essential to the patient’s quality of life and outcomes.”

Dr Kent pointed to the cyclical nature of cancer care, which often involves short, highly intense periods of active treatment, as a possible reason for the greater intensity of caregiving. She noted that such intensity is also associated with increased caregiver stress and depression.

“Based on our findings, it’s clear we need additional research on caregiving to better understand at what point providers and clinicians should intervene to assess the wellbeing of caregivers,” Dr Kent said.

“Technology, combined with use of a clinical distress rating system, could be promising in the future as a means to ensure caregivers are being supported in a meaningful way.”

Improving communication between cancer pts and docs

©ASCO/Todd Buchanan 2016

SAN FRANCISCO—Results of the VOICE study showed that training advanced cancer patients and their oncologists on how to communicate resulted in more clinically meaningful discussions between the parties.

However, the discussions had no significant effect on patients’ understanding of their prognosis, their quality of life, their end-of-life care, or the patient-physician relationship.

Ronald Epstein, MD, of the University of Rochester in New York, and his colleagues reported results from this study in JAMA Oncology and at the 2016 Palliative Care in Oncology Symposium (abstract 2).

The VOICE study included 265 patients with stage 3 or 4 cancer, 130 of whom received communication training. As part of the training, patients received a booklet written by Dr Epstein’s team, titled “My Cancer Care: What Now? What Next? What I Prefer.”

The patients and their caregivers also met with social workers or nurses to discuss commonly asked questions and ways to express their fears, be assertive, and state their preferences.

Of the 38 oncologists studied, 19 received communication training. This included mock office sessions with actors (known as standardized patients), video training, and individualized feedback.

Later, the researchers audio-recorded real sessions between the oncologists and patients, then asked both the oncologists and the patients to complete questionnaires. The team coded the recorded interactions and matched the scores to the goals of the training.

Results

The study’s primary endpoint was a composite of 4 communication measures—engaging patients in consultations, responding to emotions, informing patients about prognosis and treatment choices, and balanced framing of information.

The researchers found that communication training resulted in a significant improvement in this endpoint (P=0.02).

“We have shown, in the first large study of its kind, that it is possible to change the conversation in advanced cancer,” Dr Epstein said. “This is a huge first step.”

However, when Dr Epstein and his colleagues looked at the individual components of the endpoint, only the measure of engaging patients differed significantly between the intervention and control groups.

Communication training had no significant effect on the patient-physician relationship, patients’ quality of life, or healthcare utilization at the end of life.

Likewise, communication training had no significant effect on patients’ understanding of their prognosis, which was assessed as the discordance between patients’ and physicians’ estimates of 2-year survival and of curability.

“We need to try harder to communicate well so that it’s harder to miscommunicate,” Dr Epstein said. “Simply having the conversation is not enough. The quality of the conversation will influence a mutual understanding between patients and their oncologists.”

The researchers said a limitation of this study may have been the timing of the training, which was provided only once and was not timed to key decision points in patients’ illness trajectories. The effects of the training may have waned over the following months, especially as the cancer progressed.

“We need to embed communication interventions into the fabric of everyday clinical care,” Dr Epstein said. “This does not take a lot of time, but, in our audio-recordings, there was precious little dialogue that reaffirmed the human experience and the needs of patients. The next step is to make good communication the rule, not the exception, so that cancer patients’ voices can be heard.”

Combo could provide cure for CML, team says

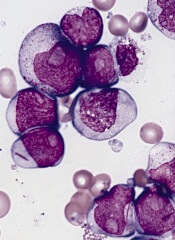

Preclinical research suggests that combining a BCL-2 inhibitor with a BCR-ABL tyrosine kinase inhibitor (TKI) can eradicate leukemia stem cells (LSCs) in chronic myeloid leukemia (CML).

In mouse models of CML, combining the TKI nilotinib with the BCL-2 inhibitor venetoclax enhanced antileukemic activity and decreased numbers of long-term LSCs.

The 2-drug combination exhibited similar activity in samples from patients with blast crisis CML.

“Our results demonstrate that . . . employing combined blockade of BCL-2 and BCR-ABL has the potential for curing CML and significantly improving outcomes for patients with blast crisis, and, as such, warrants clinical testing,” said Michael Andreeff, MD, of the University of Texas MD Anderson Cancer Center in Houston.

Dr Andreeff and his colleagues reported these results in Science Translational Medicine. The study was funded by the National Institutes of Health, the Paul and Mary Haas Chair in Genetics, and AbbVie Inc., the company developing venetoclax.

The researchers noted that, although BCR-ABL TKIs have proven effective against CML, they rarely eliminate CML stem cells.

“It is believed that TKIs do not eliminate residual stem cells because they are not dependent on BCR-ABL signaling,” said study author Bing Carter, PhD, also of MD Anderson Cancer Center. “Hence, cures of CML with TKIs are rare.”

Dr Carter has spent several years working to eliminate residual CML stem cells, which could spare CML patients the need for long-term TKI treatment. Based on the current study, she and her colleagues believe that combining a TKI with a BCL-2 inhibitor may be a solution.

The researchers found that targeting both BCL-2 and BCR-ABL with venetoclax and nilotinib, respectively, exerted “potent antileukemic activity” and prolonged survival in BCR-ABL transgenic mice.

After stopping treatment, the median survival was 34.5 days for control mice, 70 days for mice treated with nilotinib alone (P=0.2146), 115 days for mice treated with venetoclax alone (P=0.0079), and 168 days for mice treated with nilotinib and venetoclax in combination (P=0.0002).
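You can put the reported medians on a common scale by dividing each arm's median survival by the control arm's. The sketch below does that with the numbers given above. It is a descriptive ratio of medians only. It is not a hazard ratio, and it does not recompute the reported P values.

```python
# Median survival (days) after treatment stopped, as reported for
# BCR-ABL transgenic mice.
MEDIAN_SURVIVAL_DAYS: dict[str, float] = {
    "control": 34.5,
    "nilotinib": 70.0,
    "venetoclax": 115.0,
    "nilotinib + venetoclax": 168.0,
}


def fold_vs(survival: dict[str, float], reference: str) -> dict[str, float]:
    """Each arm's median survival as a multiple of a reference arm's median.

    This is a descriptive ratio of medians, not a hazard ratio, and says
    nothing about statistical significance."""
    base = survival[reference]
    return {arm: days / base for arm, days in survival.items() if arm != reference}


if __name__ == "__main__":
    for arm, fold in fold_vs(MEDIAN_SURVIVAL_DAYS, "control").items():
        print(f"{arm}: {fold:.2f}x control")
    combo_vs_ven = fold_vs(MEDIAN_SURVIVAL_DAYS, "venetoclax")["nilotinib + venetoclax"]
    print(f"combination vs venetoclax alone: {combo_vs_ven:.2f}x")
```

Nilotinib alone roughly doubled median survival relative to control (70 / 34.5 is about 2.03), yet its reported P value (0.2146) was not significant. This shows that a ratio of medians cannot establish an effect on its own.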

Subsequent experiments in mice showed that nilotinib alone did not significantly affect the frequency of long-term LSCs, although venetoclax alone did. Treatment with both drugs reduced the frequency of long-term LSCs even more than venetoclax alone.

Finally, the researchers tested venetoclax, nilotinib, and the combination in cells from 6 patients with blast crisis CML, all of whom had failed treatment with at least 1 TKI.

The team found that venetoclax and nilotinib had a synergistic apoptotic effect on bulk and stem/progenitor CML cells.
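The article does not say how synergy was quantified. One widely used approach is the Chou-Talalay combination index (CI), sketched below as an illustration rather than as the authors' method. A CI below 1 means the combination reaches a given effect with less of each drug than simple additivity would predict. The function names, the tolerance band, and the doses in the example are all hypothetical.

```python
def combination_index(d1: float, d2: float, dx1: float, dx2: float) -> float:
    """Chou-Talalay combination index for two drugs at one effect level.

    d1, d2   -- doses of drug 1 and drug 2 that, given together, reach the effect
    dx1, dx2 -- doses of each drug given alone that reach the same effect
    """
    return d1 / dx1 + d2 / dx2


def interpret(ci: float, tol: float = 0.1) -> str:
    """Label a CI value; the +/- tol band around 1 is an arbitrary choice."""
    if ci < 1 - tol:
        return "synergy"
    if ci > 1 + tol:
        return "antagonism"
    return "roughly additive"


if __name__ == "__main__":
    # Hypothetical doses (nM) giving 50% apoptosis; not data from the study.
    ci = combination_index(d1=25, d2=50, dx1=100, dx2=200)
    print(ci, interpret(ci))  # 0.5 synergy
```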

The researchers said these results suggest that combined inhibition of BCL-2 and BCR-ABL tyrosine kinase has the potential to significantly improve the depth of response and cure rates of chronic phase and blast crisis CML.

“This combination strategy may also apply to other malignancies that depend on kinase signaling for progression and maintenance,” Dr Andreeff added.

Blood sample collection, storage impact protein levels

Photo by Graham Colm

Factors related to blood sample collection and storage can have a substantial impact on the biomolecular composition of the sample, according to research published in EBioMedicine.

The study showed that freezer storage time and the month and season during which a blood sample is collected can affect protein concentrations.

In fact, researchers said these factors should be considered covariates of the same importance as the sample provider’s age or gender.

“This discovery will change the way the entire world works with biobank blood,” said study author Stefan Enroth, PhD, of Uppsala University in Sweden.

“All research on, and analysis of, biobank blood going forward should also take into account what we have discovered—namely, the time aspect. It is completely new.”

As part of their research on uterine cancer, Dr Enroth and his colleagues looked at plasma samples collected from 1988 to 2014. There were 380 samples from 106 women between the ages of 29 and 73.

The researchers looked at the duration of sample storage, the women’s chronological age at sample collection, and the season and month of the year the sample was collected, assessing the impact of these factors on the abundance levels of 108 proteins.

When studying the impact of storage time, the researchers used only samples from 50-year-old women in order to isolate the time effect. The team found that storage time affected 18 proteins and explained 4.8% to 34.9% of the variance observed.

The women’s chronological age at the time of sample collection, after adjustment for storage time, affected 70 proteins and explained 1.1% to 33.5% of the variance.
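Figures such as “explained 4.8% to 34.9% of the variance” refer to the share of variation in a protein's level that a factor accounts for. In a simple linear model, that share is the coefficient of determination (R²). “After adjustment for storage time” can then be read as a partial R²: regress storage time out of both age and the protein level, and compute R² on what remains. The sketch below implements that reading using only the Python standard library. It is not the study's analysis, which involved repeated samples from the same women and may have used different models. The example values are hypothetical.

```python
from statistics import fmean


def r_squared(x: list[float], y: list[float]) -> float:
    """Share of the variance in y explained by a least-squares line on x.

    Assumes neither x nor y is constant (otherwise this divides by zero)."""
    mx, my = fmean(x), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy * sxy / (sxx * syy)


def residuals(x: list[float], y: list[float]) -> list[float]:
    """What is left of y after removing its least-squares linear fit on x."""
    mx, my = fmean(x), fmean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return [b - (my + slope * (a - mx)) for a, b in zip(x, y)]


def partial_r_squared(x: list[float], covariate: list[float], y: list[float]) -> float:
    """Variance in y explained by x once both are adjusted for a covariate."""
    return r_squared(residuals(covariate, x), residuals(covariate, y))


if __name__ == "__main__":
    # Hypothetical values for one protein; not data from the study.
    storage_years = [2.0, 5.0, 9.0, 14.0, 20.0, 26.0]
    age = [35.0, 52.0, 41.0, 60.0, 47.0, 68.0]
    protein = [5.1, 5.6, 5.4, 6.3, 5.9, 6.8]
    print(f"storage time alone: {r_squared(storage_years, protein):.1%}")
    print(f"age, adjusted for storage: {partial_r_squared(age, storage_years, protein):.1%}")
```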

“We suspected that we’d find an influence from storage time, but we thought it would be much less,” said study author Ulf Gyllensten, PhD, of Uppsala University.

“It has now been demonstrated that storage time can be a factor at least as important as the age of the individual at sampling.”

The other major finding of the study is that protein levels vary depending on the season or month in which the samples were taken.

The researchers said the results of the month-by-month analysis were consistent with those of the seasonal analysis, so they hypothesized that the number of sunlight hours at the time of sampling could explain some of the variance they observed in plasma protein abundance levels.

The team found the number of sunlight hours affected 36 proteins and explained up to 4.5% of the variance observed after adjusting for storage time and age.

The researchers said these results suggest that information on sample handling history should be regarded as “equally prominent covariates” alongside age or gender and should therefore be included in epidemiological studies involving protein levels.

Change may improve efficacy of malaria vaccine

Photo by Caitlin Kleiboer

Results of a phase 2 trial suggest that changing the dosing schedule can improve the efficacy of the malaria vaccine candidate RTS,S/AS01 (Mosquirix).

Researchers tested RTS,S/AS01 in 46 malaria-naïve US adults, using the controlled human malaria infection (CHMI) model.

About 87% of subjects who received the modified dosing regimen were protected from malaria, compared to 63% of subjects who received the standard dosing schedule.

Jason Regules, MD, of the US Army Medical Research Institute of Infectious Diseases in Frederick, Maryland, and his colleagues reported these results in the Journal of Infectious Diseases.

The study was funded by GlaxoSmithKline, the US Military Infectious Disease Research Program, and the PATH Malaria Vaccine Initiative. RTS,S/AS01 is being developed by GlaxoSmithKline and the PATH Malaria Vaccine Initiative.

RTS,S/AS01 has been tested in trials of young children in Africa, and early results seemed promising. But long-term follow-up in a phase 2 study and a phase 3 study suggested the vaccine’s efficacy wanes over time.

Therefore, Dr Regules and his colleagues sought to determine whether a novel immunization schedule (delaying the third RTS,S/AS01 dose and reducing its dosage, as well as that of any subsequent booster) would significantly increase the vaccine’s ability to protect against infection.

First, the team immunized the subjects according to 1 of 2 regimens:

- A 0-, 1-, 7-month schedule with a fractional third dose (Fx017M)

- A 0-, 1-, 2-month schedule (012M, the current standard).

Following the third vaccination, subjects were exposed to malaria-causing parasites using CHMI, and the researchers evaluated the extent to which each regimen protected against infection.

During follow-up, the team assessed the efficacy of an additional fractional dose, or booster, in protecting against a second CHMI.

Twenty-six of the 30 subjects—86.7%—who received the Fx017M regimen and 10 of the 16—62.5%—who received the 012M regimen were protected from infection following the first CHMI.
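The headline percentages are simple proportions of the reported counts. The short sketch below reproduces the 86.7% and 62.5% protection figures and the gap between them; the function name is hypothetical, and the code performs no significance testing.

```python
def protection_rate(protected, total):
    """Percentage of challenged subjects who remained uninfected, to one decimal place."""
    return round(100 * protected / total, 1)


# Counts reported for the first CHMI
fx017m = protection_rate(26, 30)    # modified schedule: 0, 1, 7 months, fractional third dose
std_012m = protection_rate(10, 16)  # standard schedule: 0, 1, 2 months
difference_pp = round(100 * 26 / 30 - 100 * 10 / 16, 1)

# Rechallenge after the fourth (fractional) dose: 4 of 5 previously infected subjects protected
rechallenge = protection_rate(4, 5)

print(f"Fx017M: {fx017m}%  012M: {std_012m}%  difference: {difference_pp} percentage points")
print(f"Protected on rechallenge: {rechallenge}%")
```

The difference is computed from the unrounded proportions so that rounding happens only once.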

In addition to providing more protection from malaria infection, the Fx017M regimen delayed infection longer than the 012M regimen.

About 90% of the Fx017M group who received a fourth fractional booster dose and underwent the second CHMI were protected from infection.

Four out of 5 subjects from both vaccination groups who were infected during the first CHMI were protected against the second, after receiving the fourth (fractional) dose of RTS,S/AS01.

The subjects did not report any serious health events as a result of receiving the vaccinations, and no safety concerns were associated with reducing dosages.

“With these results in hand, we are planning additional studies in the United States and Africa that will seek to further refine the dosing and schedule for maximum impact and to see whether these early stage results in American adults will translate into similarly high efficacy in sub-Saharan Africa, a region that bears much of the malaria disease burden,” said study author Ashley J. Birkett, PhD, director of PATH’s Malaria Vaccine Initiative.

“The results of these planned studies won’t be available for several years, however. It therefore remains critical that the pilot implementation for the recommended pediatric regimen of RTS,S/AS01, being led by the World Health Organization, moves forward as soon as possible. We need to help protect as many children as we can, as soon as we can, while we continue to pursue eradication—the only truly sustainable solution to malaria.”

How AML suppresses hematopoiesis

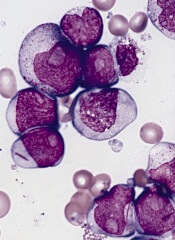

Exosomes shed by acute myeloid leukemia (AML) cells carry microRNAs that directly impair hematopoiesis, according to preclinical research published in Science Signaling.

Previous research suggested that AML exosomes can suppress residual hematopoietic stem and progenitor cell (HSPC) function indirectly through stromal reprogramming of niche retention factors.

The new study indicates that AML exosomes can block hematopoiesis by delivering microRNAs that directly suppress blood production when taken up by HSPCs.

Noah Hornick, of Oregon Health & Science University in Portland, and his colleagues conducted this study, isolating exosomes from cultures of human AML cells and from the plasma of mice with AML.

The researchers found these exosomes were enriched in 2 microRNAs—miR-150 and miR-155.

When cultured with HSPCs, the exosomes suppressed the expression of the transcription factor c-MYB, which is involved in HSPC proliferation and differentiation.

Blocking the function of miR-155 prevented AML cells or their exosomes from reducing c-MYB abundance and inhibiting the proliferation of cultured HSPCs.

Using a method called RISC-Trap, the researchers identified other targets of microRNAs in AML exosomes, from which they predicted protein networks that could be disrupted in cells taking up the exosomes.

The team said this study suggests that interfering with exosome-delivered microRNAs in the bone marrow or restoring the abundance of their targets may enhance AML patients’ ability to produce healthy blood cells.

CBT may be best option for pts with MRD, doc says

Photo courtesy of NHS

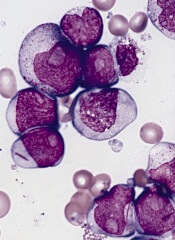

A cord blood transplant (CBT) may be the best option for patients with acute leukemia or myelodysplastic syndrome who have minimal residual disease (MRD) and no related donor, according to the senior author of a study published in NEJM.

This retrospective study showed that patients with MRD at the time of transplant were less likely to relapse if they received CBT rather than a graft from an unrelated adult donor, whether HLA-matched or mismatched.

In addition, the risk of death was significantly higher for patients with a mismatched donor than for CBT recipients, although there was no significant difference between those with a matched donor and CBT recipients.

Among patients without MRD, there were no significant differences between the transplant types for the risk of relapse or death.

“This paper shows that if you’ve got high-risk disease and are at high risk for relapse post-transplant, transplant with a cord blood donor may be the best option,” said Colleen Delaney, MD, of Fred Hutchinson Cancer Research Center in Seattle, Washington.

Dr Delaney and her colleagues analyzed data on 582 patients—300 with acute myeloid leukemia, 185 with acute lymphoblastic leukemia, and 97 with myelodysplastic syndromes.

Most patients received a transplant from an HLA-matched unrelated donor (n=344), 140 received a CBT from an unrelated donor, and 98 received a transplant from an HLA-mismatched unrelated donor.

The researchers calculated the relative risks of death and relapse for each transplant group, and they found that a patient’s MRD status prior to transplant played a role.

Presence of MRD

Among patients with MRD, the risk of death was significantly higher for recipients of mismatched grafts than for CBT recipients, with a hazard ratio (HR) of 2.92 (P=0.001).

However, the risk of death was not significantly different for recipients of matched grafts compared to CBT recipients. The HR was 1.69 (P=0.08).

The risk of relapse was about 3 times higher for recipients of mismatched grafts (HR=3.01, P=0.02) or matched grafts (HR=2.92, P=0.007) than for CBT recipients.

No MRD

Among patients without MRD, the risk of death did not differ significantly between CBT recipients and recipients of mismatched grafts (HR=1.36, P=0.30) or matched grafts (HR=0.78, P=0.33).

Nor did the risk of relapse differ significantly between CBT recipients and recipients of mismatched grafts (HR=1.28, P=0.60) or matched grafts (HR=1.30, P=0.46).
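A hazard ratio and its two-sided P value can be turned into an approximate confidence interval, which shows why, for example, an HR of 1.69 with P=0.08 counts as not significant: the interval still includes 1. The sketch below assumes each P value came from a Wald z test on log(HR), which the article does not state. Because the published P values are rounded, the intervals it prints are rough back-calculations, not the intervals reported in NEJM. The function name is hypothetical; `statistics.NormalDist` is part of the Python standard library (3.8+).

```python
import math
from statistics import NormalDist


def approx_ci_from_hr(hr, p_value, level=0.95):
    """Approximate confidence interval for a hazard ratio from its two-sided P value.

    Assumes a Wald z test on log(HR); rounding of published P values makes
    the result only approximate.
    """
    if not 0 < p_value < 1:
        raise ValueError("p_value must be strictly between 0 and 1")
    std_normal = NormalDist()
    log_hr = math.log(hr)
    z_observed = std_normal.inv_cdf(1 - p_value / 2)   # |z| implied by the P value
    se_log_hr = abs(log_hr) / z_observed                # standard error of log(HR)
    z_crit = std_normal.inv_cdf(1 - (1 - level) / 2)    # about 1.96 for a 95% interval
    return math.exp(log_hr - z_crit * se_log_hr), math.exp(log_hr + z_crit * se_log_hr)


# Hazard ratios relative to CBT recipients, as reported in the article
REPORTED = [
    ("MRD, death, mismatched", 2.92, 0.001),
    ("MRD, death, matched", 1.69, 0.08),
    ("MRD, relapse, mismatched", 3.01, 0.02),
    ("MRD, relapse, matched", 2.92, 0.007),
    ("no MRD, death, mismatched", 1.36, 0.30),
    ("no MRD, death, matched", 0.78, 0.33),
    ("no MRD, relapse, mismatched", 1.28, 0.60),
    ("no MRD, relapse, matched", 1.30, 0.46),
]

for label, hr, p in REPORTED:
    low, high = approx_ci_from_hr(hr, p)
    print(f"{label}: HR={hr}, approx. 95% CI {low:.2f} to {high:.2f}")
```

Under the Wald assumption, any P value below 0.05 yields an interval that excludes 1, and any P value above 0.05 yields one that contains 1, matching the article's significant and nonsignificant comparisons.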

“This brings home the point that cord blood shouldn’t be called an alternative donor,” Dr Delaney said. “The outcomes are the same as a conventional donor.”

Risk factors for early death in older pts with DLBCL

Photo courtesy of CDC

A retrospective study has uncovered potential risk factors for early death in older patients with diffuse large B-cell lymphoma (DLBCL).

Researchers examined Medicare claims and cancer registry data for roughly 5500 DLBCL patients older than 65 who received contemporary immunochemotherapy.

This revealed 7 factors that were significantly associated with the risk of death within 30 days of treatment initiation.

“The first month of treatment, when patients are compromised both by active lymphoma and toxicities of chemotherapy, is a period of particular concern, as nearly 1 in 4 patients were hospitalized during that time,” said study author Adam J. Olszewski, MD, of Rhode Island Hospital in Providence.

“While comprehensive geriatric assessment remains the gold standard for risk assessment, our study suggests that readily available data from electronic medical records can help identify the high-risk factors in practice.”

Dr Olszewski and his colleagues described their study in JNCCN.

The researchers looked at Medicare claims linked to Surveillance, Epidemiology and End Results registry data for 5530 DLBCL patients who had a median age of 76.

From 2003 to 2012, the patients were treated with rituximab, cyclophosphamide, and vincristine in combination with doxorubicin, mitoxantrone, or etoposide.

The cumulative incidence of death at 30 days was 2.2%. The most common causes of death were lymphoma (72%), heart disease (9%), septicemia (3%), and cerebrovascular events (3%).

The researchers created a prediction model based on 7 factors that were significantly associated with early death in multivariate analysis. These are:

- B symptoms

- Chronic kidney disease

- Poor performance status

- Prior use of walking aids or wheelchairs

- Prior hospitalization within the past 12 months

- Upper endoscopy within the past 12 months

- Age 75 or older.

Patients with 0 or 1 of these risk factors were considered low-risk, with a 0.6% chance of early death. Fifty-six percent of the patients studied fit this definition.

Patients with 2 or 3 of the risk factors were intermediate-risk, with a 3.2% chance of early death. Thirty-eight percent of the patients studied fell into this category.

Only 6% of the patients studied were considered high-risk. These patients had 4 or more risk factors and an 8.3% chance of early death.
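The grouping rule described above counts how many of the 7 factors a patient has and maps the count to a tier. The sketch below illustrates only that counting rule. The field names are hypothetical, it is not a validated clinical tool, and the article does not say whether the published model weights any factor differently.

```python
# Hypothetical field names for the six yes/no factors; age is handled separately.
BINARY_FACTORS = (
    "b_symptoms",
    "chronic_kidney_disease",
    "poor_performance_status",
    "walking_aid_or_wheelchair",
    "hospitalized_past_12_months",
    "upper_endoscopy_past_12_months",
)

# 30-day early-death rates reported for each group in the study (percent)
REPORTED_EARLY_DEATH_PCT = {"low": 0.6, "intermediate": 3.2, "high": 8.3}


def count_risk_factors(patient):
    """Count how many of the 7 listed risk factors a patient record has."""
    count = sum(1 for name in BINARY_FACTORS if patient.get(name, False))
    if patient["age"] >= 75:
        count += 1
    return count


def risk_group(patient):
    """Map the factor count to the study's tiers: 0-1 low, 2-3 intermediate, 4+ high."""
    n = count_risk_factors(patient)
    if n <= 1:
        return "low"
    if n <= 3:
        return "intermediate"
    return "high"


# Example: a 78-year-old with chronic kidney disease and a recent hospitalization
example = {"age": 78, "chronic_kidney_disease": True, "hospitalized_past_12_months": True}
group = risk_group(example)
print(group, REPORTED_EARLY_DEATH_PCT[group])
```

Missing yes/no fields are treated as absent, so a sparse record can only understate a patient's tier.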

The researchers also found that prophylactic granulocyte colony-stimulating factor (G-CSF) was associated with a lower probability of early death in the high-risk group.

They noted that only 66% of patients in this study received prophylactic G-CSF, which suggests an opportunity to prevent early deaths.

“It is equally important to realize that a majority of older patients without risk factors can safely receive curative immunochemotherapy,” Dr Olszewski said. “Enhanced supportive care and monitoring should be provided for high-risk groups.”

Gene therapy effective against SCD in mice

Blood cells from a mouse. Image courtesy of University of Michigan.

Preclinical research suggests a novel gene therapy may be effective against sickle cell disease (SCD).

The therapy is designed to selectively inhibit the fetal hemoglobin repressor BCL11A in erythroid cells.

Researchers found this was sufficient to increase fetal hemoglobin production and reverse the effects of SCD in vivo, without presenting the same problems as ubiquitous BCL11A knockdown.

The team reported these findings in The Journal of Clinical Investigation.

Previous research showed that suppressing BCL11A can lead cells to produce healthy fetal hemoglobin in place of the defective beta hemoglobin that causes sickling.

“BCL11A represses fetal hemoglobin, which does not lead to sickling, and also activates beta hemoglobin, which is affected by the sickle cell mutation,” explained study author David A. Williams, MD, of Boston Children’s Hospital in Massachusetts.

“So when you knock BCL11A down, you simultaneously increase fetal hemoglobin and repress sickling hemoglobin, which is why we think this is the best approach to gene therapy in sickle cell disease.”

However, Dr Williams and his colleagues found that ubiquitous knockdown of BCL11A impaired the engraftment of human and murine hematopoietic stem cells (HSCs).

To circumvent this problem, the researchers set out to silence BCL11A only in erythroid cells.

Selectively knocking down BCL11A involved several layers of engineering. As the core of their gene therapy vector, the researchers used a short hairpin RNA that silences BCL11A. To ensure cells would process it, they embedded the short hairpin RNA in a microRNA that cells generally recognize and process.

To make this assembly work in the right place at the right time, the team linked it to a beta hemoglobin promoter, together with regulatory elements active only in erythroid cells. Finally, they packaged the whole construct into a lentivirus.
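The rationale for restricting the knockdown to erythroid cells can be expressed as a toy logic model. This Python sketch is purely illustrative and is not the researchers' model: it reduces each vector to the set of cell types in which its promoter is active, and the names `Vector`, `bcl11a_silenced`, `summarize`, and the cell-type labels are hypothetical. It ignores expression levels, transduction efficiency, and hemoglobin biology entirely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vector:
    """Hypothetical toy: a vector is reduced to the cell types where its
    promoter drives the BCL11A-targeting short hairpin RNA."""
    name: str
    active_in: frozenset[str]


# Illustrative cell-type labels; a ubiquitous promoter is active in all of them.
CELL_TYPES = frozenset({"HSC", "erythroid", "myeloid", "lymphoid"})
UBIQUITOUS = Vector("ubiquitous promoter", CELL_TYPES)
ERYTHROID_ONLY = Vector("beta hemoglobin promoter + erythroid elements", frozenset({"erythroid"}))


def bcl11a_silenced(vector: Vector, cell_type: str) -> bool:
    """True if, in this toy model, the vector silences BCL11A in the given cell type."""
    return cell_type in vector.active_in


def summarize(vector: Vector) -> dict[str, bool]:
    return {
        # Silencing BCL11A in erythroid cells is what raises fetal hemoglobin.
        "fetal_hemoglobin_induced": bcl11a_silenced(vector, "erythroid"),
        # Silencing it in HSCs is what the researchers linked to impaired engraftment.
        "engraftment_at_risk": bcl11a_silenced(vector, "HSC"),
    }


for v in (UBIQUITOUS, ERYTHROID_ONLY):
    print(v.name, summarize(v))
```

Under these assumptions, both designs induce fetal hemoglobin in erythroid cells, but only the ubiquitous design also silences BCL11A in HSCs, which mirrors the engraftment problem the researchers reported with ubiquitous knockdown.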

HSCs from mice and SCD patients were then exposed to the engineered virus and took up the new genetic material. The resulting genetically engineered erythroid cells began producing fetal hemoglobin rather than the mutated beta hemoglobin.

When HSCs treated with this gene therapy were transplanted into mice with SCD, the cells engrafted successfully and reduced signs of SCD, namely hemolytic anemia and increased numbers of reticulocytes.

Dr Williams believes this approach could substantially increase the ratio of non-sickling to sickling hemoglobin in SCD. He also said the approach could be beneficial in beta-thalassemia.

Optimizing CAR T-cell therapy in NHL

Photo from Fred Hutchinson Cancer Research Center

Results from a phase 1 study have provided insights that may help researchers optimize treatment with JCAR014, a chimeric antigen receptor (CAR) T-cell therapy, in patients with advanced non-Hodgkin lymphoma (NHL).

Researchers said they identified a lymphodepleting regimen that improved the likelihood of complete response (CR) to JCAR014.

Although the regimen also increased the risk of severe cytokine release syndrome (CRS) and neurotoxicity, the researchers discovered biomarkers that might allow them to identify patients who have a high risk of these events and could potentially benefit from early interventions.

The researchers reported these findings in Science Translational Medicine. The trial (NCT01865617) was funded, in part, by Juno Therapeutics, the company developing JCAR014.

Previous results from this trial, in patients with acute lymphoblastic leukemia, were published in The Journal of Clinical Investigation.

About JCAR014

JCAR014 is a CD19-directed CAR T-cell therapy in which CD4+ and CD8+ cells are administered in equal proportions.