It’s not time to abandon routine screening mammography in average-risk women in their 40s

In the 1970s and early 1980s, population-based screening mammography was studied in numerous randomized controlled trials (RCTs), with the primary outcome of reduced breast cancer mortality. Although the technology and sensitivity of mammography in the 1980s were somewhat rudimentary compared with current screening, a meta-analysis of these RCTs demonstrated a clear mortality benefit for screening mammography.1 As a result, widespread population-based mammography was introduced in the mid-1980s in the United States and has become a standard for breast cancer screening.

Since that time, few RCTs of screening mammography versus observation have been conducted because of the ethical challenges of entering women into such studies as well as the difficulty and expense of long-term follow-up to measure the effect of screening on breast cancer mortality. In the absence of ongoing RCTs, retrospective, observational, and computer simulation studies of the efficacy and harms of screening mammography have been conducted using proxy measures of mortality (such as stage at diagnosis), and some have questioned the overall benefit of screening mammography.2,3

To further complicate this controversy, some national guidelines have advised against routinely recommending screening mammography for women aged 40 to 49 based on concerns that the harms (callbacks, benign breast biopsies, overdiagnosis) exceed the potential benefits (earlier diagnosis, possible decrease in needed treatments, reduced breast cancer mortality).4 The result is a confusing morass of national recommendations and uncertainty about whether to routinely offer screening mammography to women in their 40s at average risk for breast cancer.4-6

To address this question, Duffy and colleagues recently reported the final results of a large RCT of women in their 40s that evaluated the long-term effect of mammography on breast cancer mortality.7 Here, I review the study in depth and offer some guidance to clinicians and women struggling with screening decisions.

Breast cancer mortality significantly lower in the screening group

The RCT, known as the UK Age trial, was conducted in England, Wales, and Scotland and enrolled 160,921 women from 1990 through 1997.7 Women were randomly assigned in a 2:1 ratio to observation or annual screening mammogram beginning at age 39–41 until age 48. (In the United Kingdom, all women are screened starting at age 50.) Study enrollees were followed for a median of 22.8 years, and the primary outcome was breast cancer mortality.

The study results showed a 25% relative reduction in breast cancer mortality at 10 years of follow-up in the mammography group compared with the unscreened women (83 breast cancer deaths in the mammography group vs 219 in the observation group [relative risk (RR), 0.75; 95% confidence interval (CI), 0.58–0.97; P = .029]). Based on the prevalence of breast cancer in women in their 40s, this 25% relative risk reduction translates into approximately 1 fewer death per 1,000 women who undergo routine screening in their 40s.
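As a rough arithmetic check on these figures, the short Python sketch below recomputes a crude risk ratio from the two death counts. It assumes that the 160,921 enrollees were split exactly 2:1 between observation and screening; the actual arm sizes are not given here, so the arm sizes in the code are hypothetical, and the result is an unadjusted approximation rather than the trial's published estimate.

```python
# Crude check of the reported relative risk, using only figures quoted above.
# ASSUMPTION: the 160,921 enrollees split exactly 2:1 (observation:screening);
# the trial's actual arm sizes are not stated in this article.

TOTAL_ENROLLED = 160_921
DEATHS_SCREENING = 83      # breast cancer deaths at 10 years, mammography group
DEATHS_OBSERVATION = 219   # breast cancer deaths at 10 years, observation group

# Hypothetical arm sizes implied by an exact 2:1 allocation.
n_screening = TOTAL_ENROLLED / 3
n_observation = TOTAL_ENROLLED * 2 / 3

# Cumulative risk of breast cancer death in each arm.
risk_screening = DEATHS_SCREENING / n_screening
risk_observation = DEATHS_OBSERVATION / n_observation

# Crude (unadjusted) risk ratio and the corresponding relative reduction.
crude_rr = risk_screening / risk_observation
relative_reduction = 1 - crude_rr

# Crude absolute difference, expressed per 1,000 women randomized.
difference_per_1000 = (risk_observation - risk_screening) * 1000

print(f"Crude RR: {crude_rr:.2f}")
print(f"Relative reduction: {relative_reduction:.0%}")
print(f"Crude difference per 1,000 randomized: {difference_per_1000:.2f}")
```

Under this assumption the crude ratio comes out at about 0.76, close to the reported RR of 0.75 and well inside the reported confidence interval. The crude absolute difference is expressed per woman randomized rather than per woman who actually undergoes screening, so it is not directly comparable with the per-1,000 estimate quoted above.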

There was no additional significant mortality reduction beyond 10 years of follow-up. As noted, however, mammography is offered routinely to all women in the United Kingdom starting at age 50, so women in both groups became eligible for screening from that age. The authors concluded that “reducing the lower age limit for screening from 50 to 40 years [of age] could potentially reduce breast cancer mortality.”

Was overdiagnosis a concern? Another finding in this trial was related to overdiagnosis of breast cancer in the screened group. Overdiagnosis refers to mammographic-only diagnosis (that is, no clinical findings) of nonaggressive breast cancer, which would remain indolent and not harm the patient. The study results demonstrated essentially no overdiagnosis in women screened at age 40 compared with the unscreened group.

Large trial, long follow-up are key strengths

The UK Age trial’s primary strength is its study design: a large population-based RCT that included diverse participants with the critical study outcome for cancer screening (mortality). The study’s long-term follow-up is another key strength, since breast cancer mortality typically occurs 7 to 10 years after diagnosis. In addition, results were available for 99.9% of the women enrolled in the trial (that is, only 0.1% of women were lost to follow-up). Interestingly, the demonstrated mortality reduction with screening mammography for women in their 40s validates the mortality benefit demonstrated in other large RCTs of women in their 40s.1

Another strong point is that the study addresses the issue of whether screening women in their 40s results in overdiagnosis compared with women who start screening in their 50s. Further, this study supports the finding of a prior observational study that mammographically detected nonprogressive cancers do not disappear, so nonaggressive cancers that present on mammography in women in their 40s still would be detected when women start screening in their 50s.8

Study limitations should be noted

The study has several limitations. For example, significant improvements have been made in breast cancer treatments that may diminish the positive impact of screening mammography. The impact of changed breast cancer management over the past 20 years could not be addressed with this study’s design since women would have been treated in the 1990s. In addition, substantial improvements have occurred in breast cancer screening standards (2 views vs the single view used in the study) and technology since the 1990s. Current mammography includes nearly uniform use of either digital mammography (DM) or digital breast tomosynthesis (DBT), both of which improve breast cancer detection for women in their 40s compared with the older film-screen technology. In addition, DBT reduces false-positive results by approximately 40%, resulting in fewer callbacks and biopsies. While improved cancer detection and reduced false-positive results are seen with DM and DBT, whether these technology improvements further reduce breast cancer mortality has not yet been sufficiently studied.

Perhaps the most important limitation in this study is that the women did not undergo routine risk assessment before trial entry to ensure that they all were at “average risk.” As a result, both high- and average-risk women would have been included in this population-based trial. Without risk stratification, it remains uncertain whether the reduction in breast cancer mortality is concentrated within a high-risk subgroup (such as breast cancer gene mutation carriers).

Finally, the cost-effectiveness of routine screening mammography for women in their 40s was not evaluated in this study.

The UK Age trial in perspective

The good news is that there is clear evidence that breast cancer mortality rates (deaths per 100,000) have decreased by about 40% over the past 50 years, likely due to improvements in breast cancer treatment and routine screening mammography.9 Breast cancer mortality reduction is particularly important because breast cancer remains the most common cancer and is the second leading cause of cancer death in women in the United States. In the past decade, considerable debate has arisen over whether this reduction in breast cancer mortality is due to improved treatments, routine screening mammography, or both. Authors of a retrospective study in Australia, recently reviewed in OBG Management, suggested that the majority of the improvement is due to improvements in treatment.3,10 However, as the authors pointed out, because of the study’s retrospective design, causality can only be inferred. The current UK Age trial does add to the numerous prospective trials demonstrating mortality benefit for mammography in women in their 40s.11

What remains a challenge for clinicians, and for women struggling with the mammography question, is the absence of risk assessment in these long-term RCTs as well as in the large retrospective database studies. Without risk stratification, these studies treated the entire study population as “average risk.” Because breast cancer risk assessment is performed only sporadically in clinical practice and there are no published RCTs of screening mammography in risk-assessed “average risk” women in their 40s, it remains uncertain whether the women benefiting from screening in their 40s are in a high-risk group or whether women of average risk in this age group also are benefiting from routine screening mammography.

What’s next: Incorporate routine risk assessment into clinical practice

It is not time to abandon screening mammography for all women in their 40s. Rather, routine risk assessment should be performed using one of many available validated or widely tested tools, a recommendation supported by the American College of Obstetricians and Gynecologists, the National Comprehensive Cancer Network, and the US Preventive Services Task Force.5,6,12

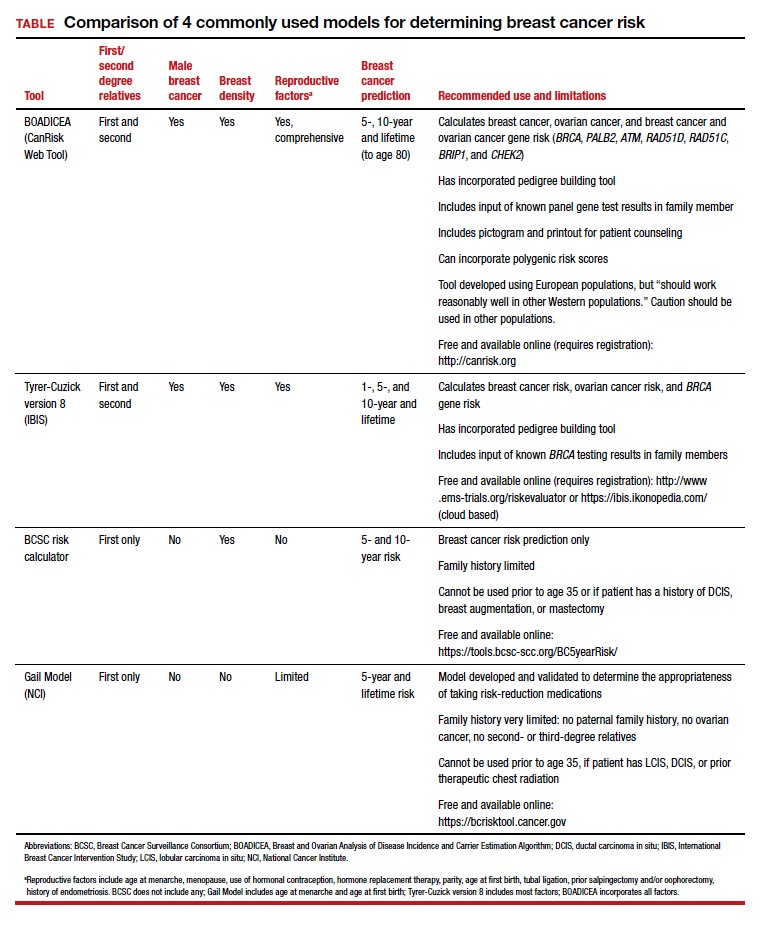

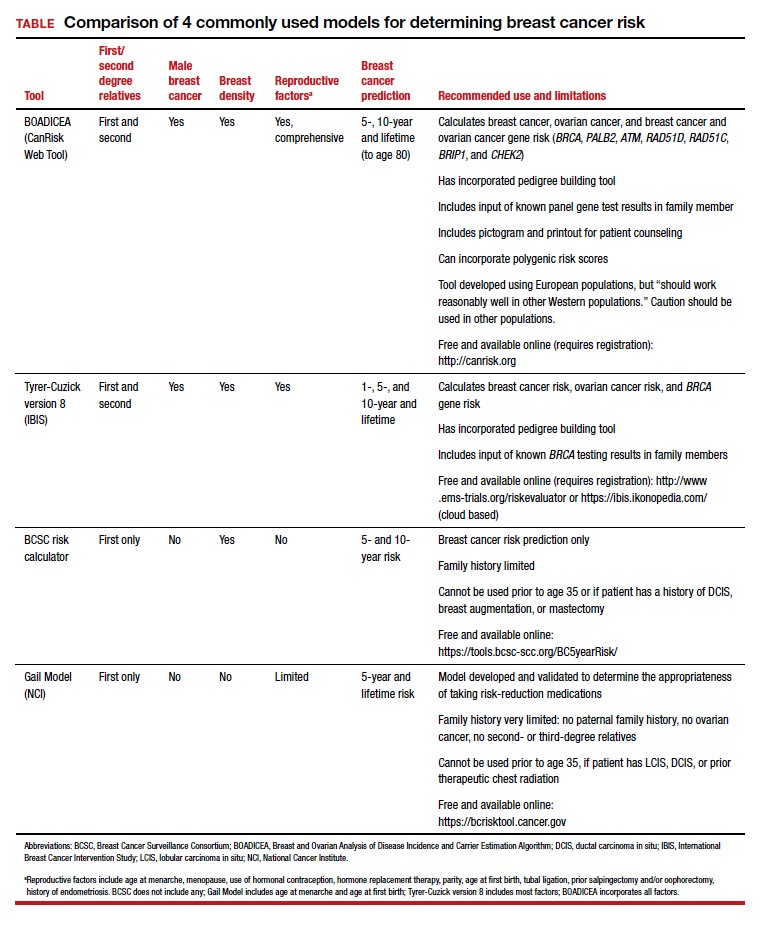

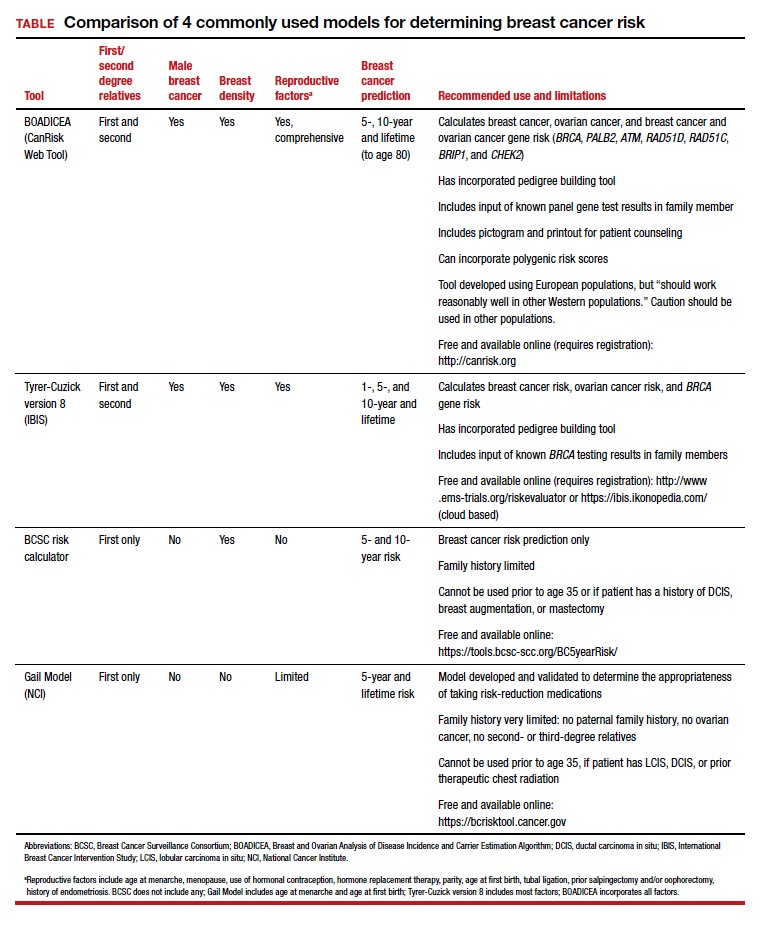

Ideally, these tools can be incorporated into an electronic health record and prepopulated using already available patient data (such as age, reproductive risk factors, current medications, breast density if available, and family history). Prepopulating available data into breast cancer risk calculators would allow clinicians to spend time on counseling women regarding breast cancer risk and appropriate screening methods. The TABLE provides a summary of useful breast cancer risk calculators and includes comments about their utility and significant limitations and benefits. In addition to breast cancer risk, the more comprehensive risk calculators (Tyrer-Cuzick and BOADICEA) allow calculation of ovarian cancer risk and gene mutation risk.

Routinely performing breast cancer risk assessment can guide discussions of screening mammography and can provide data for conducting a more individualized discussion on cancer genetic counseling and testing, risk reduction methods in high-risk women, and possible use of intensive breast cancer screening tools in identified high-risk women.

Ultimately, debating the question of whether all women should have routine breast cancer screening in their 40s should be passé. Ideally, all women should undergo breast cancer risk assessment in their 20s. Risk assessment results can then be used to guide the discussion of multiple potential interventions for women in their 40s (or earlier if appropriate), including routine screening mammography, cancer genetic counseling and testing in appropriate individuals, and intervention for women who are identified at high risk.

Absent breast cancer risk assessment, screening mammography still should be offered to women in their 40s, and the decision to proceed should be based on a discussion of risks, benefits, and the value the patient places on these factors.

1. Nelson HD, Fu R, Cantor A, et al. Effectiveness of breast cancer screening: systematic review and meta-analysis to update the 2009 US Preventive Services Task Force recommendation. Ann Intern Med. 2016;164:244-255.

2. Bleyer A, Welch HG. Effect of three decades of screening mammography on breast-cancer incidence. N Engl J Med. 2012;367:1998-2005.

3. Burton R, Stevenson C. Assessment of breast cancer mortality trends associated with mammographic screening and adjuvant therapy from 1986 to 2013 in the state of Victoria, Australia. JAMA Netw Open. 2020;3:e208249.

4. Nelson HD, Cantor A, Humphrey L, et al. A systematic review to update the 2009 US Preventive Services Task Force recommendation. Evidence Synthesis No. 124. AHRQ Publication No. 14-05201-EF-1. Rockville, MD: Agency for Healthcare Research and Quality; 2016.

5. Bevers TB, Helvie M, Bonaccio E, et al. Breast cancer screening and diagnosis, version 3.2018, NCCN clinical practice guidelines in oncology. J Natl Compr Canc Netw. 2018;16:1362-1389.

6. ACOG Committee on Practice Bulletins–Gynecology. Breast cancer risk assessment and screening in average-risk women. Obstet Gynecol. 2017;130:e1-e16.

7. Duffy SW, Vulkan D, Cuckle H, et al. Effect of mammographic screening from age 40 years on breast cancer mortality (UK Age trial): final results of a randomised, controlled trial. Lancet Oncol. 2020;21:1165-1172.

8. Arleo EK, Monticciolo DL, Monsees B, et al. Persistent untreated screening-detected breast cancer: an argument against delaying screening or increasing the interval between screenings. J Am Coll Radiol. 2017;14:863-867.

9. DeSantis CE, Ma J, Gaudet MM, et al. Breast cancer statistics, 2019. CA Cancer J Clin. 2019;69:438-451.

10. Kaunitz AM. How effective is screening mammography for preventing breast cancer mortality? OBG Manag. 2020;32(8):17,49.

11. Oeffinger KC, Fontham ET, Etzioni R, et al; American Cancer Society. Breast cancer screening for women at average risk: 2015 guideline update from the American Cancer Society. JAMA. 2015;314:1599-1614.

12. US Preventive Services Task Force; Owens DK, Davidson KW, Krist AH, et al. Risk assessment, genetic counseling, and genetic testing for BRCA-related cancer: US Preventive Services Task Force recommendation statement. JAMA. 2019;322:652-665.

In the 1970s and early 1980s, population-based screening mammography was studied in numerous randomized control trials (RCTs), with the primary outcome of reduced breast cancer mortality. Although technology and the sensitivity of mammography in the 1980s was somewhat rudimentary compared with current screening, a meta-analysis of these RCTs demonstrated a clear mortality benefit for screening mammography.1 As a result, widespread population-based mammography was introduced in the mid-1980s in the United States and has become a standard for breast cancer screening.

Since that time, few RCTs of screening mammography versus observation have been conducted because of the ethical challenges of entering women into such studies as well as the difficulty and expense of long-term follow-up to measure the effect of screening on breast cancer mortality. Without ongoing RCTs of mammography, retrospective, observational, and computer simulation trials of the efficacy and harms of screening mammography have been conducted using proxy measures of mortality (such as stage at diagnosis), and some have questioned the overall benefit of screening mammography.2,3

To further complicate this controversy, some national guidelines have recommended against routinely recommending screening mammography for women aged 40 to 49 based on concerns that the harms (callbacks, benign breast biopsies, overdiagnosis) exceed the potential benefits (earlier diagnosis, possible decrease in needed treatments, reduced breast cancer mortality).4 This has resulted in a confusing morass of national recommendations with uncertainty regarding the question of whether to routinely offer screening mammography for women in their 40s at average risk for breast cancer.4-6

Recently, to address this question Duffy and colleagues conducted a large RCT of women in their 40s to evaluate the long-term effect of mammography on breast cancer mortality.7 Here, I review the study in depth and offer some guidance to clinicians and women struggling with screening decisions.

Breast cancer mortality significantly lower in the screening group

The RCT, known as the UK Age trial, was conducted in England, Wales, and Scotland and enrolled 160,921 women from 1990 through 1997.7 Women were randomly assigned in a 2:1 ratio to observation or annual screening mammogram beginning at age 39–41 until age 48. (In the United Kingdom, all women are screened starting at age 50.) Study enrollees were followed for a median of 22.8 years, and the primary outcome was breast cancer mortality.

The study results showed a 25% relative risk (RR) reduction in breast cancer mortality at 10 years of follow-up in the mammography group compared with the unscreened women (83 breast cancer deaths in the mammography group vs 219 in the observation group [RR, 0.75; 95% confidence interval (CI), 0.58–0.97; P = .029]). Based on the prevalence of breast cancer in women in their 40s, this 25% relative risk reduction translates into approximately 1 less death per 1,000 women who undergo routine screening in their 40s.

While there was no additional significant mortality reduction beyond 10 years of follow-up, as noted mammography is offered routinely starting at age 50 to all women in the United Kingdom. The authors concluded that “reducing the lower age limit for screening from 50 to 40 years [of age] could potentially reduce breast cancer mortality.”

Was overdiagnosis a concern? Another finding in this trial was related to overdiagnosis of breast cancer in the screened group. Overdiagnosis refers to mammographic-only diagnosis (that is, no clinical findings) of nonaggressive breast cancer, which would remain indolent and not harm the patient. The study results demonstrated essentially no overdiagnosis in women screened at age 40 compared with the unscreened group.

Continue to: Large trial, long follow-up are key strengths...

Large trial, long follow-up are key strengths

The UK Age trial’s primary strength is its study design: a large population-based RCT that included diverse participants with the critical study outcome for cancer screening (mortality). The study’s long-term follow-up is another key strength, since breast cancer mortality typically occurs 7 to 10 years after diagnosis. In addition, results were available for 99.9% of the women enrolled in the trial (that is, only 0.1% of women were lost to follow-up). Interestingly, the demonstrated mortality reduction with screening mammography for women in their 40s validates the mortality benefit demonstrated in other large RCTs of women in their 40s.1

Another strong point is that the study addresses the issue of whether screening women in their 40s results in overdiagnosis compared with women who start screening in their 50s. Further, this study validates a prior observational study that mammographic findings of nonprogressive cancers do not disappear, so nonaggressive cancers that present on mammography in women in their 40s still would be detected when women start screening in their 50s.8

Study limitations should be noted

The study has several limitations. For example, significant improvements have been made in breast cancer treatments that may mitigate against the positive impact of screening mammography. The impact of changed breast cancer management over the past 20 years could not be addressed with this study’s design since women would have been treated in the 1990s. In addition, substantial improvements have occurred in breast cancer screening standards (2 views vs the single view used in the study) and technology since the 1990s. Current mammography includes nearly uniform use of either digital mammography (DM) or digital breast tomosynthesis (DBT), both of which improve breast cancer detection for women in their 40s compared with the older film-screen technology. In addition, DBT reduces false-positive results by approximately 40%, resulting in fewer callbacks and biopsies. While improved cancer detection and reduced false-positive results are seen with DM and DBT, whether these technology improvements result in improved breast cancer mortality has not yet been sufficiently studied.

Perhaps the most important limitation in this study is that the women did not undergo routine risk assessment before trial entry to assure that they all were at “average risk.” As a result, both high- and average-risk women would have been included in this population-based trial. Without risk stratification, it remains uncertain whether the reduction in breast cancer mortality disproportionately exists within a high-risk subgroup (such as breast cancer gene mutation carriers).

Finally, the cost efficacy of routine screening mammography for women in their 40s was not evaluated in this study.

The UK Age trial in perspective

The good news is that there is the clear evidence that breast cancer mortality rates (deaths per 100,000) have decreased by about 40% over the past 50 years, likely due to improvements in breast cancer treatment and routine screening mammography.9 Breast cancer mortality reduction is particularly important because breast cancer remains the most common cancer and is the second leading cause of cancer death in women in the United States. In the past decade, considerable debate has arisen arguing whether this reduction in breast cancer mortality is due to improved treatments, routine screening mammography, or both. Authors of a retrospective trial in Australia, recently reviewed in OBG Management, suggested that the majority of improvement is due to improvements in treatment.3,10 However, as the authors pointed out, due to the trial’s retrospective design, causality only can be inferred. The current UK Age trial does add to the numerous prospective trials demonstrating mortality benefit for mammography in women in their 40s.11

What remains a challenge for clinicians, and for women struggling with the mammography question, is the absence of risk assessment in these long-term RCT trials as well as in the large retrospective database studies. Without risk stratification, these studies treated all the study population as “average risk.” Because breast cancer risk assessment is sporadically performed in clinical practice and there are no published RCTs of screening mammography in risk-assessed “average risk” women in their 40s, it remains uncertain whether the women benefiting from screening in their 40s are in a high-risk group or whether women of average risk in this age group also are benefiting from routine screening mammography.

Continue to: What’s next: Incorporate routine risk assessment into clinical practice...

What’s next: Incorporate routine risk assessment into clinical practice

It is not time to abandon screening mammography for all women in their 40s. Rather, routine risk assessment should be performed using one of many available validated or widely tested tools, a recommendation supported by the American College of Obstetricians and Gynecologists, the National Comprehensive Cancer Network, and the US Preventive Services Task Force.5,6,12

Ideally, these tools can be incorporated into an electronic health record and prepopulated using already available patient data (such as age, reproductive risk factors, current medications, breast density if available, and family history). Prepopulating available data into breast cancer risk calculators would allow clinicians to spend time on counseling women regarding breast cancer risk and appropriate screening methods. The TABLE provides a summary of useful breast cancer risk calculators and includes comments about their utility and significant limitations and benefits. In addition to breast cancer risk, the more comprehensive risk calculators (Tyrer-Cuzick and BOADICEA) allow calculation of ovarian cancer risk and gene mutation risk.

Routinely performing breast cancer risk assessment can guide discussions of screening mammography and can provide data for conducting a more individualized discussion on cancer genetic counseling and testing, risk reduction methods in high-risk women, and possible use of intensive breast cancer screening tools in identified high-risk women.

Ultimately, debating the question of whether all women should have routine breast cancer screening in their 40s should be passé. Ideally, all women should undergo breast cancer risk assessment in their 20s. Risk assessment results can then be used to guide the discussion of multiple potential interventions for women in their 40s (or earlier if appropriate), including routine screening mammography, cancer genetic counseling and testing in appropriate individuals, and intervention for women who are identified at high risk.

Absent breast cancer risk assessment, screening mammography still should be offered to women in their 40s, and the decision to proceed should be based on a discussion of risks, benefits, and the value the patient places on these factors.●

In the 1970s and early 1980s, population-based screening mammography was studied in numerous randomized control trials (RCTs), with the primary outcome of reduced breast cancer mortality. Although technology and the sensitivity of mammography in the 1980s was somewhat rudimentary compared with current screening, a meta-analysis of these RCTs demonstrated a clear mortality benefit for screening mammography.1 As a result, widespread population-based mammography was introduced in the mid-1980s in the United States and has become a standard for breast cancer screening.

Since that time, few RCTs of screening mammography versus observation have been conducted because of the ethical challenges of entering women into such studies as well as the difficulty and expense of long-term follow-up to measure the effect of screening on breast cancer mortality. Without ongoing RCTs of mammography, retrospective, observational, and computer simulation trials of the efficacy and harms of screening mammography have been conducted using proxy measures of mortality (such as stage at diagnosis), and some have questioned the overall benefit of screening mammography.2,3

To further complicate this controversy, some national guidelines have recommended against routinely recommending screening mammography for women aged 40 to 49 based on concerns that the harms (callbacks, benign breast biopsies, overdiagnosis) exceed the potential benefits (earlier diagnosis, possible decrease in needed treatments, reduced breast cancer mortality).4 This has resulted in a confusing morass of national recommendations with uncertainty regarding the question of whether to routinely offer screening mammography for women in their 40s at average risk for breast cancer.4-6

Recently, to address this question Duffy and colleagues conducted a large RCT of women in their 40s to evaluate the long-term effect of mammography on breast cancer mortality.7 Here, I review the study in depth and offer some guidance to clinicians and women struggling with screening decisions.

Breast cancer mortality significantly lower in the screening group

The RCT, known as the UK Age trial, was conducted in England, Wales, and Scotland and enrolled 160,921 women from 1990 through 1997.7 Women were randomly assigned in a 2:1 ratio to observation or annual screening mammogram beginning at age 39–41 until age 48. (In the United Kingdom, all women are screened starting at age 50.) Study enrollees were followed for a median of 22.8 years, and the primary outcome was breast cancer mortality.

The study results showed a 25% relative risk (RR) reduction in breast cancer mortality at 10 years of follow-up in the mammography group compared with the unscreened women (83 breast cancer deaths in the mammography group vs 219 in the observation group [RR, 0.75; 95% confidence interval (CI), 0.58–0.97; P = .029]). Based on the prevalence of breast cancer in women in their 40s, this 25% relative risk reduction translates into approximately 1 less death per 1,000 women who undergo routine screening in their 40s.

While there was no additional significant mortality reduction beyond 10 years of follow-up, as noted mammography is offered routinely starting at age 50 to all women in the United Kingdom. The authors concluded that “reducing the lower age limit for screening from 50 to 40 years [of age] could potentially reduce breast cancer mortality.”

Was overdiagnosis a concern? Another finding in this trial was related to overdiagnosis of breast cancer in the screened group. Overdiagnosis refers to mammographic-only diagnosis (that is, no clinical findings) of nonaggressive breast cancer, which would remain indolent and not harm the patient. The study results demonstrated essentially no overdiagnosis in women screened at age 40 compared with the unscreened group.

Continue to: Large trial, long follow-up are key strengths...

Large trial, long follow-up are key strengths

The UK Age trial’s primary strength is its study design: a large population-based RCT that included diverse participants with the critical study outcome for cancer screening (mortality). The study’s long-term follow-up is another key strength, since breast cancer mortality typically occurs 7 to 10 years after diagnosis. In addition, results were available for 99.9% of the women enrolled in the trial (that is, only 0.1% of women were lost to follow-up). Interestingly, the demonstrated mortality reduction with screening mammography for women in their 40s validates the mortality benefit demonstrated in other large RCTs of women in their 40s.1

Another strong point is that the study addresses the issue of whether screening women in their 40s results in overdiagnosis compared with women who start screening in their 50s. Further, this study validates a prior observational study that mammographic findings of nonprogressive cancers do not disappear, so nonaggressive cancers that present on mammography in women in their 40s still would be detected when women start screening in their 50s.8

Study limitations should be noted

The study has several limitations. For example, significant improvements have been made in breast cancer treatments that may mitigate against the positive impact of screening mammography. The impact of changed breast cancer management over the past 20 years could not be addressed with this study’s design since women would have been treated in the 1990s. In addition, substantial improvements have occurred in breast cancer screening standards (2 views vs the single view used in the study) and technology since the 1990s. Current mammography includes nearly uniform use of either digital mammography (DM) or digital breast tomosynthesis (DBT), both of which improve breast cancer detection for women in their 40s compared with the older film-screen technology. In addition, DBT reduces false-positive results by approximately 40%, resulting in fewer callbacks and biopsies. While improved cancer detection and reduced false-positive results are seen with DM and DBT, whether these technology improvements result in improved breast cancer mortality has not yet been sufficiently studied.

Perhaps the most important limitation in this study is that the women did not undergo routine risk assessment before trial entry to assure that they all were at “average risk.” As a result, both high- and average-risk women would have been included in this population-based trial. Without risk stratification, it remains uncertain whether the reduction in breast cancer mortality disproportionately exists within a high-risk subgroup (such as breast cancer gene mutation carriers).

Finally, the cost efficacy of routine screening mammography for women in their 40s was not evaluated in this study.

The UK Age trial in perspective

The good news is that there is the clear evidence that breast cancer mortality rates (deaths per 100,000) have decreased by about 40% over the past 50 years, likely due to improvements in breast cancer treatment and routine screening mammography.9 Breast cancer mortality reduction is particularly important because breast cancer remains the most common cancer and is the second leading cause of cancer death in women in the United States. In the past decade, considerable debate has arisen arguing whether this reduction in breast cancer mortality is due to improved treatments, routine screening mammography, or both. Authors of a retrospective trial in Australia, recently reviewed in OBG Management, suggested that the majority of improvement is due to improvements in treatment.3,10 However, as the authors pointed out, due to the trial’s retrospective design, causality only can be inferred. The current UK Age trial does add to the numerous prospective trials demonstrating mortality benefit for mammography in women in their 40s.11

What remains a challenge for clinicians, and for women struggling with the mammography question, is the absence of risk assessment in these long-term RCTs as well as in the large retrospective database studies. Without risk stratification, these studies treated the entire study population as “average risk.” Because breast cancer risk assessment is performed only sporadically in clinical practice and there are no published RCTs of screening mammography in risk-assessed “average risk” women in their 40s, it remains uncertain whether the women benefiting from screening in their 40s are in a high-risk group or whether women of average risk in this age group also are benefiting from routine screening mammography.

What’s next: Incorporate routine risk assessment into clinical practice

It is not time to abandon screening mammography for all women in their 40s. Rather, routine risk assessment should be performed using one of many available validated or widely tested tools, a recommendation supported by the American College of Obstetricians and Gynecologists, the National Comprehensive Cancer Network, and the US Preventive Services Task Force.5,6,12

Ideally, these tools can be incorporated into an electronic health record and prepopulated using already available patient data (such as age, reproductive risk factors, current medications, breast density if available, and family history). Prepopulating available data into breast cancer risk calculators would allow clinicians to spend time on counseling women regarding breast cancer risk and appropriate screening methods. The TABLE provides a summary of useful breast cancer risk calculators and includes comments about their utility and significant limitations and benefits. In addition to breast cancer risk, the more comprehensive risk calculators (Tyrer-Cuzick and BOADICEA) allow calculation of ovarian cancer risk and gene mutation risk.

Routinely performing breast cancer risk assessment can guide discussions of screening mammography and can provide data for conducting a more individualized discussion on cancer genetic counseling and testing, risk reduction methods in high-risk women, and possible use of intensive breast cancer screening tools in identified high-risk women.

Ultimately, debating the question of whether all women should have routine breast cancer screening in their 40s should be passé. Ideally, all women should undergo breast cancer risk assessment in their 20s. Risk assessment results can then be used to guide the discussion of multiple potential interventions for women in their 40s (or earlier if appropriate), including routine screening mammography, cancer genetic counseling and testing in appropriate individuals, and intervention for women who are identified at high risk.

Absent breast cancer risk assessment, screening mammography still should be offered to women in their 40s, and the decision to proceed should be based on a discussion of risks, benefits, and the value the patient places on these factors.

1. Nelson HD, Fu R, Cantor A, et al. Effectiveness of breast cancer screening: systematic review and meta-analysis to update the 2009 US Preventive Services Task Force recommendation. Ann Intern Med. 2016;164:244-255.

2. Bleyer A, Welch HG. Effect of three decades of screening mammography on breast-cancer incidence. N Engl J Med. 2012;367:1998-2005.

3. Burton R, Stevenson C. Assessment of breast cancer mortality trends associated with mammographic screening and adjuvant therapy from 1986 to 2013 in the state of Victoria, Australia. JAMA Netw Open. 2020;3:e208249.

4. Nelson HD, Cantor A, Humphrey L, et al. A systematic review to update the 2009 US Preventive Services Task Force recommendation. Evidence syntheses No. 124. AHRQ Publication No. 14-05201-EF-1. Rockville, MD: Agency for Healthcare Research and Quality; 2016.

5. Bevers TB, Helvie M, Bonaccio E, et al. Breast cancer screening and diagnosis, version 3.2018, NCCN clinical practice guidelines in oncology. J Natl Compr Canc Netw. 2018;16:1362-1389.

6. ACOG Committee on Practice Bulletins–Gynecology. Breast cancer risk assessment and screening in average-risk women. Obstet Gynecol. 2017;130:e1-e16.

7. Duffy SW, Vulkan D, Cuckle H, et al. Effect of mammographic screening from age 40 years on breast cancer mortality (UK Age trial): final results of a randomised, controlled trial. Lancet Oncol. 2020;21:1165-1172.

8. Arleo EK, Monticciolo DL, Monsees B, et al. Persistent untreated screening-detected breast cancer: an argument against delaying screening or increasing the interval between screenings. J Am Coll Radiol. 2017;14:863-867.

9. DeSantis CE, Ma J, Gaudet MM, et al. Breast cancer statistics, 2019. CA Cancer J Clin. 2019;69:438-451.

10. Kaunitz AM. How effective is screening mammography for preventing breast cancer mortality? OBG Manag. 2020;32(8):17,49.

11. Oeffinger KC, Fontham ET, Etzioni R, et al; American Cancer Society. Breast cancer screening for women at average risk: 2015 guideline update from the American Cancer Society. JAMA. 2015;314:1599-1614.

12. US Preventive Services Task Force; Owens DK, Davidson KW, Krist AH, et al. Risk assessment, genetic counseling, and genetic testing for BRCA-related cancer: US Preventive Services Task Force recommendation statement. JAMA. 2019;322:652-665.

Pathologic CR in HER2+ breast cancer predicts long-term survival

In fact, for the majority of women, pCR appears to be a marker of cure.

The NeoALTTO trial was conducted among 455 women with HER2-positive breast cancer tumors measuring at least 2 cm who were randomized to neoadjuvant trastuzumab, lapatinib, or both drugs in combination, each together with paclitaxel, followed by more chemotherapy and more of the same targeted therapy after surgery.

Relative to trastuzumab alone, trastuzumab plus lapatinib improved rates of pCR, as shown by data published in The Lancet in 2012. However, the dual therapy did not significantly prolong event-free or overall survival, according to data published in The Lancet Oncology in 2014. Findings were similar in an update at a median follow-up of 6.7 years, published in the European Journal of Cancer in 2019.

Study investigator Paolo Nuciforo, MD, PhD, of the Vall d’Hebron Institute of Oncology in Barcelona, reported the trial’s final results, now at a median follow-up of 9.7 years, at the 12th European Breast Cancer Conference.

There were no significant differences in 9-year outcomes by specific HER2-targeted therapy. However, in a landmark analysis among women who were event free and still on follow-up 30 weeks after randomization, those achieving pCR with any of the therapies were 52% less likely to experience events and 63% less likely to die. Benefit was greatest in the subset of patients with hormone receptor–negative disease.

“The long-term follow-up confirms that, independent of the treatment regimen that we use – in this case, the dual blockade was with lapatinib, but similar results can be expected with other dual blockade – the pCR is a very robust surrogate biomarker of long-term survival,” Dr. Nuciforo commented in a press conference, noting that dual trastuzumab and pertuzumab has emerged as the standard of care.

“If we really pay attention to the curve, it’s maybe interesting to see that, after year 6, we actually don’t see any events in the pCR population. So this means that these patients are almost cured. We cannot say the word ‘cure’ in cancer, but it’s very reassuring to see the long-term survival analysis support the use of pCR as an endpoint,” he elaborated.

“Our results support the design of future trial concepts in HER2-positive early breast cancer which use pCR as an early efficacy readout of long-term benefit to escalate or deescalate therapy, particularly for hormone receptor–negative tumors,” Dr. Nuciforo concluded.

Support for current practice

“The study lends support for the current practice of risk-stratifying by pCR as well as making treatment decisions regarding T-DM1 [trastuzumab emtansine], and there hasn’t been a big change between 5-year and 9-year outcomes,” Lisa A. Carey, MD, of the University of North Carolina at Chapel Hill Lineberger Comprehensive Cancer Center, commented in an interview.

The lack of late events in the group with pCR technically meets the definition of cure, Dr. Carey said. “I think it speaks to the relatively early relapse risk in HER2-positive breast cancer and the impact of anti-HER2 therapy that carries forward. In general, these are findings similar to long-term findings of other trials and I suspect will be the same for any regimen.”

Although the analysis of dual lapatinib-trastuzumab therapy was underpowered, the trends seen align with favorable results in the adjuvant APHINITY trial (which combined trastuzumab with pertuzumab) and the neoadjuvant CALGB 40601 trial (which combined trastuzumab with lapatinib), according to Dr. Carey. “There has been a trend in every other study [of dual therapy] performed, so this is consistent.”

Study details

NeoALTTO is noteworthy for having the longest follow-up among all neoadjuvant studies of dual HER2 blockade in early breast cancer, Dr. Nuciforo said.

He reported no significant difference in survival between the treatment arms at 9 years.

The 9-year rate of event-free survival was 69% with lapatinib-trastuzumab, 63% with lapatinib alone, and 65% with trastuzumab alone. The corresponding 9-year rates of overall survival were 80%, 77%, and 76%, respectively.

However, there were significant differences in event-free and overall survival among women who achieved pCR and those who did not.

“pCR was achieved for almost twice as many patients treated with dual HER2 blockade, compared with patients in the single-agent arms,” Dr. Nuciforo pointed out. The pCR rate was 51.3% with lapatinib-trastuzumab, 24.7% with lapatinib alone, and 29.5% with trastuzumab alone.

Relative to peers who did not achieve pCR, women who did had better 9-year event-free survival (77% vs. 61%; adjusted hazard ratio, 0.48; P = .0008). The benefit was stronger in hormone receptor–negative disease (HR, 0.43; P = .002) than in hormone receptor–positive disease (HR, 0.60; P = .15).

The pattern was similar for overall survival at 9 years – 88% in those who achieved a pCR and 72% in those who did not (adjusted HR, 0.37; P = .0004). Again, greater benefit was seen in hormone receptor–negative disease (HR, 0.33; P = .002) than in hormone receptor–positive disease (HR, 0.44; P = .09).

“Biomarker-driven approaches may improve selection of those patients who are more likely to respond to anti-HER2 therapies,” Dr. Nuciforo proposed.

From 6 years onward, there were no additional fatal adverse events or nonfatal serious adverse events recorded, and no additional primary cardiac endpoints were recorded.

The study was funded by Novartis. Dr. Nuciforo and Dr. Carey disclosed no conflicts of interest.

SOURCE: Nuciforo P et al. EBCC-12 Virtual Conference, Abstract 23.

FROM EBCC-12 VIRTUAL CONFERENCE

Combined features of benign breast disease tied to breast cancer risk

“Benign breast disease is a key risk factor for breast cancer risk prediction,” commented presenting investigator Marta Román, PhD, of the Hospital del Mar Medical Research Institute in Barcelona. “Those women who have had a benign breast disease diagnosis have an increased risk that lasts for at least 20 years.”

To assess the combined influence of various attributes of benign breast disease, the investigators studied 629,087 women, aged 50-69 years, in Spain who underwent population-based mammographic breast cancer screening during 1994-2015 and did not have breast cancer at their prevalent (first) screen. The mean follow-up was 7.8 years.

Results showed that breast cancer risk was about three times higher for women with benign breast disease that was proliferative or that was detected on an incident screen, relative to peers with no benign breast disease. When combinations of factors were considered, breast cancer risk was most elevated – more than four times higher – for women with proliferative benign breast disease with atypia detected on an incident screen.

“We believe that these findings should be considered when discussing risk-based personalized screening strategies because these differences between prevalent and incident screens might be important if we want to personalize the screening, whether it’s the first time a woman comes to the screening program or a subsequent screen,” Dr. Román said.

Practice changing?

The study’s large size and population-based design, likely permitting capture of most biopsy results, are strengths, Mark David Pearlman, MD, of the University of Michigan, Ann Arbor, commented in an interview.

But its observational, retrospective nature opens the study up to biases, such as uncertainty as to how many women were symptomatic at the time of their mammogram and the likelihood of heightened monitoring after a biopsy showing hyperplasia, Dr. Pearlman cautioned.

“Moreover, the relative risk in this study for proliferative benign breast disease without atypia is substantially higher than prior observations of this group. This discrepancy was not discussed by the authors,” Dr. Pearlman said.

At present, women’s risk of breast cancer is predicted using well-validated models that include the question of prior breast biopsies, such as the Gail Model, the Tyrer-Cuzick model (IBIS tool), and the Breast and Ovarian Analysis of Disease Incidence and Carrier Estimation Algorithm, Dr. Pearlman noted.

“This study, without further validation within a model, would not change risk assessment,” he said, disagreeing with the investigators’ conclusions. “What I would say is that further study to determine how to use this observation to decide if any change in screening or management should occur would be more appropriate.”

Study details

The 629,087 women studied underwent 2,327,384 screens, Dr. Román reported. In total, screening detected 9,184 cases of benign breast disease and 9,431 breast cancers.

Breast cancer was diagnosed in 2.4% and 3.0% of women with benign breast disease detected on prevalent and incident screens, respectively, compared with 1.5% of women without any benign breast disease detected.

Elevation of breast cancer risk varied across benign breast disease subtype. Relative to peers without any benign disease, risk was significantly elevated for women with nonproliferative disease (adjusted hazard ratio, 1.95), proliferative disease without atypia (aHR, 3.19), and proliferative disease with atypia (aHR, 3.82).

Similarly, elevation of risk varied depending on the screening at which the benign disease was detected. Risk was significantly elevated when the disease was found at prevalent screens (aHR, 1.87) and more so when it was found at incident screens (aHR, 2.67).

There was no significant interaction of these two factors (P = .83). However, when combinations were considered, risk was highest for women with proliferative benign breast disease with atypia detected on incident screens (aHR, 4.35) or prevalent screens (aHR, 3.35), and women with proliferative benign breast disease without atypia detected on incident screens (aHR, 3.83).

This study was supported by grants from Instituto de Salud Carlos III FEDER and by the Research Network on Health Services in Chronic Diseases. Dr. Román and Dr. Pearlman disclosed no conflicts of interest.

SOURCE: Román M et al. EBCC-12 Virtual Conference, Abstract 15.

“Benign breast disease is a key risk factor for breast cancer risk prediction,” commented presenting investigator Marta Román, PhD, of the Hospital del Mar Medical Research Institute in Barcelona. “Those women who have had a benign breast disease diagnosis have an increased risk that lasts for at least 20 years.”

To assess the combined influence of various attributes of benign breast disease, the investigators studied 629,087 women, aged 50-69 years, in Spain who underwent population-based mammographic breast cancer screening during 1994-2015 and did not have breast cancer at their prevalent (first) screen. The mean follow-up was 7.8 years.

Results showed that breast cancer risk was about three times higher for women with benign breast disease that was proliferative or that was detected on an incident screen, relative to peers with no benign breast disease. When combinations of factors were considered, breast cancer risk was most elevated – more than four times higher – for women with proliferative benign breast disease with atypia detected on an incident screen.

“We believe that these findings should be considered when discussing risk-based personalized screening strategies because these differences between prevalent and incident screens might be important if we want to personalize the screening, whether it’s the first time a woman comes to the screening program or a subsequent screen,” Dr. Román said.

Practice changing?

The study’s large size and population-based design, likely permitting capture of most biopsy results, are strengths, Mark David Pearlman, MD, of the University of Michigan, Ann Arbor, commented in an interview.

But its observational, retrospective nature opens the study up to biases, such as uncertainty as to how many women were symptomatic at the time of their mammogram and the likelihood of heightened monitoring after a biopsy showing hyperplasia, Dr. Pearlman cautioned.

“Moreover, the relative risk in this study for proliferative benign breast disease without atypia is substantially higher than prior observations of this group. This discrepancy was not discussed by the authors,” Dr. Pearlman said.

At present, women’s risk of breast cancer is predicted using well-validated models that include the question of prior breast biopsies, such as the Gail Model, the Tyrer-Cuzick model (IBIS tool), and the Breast and Ovarian Analysis of Disease Incidence and Carrier Estimation Algorithm, Dr. Pearlman noted.

“This study, without further validation within a model, would not change risk assessment,” he said, disagreeing with the investigators’ conclusions. “What I would say is that further study to determine how to use this observation to decide if any change in screening or management should occur would be more appropriate.”

Study details

The 629,087 women studied underwent 2,327,384 screens, Dr. Román reported. In total, screening detected 9,184 cases of benign breast disease and 9,431 breast cancers.

Breast cancer was diagnosed in 2.4% and 3.0% of women with benign breast disease detected on prevalent and incident screens, respectively, compared with 1.5% of women without any benign breast disease detected.
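As a rough, back-of-the-envelope check, the percentages above can be turned into crude (unadjusted) risk ratios. This minimal sketch uses only the figures reported in this article; the function and variable names are illustrative. Crude ratios ignore follow-up time and covariates, so they are not expected to match the adjusted hazard ratios the investigators reported.

```python
# Share of women diagnosed with breast cancer, as reported in the article (%).
cancer_pct = {
    "no benign breast disease": 1.5,
    "benign disease on prevalent screen": 2.4,
    "benign disease on incident screen": 3.0,
}


def crude_ratio(group_pct: float, reference_pct: float) -> float:
    """Unadjusted ratio of two proportions (not a hazard ratio)."""
    return group_pct / reference_pct


reference = cancer_pct["no benign breast disease"]
for group, pct in cancer_pct.items():
    if group == "no benign breast disease":
        continue
    # Rounded for display only; the underlying inputs have one decimal place.
    print(f"{group}: crude ratio {crude_ratio(pct, reference):.2f}")
```

The crude ratios point in the same direction as the adjusted estimates, with a larger elevation when benign disease is found on an incident screen.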

Elevation of breast cancer risk varied across benign breast disease subtype. Relative to peers without any benign disease, risk was significantly elevated for women with nonproliferative disease (adjusted hazard ratio, 1.95), proliferative disease without atypia (aHR, 3.19), and proliferative disease with atypia (aHR, 3.82).

Similarly, elevation of risk varied depending on the screening at which the benign disease was detected. Risk was significantly elevated when the disease was found at prevalent screens (aHR, 1.87) and more so when it was found at incident screens (aHR, 2.67).

There was no significant interaction of these two factors (P = .83). However, when combinations were considered, risk was highest for women with proliferative benign breast disease with atypia detected on incident screens (aHR, 4.35) or prevalent screens (aHR, 3.35), and women with proliferative benign breast disease without atypia detected on incident screens (aHR, 3.83).

This study was supported by grants from Instituto de Salud Carlos III FEDER and by the Research Network on Health Services in Chronic Diseases. Dr. Román and Dr. Pearlman disclosed no conflicts of interest.

SOURCE: Román M et al. EBCC-12 Virtual Conference, Abstract 15.

FROM EBCC-12 VIRTUAL CONFERENCE

HIT-6 may help track meaningful change in chronic migraine

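The 6-item Headache Impact Test (HIT-6) may help track meaningful change in patients with chronic migraine, recent research suggests.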

Using data from the phase 3 PROMISE-2 study, which evaluated intravenous eptinezumab at doses of 100 mg or 300 mg versus placebo every 12 weeks in 1,072 participants for the prevention of chronic migraine, Carrie R. Houts, PhD, director of psychometrics at the Vector Psychometric Group, in Chapel Hill, N.C., and colleagues determined that their finding of a 6-point improvement in HIT-6 total score was consistent with other studies. However, they pointed out that little research has been done on item-specific HIT-6 scores in individuals with chronic migraine. The HIT-6 items ask whether individuals with headaches experience severe pain, limit their daily activities, have a desire to lie down, feel too tired to do daily activities, feel “fed up or irritated” because of headaches, and feel their headaches limit concentration on work or daily activities.

“The item-specific responder definitions give clinicians and researchers the ability to evaluate and track the impact of headache on specific item-level areas of patients’ lives. These responder definitions provide practical and easily interpreted results that can be used to evaluate treatment benefits over time and to improve clinician-patients communication focus on improvements in key aspects of functioning in individuals with chronic migraine,” Dr. Houts and colleagues wrote in their study, published in the October issue of Headache.

The 6-point value and the 1-2 category improvement values in item-specific scores, they suggested, could be used as a benchmark to help other clinicians and researchers detect meaningful change in individual patients with chronic migraine. Although the user guide for HIT-6 highlights a 5-point change in the total score as clinically meaningful, the authors of the guide do not provide evidence for why the 5-point value signifies clinically meaningful change, they said.

Determining thresholds of clinically meaningful change

In their study, Dr. Houts and colleagues used distribution-based methods to gauge responder values for the HIT-6 total score, while item-specific HIT-6 analyses were measured with Patients’ Global Impression of Change (PGIC), reduction in migraine frequency through monthly migraine days (MMDs), and EuroQol 5 dimensions 5 levels visual analog scale (EQ-5D-5L VAS). The researchers also used HIT-6 values from a literature review and from analyses in PROMISE-2 to calculate “a final chronic migraine-specific responder definition value” between baseline and 12 weeks. Participants in the PROMISE-2 study were mostly women (88.2%) and white (91.0%) with a mean age of 40.5 years.

The literature search revealed responder thresholds for the HIT-6 total score ranging from a decrease of 3 points to a decrease of 8 points. Within PROMISE-2, the HIT-6 total score responder threshold was found to be between –2.6 and –2.2, which the researchers rounded down to a decrease of 3 points. When taking both sets of responder thresholds into account, the researchers calculated the median responder value as –5.5, which was rounded down to a decrease of 6 points in the HIT-6 total score. “[The estimate] appears most appropriate for discriminating between individuals with chronic migraine who have experienced meaningful change over time and those who have not,” Dr. Houts and colleagues said.

For item-specific HIT-6 scores, the mean score changes were –1 point for categories involving severe pain, limiting activities, and lying down, and –2 points for categories involving feeling tired, being fed up or irritated, and limiting concentration.

“Taken together, the current chronic migraine-specific results are consistent with values derived from general headache/migraine samples and suggest that a decrease of 6 points or more on the HIT-6 total score would be considered meaningful to chronic migraine patients,” Dr. Houts and colleagues said. “This would translate to approximately a 4-category change on a single item, change on 2 items of approximately 2 and 3 categories, or a 1-category change on 3 or 4 of the 6 items, depending on the initial category.”
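To make the arithmetic concrete, the following is a minimal sketch of how a 6-point responder check might look. It assumes the standard HIT-6 scoring, which is background not stated in this article: each of the six items is answered never, rarely, sometimes, very often, or always, scored 6, 8, 10, 11, and 13 points respectively. The function names and the example patient are hypothetical.

```python
# Standard HIT-6 response weights (assumed background, not from the article).
ITEM_POINTS = {"never": 6, "rarely": 8, "sometimes": 10, "very often": 11, "always": 13}

# Decrease in total score proposed in the study as meaningful for chronic migraine.
RESPONDER_THRESHOLD = 6


def hit6_total(responses):
    """Sum the six item scores; the total ranges from 36 to 78."""
    if len(responses) != 6:
        raise ValueError("HIT-6 requires exactly six item responses")
    return sum(ITEM_POINTS[r] for r in responses)


def is_responder(baseline, follow_up, threshold=RESPONDER_THRESHOLD):
    """True if the total score fell by at least `threshold` points."""
    return hit6_total(baseline) - hit6_total(follow_up) >= threshold


# Hypothetical patient: four items each improve by one response category.
baseline = ["always", "very often", "very often", "always", "very often", "sometimes"]
week_12 = ["very often", "sometimes", "sometimes", "very often", "very often", "sometimes"]

print(hit6_total(baseline), hit6_total(week_12), is_responder(baseline, week_12))
```

In this made-up example, a one-category improvement on four items lowers the total from 69 to 63, which just meets the 6-point threshold. Because the steps between categories are worth 1 or 2 points, the number of items that must change depends on the starting category, as the authors note.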

The researchers cautioned that the values outlined in the study “should not be used to determine clinically meaningful difference between treatment groups” and that “future work, similar to that reported here, will identify a chronic migraine-specific clinically meaningful difference between treatment groups value.”

A better measure of chronic migraine?

In an interview, J. D. Bartleson Jr., MD, a retired neurologist with the Mayo Clinic in Rochester, Minn., questioned why HIT-6 criteria were used in the initial PROMISE-2 study. “There is not a lot of difference between the significant and insignificant categories. Chronic migraine may be better measured with pain severity and number of headache days per month,” he said.

As for clinical application of the study for neurologists, “it may be appropriate to use just 1 or 2 symptoms for evaluating a given patient’s headache burden,” Dr. Bartleson said. He emphasized that more research is needed.

This study was funded by H. Lundbeck A/S, which also provided funding of medical writing and editorial support for the manuscript. Three authors report being employees of Vector Psychometric Group at the time of the study, and the company received funding from H. Lundbeck A/S for their time conducting study-related research. Three other authors report relationships with pharmaceutical companies, medical societies, government agencies, and industry related to the study in the form of consultancies, advisory board memberships, honoraria, research support, stock or stock options, and employment. Dr. Bartleson reports no relevant conflicts of interest.
