Cognitive bias: Its influence on clinical diagnosis

CASE A patient with a history of drug-seeking behavior asks to be seen by you for lower back pain. Your impression upon entering the examination room is that the patient appears to be in minimal pain. A review of the patient’s chart leads you to suspect that the patient’s past behavior pattern is the reason for the visit. You find yourself downplaying his reports of weight loss, changed bowel habits, and lower extremity weakness—despite the fact that these complaints might have led you to consider more concerning causes of back pain in a different patient.

This situation is not uncommon. At one time or another, it’s likely that we have all placed an undue emphasis on a patient’s social background to reinforce a pre-existing opinion of the likely diagnosis. Doing so is an example of both anchoring and confirmation biases—just 2 of the many biases known to influence critical thinking in clinical practice (and which we’ll describe in a bit).

Reconsidering the diagnostic process. Previous attempts to address the issue of incorrect diagnosis and medical error have focused on systems-based approaches such as adopting electronic medical records to avert prescribing errors or eliminating confusing abbreviations in documentation.

Graber et al reviewed 100 errors involving internists and found that 46% of the errors resulted from a combination of systems-based and cognitive reasoning factors.2 More surprisingly, 28% of errors were attributable to reasoning failures alone.2 Singh et al showed that in one primary care network, most errors occurred during the patient-doctor encounter, with 56% involving errors in history taking and 47% involving oversights in the physical examination.3 Furthermore, most of the errors occurred in the context of common conditions such as pneumonia and congestive heart failure, rather than esoteric diseases, implying that the failures were due to errors in the diagnostic process rather than to a lack of knowledge.3

An understanding of the diagnostic process and the etiology of diagnostic error is of utmost importance in primary care. Family physicians, who see a high volume of patients with predominantly low-acuity conditions on a daily basis, must be vigilant for the rare life-threatening condition that may mimic a more benign disease. It is in this setting that cognitive errors may abound, leading to both patient harm and emotional stress in physicians.3

This article reviews the current understanding of the cognitive pathways involved in diagnostic decision making, explains the factors that contribute to diagnostic errors, and summarizes the current research aimed at preventing these errors.

The diagnostic process, as currently understood

Much of what is understood about the cognitive processes involved in diagnostic reasoning is built on research done in the field of behavioral science, specifically the foundational work by psychologists Amos Tversky and Daniel Kahneman in the 1970s.4 Only relatively recently has the medical field begun to apply the findings of this research in its attempt to understand how clinicians diagnose.1 This work led to the identification of 2 main cognitive pathways, described by Croskerry and others.5

Type 1 processing, also referred to as the “intuitive” approach, uses a rapid, largely subconscious pattern-recognition method. Much in the same way one recognizes a familiar face, the clinician using a type 1 process rapidly comes to a conclusion by seeing a recognizable pattern among the patient’s signs and symptoms. For example, crushing chest pain radiating to the left arm instantly brings to mind a myocardial infarction without the clinician methodically formulating a differential diagnosis.4,5

Type 2 processing is an “analytic” approach in which the provider considers the salient characteristics of the case, generates a list of hypotheses, and proceeds to systematically test them and come to a more definitive conclusion.5 For example, an intern encountering a patient with a painfully swollen knee will consider the possibilities of septic arthritis, Lyme disease, and gout, and then carefully determine the likelihood of each disease based on the evidence available at the time.

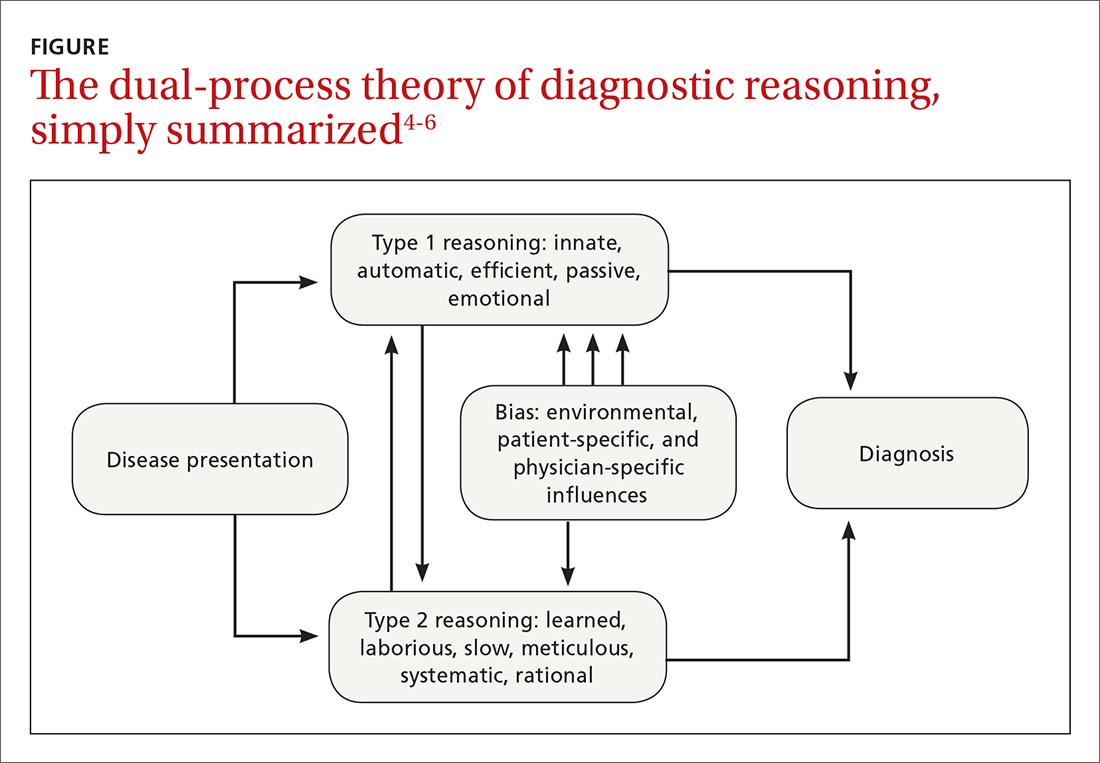

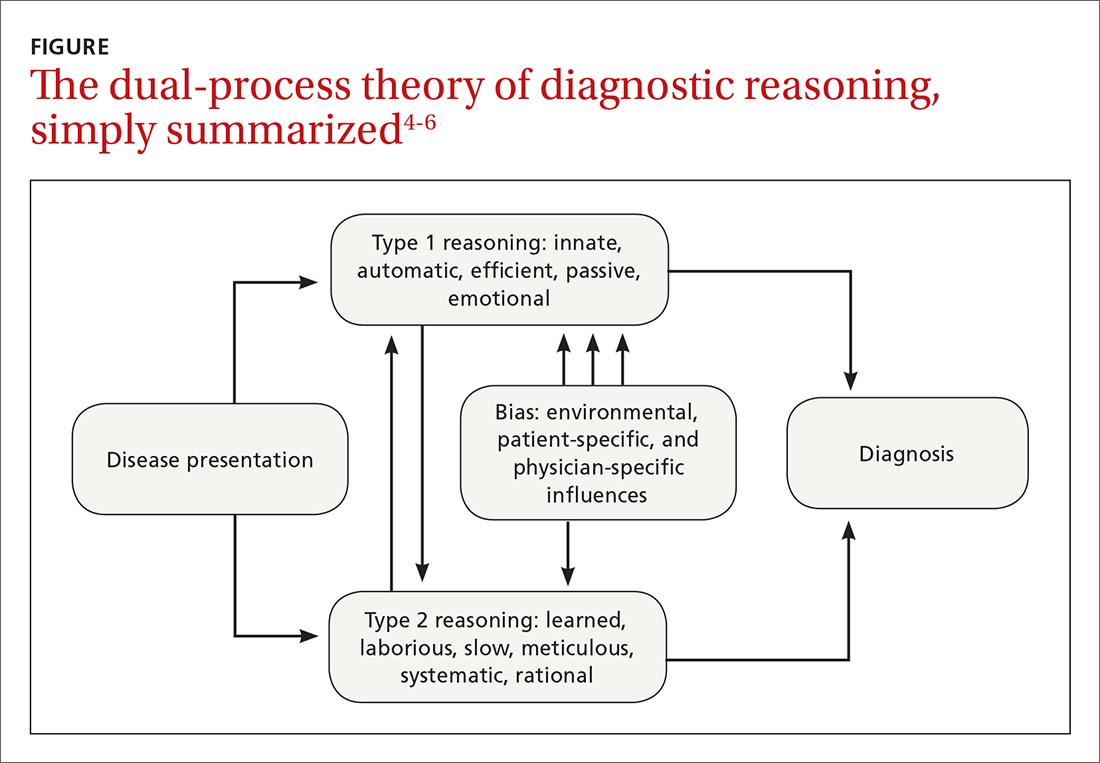

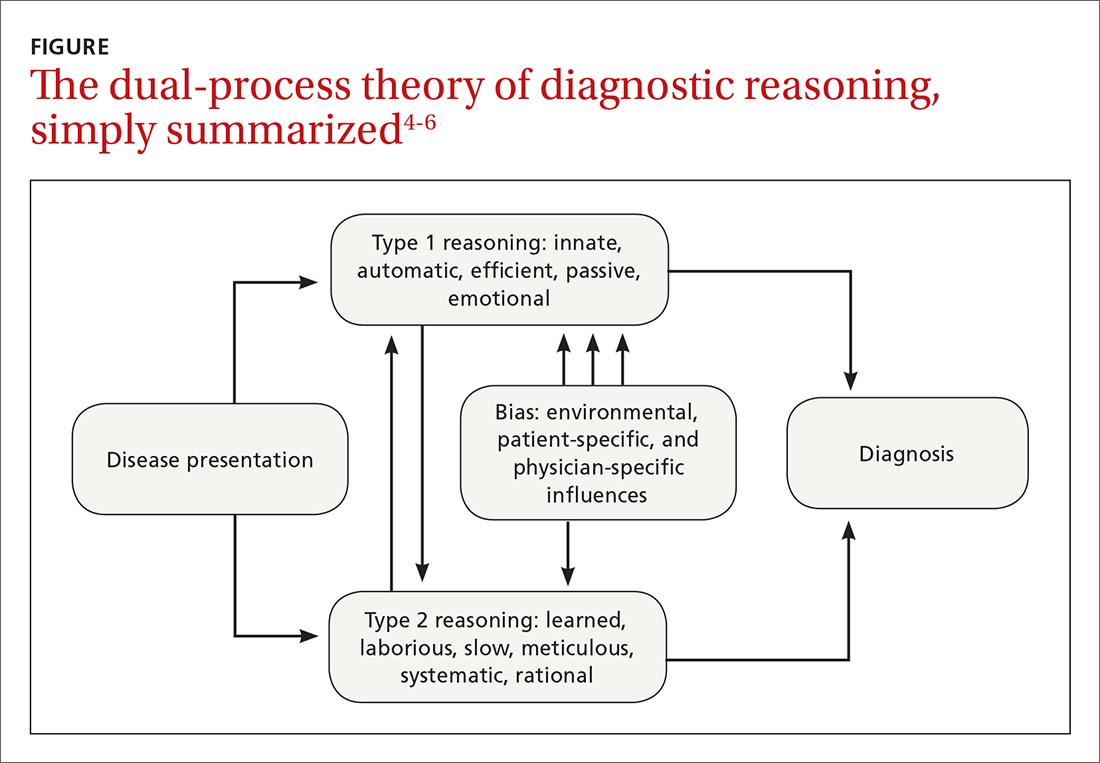

How the processes work in practice. While these 2 pathways are well studied within behavioral circles and are even supported by neurobiologic evidence, most clinical encounters incorporate both methodologies in parallel, as described by the “dual-process” theory (FIGURE).4-6

For example, during an initial visit for back pain, a patient may begin by relaying that the discomfort began after lifting a heavy object. Immediately the clinician, using a type 1 process, will suspect a simple lumbar strain. However, upon further questioning, the patient reveals that the pain occurs at rest and wakes him from sleep; these characteristics are atypical for a simple strain. At this point, the clinician may switch to a type 2 analytic approach and generate a broad differential that includes infection and malignancy.
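The switch described in the back pain example can be sketched in a few lines of Python. This is only an illustration of the dual-process idea, not a clinical tool: the names `RED_FLAGS`, `Assessment`, and `assess_back_pain` are hypothetical, and the red-flag list is an assumption drawn from the examples in this article rather than a validated rule.

```python
from dataclasses import dataclass

# Assumption: illustrative red flags taken from the examples in this article
# (pain at rest, pain that wakes the patient, weight loss, leg weakness).
RED_FLAGS = frozenset({
    "pain at rest",
    "pain wakes patient from sleep",
    "weight loss",
    "lower extremity weakness",
})


@dataclass
class Assessment:
    mode: str            # "type 1" (intuitive) or "type 2" (analytic)
    differential: list   # working list of diagnoses
    triggers: list       # findings that prompted the switch, if any


def assess_back_pain(findings):
    """Begin with a type 1 impression; switch to type 2 if a red flag appears."""
    triggers = sorted(RED_FLAGS & set(findings))
    if triggers:
        # An atypical feature prompts a deliberate, broader differential.
        return Assessment("type 2", ["lumbar strain", "infection", "malignancy"], triggers)
    # The pattern fits; the intuitive impression stands.
    return Assessment("type 1", ["lumbar strain"], [])


initial = assess_back_pain({"onset after lifting"})
revised = assess_back_pain({"onset after lifting", "pain at rest",
                            "pain wakes patient from sleep"})
print(initial.mode, initial.differential)
print(revised.mode, revised.differential, revised.triggers)
```

The point of the sketch is the branch, not the list: the intuitive answer is the default, and the analytic pathway is entered only when a finding fails to fit the recognized pattern.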

Heuristics: Indispensable, yet susceptible to bias

Heuristics are cognitive shortcuts often operating subconsciously to solve problems more quickly and efficiently than if the problem were analyzed and solved deductively.7 The act of driving a car, for instance, is a complex everyday task wherein the use of heuristics is not just efficient but essential. Deliberately analyzing and consciously considering every action required in daily living prior to execution would be impractical and even dangerous.

Heuristics also have a role in the practice of medicine. When presented with a large volume of low-acuity patients, the primary care provider would find it impractical to formulate an extensive differential and test each diagnosis before devising a plan of action. Using heuristics during clinical decision-making, however, does make the clinician more vulnerable to biases, which are described in the text that follows.

Biases

Bias is the psychological tendency to make a decision based on incomplete information and subjective factors rather than empirical evidence.4

Anchoring. One of the best-known biases, described in both behavioral science and medical literature, is anchoring. With this bias, the clinician fixates on a particular aspect of the patient’s initial presentation, excluding other more relevant clinical facts.8

A busy clinician, for example, may be notified by a medical assistant that the patient in Room One is complaining about fatigue and seems very depressed. The clinician then unduly anchors his thought process to this initial label of a depressed patient and, without much deliberation, prescribes an antidepressant medication. Had the physician inquired about skin and hair changes (unusual in depression), the more probable diagnosis of hypothyroidism would have come to mind.

Premature closure is another well-known bias associated with diagnostic errors.2,6 This is the tendency to cease inquiry once a possible solution for a problem is found. As the name implies, premature closure leads to an incomplete investigation of the problem and perhaps to incorrect conclusions.

If police arrested a potential suspect in a crime and halted the investigation, it’s possible the true culprit might not be found. In medicine, a classic example would be a junior clinician presented with a case of rectal bleeding in a 75-year-old man who has experienced weight loss and a change in bowel movements. The clinician observes a small nonfriable external hemorrhoid, incorrectly attributes the patient’s symptoms to that finding, and does not pursue the more appropriate investigation for gastrointestinal malignancy.

Interconnected biases. Often diagnostic errors are the result of multiple interconnected biases. For example, a busy emergency department physician is told that an unconscious patient smells of alcohol, so he is “probably drunk and just needs to sleep it off” (anchoring bias). The physician then examines the patient, who is barely arousable and indeed has a heavy odor of alcohol. The physician, therefore, decides not to order a basic laboratory work-up (premature closure). Because of this, the physician misses the correct and life-threatening diagnosis of a mental status change due to alcoholic ketoacidosis.6

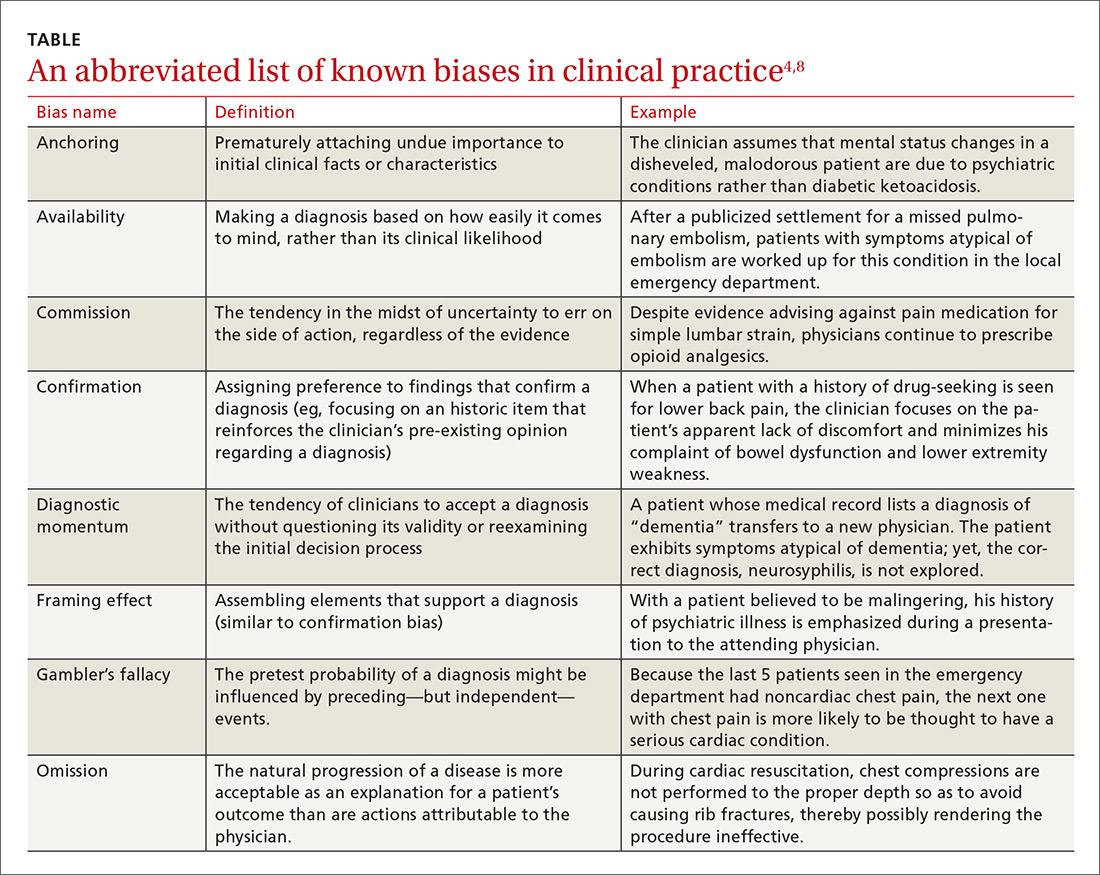

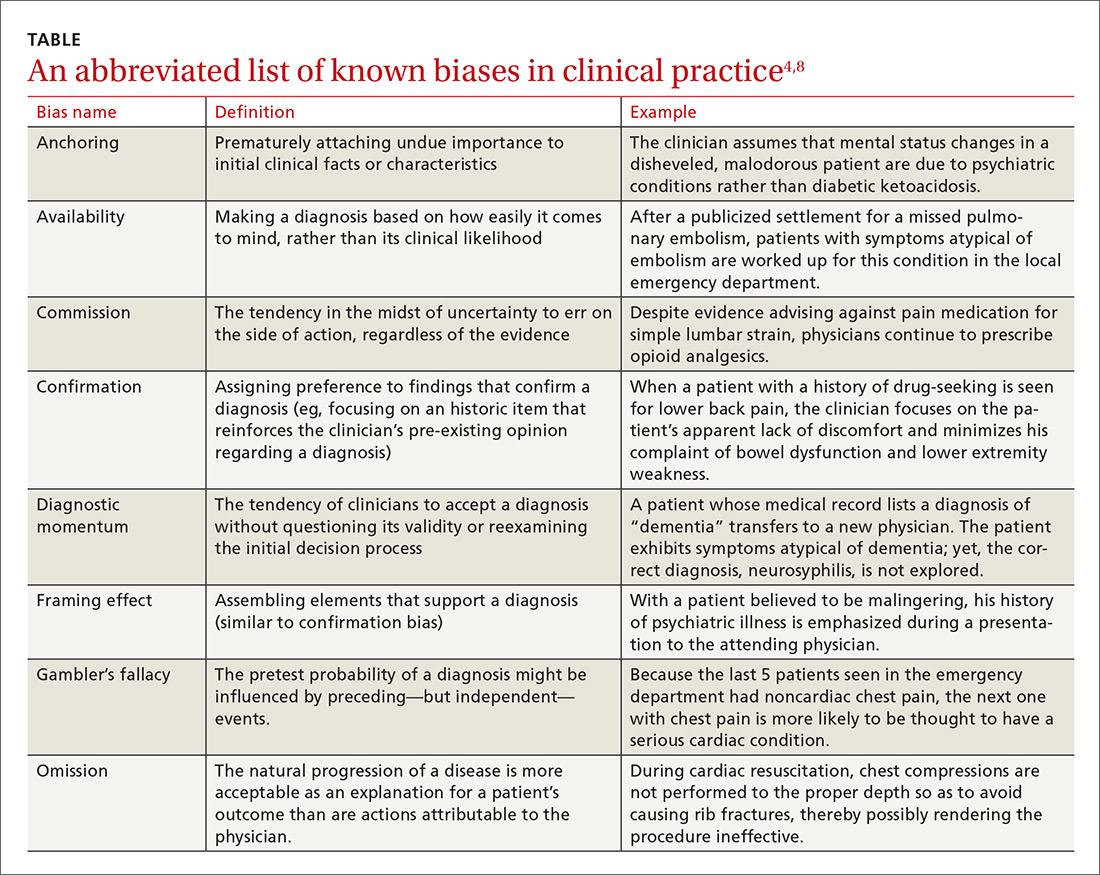

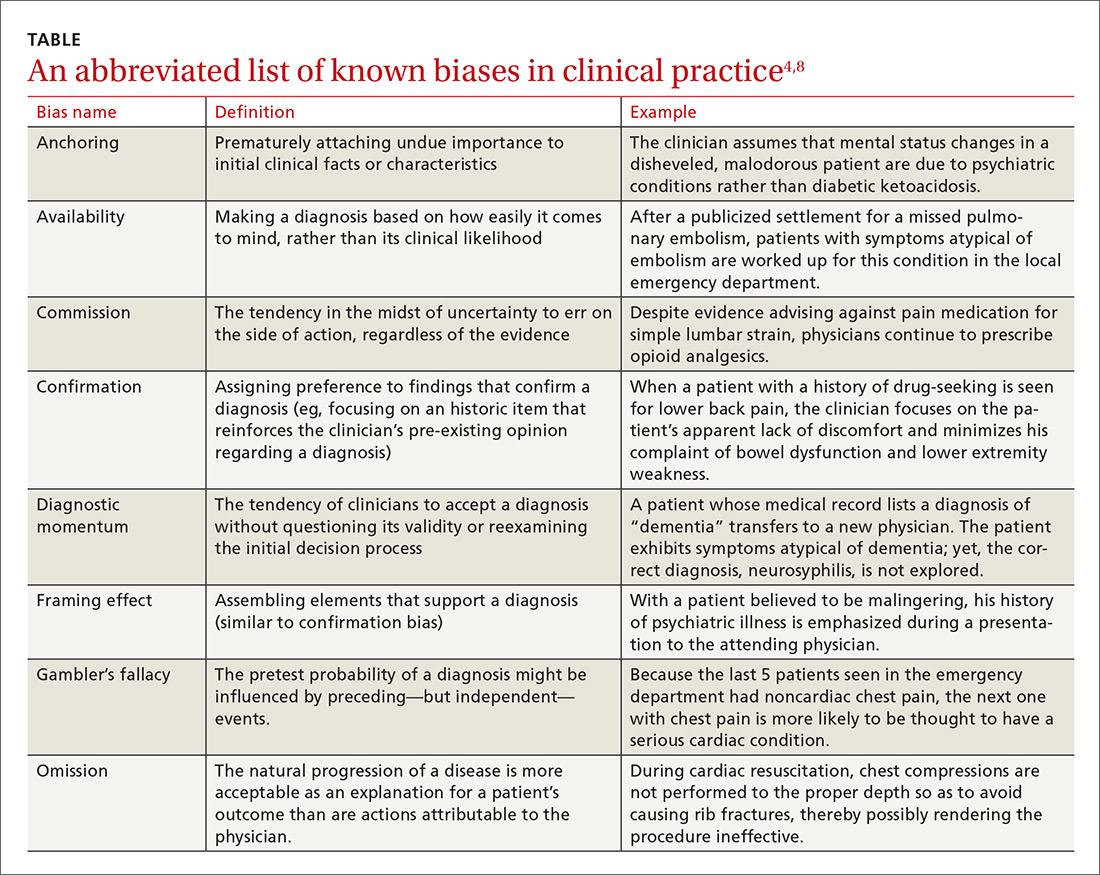

Numerous other biases have been identified and studied.4,8 While an in-depth examination of all biases is beyond the scope of this article, some of those most relevant to medical practice are listed and briefly defined in the TABLE.4,8

Multiple studies point to the central role biases play in diagnostic error. A systematic review by Saposnik et al found that physician cognitive biases were associated with diagnostic errors in 36.5% to 77% of case studies, and that 71% of the studies reviewed found an association between cognitive errors and therapeutic errors.6 In experimental studies, cognitive biases have also been shown to decrease accuracy in the interpretation of radiologic studies and electrocardiograms.9 In one case review, cognitive errors were identified in 74% of cases where an actual medical error had been committed.2

The human component: When the patient is “difficult”

Failures in reasoning are not solely responsible for diagnostic errors. One increasingly scrutinized cause of impaired clinical judgment is the physician-patient relationship, especially one involving a “difficult” patient. Additionally, the medical literature is beginning to highlight the strong correlation between clinician fatigue or burnout and diagnostic errors.10

Patient-specific factors clearly impact the likelihood of diagnostic error. One randomized controlled trial showed that patients with disruptive behaviors negatively influence the accuracy of clinicians’ diagnoses.11 In this study, family medicine residents made 42% more diagnostic errors when evaluating complex clinical presentations involving patients with negative interpersonal characteristics (demeaning, aggressive, or demanding communication styles). Even with simple clinical problems, difficult patient behaviors were associated with a 6% higher rate of error than when such behaviors were absent, although this finding did not reach statistical significance.11

Researchers have proposed the “resource depletion” theory as an explanation for this finding.11 A patient with difficult behaviors will require additional cognitive resources from the physician to manage those behaviors.11 This leaves less cognitive capacity for solving the diagnostic problem.11 Furthermore, Riskin et al demonstrated that pediatric intensive care teams committed more medical errors and performed more poorly as a team when exposed to simulated families displaying rude behavior.12 Clearly, the power of the patient-physician relationship cannot be overstated when discussing diagnostic error.

Strategies for reducing errors in the diagnostic process

Although the mental pathways involved in diagnostic reasoning have become better elucidated, there is still considerable controversy and uncertainty surrounding effective ways to counter errors. In their review of the literature, Norman et al concluded that diagnostic errors are multifactorial and that strategies that solely educate novice clinicians about biases are unlikely to lead to significant gains because of “limited transfer.”9 That is, when trainees are simply taught the theory of cognitive errors before they have had time to accumulate real-world experience, they do not learn how to apply corrective solutions.

Graber et al argue that mental shortcuts are often a beneficial behavior, and it would be unrealistic and perhaps even detrimental to eliminate them completely from clinical judgment.13 Despite the controversy, several corrective methods have been proposed and have shown promise. Two such methods are medical education on cognitive error and the use of differential diagnosis generators.2

Medical education on cognitive error. If heuristics and biases are acquired subconscious patterns of thinking, then it would be logical to assume that the most effective way to prevent their intrusion into the clinical decision-making process would be to intervene when the art of diagnosis is taught. Graber et al reference several small studies that demonstrated a modest improvement in diagnostic accuracy when learners were educated about cognitive biases and clinical judgment.13

Additionally, Mamede et al describe how structured reflection during case-based learning enhanced diagnostic accuracy among medical students.14 However, none of these studies has proven that increased awareness of cognitive biases results in fewer delayed or missed diagnoses in clinical practice. Clearly, further research is needed to determine whether the skills gained in the classroom would be transferable to clinical practice and result in lower rates of delayed or missed diagnoses. Future studies could also investigate whether these findings are replicable when applied to more experienced clinicians rather than medical students and residents.

Differential diagnosis generators.

However, few randomized controlled studies have investigated whether the use of a differential diagnosis (DDx) generator reduces diagnostic error, and evidence proving the usefulness of these tools in clinical practice is lacking. Furthermore, while an exhaustive list of possible diagnoses may be helpful, some proposed diagnoses may be irrelevant and may distract from timely attention being paid to more likely possibilities. Additionally, forming an extensive DDx list during every patient encounter would significantly add to the physician’s workload and could contribute to physician burnout.

Selective use? We believe that DDx generators would be best used selectively as a safeguard for the clinician who becomes aware of an increased risk of diagnostic error in a particular patient. As previously discussed, errors involving cognitive processes are more often errors of improper reasoning rather than of insufficient knowledge.3 The DDx generator then serves as a way of double-checking to ensure that additional diagnoses are being considered. This can be especially helpful when facing patients who display difficult behaviors or when the clinician’s cognitive reserve is depleted by other factors.

DDx generators may also help the physician expand his or her differential diagnosis when a patient is failing to improve despite appropriate treatment of the working diagnosis.
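The selective approach proposed here amounts to a short checklist of triggers, which can be sketched in Python as follows. This is a minimal illustration under stated assumptions: `Encounter` and `reasons_to_consult_ddx_generator` are hypothetical names, the three flags simply restate the situations named in the preceding paragraphs, and no real DDx generator or its interface is modeled.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    # Assumption: each flag is a self-assessment made by the clinician.
    difficult_behaviors: bool = False  # demeaning, aggressive, or demanding style
    clinician_depleted: bool = False   # cognitive reserve reduced, e.g., by fatigue
    failing_to_improve: bool = False   # no response to treatment of working diagnosis


def reasons_to_consult_ddx_generator(encounter):
    """Return the reasons, if any, to double-check the differential."""
    reasons = []
    if encounter.difficult_behaviors:
        reasons.append("patient displays difficult behaviors")
    if encounter.clinician_depleted:
        reasons.append("clinician's cognitive reserve is depleted")
    if encounter.failing_to_improve:
        reasons.append("patient is failing to improve on current treatment")
    return reasons


routine = Encounter()
flagged = Encounter(difficult_behaviors=True, failing_to_improve=True)
print(reasons_to_consult_ddx_generator(routine))   # empty list: no trigger
print(reasons_to_consult_ddx_generator(flagged))
```

An empty list means the encounter proceeds as usual, which keeps the added workload confined to visits in which the clinician has already recognized an elevated risk of error.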

Another option worth studying? Future studies could also investigate whether discussing a case with another clinician is an effective way to reduce cognitive biases and diagnostic errors.

Looking forward

More research will hopefully lead to corrective solutions. But it is also likely that solutions will require additional time and resources on the part of already overburdened providers. Thus, new challenges will arise in applying remedies to the current model of health care management and reimbursement.

Despite clinically useful advances in technology and science, family physicians are left with the unsettling conclusion that the most common source of error may also be the most difficult to change: physicians themselves. Fortunately, history has shown that the field of medicine can overcome even the most ingrained and harmful tendencies of the human mind, including prejudice and superstition.16,17 This next challenge will be no exception.

CORRESPONDENCE

Thomas Yuen, MD, Crozer Keystone Family Medicine Residency, 1260 East Woodland Avenue, Suite 200, Philadelphia, PA 19064; [email protected].

1. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775-780.

2. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493-1499.

3. Singh H, Giardina TD, Meyer AN, et al. Types and origins of diagnostic errors in primary care settings. JAMA Intern Med. 2013;173:418-425.

4. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185:1124-1131.

5. Croskerry P. A universal model of diagnostic reasoning. Acad Med. 2009;84:1022-1028.

6. Saposnik G, Redelmeier D, Ruff CC, et al. Cognitive biases associated with medical decisions: a systematic review. BMC Med Inform Decis Mak. 2016;16:138.

7. Gigerenzer G, Gaissmaier W. Heuristic decision making. Annu Rev Psychol. 2011;62:451-482.

8. Wellbery C. Flaws in clinical reasoning: a common cause of diagnostic error. Am Fam Physician. 2011;84:1042-1048.

9. Norman GR, Monteiro SD, Sherbino J, et al. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92:23-30.

10. Lockley SW, Cronin JW, Evans EE, et al. Effect of reducing interns’ weekly work hours on sleep and attentional failures. N Engl J Med. 2004;351:1829-1837.

11. Schmidt HG, Van Gog T, Schuit SC, et al. Do patients’ disruptive behaviours influence the accuracy of a doctor’s diagnosis? A randomised experiment. BMJ Qual Saf. 2017;26:19-23.

12. Riskin A, Erez A, Foulk TA, et al. Rudeness and medical team performance. Pediatrics. 2017;139:e20162305.

13. Graber M, Gordon R, Franklin N. Reducing diagnostic errors in medicine: what’s the goal? Acad Med. 2002;77:981-992.

14. Mamede S, Van Gog T, Sampaio AM, et al. How can students’ diagnostic competence benefit most from practice with clinical cases? The effects of structured reflection on future diagnosis of the same and novel diseases. Acad Med. 2014;89:121-127.

15. Bond WF, Schwartz LM, Weaver KR, et al. Differential diagnosis generators: an evaluation of currently available computer programs. J Gen Intern Med. 2012;27:213-219.

16. Porter R. The Greatest Benefit to Mankind: A Medical History of Humanity. New York, NY: W.W. Norton and Company, Inc.;1999.

17. Lazarus BA. The practice of medicine and prejudice in a New England town: the founding of Mount Sinai Hospital, Hartford, Connecticut. J Am Ethn Hist. 1991;10:21-41.

CASE A patient with a history of drug-seeking behavior asks to be seen by you for lower back pain. Your impression upon entering the examination room is that the patient appears to be in minimal pain. A review of the patient’s chart leads you to suspect that the patient’s past behavior pattern is the reason for the visit. You find yourself downplaying his reports of weight loss, changed bowel habits, and lower extremity weakness—despite the fact that these complaints might have led you to consider more concerning causes of back pain in a different patient.

This situation is not uncommon. At one time or another, it’s likely that we have all placed an undue emphasis on a patient’s social background to reinforce a pre-existing opinion of the likely diagnosis. Doing so is an example of both anchoring and confirmation biases—just 2 of the many biases known to influence critical thinking in clinical practice (and which we’ll describe in a bit).

Reconsidering the diagnostic process. Previous attempts to address the issue of incorrect diagnosis and medical error have focused on systems-based approaches such as adopting electronic medical records to avert prescribing errors or eliminating confusing abbreviations in documentation.

Graber et al reviewed 100 errors involving internists and found that 46% of the errors resulted from a combination of systems-based and cognitive reasoning factors.2 More surprisingly, 28% of errors were attributable to reasoning failures alone.2 Singh et al showed that in one primary care network, most errors occurred during the patient-doctor encounter, with 56% involving errors in history taking and 47% involving oversights in the physical examination.3 Furthermore, most of the errors occurred in the context of common conditions such as pneumonia and congestive heart failure—rather than esoteric diseases—implying that the failures were due to errors in the diagnostic process rather than from a lack of knowledge.3

An understanding of the diagnostic process and the etiology of diagnostic error is of utmost importance in primary care. Family physicians who, on a daily basis, see a high volume of patients with predominantly low-acuity conditions, must be vigilant for the rare life-threatening condition that may mimic a more benign disease. It is in this setting that cognitive errors may abound, leading to both patient harm and emotional stress in physicians.3

This article reviews the current understanding of the cognitive pathways involved in diagnostic decision making, explains the factors that contribute to diagnostic errors, and summarizes the current research aimed at preventing these errors.

Continue to: The diagnostic process, as currently understood

The diagnostic process, as currently understood

Much of what is understood about the cognitive processes involved in diagnostic reasoning is built on research done in the field of behavioral science—specifically, the foundational work by psychologists Amos Tversky and Daniel Kahneman in the 1970s.4 Only relatively recently has the medical field begun to apply the findings of this research in its attempt to understand how clinicians diagnose.1 This work led to the description of 2 main cognitive pathways described by Croskerry and others.5

Type 1 processing, also referred to as the “intuitive” approach, uses a rapid, largely subconscious pattern-recognition method. Much in the same way one recognizes a familiar face, the clinician using a type 1 process rapidly comes to a conclusion by seeing a recognizable pattern among the patient’s signs and symptoms. For example, crushing chest pain radiating to the left arm instantly brings to mind a myocardial infarction without the clinician methodically formulating a differential diagnosis.4,5

Type 2 processing is an “analytic” approach in which the provider considers the salient characteristics of the case, generates a list of hypotheses, and proceeds to systematically test them and come to a more definitive conclusion.5 For example, an intern encountering a patient with a painfully swollen knee will consider the possibilities of septic arthritis, Lyme disease, and gout, and then carefully determine the likelihood of each disease based on the evidence available at the time.

How the processes work in practice. While these 2 pathways are well studied within behavioral circles and are even supported by neurobiologic evidence, most clinical encounters incorporate both methodologies in a parallel system known as the “dual-process” theory (FIGURE).4-6

For example, during an initial visit for back pain, a patient may begin by relaying that the discomfort began after lifting a heavy object. Immediately the clinician, using a type 1 process, will suspect a simple lumbar strain. However, upon further questioning, the patient reveals that the pain occurs at rest and wakes him from sleep; these characteristics are atypical for a simple strain. At this point, the clinician may switch to a type 2 analytic approach and generate a broad differential that includes infection and malignancy.

Continue to: Heuristics: Indispensable, yet susceptible to bias

Heuristics: Indispensable, yet susceptible to bias

Heuristics are cognitive shortcuts often operating subconsciously to solve problems more quickly and efficiently than if the problem were analyzed and solved deductively.7 The act of driving a car, for instance, is a complex everyday task wherein the use of heuristics is not just efficient but essential. Deliberately analyzing and consciously considering every action required in daily living prior to execution would be impractical and even dangerous.

Heuristics also have a role in the practice of medicine. When presented with a large volume of low-acuity patients, the primary care provider would find it impractical to formulate an extensive differential and test each diagnosis before devising a plan of action. Using heuristics during clinical decision-making, however, does make the clinician more vulnerable to biases, which are described in the text that follows.

Biases

Bias is the psychological tendency to make a decision based on incomplete information and subjective factors rather than empirical evidence.4

Anchoring. One of the best-known biases, described in both behavioral science and medical literature, is anchoring. With this bias, the clinician fixates on a particular aspect of the patient’s initial presentation, excluding other more relevant clinical facts.8

A busy clinician, for example, may be notified by a medical assistant that the patient in Room One is complaining about fatigue and seems very depressed. The clinician then unduly anchors his thought process to this initial label of a depressed patient and, without much deliberation, prescribes an antidepressant medication. Had the physician inquired about skin and hair changes (unusual in depression), the more probable diagnosis of hypothyroidism would have come to mind.

Continue to: Premature closure...

Premature closure is another well-known bias associated with diagnostic errors.2,6 This is the tendency to cease inquiry once a possible solution for a problem is found. As the name implies, premature closure leads to an incomplete investigation of the problem and perhaps to incorrect conclusions.

If police arrested a potential suspect in a crime and halted the investigation, it’s possible the true culprit might not be found. In medicine, a classic example would be a junior clinician presented with a case of rectal bleeding in a 75-year-old man who has experienced weight loss and a change in bowel movements. The clinician observes a small nonfriable external hemorrhoid, incorrectly attributes the patient’s symptoms to that finding, and does not pursue the more appropriate investigation for gastrointestinal malignancy.

Interconnected biases. Often diagnostic errors are the result of multiple interconnected biases. For example, a busy emergency department physician is told that an unconscious patient smells of alcohol, so he is “probably drunk and just needs to sleep it off” (anchoring bias). The physician then examines the patient, who is barely arousable and indeed has a heavy odor of alcohol. The physician, therefore, decides not to order a basic laboratory work-up (premature closure). Because of this, the physician misses the correct and life-threatening diagnosis of a mental status change due to alcoholic ketoacidosis.6

Numerous other biases have been identified and studied.4,8 While an in-depth examination of all biases is beyond the scope of this article, some of those most relevant to medical practice are listed and briefly defined in the TABLE.4,8

Multiple studies point to the central role biases play in diagnostic error. A systematic review by Saposnik et al found that physician cognitive biases were associated with diagnostic errors in 36.5% to 77% of case studies, and that 71% of the studies reviewed found an association between cognitive errors and therapeutic errors.6 In experimental studies, cognitive biases have also been shown to decrease accuracy in the interpretation of radiologic studies and electrocardiograms.9 In one case review, cognitive errors were identified in 74% of cases where an actual medical error had been committed.2

Continue to: The human component: When the patient is "difficult"

The human component: When the patient is “difficult”

Failures in reasoning are not solely responsible for diagnostic errors. One increasingly scrutinized cause of impaired clinical judgment is the physician-patient relationship, especially one involving a “difficult” patient. Additionally, the medical literature is beginning to highlight the strong correlation between clinician fatigue or burnout and diagnostic errors.10

Patient-specific factors clearly impact the likelihood of diagnostic error. One randomized controlled trial showed that patients with disruptive behaviors negatively influence the accuracy of clinicians’ diagnoses.11 In this study, family medicine residents made 42% more diagnostic errors when evaluating complex clinical presentations involving patients with negative interpersonal characteristics (demeaning, aggressive, or demanding communication styles). Even with simple clinical problems, difficult patient behaviors were associated with a 6% higher rate of error than when such behaviors were absent, although this finding did not reach statistical significance.11

Researchers have proposed the “resource depletion” theory as an explanation for this finding.11 A patient with difficult behaviors will require additional cognitive resources from the physician to manage those behaviors.11 This leaves less cognitive capacity for solving the diagnostic problem.11 Furthermore, Riskin et al demonstrated that pediatric intensive care teams committed increased rates of medical errors and experienced poorer team performance when exposed to simulated families displaying rude behavior.12 Clearly, the power of the patient-physician relationship cannot be overstated when discussing diagnostic error.

Continue to: Strategies for reducing errors in the diagnostic process

Strategies for reducing errors in the diagnostic process

Although the mental pathways involved in diagnostic reasoning have become better elucidated, there is still considerable controversy and uncertainty surrounding effective ways to counter errors. In their review of the literature, Norman et al concluded that diagnostic errors are multifactorial and that strategies that solely educate novice clinicians about biases are unlikely to lead to significant gains because of “limited transfer.”9 That is, in simply teaching the theory of cognitive errors before trainees have had time to accumulate real-world experience, they do not learn how to apply corrective solutions.

Graber et al argue that mental shortcuts are often a beneficial behavior, and it would be unrealistic and perhaps even detrimental to eliminate them completely from clinical judgment.13 Despite the controversy, several corrective methods have been proposed and have shown promise. Two such methods are medical education on cognitive error and the use of differential diagnosis generators.2

Medical education on cognitive error. If heuristics and biases are acquired subconscious patterns of thinking, then it would be logical to assume that the most effective way to prevent their intrusion into the clinical decision-making process would be to intervene when the art of diagnosis is taught. Graber et al reference several small studies that demonstrated a small improvement in diagnostic accuracy when learners were educated about cognitive biases and clinical judgment.13

Additionally, with medical students, Mamede et al describe how structured reflection during case-based learning enhanced diagnostic accuracy.14 However, none of these studies have proven that increased awareness of cognitive biases results in fewer delayed or missed diagnoses in clinical practice. Clearly, further research is needed to determine whether the skills gained in the classroom would be transferable to clinical practice and result in lower rates of delayed or missed diagnoses. Future studies could also investigate if these findings are replicable when applied to more experienced clinicians rather than medical students and residents.

Differential diagnosis generators.

However, few randomized controlled studies have investigated whether the use of a differential diagnosis (DDx) generator reduces diagnostic error, and evidence is lacking to prove the usefulness of these tools in clinical practice. Furthermore, while an exhaustive list of possible diagnoses may be helpful, some proposed diagnoses may be irrelevant and may distract from timely attention to more likely possibilities. Additionally, forming an extensive DDx list during every patient encounter would add significantly to the physician’s workload and could contribute to physician burnout.

Selective use? We believe that DDx generators would be best used selectively as a safeguard for the clinician who becomes aware of an increased risk of diagnostic error in a particular patient. As previously discussed, errors involving cognitive processes are more often errors of improper reasoning than of insufficient knowledge.3 The DDx generator then serves as a way of double-checking to ensure that additional diagnoses are being considered. This can be especially helpful when facing patients who display difficult behaviors or when the clinician’s cognitive reserve is depleted by other factors.

DDx generators may also help the physician expand his or her differential diagnosis when a patient is failing to improve despite appropriate treatment of the working diagnosis.

Another option worth studying? Future studies could also investigate whether discussing a case with another clinician is an effective way to reduce cognitive biases and diagnostic errors.

Looking forward

More research will hopefully lead to corrective solutions. But it is also likely that solutions will require additional time and resources on the part of already overburdened providers. Thus, new challenges will arise in applying remedies to the current model of health care management and reimbursement.

Despite clinically useful advances in technology and science, family physicians are left with the unsettling conclusion that the most common source of error may also be the most difficult to change: physicians themselves. Fortunately, history has shown that the field of medicine can overcome even the most ingrained and harmful tendencies of the human mind, including prejudice and superstition.16,17 This next challenge will be no exception.

CORRESPONDENCE

Thomas Yuen, MD, Crozer Keystone Family Medicine Residency, 1260 East Woodland Avenue, Suite 200, Philadelphia, PA 19064; [email protected].

1. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775-780.

2. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493-1499.

3. Singh H, Giardina TD, Meyer AN, et al. Types and origins of diagnostic errors in primary care settings. JAMA Intern Med. 2013;173:418-425.

4. Tversky A, Kahneman D. Judgment under uncertainty: heuristics and biases. Science. 1974;185:1124-1131.

5. Croskerry P. A universal model of diagnostic reasoning. Acad Med. 2009;84:1022-1028.

6. Saposnik G, Redelmeier D, Ruff CC, et al. Cognitive biases associated with medical decisions: a systematic review. BMC Med Inform Decis Mak. 2016;16:138.

7. Gigerenzer G, Gaissmaier W. Heuristic decision making. Annu Rev Psychol. 2011;62:451-482.

8. Wellbery C. Flaws in clinical reasoning: a common cause of diagnostic error. Am Fam Physician. 2011;84:1042-1048.

9. Norman GR, Monteiro SD, Sherbino J, et al. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92:23-30.

10. Lockley SW, Cronin JW, Evans EE, et al. Effect of reducing interns’ weekly work hours on sleep and attentional failures. N Engl J Med. 2004;351:1829-1837.

11. Schmidt HG, Van Gog T, Schuit SC, et al. Do patients’ disruptive behaviours influence the accuracy of a doctor’s diagnosis? A randomised experiment. BMJ Qual Saf. 2017;26:19-23.

12. Riskin A, Erez A, Foulk TA, et al. Rudeness and medical team performance. Pediatrics. 2017;139:e20162305.

13. Graber M, Gordon R, Franklin N. Reducing diagnostic errors in medicine: what’s the goal? Acad Med. 2002;77:981-992.

14. Mamede S, Van Gog T, Sampaio AM, et al. How can students’ diagnostic competence benefit most from practice with clinical cases? The effects of structured reflection on future diagnosis of the same and novel diseases. Acad Med. 2014;89:121-127.

15. Bond WF, Schwartz LM, Weaver KR, et al. Differential diagnosis generators: an evaluation of currently available computer programs. J Gen Intern Med. 2012;27:213-219.

16. Porter R. The Greatest Benefit to Mankind: A Medical History of Humanity. New York, NY: W.W. Norton and Company, Inc.;1999.

17. Lazarus BA. The practice of medicine and prejudice in a New England town: the founding of Mount Sinai Hospital, Hartford, Connecticut. J Am Ethn Hist. 1991;10:21-41.

PRACTICE RECOMMENDATIONS

› Acquire a basic understanding of key cognitive biases to better appreciate how they could interfere with your diagnostic reasoning. C

› Consider using a differential diagnosis generator as a safeguard if you suspect an increased risk of diagnostic error in a particular patient. C

Strength of recommendation (SOR)

A Good-quality patient-oriented evidence

B Inconsistent or limited-quality patient-oriented evidence

C Consensus, usual practice, opinion, disease-oriented evidence, case series